Lucas Kanade Optical Flow Tracking Problem

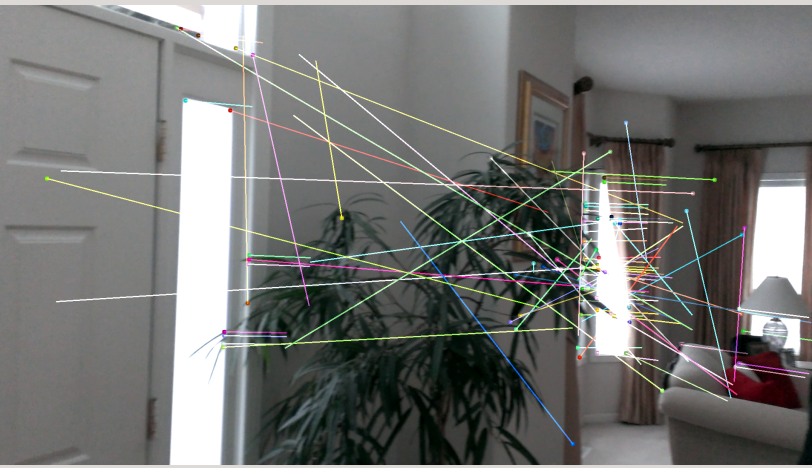

I have been trying to do some homography estimation between different frames in a video using Lucas Kanade Optical Flow Tracking (yes, I have already taken a look at the opencv sample). I have written up some code and tested it to see if I could start out by just tracking points in some videos I took. In every video, the points start out fine, and are tracked well for a few frames. Then, all of a sudden, the following happens:

This happens about 10 frames in, after the points have appeared to track just fine. I get similar results in every other video I have tested. Why is this happening, and how can I fix it?

Update #1

Here is a code snippet that may help in solving the issue (ignore the formatting errors that occurred while posting):

    def findNthFeatures(prevImg, prevPnts, nxtImg):
        nxtDescriptors = []
        prevGrey = None
        nxtGrey = None
        nxtPnts = prevPnts[:]

        prevGrey = cv2.cvtColor(prevImg, cv2.COLOR_BGR2GRAY)
        nxtGrey = cv2.cvtColor(nxtImg, cv2.COLOR_BGR2GRAY)

        lucasKanadeParams = dict( winSize = (19,19), maxLevel = 10, criteria = (cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 0.03))

        nxtPnts, status, err = cv2.calcOpticalFlowPyrLK(prevGrey, nxtGrey, prevPnts, None, **lucasKanadeParams)
        goodNew = nxtPnts[status==1]

        return goodNew

    def stitchRow(videoName):
        color = np.random.randint(0,255,(100,3))

        lastFrame = None
        currentFrame = None
        lastKeypoints = None
        currentKeypoints = None
        lastDescriptors = None
        currentDescriptors = None
        firstImage = True

        feature_params = dict( maxCorners = 100,
                               qualityLevel = 0.1,
                               minDistance = 8,
                               blockSize = 15)

        frameCount = 0
        Homographies = []

        cv2.namedWindow('display', cv2.WINDOW_NORMAL)
        cap = cv2.VideoCapture(videoName)
        flags, frame = cap.read()

        while flags:
            if firstImage:
                firstImage = False
                lastFrame = frame[:,:].copy()
                lastGray = cv2.cvtColor(lastFrame, cv2.COLOR_BGR2GRAY)
                lastKeypoints = cv2.goodFeaturesToTrack(lastGray, mask = None, **feature_params)
                flags, frame = cap.read()
                frameCount += 1
            else:
                mask = np.zeros_like(lastFrame)
                currentFrame = frame[:,:].copy()
                frameCount += 1

                #if(frameCount % 3 == 0):
                cv2.imshow('display', currentFrame)

                currentKeypoints = findNthFeatures(lastFrame, lastKeypoints, currentFrame)

                #for i,(new,old) in enumerate(zip(currentKeypoints, lastKeypoints)):
                #    a, b = new.ravel()
                #    c, d = old.ravel()
                #    mask = cv2.line(mask, (a,b), (c,d), color[i].tolist(), 2)
                #    frame = cv2.circle(frame, (a,b), 5, color[i].tolist(), -1)
                #img = cv2.add(frame,mask)

                cv2.imshow('display', img)
                cv2.waitKey(0)

                for i in range(0, len(lastKeypoints)):
                    lastKeypoints[i] = tuple(lastKeypoints[i])
                    print lastKeypoints[i]

                cv2.waitKey(0)

                homographyMatrix = cv2.findHomography(lastKeypoints, currentKeypoints)
                Homographies.append(homographyMatrix)

                lastFrame = currentFrame
                lastDescriptors = currentDescriptors
                lastKeypoints = currentKeypoints

                flags, frame = cap.read()

If the points being tracked (taken from the first frame) are no longer present in later frames, they cannot be tracked properly. When too few points are still being tracked, the algorithm in the OpenCV examples reinitializes itself. Can you post a code snippet so that we can see what the problem is?