EDIT 2: you can change the width/height ratio in `if (dist0 > dist1 * 4)` and the angle range in `if (fabs(angle) > 35 && fabs(angle) < 150)`.

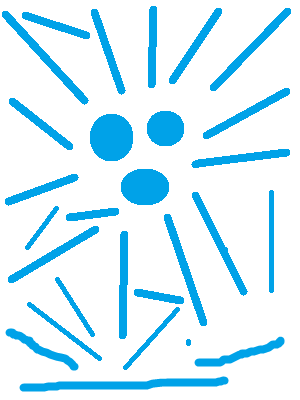

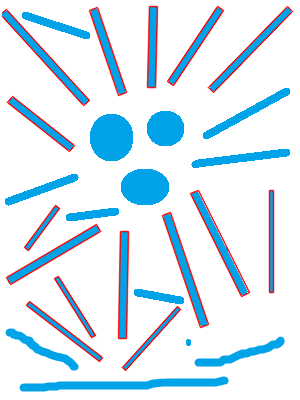

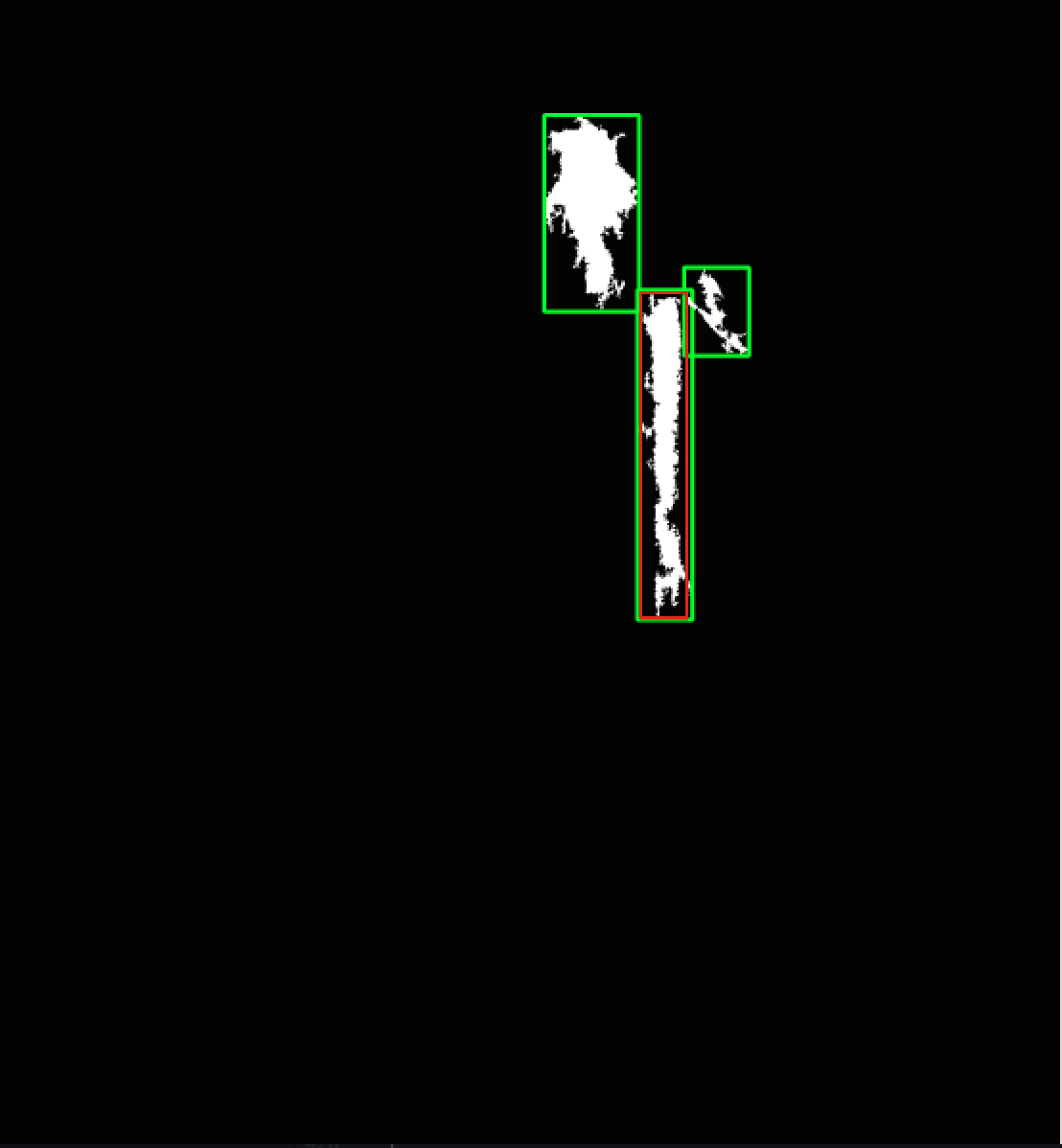

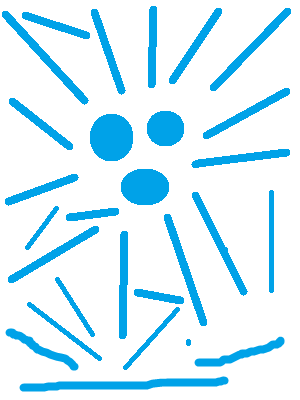

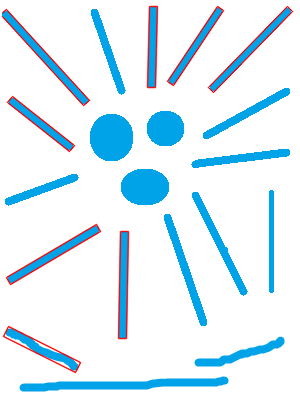

Input and output images:

    #include "opencv2/imgproc.hpp"
    #include "opencv2/imgcodecs.hpp"
    #include "opencv2/highgui.hpp"
    #include <cmath>
    #include <vector>

    using namespace cv;
    using namespace std;

    /*! Compute the Euclidean distance between two points
     *
     * @param a Point a
     * @param b Point b
     */
    static double distanceBtwPoints(const cv::Point2f &a, const cv::Point2f &b)
    {
        double xDiff = a.x - b.x;
        double yDiff = a.y - b.y;
        return std::sqrt((xDiff * xDiff) + (yDiff * yDiff));
    }

    int main( int argc, char** argv )
    {
        if (argc < 2)
            return -1; // usage: <program> <image file>

        Mat src, gray;
        src = imread(argv[1]);
        if (src.empty())
            return -1;

        cvtColor(src, gray, COLOR_BGR2GRAY);
        gray = gray < 200; // dark pixels become white (255) foreground

        vector<vector<Point> > contours;
        findContours(gray.clone(), contours, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

        RotatedRect _minAreaRect;
        for (size_t i = 0; i < contours.size(); ++i)
        {
            _minAreaRect = minAreaRect(contours[i]);
            Point2f pts[4];
            _minAreaRect.points(pts);

            // lengths of two adjacent sides of the rotated rectangle
            double dist0 = distanceBtwPoints(pts[0], pts[1]);
            double dist1 = distanceBtwPoints(pts[1], pts[2]);

            // angle (degrees) of the long side; stays 0 for shapes that are not elongated
            double angle = 0;
            if (dist0 > dist1 * 4)
                angle = atan2(pts[0].y - pts[1].y, pts[0].x - pts[1].x) * 180.0 / CV_PI;
            if (dist1 > dist0 * 4)
                angle = atan2(pts[1].y - pts[2].y, pts[1].x - pts[2].x) * 180.0 / CV_PI;

            // draw only elongated shapes whose long side lies in the angle window
            if (fabs(angle) > 35 && fabs(angle) < 150)
                for (int j = 0; j < 4; j++)
                    line(src, pts[j], pts[(j + 1) % 4], Scalar(0, 0, 255), 1, LINE_AA);
        }

        imshow("result", src);
        waitKey(0);
        return 0;
    }

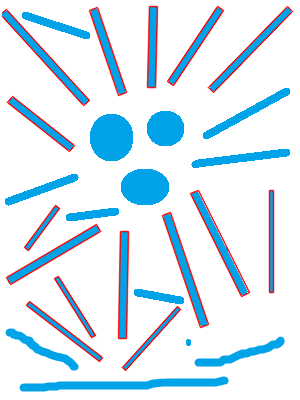
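The EDIT 2 test can be tried out without OpenCV. The sketch below is a hypothetical, dependency-free restatement: `Pt`, `dist`, `angleDeg` and `keepContour` are illustrative names (not OpenCV API), and the four corners are assumed to be ordered around the rectangle the way `RotatedRect::points()` fills them. It mirrors the logic above: measure two adjacent sides, take the angle of the long side with `atan2`, and keep the shape only if it is elongated enough and its angle falls inside the window.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 2-D point type standing in for cv::Point2f.
struct Pt {
    double x;
    double y;
};

// Euclidean distance between two points (same as distanceBtwPoints above).
double dist(const Pt& a, const Pt& b) {
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Angle in degrees, in (-180, 180], of the vector pointing from b to a.
double angleDeg(const Pt& a, const Pt& b) {
    const double kPi = std::acos(-1.0);
    return std::atan2(a.y - b.y, a.x - b.x) * 180.0 / kPi;
}

// True when the rectangle with corners p[0..3] is more than `ratio` times
// longer than wide AND |angle of its long side| lies strictly inside
// (minAngle, maxAngle). Mirrors the EDIT 2 condition.
bool keepContour(const Pt p[4], double ratio, double minAngle, double maxAngle) {
    assert(ratio >= 1.0 && "ratio is a long-side / short-side factor");
    const double dist0 = dist(p[0], p[1]);
    const double dist1 = dist(p[1], p[2]);
    double angle = 0.0;  // stays 0, and is rejected, if the shape is not elongated
    if (dist0 > dist1 * ratio)
        angle = angleDeg(p[0], p[1]);
    else if (dist1 > dist0 * ratio)
        angle = angleDeg(p[1], p[2]);
    const double a = std::fabs(angle);
    return a > minAngle && a < maxAngle;
}
```

With `ratio = 4`, `minAngle = 35` and `maxAngle = 150` this reproduces the thresholds above. Because the corner order decides whether `atan2` reports a side as θ or θ ± 180°, the same line can show up as, say, 40° or 140°; a window symmetric around 90° (such as 35 to 145) would treat both cases alike.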

EDIT 1:

You can improve the code below by changing this condition:

    if (_minAreaRect.angle < -30 && (dist0 > dist1 * 4 || dist1 > dist0 * 4))

Here you can change the width/height ratio and the angle threshold of the `RotatedRect` (tune them with care).

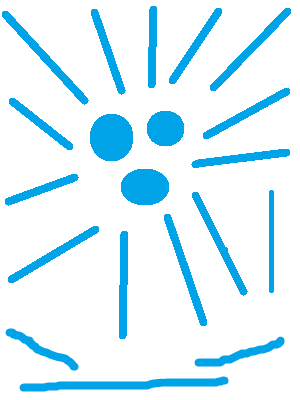

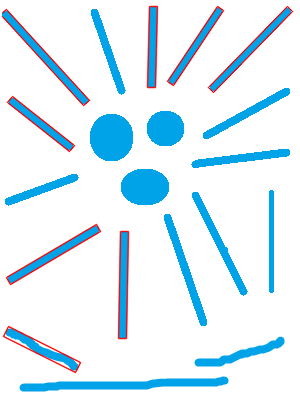

Input and output images:

    #include "opencv2/imgproc.hpp"
    #include "opencv2/imgcodecs.hpp"
    #include "opencv2/highgui.hpp"
    #include <cmath>
    #include <vector>

    using namespace cv;
    using namespace std;

    /*! Compute the Euclidean distance between two points
     *
     * @param a Point a
     * @param b Point b
     */
    static double distanceBtwPoints(const cv::Point2f &a, const cv::Point2f &b)
    {
        double xDiff = a.x - b.x;
        double yDiff = a.y - b.y;
        return std::sqrt((xDiff * xDiff) + (yDiff * yDiff));
    }

    int main( int argc, char** argv )
    {
        if (argc < 2)
            return -1; // usage: <program> <image file>

        Mat src, gray;
        src = imread(argv[1]);
        if (src.empty())
            return -1;

        cvtColor(src, gray, COLOR_BGR2GRAY);
        gray = gray < 200; // dark pixels become white (255) foreground

        vector<vector<Point> > contours;
        findContours(gray.clone(), contours, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);

        RotatedRect _minAreaRect;
        for (size_t i = 0; i < contours.size(); ++i)
        {
            _minAreaRect = minAreaRect(contours[i]);
            Point2f pts[4];
            _minAreaRect.points(pts);

            // lengths of two adjacent sides of the rotated rectangle
            double dist0 = distanceBtwPoints(pts[0], pts[1]);
            double dist1 = distanceBtwPoints(pts[1], pts[2]);

            // keep tilted, elongated rectangles; the -30 threshold assumes the
            // older convention where minAreaRect reports angles in [-90, 0)
            if (_minAreaRect.angle < -30 && (dist0 > dist1 * 4 || dist1 > dist0 * 4))
                for (int j = 0; j < 4; j++)
                    line(src, pts[j], pts[(j + 1) % 4], Scalar(0, 0, 255), 1, LINE_AA);
        }

        imshow("result", src);
        waitKey(0);
        return 0;
    }

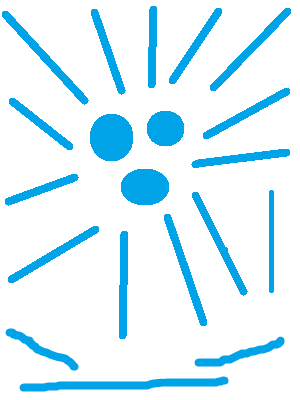
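The ratio part of the EDIT 1 condition can be written as one comparison: for a ratio of at least 1, "one side is more than `ratio` times the other" is the same as "the longer side is more than `ratio` times the shorter". The sketch below uses hypothetical helper names (`isElongated`, `keepRotatedRect`) that are not OpenCV API. Be aware that the value of `RotatedRect::angle` returned by `minAreaRect` depends on the OpenCV version (its range changed in the 4.5 series), so check what your build reports before relying on a fixed threshold such as -30; the corner-based angle of EDIT 2 does not depend on it.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: true when one side of a rectangle is more than `ratio`
// times the other. For ratio >= 1 this equals the EDIT 1 test
//   (dist0 > dist1 * ratio || dist1 > dist0 * ratio)
bool isElongated(double dist0, double dist1, double ratio) {
    assert(dist0 >= 0.0 && dist1 >= 0.0 && "side lengths are non-negative");
    assert(ratio >= 1.0 && "ratio is a long-side / short-side factor");
    const double longSide = std::max(dist0, dist1);
    const double shortSide = std::min(dist0, dist1);
    return longSide > shortSide * ratio;
}

// Hypothetical combination with the EDIT 1 angle threshold. `rectAngle` is
// whatever RotatedRect::angle your OpenCV version reports.
bool keepRotatedRect(double rectAngle, double dist0, double dist1,
                     double angleThreshold, double ratio) {
    return rectAngle < angleThreshold && isElongated(dist0, dist1, ratio);
}
```

Calling `keepRotatedRect(_minAreaRect.angle, dist0, dist1, -30, 4)` would express the same test as the `if` above.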

You can filter contours by the height and width of their bounding rectangles,

for example with `if (minRect.height > minRect.width * 4)`, as shown in the code below:

    #include "opencv2/imgproc.hpp"
    #include "opencv2/highgui.hpp"

    using namespace cv;
    using namespace std;

    int main( int argc, char** argv )
    {
        Mat src,gray;
        src = imread(argv ...

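The snippet above is cut off before it shows how `minRect` is obtained. Assuming it is an upright bounding rectangle of each contour, the idea can be sketched without OpenCV as below; `IPoint`, `Box`, `boundingBox` and `isTall` are hypothetical stand-ins (not OpenCV types), and width/height here count pixels inclusively (max - min + 1).

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-ins for cv::Point and cv::Rect.
struct IPoint { int x; int y; };
struct Box { int x; int y; int width; int height; };

// Upright bounding box of a non-empty point set (e.g. one contour).
// Width and height count pixels inclusively: max - min + 1.
Box boundingBox(const std::vector<IPoint>& pts) {
    assert(!pts.empty() && "a contour needs at least one point");
    int minX = pts[0].x, maxX = pts[0].x;
    int minY = pts[0].y, maxY = pts[0].y;
    for (const IPoint& p : pts) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    return Box{minX, minY, maxX - minX + 1, maxY - minY + 1};
}

// Keep boxes that are more than `ratio` times taller than wide; with
// ratio = 4 this is the `minRect.height > minRect.width * 4` test.
bool isTall(const Box& b, int ratio) {
    return b.height > b.width * ratio;
}
```

An upright box only reports tall shapes that are close to vertical; for tilted lines, the rotated-rectangle versions above are the better fit.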

Please share your code and a sample image.

Then, from the bounding boxes you are already computing, keep only the blobs you want by filtering on the

height and width of each bounding box.
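A sketch of that size filter, assuming each blob already has a bounding box: a box is kept when both its width and its height fall inside a chosen window. `Box`, `SizeLimits` and `filterBySize` are hypothetical names and the limits are placeholders to tune per image; with OpenCV the boxes would typically come from `cv::boundingRect` on each contour.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Rect (only the size is used here).
struct Box { int x; int y; int width; int height; };

// Hypothetical size window: inclusive [min, max] limits for each dimension.
struct SizeLimits {
    int minWidth, maxWidth;
    int minHeight, maxHeight;
};

// Returns the indices of the boxes whose width and height both fall inside
// the window, so the matching contours (contours[i]) can be picked afterwards.
std::vector<std::size_t> filterBySize(const std::vector<Box>& boxes,
                                      const SizeLimits& lim) {
    assert(lim.minWidth <= lim.maxWidth && lim.minHeight <= lim.maxHeight);
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        const Box& b = boxes[i];
        const bool widthOk = b.width >= lim.minWidth && b.width <= lim.maxWidth;
        const bool heightOk = b.height >= lim.minHeight && b.height <= lim.maxHeight;
        if (widthOk && heightOk)
            kept.push_back(i);
    }
    return kept;
}
```

Returning indices rather than copies keeps the boxes aligned with the original `contours` vector, which is convenient when the kept contours are drawn afterwards.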