solvePnP returning incorrect values

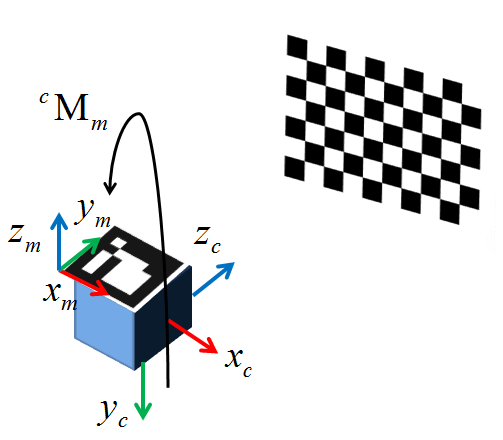

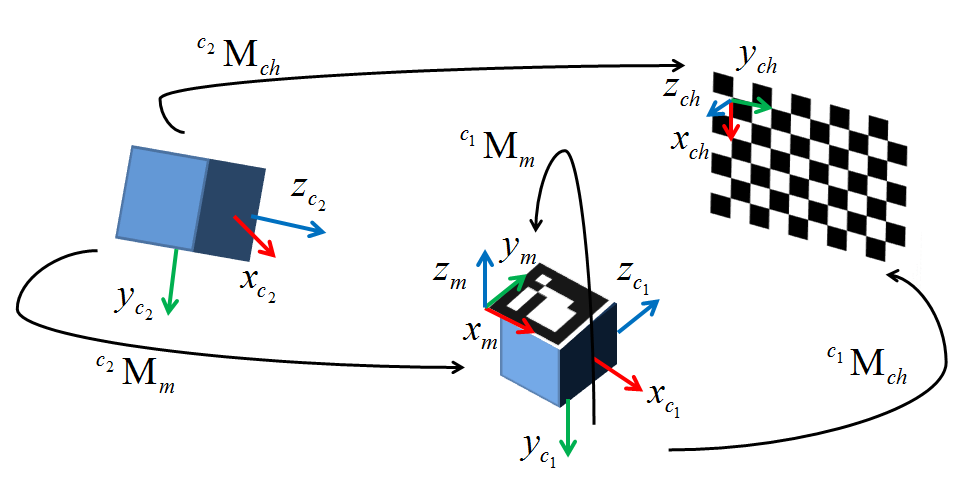

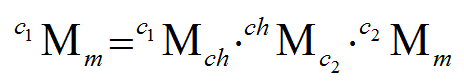

I'm currently trying to implement an alternate method to webcam-based AR using an external tracking system. I have everything in my environment configured save for the extrinsic calibration. I decided to use cv::solvePnP() as it supposedly does pretty much exactly I want, but after two weeks I am pulling my hair out trying to get it to work. A diagram below shows my configuration. c1 is my camera, c2 is the optical tracker I'm using, M is the tracked marker attached to the camera, and ch is the checkerboard.

As it stands I pass in my image pixel coordinates acquired with cv::findChessboardCorners(). The world points are acquired with reference to the tracked marker M affixed to the camera c1 (The extrinsic is thus the transform from this marker's frame to the camera origin). I have tested this with data sets up to 50 points to mitigate the possibility of local minima, but for now I'm testing with only four 2D/3D point pairs. The resulting extrinsic I get from the rvec and tvec returned from cv::solvePnP() is massively off in terms of both translation and rotation relative to both a ground truth extrinsic I manually created as well as a basic visual analysis (The translation implies a 1100mm distance while the camera is at most 10mm away).

Initially I thought the issue was that I had some ambiguities in how my board pose was determined, but now I'm fairly certain that's not the case. The math seems pretty straightforward and after all my work on setting the system up, getting caught up on what is essentially a one-liner is a huge frustration. I'm honestly running out of options, so if anyone can help I would be hugely in your debt. My test code is posted below and is the same as my implementation minus some rendering calls. The ground truth extrinsic I have that works with my program is as follows (Basically a pure rotation around one axis and a small translation):

1 0 0 29

0 .77 -.64 32.06

0 .64 .77 -39.86

0 0 0 1

Thanks!

#include <opencv2\opencv.hpp>

#include <opencv2\highgui\highgui.hpp>

int main()

{

int imageSize = 4;

int markupsSize = 4;

std::vector<cv::Point2d> imagePoints;

std::vector<cv::Point3d> markupsPoints;

double tempImage[3], tempMarkups[3]; // Temp variables or iterative data construction

cv::Mat CamMat = (cv::Mat_<double>(3,3) << (566.07469648019332), 0, 318.20416967732666,

0, (565.68051204299513), -254.95231997403764, 0, 0, 1);

cv::Mat DistMat = (cv::Mat_<double>(5,1) << -1.1310542849120900e-001, 4.5797249991542077e-001,

7.8356355644908070e-003, 3.7617039978623504e-003, -1.2734302146228518e+000);

cv::Mat rvec = cv::Mat::zeros(3,1, cv::DataType<double>::type);

cv::Mat tvec = cv::Mat::zeros(3,1,cv::DataType<double>::type);

cv::Mat R;

cv::Mat extrinsic = cv::Mat::eye(4, 4, CV_64F);

// Escape if markup lists aren't equally sized

if(imageSize != markupsSize)

{

//TODO: Replace with try, throw error code, and catch in qSlicerLocalizationModuleWidget

return 0;

}

// Four ...

Glad you solve your issue !