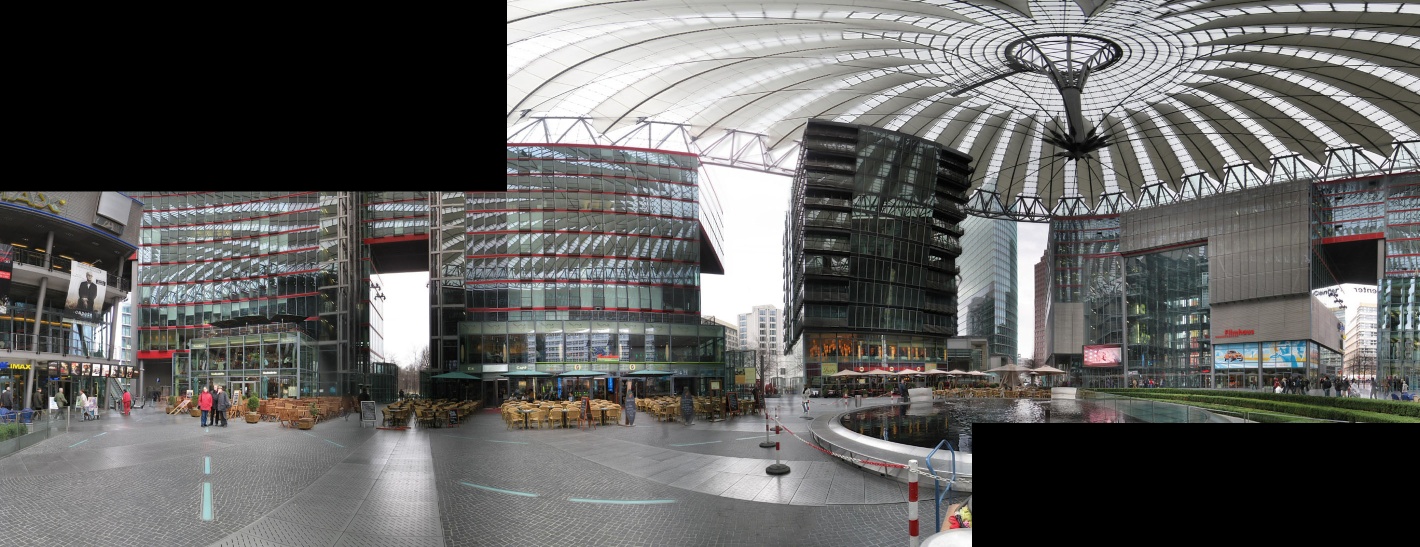

Panorama mosaic from Aerial Images

I'm writing a program that creates a panorama mosaic in real time from a video. The steps I've implemented so far are:

- Find matching features between the n-th frame and the (n-1)-th mosaic.
- Calculate the homography.
- Use the homography with warpPerspective to stitch the images.

I'm using this code to stitch the images together:

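    // Warp the first image with H; the output is limited to the size of vImg[0],
    // so anything that lands outside that rectangle is cut off
    warpPerspective(vImg[0], rImg, H, Size(vImg[0].cols, vImg[0].rows), INTER_NEAREST);

    // Destination image, same size as the warped image
    Mat final_img(Size(rImg.cols, rImg.rows), CV_8UC3);
    Mat roi1(final_img, Rect(0, 0, vImg[1].cols, vImg[1].rows));
    Mat roi2(final_img, Rect(0, 0, rImg.cols, rImg.rows));

    // Copy the warped image first, then the second image on top of it
    rImg.copyTo(roi2);
    vImg[1].copyTo(roi1);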

As you can see, from 0:33 in the video it starts to lose part of the mosaic. I'm pretty sure this depends on the ROI I've defined. My program should work like this: https://www.youtube.co/watch?v=59RJeL....

What can I do?

EDIT 2

Here's my code, I hope someone could help me to see the light at the end of the tunnel!!!

    // Create the final image and copy the first frame into the middle of it
    Mat final_img(Size(img.cols * 3, img.rows * 3), CV_8UC3);
    Mat f_roi(final_img, Rect(img.cols, img.rows, img.cols, img.rows));
    img.copyTo(f_roi);

    // Take only a part of the complete final image
    Rect current_frame_roi(img.cols, img.rows, final_img.cols - img.cols, final_img.rows - img.rows);

    while (true)
    {
        // Take the new frame
        cap >> img_loop;
        if (img_loop.empty()) break;

        // Take a part of the final image
        current_frame = final_img(current_frame_roi);

        // Convert to grayscale
        cvtColor(current_frame, gray_image1, CV_RGB2GRAY);
        cvtColor(img_loop, gray_image2, CV_RGB2GRAY);

        // First step: feature extraction with ORB
        // Note: for ORB the first constructor argument is the maximum number
        // of features to keep, not a Hessian threshold
        static int minHessian = 400;
        OrbFeatureDetector detector(minHessian);
        vector< KeyPoint > keypoints_object, keypoints_scene;
        detector.detect(gray_image1, keypoints_object);
        detector.detect(gray_image2, keypoints_scene);

        // Second step: descriptor extraction
        OrbDescriptorExtractor extractor;
        Mat descriptors_object, descriptors_scene;
        extractor.compute(gray_image1, keypoints_object, descriptors_object);
        extractor.compute(gray_image2, keypoints_scene, descriptors_scene);

        // Third step: match with BFMatcher
        BFMatcher matcher(NORM_HAMMING, false);
        vector< DMatch > matches;
        matcher.match(descriptors_object, descriptors_scene, matches);

        double max_dist = 0; double min_dist = 100;

        // Distance between keypoints
        // With ORB it works better without it
        /*for (int i = 0; i < descriptors_object.rows; i++)
        {
            double dist = matches[i].distance;
            if (dist < min_dist) min_dist = dist;
            if (dist > max_dist) max_dist = dist;
        }
        */

        // Take just the good points
        // With ORB it works better without it
        vector< DMatch > good_matches;
        good_matches = matches;
        /*for (int i = 0; i < descriptors_object.rows; i++)
        {
            if (matches[i].distance <= 3 * min_dist)
            {
                good_matches.push_back(matches[i]);
            }
        }*/

        vector< Point2f > obj;
        vector< Point2f > scene;

        // Take the keypoints
        for (size_t i = 0; i < good_matches.size(); i++)
        {
            obj.push_back(keypoints_object[good_matches[i].queryIdx].pt);
            scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt);
        }

        //static Mat mat_match;
        //drawMatches(img_loop, keypoints_object, current_frame, keypoints_scene,good_matches, mat_match, Scalar::all(-1), Scalar::all(-1),vector<char>(), 0);

        // Homography with RANSAC
        if (obj.size() >= 4)
        {
            Mat H = findHomography(obj, scene, CV_RANSAC, 5);

            // Take the x_offset and y_offset
            /* The offset matrix has the form
               | 1 0 x_offset |
               | 0 1 y_offset |
               | 0 0 1        |
            */
            offset.at<double>(0, 2) = H.at<double>(0, 2);
            offset.at ...

You could check this blog post on panorama image stitching:

Thanks Eduardo, but that blog was my starting point, so I modified the code you posted with the one posted by me! I really don't know how to solve this situation :S

I see the problem now. When you go right, you lose the left part. I don't know how exactly you could solve this.

Maybe you could try to always center the current image to avoid losing parts of the mosaic?
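A minimal sketch of that centering idea, written in plain C++ without OpenCV so the arithmetic is visible. It assumes H maps the new frame into mosaic coordinates and is stored as a row-major 3x3 array. `Mat3`, `Point2`, `Box`, `warpedBounds` and `centeringTranslation` are hypothetical names used only for illustration, not OpenCV functions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <limits>

using Mat3 = std::array<double, 9>;  // row-major 3x3 homography (assumed layout)

struct Point2 { double x, y; };
struct Box { double minX, minY, maxX, maxY; };

// Apply a homography to a point, including the perspective division.
// Assumes the third homogeneous coordinate is not zero.
Point2 project(const Mat3& H, const Point2& p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return { (H[0] * p.x + H[1] * p.y + H[2]) / w,
             (H[3] * p.x + H[4] * p.y + H[5]) / w };
}

// Bounding box of the four frame corners after warping with H.
Box warpedBounds(const Mat3& H, int width, int height) {
    assert(width > 0 && height > 0);
    const double w = static_cast<double>(width);
    const double h = static_cast<double>(height);
    const Point2 corners[4] = { {0.0, 0.0}, {w, 0.0}, {w, h}, {0.0, h} };
    const double inf = std::numeric_limits<double>::infinity();
    Box box{ inf, inf, -inf, -inf };
    for (const Point2& c : corners) {
        const Point2 p = project(H, c);
        box.minX = std::min(box.minX, p.x);
        box.minY = std::min(box.minY, p.y);
        box.maxX = std::max(box.maxX, p.x);
        box.maxY = std::max(box.maxY, p.y);
    }
    return box;
}

// Translation that moves the center of the warped frame to the canvas center.
Mat3 centeringTranslation(const Box& b, int canvasW, int canvasH) {
    const double tx = canvasW / 2.0 - (b.minX + b.maxX) / 2.0;
    const double ty = canvasH / 2.0 - (b.minY + b.maxY) / 2.0;
    const Mat3 t = { 1.0, 0.0, tx,
                     0.0, 1.0, ty,
                     0.0, 0.0, 1.0 };
    return t;
}
```

With OpenCV, one would presumably multiply this translation with H before calling warpPerspective, and shift the already stitched mosaic by the same translation, so that the newest frame always lands in the middle of the canvas. Content that is pushed past the canvas border is still lost, so this only helps if the canvas is large enough for the part of the mosaic you want to keep.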

For your Edit 3, I think the problem is that you try to find the homography between the panorama image and the current image. Repetitive patterns in the global panorama image could produce false matches and thus a wrong homography matrix. You could try to:

Also, you could check the quality of the matching. (I use SIFT in my tests; I found it more robust than ORB, but much more time-consuming, which matters if you have a real-time constraint.)
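One possible way to check the matching quality, as a rough sketch: measure how many matched points agree with the estimated homography. The code below is plain C++ (no OpenCV) and assumes H is a row-major 3x3 array mapping `src` points onto `dst` points. `inlierRatio` and `maxError` are hypothetical names, and any acceptance threshold (for example, rejecting a frame when the ratio is below 0.5) would have to be tuned on your own data.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Mat3 = std::array<double, 9>;  // row-major 3x3 homography (assumed layout)

struct Point2 { double x, y; };

// Fraction of point pairs whose reprojection error under H is at most
// maxError (in pixels). Returns 0 when there are no pairs.
double inlierRatio(const Mat3& H,
                   const std::vector<Point2>& src,
                   const std::vector<Point2>& dst,
                   double maxError) {
    assert(src.size() == dst.size());
    if (src.empty()) return 0.0;

    std::size_t inliers = 0;
    for (std::size_t i = 0; i < src.size(); ++i) {
        const double w = H[6] * src[i].x + H[7] * src[i].y + H[8];
        if (std::abs(w) < 1e-12) continue;  // point maps to infinity: count as outlier
        const double px = (H[0] * src[i].x + H[1] * src[i].y + H[2]) / w;
        const double py = (H[3] * src[i].x + H[4] * src[i].y + H[5]) / w;
        if (std::hypot(px - dst[i].x, py - dst[i].y) <= maxError) ++inliers;
    }
    return static_cast<double>(inliers) / static_cast<double>(src.size());
}
```

A frame with a low ratio could simply be skipped instead of being warped into the mosaic. In OpenCV, findHomography can also return an inlier mask through its optional output argument, which gives similar information without recomputing the errors.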

I've already tried with SIFT, but it fails too after a certain point. I think I'm going to calculate only the homography between two consecutive frames, and I'll let you know! Thanks again for all your support!

How can I proceed if I want to calculate the global homography? For now I'm trying to calculate the homography between two consecutive frames, but it doesn't seem to work at all :S One more question: is there a way, from the code you posted, to get the part of the panorama where you paste the last frame? That way I can use that part as the previous frame!

The global homography can be calculated by multiplying together the homographies between each pair of consecutive frames. You could try stitching the images from 00:00 to 00:30 (when it begins to fail), then from 00:30 to 01:00, etc., to see what happens.
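A small sketch of that chaining, in plain C++ so the order of multiplication is explicit. It assumes `pairwise[i]` maps frame i+1 into the coordinates of frame i, stored as a row-major 3x3 array. `Mat3`, `multiply`, `normalized` and `accumulateToFirstFrame` are hypothetical helper names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Mat3 = std::array<double, 9>;  // row-major 3x3 homography (assumed layout)

// Standard 3x3 matrix product a * b.
Mat3 multiply(const Mat3& a, const Mat3& b) {
    Mat3 c{};  // zero-initialized
    for (int r = 0; r < 3; ++r)
        for (int col = 0; col < 3; ++col)
            for (int k = 0; k < 3; ++k)
                c[r * 3 + col] += a[r * 3 + k] * b[k * 3 + col];
    return c;
}

// Rescale so that the bottom-right element is 1; this keeps the numbers
// from growing or shrinking over a long chain of products.
// Assumes that element is not (close to) zero.
Mat3 normalized(Mat3 h) {
    const double s = h[8];
    assert(std::abs(s) > 1e-12);
    for (double& v : h) v /= s;
    return h;
}

// Global homography mapping the last frame into the first frame:
// G = H_1 * H_2 * ... * H_n, where H_i maps frame i to frame i-1.
Mat3 accumulateToFirstFrame(const std::vector<Mat3>& pairwise) {
    Mat3 global = { 1.0, 0.0, 0.0,
                    0.0, 1.0, 0.0,
                    0.0, 0.0, 1.0 };
    for (const Mat3& h : pairwise)
        global = normalized(multiply(global, h));
    return global;
}
```

The order matters: the newest pairwise homography goes on the right, because it is applied to the point first. Small errors in each pairwise estimate are multiplied together as well, so some drift over a long sequence is to be expected with this approach.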

If the source video is not private, maybe you could post it via a private link on YouTube. I may give it a try if I have time.

Sorry, but I don't think I can upload the original video :S I'm trying to get the part of the stitched image where I paste the last frame and calculate the homography just between that part and the current frame. By the way, if I take 1 frame out of every X (I tried X = 10, 20, 30, 50) it works! How is that possible?

Hi @bjorn89, I am also working on UAV image mosaicking, similar to BoofCV as you mentioned, but I am also having problems while stitching the images. If you have solved the problem above, could you help me with your code?