Dense optical flow for stitching

Hi, I'm trying to stitch some pictures into a cylindrical panorama with C# and Emgu CV, a .NET wrapper for OpenCV. I want to use a direct technique, because I have already tried a feature-based technique (Harris corner detector + pyramidal Lucas-Kanade) with good results. I follow these steps for the alignment:

1) Remap every image into a new image using the cylindrical projection equations (as shown in "Image Alignment and Stitching: A Tutorial" by Richard Szeliski).

2) Estimate the optical flow with a dense algorithm (OpenCV functions: cvCalcOpticalFlowLK or cvCalcOpticalFlowHS).

3) Estimate the translation vector, either with my own RANSAC-based function or with the OpenCV function "findHomography".
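Step 1) can be written as an inverse map that tells, for every pixel of the cylindrical image, where to sample the original image. Szeliski's tutorial gives the forward mapping x' = s·atan(x/f), y' = s·y/sqrt(x² + f²), with x, y measured from the optical centre; inverting it with s = f gives x = f·tan(θ), y = f·h/cos(θ), where θ = x'/f and h = y'/f. The sketch below assumes the focal length f is known in pixels and that the optical centre is the image centre; `CylindricalWarp.BuildInverseMaps` is a hypothetical helper, not an Emgu CV function.

```csharp
using System;

// Hypothetical helper (not part of Emgu CV or OpenCV): builds inverse maps for a
// cylindrical warp, assuming s = f and the optical centre at the image centre.
public static class CylindricalWarp
{
    // For each destination pixel (u, v) returns the source coordinates
    // (mapX[v, u], mapY[v, u]) to sample from in the original image.
    public static (float[,] mapX, float[,] mapY) BuildInverseMaps(int width, int height, double f)
    {
        var mapX = new float[height, width];
        var mapY = new float[height, width];
        double xc = width / 2.0, yc = height / 2.0;

        for (int v = 0; v < height; v++)
        {
            for (int u = 0; u < width; u++)
            {
                double theta = (u - xc) / f; // angle around the cylinder axis
                double h = (v - yc) / f;     // height on the unit cylinder

                // Project the cylinder point (sin θ, h, cos θ) back onto the image plane z = 1.
                // Assumes |θ| stays well below π/2, i.e. width / (2f) is small.
                mapX[v, u] = (float)(f * Math.Tan(theta) + xc);
                mapY[v, u] = (float)(f * h / Math.Cos(theta) + yc);
            }
        }
        return (mapX, mapY);
    }
}
```

The two arrays could then be copied into single-channel float images and passed to OpenCV's remap (cvRemap in the C API used in the question), which samples the source image at the mapped coordinates. Pixels far from the centre sample farther out than their own position, which is what bends straight lines onto the cylinder.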

I'm testing with images taken from an image set:

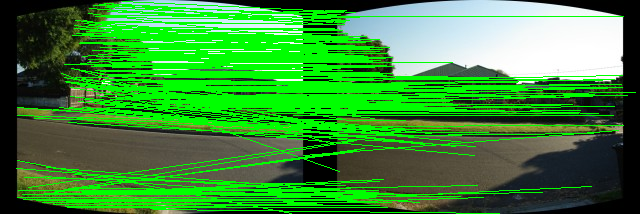

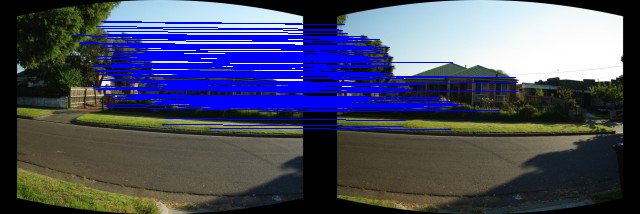

After step 1) I obtain this:

After step 3) I obtain this:

After estimating the optical flow, I convert the two returned maps (CvArr* velx, CvArr* vely), which describe the flow in the two directions, into two arrays of points, discarding flow vectors smaller than a threshold (e.g. < 0.1 pixels). Finally, I call findHomography on the point arrays to estimate the homography (translation). Here is my C# code that converts the maps into arrays:

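```csharp
// Assumed: velx, vely are the dense flow maps (Emgu CV Image<Gray, float>),
// sourcePlane and destPlane are PointF arrays preallocated large enough for
// every sampled pixel, and num starts at 0.
for (int j = 0; j < vely.Height; j += stepSize)
{
    for (int i = 0; i < velx.Width; i += stepSize)
    {
        /* There's no need to calculate for every single point:
           if there's not much change, just ignore it */
        if (Math.Abs(velx.Data[j, i, 0]) < minDisplacement_x && Math.Abs(vely.Data[j, i, 0]) < minDisplacement_y)
            continue;

        // Pixel (i, j) in the first image moves to (i + vx, j + vy) in the second
        sourcePlane[num].X = i;
        sourcePlane[num].Y = j;
        destPlane[num].X = i + velx.Data[j, i, 0];
        destPlane[num].Y = j + vely.Data[j, i, 0];
        num++;
    }
}

// Shrink the point arrays to the number of points actually found
System.Array.Resize(ref sourcePlane, num);
System.Array.Resize(ref destPlane, num);
```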

My problem is with the last step, 3): findHomography gives me bad results. For example, when the true translation is about 100 pixels along the horizontal direction, findHomography returns a translation of only 2 or 3 pixels. I think the problem is due to outliers. In fact, if I filter the point arrays before calling findHomography, discarding small translation vectors by setting minDisplacement_x or minDisplacement_y to a value larger than 0.1 (e.g. 50.0), the result is a little better, but not good enough. I know that feature-based techniques are more robust than direct techniques, but my results are very far from a good solution. Can someone help me? I don't want to use a feature-based technique (features, descriptors or blobs).

Thanks,
Luca
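After cylindrical remapping the two images should ideally differ only by a translation (2 degrees of freedom), so fitting a full homography (8 degrees of freedom) asks more of the noisy flow vectors than the problem needs. Step 3) mentions a RANSAC-based translation estimator; the author's function is not shown, so the following is only a minimal sketch of that idea, assuming matched point pairs like those produced by the loop above. `TranslationRansac.Estimate`, the inlier threshold and the iteration count are hypothetical choices.

```csharp
using System;

// Hypothetical helper (not an OpenCV/Emgu CV API): 1-point RANSAC for a pure
// 2-D translation between matched points, refined as the mean inlier displacement.
public static class TranslationRansac
{
    public static (double Dx, double Dy, int Inliers) Estimate(
        (double X, double Y)[] src, (double X, double Y)[] dst,
        double inlierThreshold, int iterations, int seed = 0)
    {
        if (src.Length == 0 || src.Length != dst.Length)
            throw new ArgumentException("src and dst must be non-empty and the same length");
        if (iterations < 1)
            throw new ArgumentOutOfRangeException(nameof(iterations));

        var rng = new Random(seed);
        double t2 = inlierThreshold * inlierThreshold;
        double bestDx = 0, bestDy = 0;
        int bestCount = -1;

        for (int it = 0; it < iterations; it++)
        {
            // A translation has 2 degrees of freedom, so one correspondence is a minimal sample.
            int k = rng.Next(src.Length);
            double dx = dst[k].X - src[k].X, dy = dst[k].Y - src[k].Y;

            // Count the pairs whose displacement agrees with this hypothesis.
            int count = 0;
            for (int i = 0; i < src.Length; i++)
            {
                double ex = dst[i].X - src[i].X - dx, ey = dst[i].Y - src[i].Y - dy;
                if (ex * ex + ey * ey <= t2) count++;
            }
            if (count > bestCount) { bestCount = count; bestDx = dx; bestDy = dy; }
        }

        // Refine with the mean displacement of the best hypothesis' inliers
        // (never empty: the sampled pair itself has zero residual).
        double sx = 0, sy = 0;
        int n = 0;
        for (int i = 0; i < src.Length; i++)
        {
            double mx = dst[i].X - src[i].X, my = dst[i].Y - src[i].Y;
            double ex = mx - bestDx, ey = my - bestDy;
            if (ex * ex + ey * ey <= t2) { sx += mx; sy += my; n++; }
        }
        return (sx / n, sy / n, n);
    }
}
```

This only helps when a reasonable share of the flow vectors is correct; if most of them are wrong, as happens when a small-displacement flow method is run on a 100-pixel shift, no robust estimator can recover the true translation.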

Have you checked whether the optical flow algorithms you are using actually give you correct matches? Your images have large textureless zones, and you are using optical flow methods that are intended mostly for small displacements. Also, the remapping done before the optical flow may cause bigger errors in the flow computation. You may want to use Brox's method (http://docs.opencv.org/modules/gpu/do...) for the optical flow, and maybe remove the large textureless zones. Good luck!
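One way to drop textureless zones before collecting flow vectors is a texture mask. Lucas-Kanade solves a 2×2 system built from the image gradients in a window (the structure tensor); in flat areas both eigenvalues are near zero, and along a straight edge the smaller one is (the aperture problem), so flow there is unreliable. A minimal sketch, assuming a grayscale image stored as a float[,] array; `TextureMask.Build`, the window radius r and the threshold are hypothetical and would need tuning.

```csharp
using System;

// Hypothetical helper: marks pixels whose local texture makes Lucas-Kanade-style
// flow well conditioned, using the smaller eigenvalue of the 2x2 structure
// tensor summed over a (2r+1)x(2r+1) window.
public static class TextureMask
{
    public static bool[,] Build(float[,] gray, int r, double minEigenThreshold)
    {
        int h = gray.GetLength(0), w = gray.GetLength(1);
        var ix = new double[h, w];
        var iy = new double[h, w];

        // Central-difference gradients; border pixels keep a zero gradient.
        for (int y = 1; y < h - 1; y++)
            for (int x = 1; x < w - 1; x++)
            {
                ix[y, x] = (gray[y, x + 1] - gray[y, x - 1]) * 0.5;
                iy[y, x] = (gray[y + 1, x] - gray[y - 1, x]) * 0.5;
            }

        // Pixels closer than r to the border stay false.
        var mask = new bool[h, w];
        for (int y = r; y < h - r; y++)
            for (int x = r; x < w - r; x++)
            {
                double a = 0, b = 0, c = 0; // structure tensor [[a, b], [b, c]]
                for (int dy = -r; dy <= r; dy++)
                    for (int dx = -r; dx <= r; dx++)
                    {
                        double gx = ix[y + dy, x + dx], gy = iy[y + dy, x + dx];
                        a += gx * gx; b += gx * gy; c += gy * gy;
                    }
                // Smaller eigenvalue of a symmetric 2x2 matrix.
                double lambdaMin = 0.5 * (a + c - Math.Sqrt((a - c) * (a - c) + 4 * b * b));
                mask[y, x] = lambdaMin > minEigenThreshold;
            }
        return mask;
    }
}
```

In the point-collection loop, pixels where the mask is false would simply be skipped. The threshold scales with the squared intensity range and the window area, so it has to be chosen per image set.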

Thank you, juanmanpr! I know that dense algorithms are very useful for small displacements, as you said, but I was hoping that by using a threshold (minDisplacement_x, minDisplacement_y) comparable to the real displacements I could obtain good results; unfortunately, that's not the case. I don't know anything about Brox's method, but I will try it! Why do you think the remapping in step 1) can cause big errors? The optical flow is computed between both images, and both are remapped beforehand.

It was just a guess: the remapping apparently adds more transformations that the flow has to resolve.