Your setup is essentially a stereo setup. Cameras and projectors are very similar and can be treated almost identically in many computer vision applications. In both systems, a pixel corresponds to a direction in the world. For a camera, all points that lie on a line through the camera center are seen at the same pixel (ignoring lens distortion). For a projector, all points that lie on such a line are illuminated when the corresponding pixel is set to a color.
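To make the pixel/direction idea concrete, here is a minimal sketch of the ideal pinhole model without distortion. The intrinsic matrix `K` and the helper names `pixel_to_ray` and `project` are made up for illustration; the same two functions describe a camera (ray seen by a pixel) and a projector (ray lit by a pixel).

```python
import numpy as np

# Hypothetical intrinsics (focal length and principal point in pixels).
K = np.array([[800.0,   0.0, 320.0],
              [  0.0, 800.0, 240.0],
              [  0.0,   0.0,   1.0]])

def pixel_to_ray(pixel, K):
    """Unit direction (in the device frame) that belongs to a pixel."""
    u, v = pixel
    d = np.linalg.inv(K) @ np.array([u, v, 1.0])
    return d / np.linalg.norm(d)

def project(point, K):
    """Pixel at which a 3D point (in the device frame) is seen or lit."""
    p = K @ np.asarray(point, dtype=float)
    return p[:2] / p[2]

ray = pixel_to_ray((400, 300), K)
# Every point along the ray maps back to the same pixel, whatever its depth.
for depth in (0.5, 2.0, 10.0):
    print(depth, project(ray * depth, K))
```

This is also why a single 2D device cannot give you depth on its own: you need a second device, or a known surface, to decide where along the ray the point lies.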

One approach to calibrate your system is to project a pattern onto a planar surface (e.g. a large piece of flat cardboard). This pattern can be detected with the camera so that you have corresponding pixels on both systems.

This information can be fed to the same algorithms that calibrate a stereo setup in which two cameras see the same marker points.

Maybe this gives you a rough direction. I wrote my master's thesis about a sandbox with a projector/Kinect setup, where I measured the positions with this approach. (It is a bit easier if you have a 3D camera rather than only a 2D one.)

A second approach (maybe similar to what secrestb meant in his answer):

Print a checkerboard pattern and fix it somewhere within the field of view of both the camera and the projector. With the calib3d functions it is easy to get its position relative to the camera. The corners on the board are now your 3D markers for calibrating the projector (both intrinsic and extrinsic calibration relative to the camera). As the projector cannot actually see the pattern, you have to help it a bit. Move your mouse on the projector image until it points to the first corner of the printed pattern (the mouse cursor is now visible on the printed checkerboard at the first corner). Save this pixel position and continue until you have marked several corners. Now you have pairs of 3D points (measured relative to your camera) and the corresponding pixels on your projector.

This is almost exactly the information you have when calibrating a camera with a marker. Therefore you can use the same calib3d functions that calibrate a camera to calibrate your projector. You have to repeat the procedure for several marker positions to get both the intrinsic and the extrinsic calibration. (If you already know the intrinsics of your projector, a single view with some points is enough to get the extrinsic calibration.)

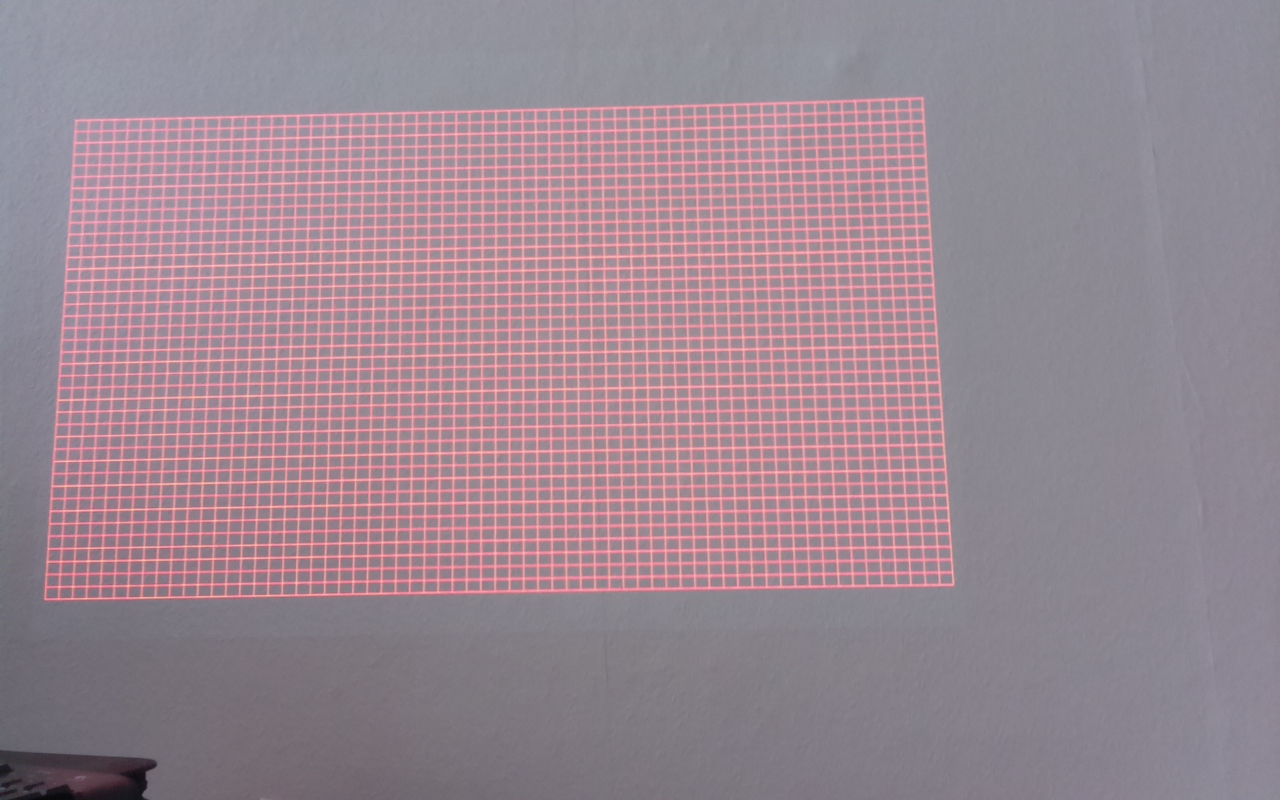

I updated my question and added 2 images. The first is a raster made with pygame and the second is a picture taken by the Raspberry Pi NoIR camera.

I was wondering whether it is possible to mask, crop and resize the camera picture and the raster using OpenCV. Since I know the raster is 1280x800, I just have to find a way to match the two. Is it possible to tilt and resize the picture so that I can get what I want this way? It might even use fewer CPU resources.