Best method for multiple particle tracking with noise and possible overlap?

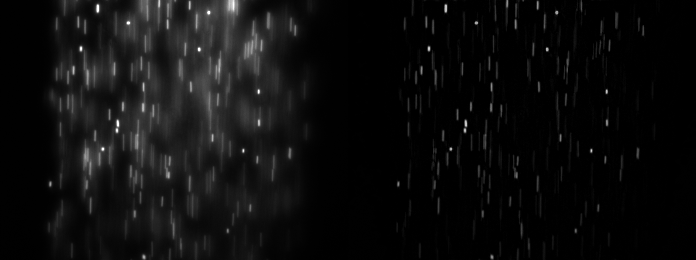

Hello, I am working on a school project where I want to track the number, direction, and velocity of particles moving across a flow chamber. I have a series of timestamped images, taken under fluorescent light, showing bright particles flowing across the field of view.

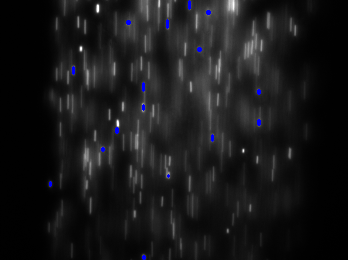

The particles I'm interested in tracking are the bright round dots (highlighted in green); I want to exclude the motion blur from other particles that were not in focus (highlighted in red).

Image Showing: Sample (green) vs Noise (red)
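One way to separate the two is by shape: an in-focus particle shows up as a small, roughly round bright spot, while motion blur smears into an elongated streak. The sketch below is a minimal illustration, assuming the frame has already been binarised (for example with a top-hat filter followed by a threshold). It labels 4-connected components in plain Python and rejects any whose principal-axis ratio exceeds `max_aspect`. The function name and every threshold are hypothetical and not tuned for this data set; in OpenCV, `cv2.connectedComponentsWithStats` or `cv2.findContours` with `cv2.moments` would do the labelling much faster.

```python
import math
from collections import deque


def find_round_blobs(mask, min_area=4, max_area=200, max_aspect=2.0):
    """Return (x, y) centroids of compact bright blobs in a binary mask.

    mask: list of rows of 0/1 values (e.g. a thresholded top-hat image).
    Thresholds are hypothetical starting values.
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for r0 in range(h):
        for c0 in range(w):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Flood-fill one 4-connected component, collecting (x, y) pixels
            pixels = []
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                pixels.append((c, r))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w and mask[rr][cc] and not seen[rr][cc]:
                        seen[rr][cc] = True
                        queue.append((rr, cc))
            n = len(pixels)
            if not (min_area <= n <= max_area):
                continue  # single-pixel noise or very large smears
            mx = sum(x for x, _ in pixels) / n
            my = sum(y for _, y in pixels) / n
            # Second central moments (covariance of the pixel coordinates)
            cxx = sum((x - mx) ** 2 for x, _ in pixels) / n
            cyy = sum((y - my) ** 2 for _, y in pixels) / n
            cxy = sum((x - mx) * (y - my) for x, y in pixels) / n
            # Eigenvalues of the covariance = squared principal-axis spreads
            half_tr = (cxx + cyy) / 2
            disc = math.sqrt(((cxx - cyy) / 2) ** 2 + cxy ** 2)
            l1, l2 = half_tr + disc, half_tr - disc
            aspect = math.sqrt(l1 / l2) if l2 > 1e-12 else math.inf
            if aspect <= max_aspect:
                blobs.append((mx, my))
    return blobs


# A 3x3 dot (kept) next to a one-pixel-wide streak (rejected)
mask = [[0] * 12 for _ in range(6)]
for r in range(1, 4):
    for c in range(1, 4):
        mask[r][c] = 1
for c in range(5, 12):
    mask[4][c] = 1
print(find_round_blobs(mask))  # [(2.0, 2.0)]
```

The streak has essentially zero spread across its width, so its aspect ratio is infinite and it is dropped regardless of how bright it is.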

Here is a series of sample images from the data set: Sample Data

I have started working with both optical flow examples from the OpenCV docs, but they pick up all of the noise as tracks, which I need to avoid. What method would you recommend for this application?

EDIT: Following the suggestion below, I've added a top-hat filter before running the sequence through a Lucas-Kanade motion tracker. I modified the tracker slightly so that it returns all of the tracks it picks up; I then go through them to remove duplicates and calculate a velocity for each tracked particle.
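Given a track and the image timestamps, velocity and direction can be estimated per particle. A minimal sketch, assuming each track is a list of `(x, y)` pixel positions with one timestamp per point (the tracker code below does not store timestamps, so they would need to be recorded alongside each point). It fits position against time by least squares, which is less sensitive to single-frame jitter than using only the first and last points. `track_velocity` and the `scale` calibration factor (physical units per pixel) are hypothetical names.

```python
import math


def track_velocity(track, times, scale=1.0):
    """Least-squares velocity of one track.

    track: list of (x, y) pixel positions, one per frame
    times: matching timestamps in seconds
    scale: physical units per pixel (hypothetical calibration factor)
    Returns (speed, direction_deg, vx, vy). direction_deg is measured
    counter-clockwise from +x, with image y (which points down) flipped
    so that "up" on screen is +90 degrees.
    """
    n = len(track)
    if n != len(times) or n < 2:
        raise ValueError("need at least two points with matching timestamps")
    tm = sum(times) / n
    xm = sum(x for x, _ in track) / n
    ym = sum(y for _, y in track) / n
    stt = sum((t - tm) ** 2 for t in times)
    if stt == 0:
        raise ValueError("timestamps must not all be equal")
    # Slopes of x(t) and y(t) in pixels per second, converted by scale
    vx = scale * sum((t - tm) * (x - xm) for t, (x, _) in zip(times, track)) / stt
    vy = scale * sum((t - tm) * (y - ym) for t, (_, y) in zip(times, track)) / stt
    speed = math.hypot(vx, vy)
    direction_deg = math.degrees(math.atan2(-vy, vx))
    return speed, direction_deg, vx, vy


# Particle moving 3 px right and 4 px up on screen in one second
speed, direction, vx, vy = track_velocity([(0, 0), (3, -4)], [0.0, 1.0])
print(speed, round(direction, 2))  # 5.0 53.13
```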

This method still seems to pick up a lot of noise in the data. Perhaps I haven't chosen optimal parameters for the LK tracker?

```python
import cv2
import numpy as np

# Helper modules that ship with the OpenCV Python samples (samples/python)
import video
from common import draw_str

lk_params = dict(winSize=(10, 10),
                 maxLevel=5,
                 criteria=(cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 0.03))

feature_params = dict(maxCorners=3000,
                      qualityLevel=0.5,
                      minDistance=3,
                      blockSize=3)


class App:
    def __init__(self, video_src):
        self.track_len = 50       # max number of points kept per track
        self.detect_interval = 1  # look for new features every N frames
        self.tracks = []
        self.allTracks = []
        self.cam = video.create_capture(video_src)
        self.frame_idx = 0

    def run(self):
        maxFrame = 10000
        # Top-hat = frame minus its opening: keeps bright details smaller than the kernel
        kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (4, 4))
        while True:
            ret, frame = self.cam.read()
            if not ret or frame is None:  # end of the sequence
                break
            if self.frame_idx > maxFrame:
                break
            if frame.ndim == 3:
                frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            tophat1 = cv2.morphologyEx(frame, cv2.MORPH_TOPHAT, kernel)
            ret, frame_gray = cv2.threshold(tophat1, 127, 255, cv2.THRESH_BINARY)
            vis = cv2.cvtColor(frame_gray, cv2.COLOR_GRAY2BGR)

            if len(self.tracks) > 0:
                img0, img1 = self.prev_gray, frame_gray
                p0 = np.float32([tr[-1] for tr in self.tracks]).reshape(-1, 1, 2)
                # Forward-backward check: track p0 -> p1 -> p0r and keep only
                # points that return to within 1 px of where they started
                p1, st, err = cv2.calcOpticalFlowPyrLK(img0, img1, p0, None, **lk_params)
                p0r, st, err = cv2.calcOpticalFlowPyrLK(img1, img0, p1, None, **lk_params)
                d = abs(p0 - p0r).reshape(-1, 2).max(-1)
                good = d < 1
                new_tracks = []
                for tr, (x, y), good_flag in zip(self.tracks, p1.reshape(-1, 2), good):
                    if not good_flag:
                        continue
                    tr.append((x, y))
                    if len(tr) > self.track_len:
                        del tr[0]
                    new_tracks.append(tr)
                    # cv2 drawing functions need integer pixel coordinates
                    cv2.circle(vis, (int(x), int(y)), 2, (0, 255, 0), -1)
                self.tracks = new_tracks
                cv2.polylines(vis, [np.int32(tr) for tr in self.tracks], False, (0, 255, 0))
                draw_str(vis, (20, 20), 'track count: %d' % len(self.tracks))

            if self.frame_idx % self.detect_interval == 0:
                # Mask out the ends of existing tracks so they are not detected again
                mask = np.zeros_like(frame_gray)
                mask[:] = 255
                for x, y in [np.int32(tr[-1]) for tr in self.tracks]:
                    cv2.circle(mask, (int(x), int(y)), 5, 0, -1)
                p = cv2.goodFeaturesToTrack(frame_gray, mask=mask, **feature_params)
                if p is not None:
                    for x, y in np.float32(p).reshape(-1, 2):
                        self ...
```
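Because `goodFeaturesToTrack` returns corners on noise as well as on particles, another option is to accept the noisy tracks and reject them afterwards using properties a real particle track should have. A minimal sketch, assuming (as seems plausible for particles carried by the flow) that genuine tracks persist for several frames, travel a measurable distance, and move roughly in a straight line. `is_particle_track` and all of its thresholds are hypothetical; it could be applied to the collected tracks (for example `self.allTracks`) before velocities are computed.

```python
import math


def is_particle_track(track, min_len=10, min_disp=5.0, min_straightness=0.8):
    """Heuristic filter for one LK track (list of (x, y) points).

    Keeps tracks that are long enough, actually travel somewhere, and move
    in a fairly straight line. Thresholds are hypothetical starting values.
    """
    if len(track) < min_len:
        return False
    net = math.dist(track[0], track[-1])  # start-to-end displacement
    path = sum(math.dist(a, b) for a, b in zip(track, track[1:]))
    if net < min_disp or path == 0:
        return False
    return net / path >= min_straightness  # 1.0 means perfectly straight


flowing = [(i, 0.1 * i) for i in range(12)]  # steady drift across the field
jitter = [(0, 0), (1, 0)] * 6                # feature flickering in place
print(is_particle_track(flowing), is_particle_track(jitter))  # True False
```

The straightness ratio (net displacement divided by path length) is what separates a feature flickering back and forth on background noise from a particle that is actually being carried downstream.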


Any luck yet? I'm curious how you solved this.

I took your suggestion of using a top-hat filter and implemented it before feeding the sequence to the LK tracker. I posted the code I'm now using in the edit above.

If you pick up a lot of noise, maybe you could have a look at blob tracking augmented with Kalman filtering.
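A minimal sketch of that idea, assuming blob centroids have already been detected in each frame (for example with `cv2.SimpleBlobDetector` or a connected-component step on the top-hat image). Each track carries a constant-velocity Kalman filter, run independently on x and y; tracks are matched to detections greedily by distance to the predicted position within a gating radius, and a track that misses a few frames coasts on its prediction before being dropped, which helps when particles briefly overlap. The class names, the noise values `q` and `r`, `gate`, and `max_misses` are all hypothetical. OpenCV's `cv2.KalmanFilter` could replace the hand-written filter, and optimal assignment (e.g. `scipy.optimize.linear_sum_assignment`) is a more robust alternative to greedy matching when particles pass close together.

```python
import math


class AxisKalman:
    """1-D constant-velocity Kalman filter; state is [position, velocity]."""

    def __init__(self, pos, q=1e-2, r=1.0):
        self.x = [float(pos), 0.0]
        self.P = [[r, 0.0], [0.0, 100.0]]  # velocity is unknown at the start
        self.q, self.r = q, r              # process / measurement noise (assumed)

    def predict(self, dt):
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        # P <- F P F^T + q*I, with F = [[1, dt], [0, 1]]
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                     # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s              # Kalman gain
        innov = z - self.x[0]
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


class Track:
    def __init__(self, tid, x, y):
        self.id = tid
        self.kx, self.ky = AxisKalman(x), AxisKalman(y)
        self.misses = 0
        self.history = [(x, y)]


class BlobTracker:
    def __init__(self, gate=10.0, max_misses=3):
        self.gate, self.max_misses = gate, max_misses
        self.tracks, self.next_id = [], 0

    def step(self, detections, dt=1.0):
        """Advance one frame; detections is a list of (x, y) blob centroids."""
        preds = [(t.kx.predict(dt), t.ky.predict(dt)) for t in self.tracks]
        # Greedy nearest-neighbour matching inside the gating radius
        pairs = sorted((math.dist(p, det), ti, di)
                       for ti, p in enumerate(preds)
                       for di, det in enumerate(detections))
        used_t, used_d = set(), set()
        for dist, ti, di in pairs:
            if dist > self.gate:
                break
            if ti in used_t or di in used_d:
                continue
            used_t.add(ti)
            used_d.add(di)
            t = self.tracks[ti]
            t.kx.update(detections[di][0])
            t.ky.update(detections[di][1])
            t.misses = 0
            t.history.append((t.kx.x[0], t.ky.x[0]))
        for ti, t in enumerate(self.tracks):
            if ti not in used_t:
                t.misses += 1              # unmatched: coast on the prediction
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]
        for di, det in enumerate(detections):
            if di not in used_d:           # unmatched detection starts a new track
                self.tracks.append(Track(self.next_id, *det))
                self.next_id += 1
        return self.tracks


# Two hypothetical particles: one moving right, one moving down the image
tracker = BlobTracker(gate=10.0)
for k in range(10):
    tracks = tracker.step([(10 + 2 * k, 20.0), (50.0, 5 + 3 * k)])
for t in tracks:
    print(t.id, round(t.kx.x[1], 2), round(t.ky.x[1], 2))
```

The filtered velocity state (`kx.x[1]`, `ky.x[1]`, in pixels per frame here) gives direction and speed directly, and short-lived tracks created by noise can be discarded by requiring a minimum history length.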