Here is the code I use to pre-process a face. I do the following:

```cpp
// Step 1: detect landmarks inside the detected face rectangle r
vector<cv::Point2d> landmarks = landmark_detector->detectLandmarks(img_gray, Rect(r.x, r.y, r.width, r.height));

// Step 2: align the face
Mat aligned_image;
vector<cv::Point2d> aligned_landmarks;
aligner->align(img_gray, aligned_image, landmarks, aligned_landmarks);

// Step 3: normalize the face region
Mat normalized_region = normalizer->process(aligned_image, Rect(r.x, r.y, r.width, r.height), aligned_landmarks);

// Step 4: Tan & Triggs preprocessing
normalized_region = ((FlandMarkFaceAlign *)normalizer)->tan_triggs_preprocessing(normalized_region, gamma_correct, dog, contrast_eq);
```

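Step 3 tries to keep only the face region, and step 4 performs the Tan & Triggs preprocessing (gamma correction, difference-of-Gaussians filtering and contrast equalization).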
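The body of tan_triggs_preprocessing is not shown in this post, so the following is only a sketch of the standard Tan & Triggs chain, not the author's implementation. It works on a hypothetical `Image` struct (row-major doubles) instead of `cv::Mat`, assumes `gamma_correct`, `dog` and `contrast_eq` are simple on/off switches, uses replicated borders for the blur, and takes its defaults (gamma 0.2, sigma0 1, sigma1 2, alpha 0.1, tau 10) from the values suggested in the Tan & Triggs paper. `gaussianBlur` and `tanTriggs` are illustrative names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical row-major grayscale image of doubles (stands in for cv::Mat).
struct Image {
    int rows, cols;
    std::vector<double> px;
    double& at(int r, int c) { return px[static_cast<std::size_t>(r) * cols + c]; }
    double at(int r, int c) const { return px[static_cast<std::size_t>(r) * cols + c]; }
};

static int clampIndex(int i, int lo, int hi) { return std::max(lo, std::min(i, hi)); }

// Separable Gaussian blur, kernel radius ceil(3*sigma), replicated borders.
Image gaussianBlur(const Image& in, double sigma) {
    assert(static_cast<std::size_t>(in.rows) * in.cols == in.px.size());
    const int radius = static_cast<int>(std::ceil(3.0 * sigma));
    std::vector<double> k(2 * radius + 1);
    double sum = 0.0;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2.0 * sigma * sigma));
        sum += k[i + radius];
    }
    for (double& w : k) w /= sum;  // normalize so a flat image stays flat

    Image tmp = in, out = in;
    for (int r = 0; r < in.rows; ++r)  // horizontal pass
        for (int c = 0; c < in.cols; ++c) {
            double acc = 0.0;
            for (int i = -radius; i <= radius; ++i)
                acc += k[i + radius] * in.at(r, clampIndex(c + i, 0, in.cols - 1));
            tmp.at(r, c) = acc;
        }
    for (int r = 0; r < in.rows; ++r)  // vertical pass
        for (int c = 0; c < in.cols; ++c) {
            double acc = 0.0;
            for (int i = -radius; i <= radius; ++i)
                acc += k[i + radius] * tmp.at(clampIndex(r + i, 0, in.rows - 1), c);
            out.at(r, c) = acc;
        }
    return out;
}

// Tan & Triggs: gamma correction, DoG filtering, two-stage contrast
// equalization, then tanh compression into (-tau, tau).
Image tanTriggs(Image img, bool gamma_correct = true, bool dog = true,
                bool contrast_eq = true, double gamma = 0.2, double sigma0 = 1.0,
                double sigma1 = 2.0, double alpha = 0.1, double tau = 10.0) {
    if (gamma_correct)
        for (double& v : img.px) v = std::pow(v, gamma);  // assumes v >= 0
    if (dog) {
        const Image g0 = gaussianBlur(img, sigma0), g1 = gaussianBlur(img, sigma1);
        for (std::size_t i = 0; i < img.px.size(); ++i) img.px[i] = g0.px[i] - g1.px[i];
    }
    if (contrast_eq) {
        // Stage 1: divide by mean(|I|^alpha)^(1/alpha).
        double m = 0.0;
        for (double v : img.px) m += std::pow(std::abs(v), alpha);
        m /= static_cast<double>(img.px.size());
        if (m > 0.0) for (double& v : img.px) v /= std::pow(m, 1.0 / alpha);
        // Stage 2: same, but with |I| truncated at tau.
        m = 0.0;
        for (double v : img.px) m += std::pow(std::min(std::abs(v), tau), alpha);
        m /= static_cast<double>(img.px.size());
        if (m > 0.0) for (double& v : img.px) v /= std::pow(m, 1.0 / alpha);
        // Compress remaining extreme values.
        for (double& v : img.px) v = tau * std::tanh(v / tau);
    }
    return img;
}
```

With OpenCV one would normally express the same steps with `cv::pow` and `cv::GaussianBlur` on a `CV_32F` Mat rather than hand-written loops; the loops are only here to keep the sketch self-contained.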

Below is the code for steps 1 and 2.

STEP 1

Step 1 calls a landmark detector. The flandmark detector is a good choice (better than, for example, a cascade-based detector).

My flandmark-based detector returns the landmark positions that flandmark finds in the image, but it also does an additional task:

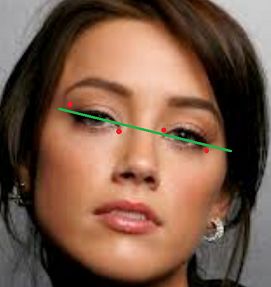

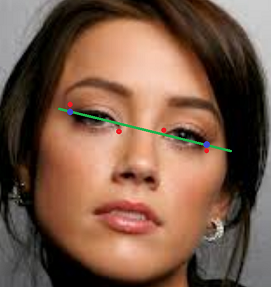

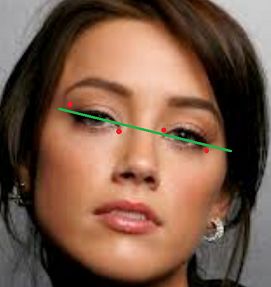

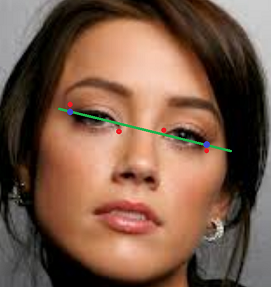

I fit a line by linear regression through the four eye landmarks (LEFT_EYE_OUTER, LEFT_EYE_INNER, RIGHT_EYE_INNER, RIGHT_EYE_OUTER). Then I compute two points on this regression line (the blue ones), taken at the x-coordinates of the two outer eye corners. These points are called pp1 and pp2 in the code.

I used this linear regression class.

I use these two points to rotate the face. They are more robust than using, for example, LEFT_EYE_OUTER/RIGHT_EYE_OUTER or LEFT_EYE_INNER/RIGHT_EYE_INNER, because the line is fitted to all four eye corners, so a detection error in any single corner has less influence on the rotation angle.
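Since the linked regression class is not reproduced here, this is a minimal stand-in sketch of the same idea: an ordinary least-squares fit of y = a + b*x (the same form as `x*b + a` in the code below) through the four eye corners, then pp1/pp2 taken on that line at the outer-corner x-coordinates. `Pt`, `fitLine` and `eyeAlignPoints` are hypothetical names, and the eye corners are assumed to be passed in the order LEFT_EYE_OUTER, LEFT_EYE_INNER, RIGHT_EYE_INNER, RIGHT_EYE_OUTER.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Pt { double x, y; };  // hypothetical stand-in for cv::Point2d

// Ordinary least-squares fit of y = a + b*x (regression of y on x).
// Returns false when all x are (nearly) equal, i.e. the slope is undefined.
bool fitLine(const std::vector<Pt>& pts, double& a, double& b) {
    assert(pts.size() >= 2);
    const double n = static_cast<double>(pts.size());
    double sx = 0, sy = 0, sxx = 0, sxy = 0;
    for (const Pt& p : pts) { sx += p.x; sy += p.y; sxx += p.x * p.x; sxy += p.x * p.y; }
    const double denom = n * sxx - sx * sx;
    if (std::fabs(denom) < 1e-12) return false;
    b = (n * sxy - sx * sy) / denom;  // slope
    a = (sy - b * sx) / n;            // intercept
    return true;
}

// eyes = {LEFT_EYE_OUTER, LEFT_EYE_INNER, RIGHT_EYE_INNER, RIGHT_EYE_OUTER} (assumed order).
// pp1/pp2 keep the outer corners' x and take y from the fitted line.
bool eyeAlignPoints(const std::vector<Pt>& eyes, Pt& pp1, Pt& pp2) {
    double a = 0, b = 0;
    if (eyes.size() != 4 || !fitLine(eyes, a, b)) return false;
    pp1 = {eyes[0].x, a + b * eyes[0].x};
    pp2 = {eyes[3].x, a + b * eyes[3].x};
    return true;
}
```

For example, corners at (0, 0), (10, 2), (20, 0) and (30, 2) give the line y = 0.4 + 0.04x, so pp1 = (0, 0.4) and pp2 = (30, 1.6): the zig-zag of the individual corners is averaged out.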

So, with the first step I get the following results:

The code is as follows:

```cpp
vector<cv::Point2d> FlandmarkLandmarkDetection::detectLandmarks(const Mat & image, const Rect & face){
    vector<Point2d> landmarks;
    int bbox[4] = { face.x, face.y, face.x + face.width, face.y + face.height };

    // flandmark writes M (x, y) pairs; std::vector frees them on every return path
    vector<double> points(2 * this->model->data.options.M);

    // http://cmp.felk.cvut.cz/~uricamic/flandmark/
    // Wrap the Mat in a stack IplImage header (new IplImage(image) was never deleted)
    IplImage ipl_image(image);
    if (flandmark_detect(&ipl_image, bbox, this->model, points.data()) != 0) {
        return landmarks; // non-zero means detection failed: return an empty vector
    }

    for (int i = 0; i < this->model->data.options.M; i++) {
        landmarks.push_back(Point2d(points[2 * i], points[2 * i + 1]));
    }

    // Fit y = a + b*x through the four eye corners
    LinearRegression lr;
    lr.addPoint(Point2D(landmarks[LEFT_EYE_OUTER].x, landmarks[LEFT_EYE_OUTER].y));
    lr.addPoint(Point2D(landmarks[LEFT_EYE_INNER].x, landmarks[LEFT_EYE_INNER].y));
    lr.addPoint(Point2D(landmarks[RIGHT_EYE_INNER].x, landmarks[RIGHT_EYE_INNER].y));
    lr.addPoint(Point2D(landmarks[RIGHT_EYE_OUTER].x, landmarks[RIGHT_EYE_OUTER].y));

    // lr.getCoefDeterm(), lr.getCoefCorrel() and lr.getStdErrorEst() can be used to check the fit quality
    double a = lr.getA(); // intercept
    double b = lr.getB(); // slope

    // Points on the fitted line at the x-coordinates of the outer eye corners.
    // Point2d (not cv::Point) keeps sub-pixel precision.
    cv::Point2d pp1(landmarks[LEFT_EYE_OUTER].x, landmarks[LEFT_EYE_OUTER].x * b + a);
    cv::Point2d pp2(landmarks[RIGHT_EYE_OUTER].x, landmarks[RIGHT_EYE_OUTER].x * b + a);

    landmarks.push_back(pp1); // landmarks[LEFT_EYE_ALIGN]
    landmarks.push_back(pp2); // landmarks[RIGHT_EYE_ALIGN]

    return landmarks;
}
```

STEP 2

Step 2 aligns the face. It is also based on

https://github.com/MasteringOpenCV/code/blob/master/Chapter8_FaceRecognition/preprocessFace.cpp

The code is as follows:

```cpp
double FlandMarkFaceAlign::align(const Mat &image, Mat &dst_image, vector<Point2d> &landmarks, vector<Point2d> &dst_landmarks){
    const double DESIRED_LEFT_EYE_X = 0.27; // Controls how much of the face is visible after preprocessing.
    const double DESIRED_LEFT_EYE_Y = 0.4;
    int desiredFaceWidth = 144;
    int desiredFaceHeight = desiredFaceWidth;

    Point2d leftEye = landmarks[LEFT_EYE_ALIGN];
    Point2d rightEye = landmarks[RIGHT_EYE_ALIGN];

    // Get the center between the 2 ...
```
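The align() listing above is cut off, so its remaining lines are not reproduced here. As a rough, hedged sketch of the kind of transform the linked preprocessFace.cpp builds from the eye positions: rotate about the midpoint of the two eyes so they become horizontal, scale so their distance becomes (1 - 2 * DESIRED_LEFT_EYE_X) * width, and shift the midpoint to (width / 2, DESIRED_LEFT_EYE_Y * height). The rotation/scale part uses the same formula as `cv::getRotationMatrix2D`. `P2`, `Affine`, `applyAffine` and `eyeAlignTransform` are hypothetical names, and the result is a plain 2x3 matrix rather than a `cv::Mat`.

```cpp
#include <cassert>
#include <cmath>

struct P2 { double x, y; };
// Row-major 2x3 affine matrix: x' = m0*x + m1*y + m2, y' = m3*x + m4*y + m5.
struct Affine { double m[6]; };

P2 applyAffine(const Affine& A, P2 p) {
    return {A.m[0] * p.x + A.m[1] * p.y + A.m[2], A.m[3] * p.x + A.m[4] * p.y + A.m[5]};
}

// leftX/leftY play the role of DESIRED_LEFT_EYE_X/Y; width/height the desired face size.
Affine eyeAlignTransform(P2 leftEye, P2 rightEye, double leftX = 0.27,
                         double leftY = 0.4, int width = 144, int height = 144) {
    const P2 c = {(leftEye.x + rightEye.x) * 0.5, (leftEye.y + rightEye.y) * 0.5};
    const double dx = rightEye.x - leftEye.x, dy = rightEye.y - leftEye.y;
    const double len = std::sqrt(dx * dx + dy * dy);
    assert(len > 0.0);
    const double scale = (1.0 - 2.0 * leftX) * width / len;  // desired eye distance / current
    const double angle = std::atan2(dy, dx);                 // eye-line tilt in radians
    const double alpha = scale * std::cos(angle), beta = scale * std::sin(angle);
    // Rotation + scale about the eye midpoint (getRotationMatrix2D layout).
    Affine A{{alpha, beta, (1 - alpha) * c.x - beta * c.y,
              -beta, alpha, beta * c.x + (1 - alpha) * c.y}};
    // Move the eye midpoint to its desired position in the output image.
    A.m[2] += width * 0.5 - c.x;
    A.m[5] += height * leftY - c.y;
    return A;
}
```

With OpenCV, the six numbers would go into a 2x3 `CV_64F` Mat and be passed to `cv::warpAffine` with the desired output size; the same matrix can also map the landmarks into dst_landmarks.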


I'm wondering about this, too. Did you get any results so far?

Applying AAM or flandmark to find the eye positions might be another idea.

This is very complex/difficult, but it gets the job done.