How to use contours and Harris corners Functions in solvePnP?

Hi

I have code to extract the 2D coordinates from prior knowledge, using e.g. Harris Corners and contour of the object. I'm using these features because the objects are without textures so ORB or SIFT or SURF not going to work. My goal is to get the 2D correspondences for my 3D cad model points and use them in solvePnPRansac to track the object and get 6D Pose in real-time.

I created code for Harris Corners Detection and Contours Detection as well. They are in two different C++ source codes. Here is the C++ code for Harris Corners Detection

void CornerDetection::imageCB(const sensor_msgs::ImageConstPtr& msg)

{

if(blockSize_harris == 0)

blockSize_harris = 1;

cv::Mat img, img_gray, myHarris_dst;

cv_bridge::CvImagePtr cvPtr;

try

{

cvPtr = cv_bridge::toCvCopy(msg, sensor_msgs::image_encodings::BGR8);

}

catch (cv_bridge::Exception& e)

{

ROS_ERROR("cv_bridge exception: %s", e.what());

return;

}

cvPtr->image.copyTo(img);

cv::cvtColor(img, img_gray, cv::COLOR_BGR2GRAY);

myHarris_dst = cv::Mat::zeros( img_gray.size(), CV_32FC(6) );

Mc = cv::Mat::zeros( img_gray.size(), CV_32FC1 );

cv::cornerEigenValsAndVecs( img_gray, myHarris_dst, blockSize_harris, apertureSize, cv::BORDER_DEFAULT );

for( int j = 0; j < img_gray.rows; j++ )

for( int i = 0; i < img_gray.cols; i++ )

{

float lambda_1 = myHarris_dst.at<cv::Vec6f>(j, i)[0];

float lambda_2 = myHarris_dst.at<cv::Vec6f>(j, i)[1];

Mc.at<float>(j,i) = lambda_1*lambda_2 - 0.04f*pow( ( lambda_1 + lambda_2 ), 2 );

}

cv::minMaxLoc( Mc, &myHarris_minVal, &myHarris_maxVal, 0, 0, cv::Mat() );

this->myHarris_function(img, img_gray);

cv::waitKey(2);

}

void CornerDetection::myHarris_function(cv::Mat img, cv::Mat img_gray)

{

myHarris_copy = img.clone();

if( myHarris_qualityLevel < 1 )

myHarris_qualityLevel = 1;

for( int j = 0; j < img_gray.rows; j++ )

for( int i = 0; i < img_gray.cols; i++ )

if( Mc.at<float>(j,i) > myHarris_minVal + ( myHarris_maxVal - myHarris_minVal )*myHarris_qualityLevel/max_qualityLevel )

cv::circle( myHarris_copy, cv::Point(i,j), 4, cv::Scalar( rng.uniform(0,255), rng.uniform(0,255), rng.uniform(0,255) ), -1, 8, 0 );

cv::imshow( harris_win, myHarris_copy );

}

And here the C++ function for Countrours Detection

void TrackSequential::ContourDetection(cv::Mat thresh_in, cv::Mat &output_)

{

cv::Mat temp;

cv::Rect objectBoundingRectangle = cv::Rect(0,0,0,0);

thresh_in.copyTo(temp);

std::vector<std::vector<cv::Point> > contours;

std::vector<cv::Vec4i> hierarchy;

cv::findContours(temp, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_SIMPLE);

if(contours.size()>0)

{

std::vector<std::vector<cv::Point> > largest_contour;

largest_contour.push_back(contours.at(contours.size()-1));

objectBoundingRectangle = cv::boundingRect(largest_contour.at(0));

int x = objectBoundingRectangle.x+objectBoundingRectangle.width/2;

int y = objectBoundingRectangle.y+objectBoundingRectangle.height/2;

cv::circle(output_,cv::Point(x,y),10,cv::Scalar(0,255,0),2);

}

}

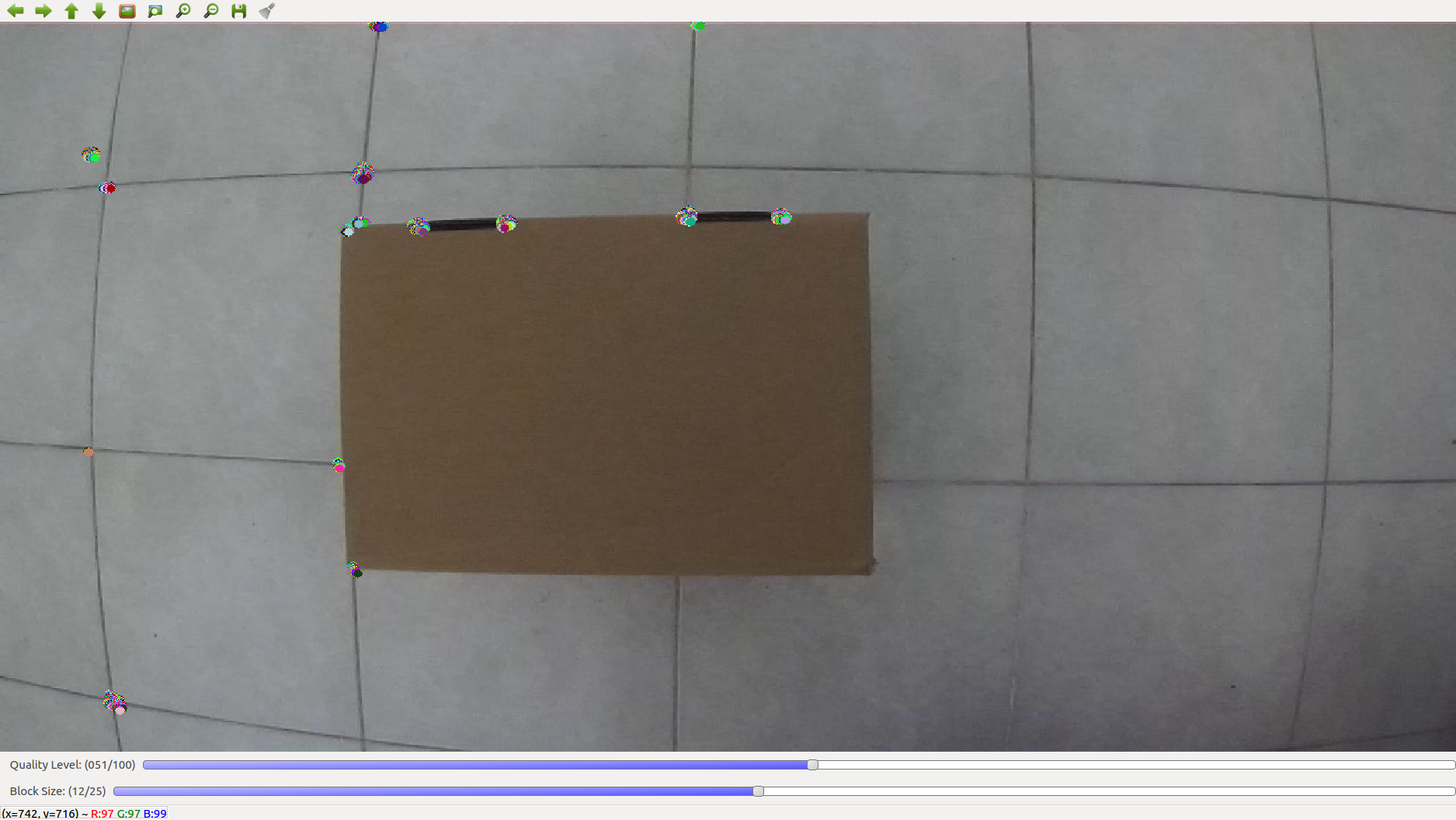

Here the image od detected 2d Points (Harris Corners)

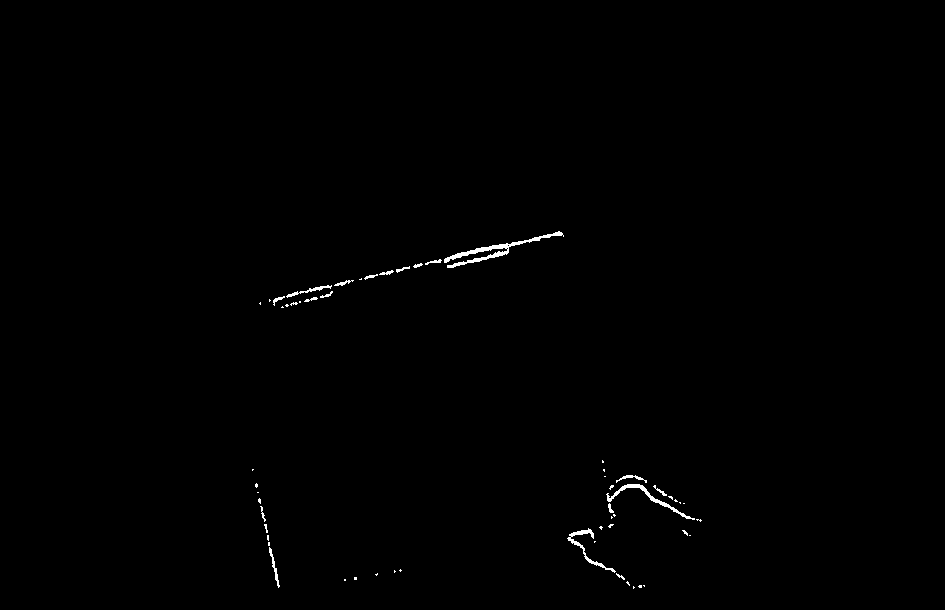

And Countours

Also, I have the 3D CAD model of the object I like to track and estimate the 6D Pose. My question is how to use the detected 2D Points from Harris Corners and Contours Functions in solvePnPRansac or solvePnP to track the object and get the 6D Pose in real-time?

Thanks

whatever you use to find 2d points, the main problem will be assigning them to corresponding 3d points (entirely non-trivial)

see, this works nicely, if you have 2d facial landmarks (which are ordered !), and a (dense) 3d head model, so you could sample 3d points for an initial 2d detection, and later calculate the pose for a landmarks detection "in the wild"

what is your actual 3d object ? (i remember some cardboard box in some prev. post, which also will have ambiguities, since you can only see 5 or so of 8 corners)

please explain "prior knowledge"

if you have an image of your detected 2d points, please show us !

3D object is a box. I have an Image of the detected 2D Points. Ok I show it in my question. Will add the image of the detected Corners and Contour. Ok?

Its a box like in the image. And the Camera is on the top. Can be from different angles but always must be the camera on the top down view

sorry to say so, but your images are all shite. low contrast, bad lighting, terrible lens distortion, improper thresholding, you can't do anything without improving that first

then, as long as your box is lying flat, you only have 1 rotation angle, and 2 translation dims, it's not a 6DoF problem.

also, your contours are not visible, if you want to draw a green circle, you need a 3 channel image

maybe you can try to find better contours again, get the

minAreaRect()from that, then you already have the rotation around z, and the translation against (0,0) in the image (you might not need solvePnP at all)Well, I will get better images, better contrast, rectify them, proper thresholding. Secondly also will get a better contour. What do you mean by draw a green circle, I need a 3 channel image? What green circle? The problem is not 3D as the camera can move and then I have a translation in all 3 axes plus one rotation. Then also box can move. So, if get better Harris Corners and Contours then still how to solve the problem using solvePnP ?

last line in your contour code:

no circles visible (in fact you draw black circles on black bg), bc. only 1st channel of the Scalar is used with 1 channel image there

i have no idea how to, and it might be, you simply can't:

- you can't use all 8 3d points from the box, bc. not all of them are visible

- even if you restrict it to the top plane (4 pts), you have a sorting problem (which 2d point belongs to which 3d one ?). for a rectangle it's also ambiguous for 90° rot

- even if you have better corners, you have no way to tell if they're from top or ground plane ...

(more)So mainly even if I get the other corners points by moving the camera and view with different angles then still remain the sorting problem (which 2d point belongs to which 3d one ?). Correct? But must be some research and some solution for this kind of Problem, no? Well, I can get other points from Houghlines, Then I know the dimension of the box so also I can get at least which point belongs to which side of the box. Would be that helpful? Because I will have the 3D CAD model before doing 6D Pose Estimation

what about the Surface Matching Algorithm. Using Point Clouds I got some good results. So means, point clouds would be a better option?

maybe ! but how do you get a point cloud from your current setup (single camera) ?` and how would you "reduce" it, so it mainly contains the box points ?