Disparity Map to Point Cloud gone wrong

Hello everyone! I'm trying to do a face reconstruction from 3 images in Python with OpenCV, but I've run into some issues, specifically with generating the point cloud from the disparity map. These are the steps I have followed so far:

1. Intrinsic calibration using checkerboard images and `cv2.calibrateCamera`, with the flag `cv2.CALIB_FIX_PRINCIPAL_POINT` (although removing the flag didn't seem to change anything).
2. Extrinsic calibration using `cv2.stereoCalibrate` with the flag `cv2.CALIB_FIX_INTRINSIC`, and `cv2.stereoRectify` with the final image size equal to the initial one (for some reason the flag `cv2.CALIB_ZERO_DISPARITY` didn't seem to change anything either).
3. Normalization of the new images, then rectification using `cv2.initUndistortRectifyMap`, and afterwards cropping the two images using some combination of the ROI parameters (it may be worth noting that the two ROIs have different sizes).
4. The `cv2.StereoSGBM` algorithm to compute the disparity map. I tuned the parameters until I got the image shown below.
5. `cv2.reprojectImageTo3D` on the resulting disparity map, using the Q matrix from `cv2.stereoRectify`. I then used the Python `open3d` library to plot the resulting point cloud.
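For reference, the `cv2.stereoRectify` documentation gives Q (for horizontally aligned cameras) in the form below, where $(c_{x1}, c_y)$ is the principal point of the first rectified camera, $c_{x2}$ the x coordinate of the second camera's principal point, $f$ the rectified focal length and $T_x$ the horizontal shift between the cameras. `cv2.reprojectImageTo3D` applies it to every pixel $(x, y)$ of the disparity map with disparity $d$, so $x$ and $y$ are treated as pixel coordinates of the full rectified image that Q was computed for.

$$
Q = \begin{bmatrix}
1 & 0 & 0 & -c_{x1} \\
0 & 1 & 0 & -c_y \\
0 & 0 & 0 & f \\
0 & 0 & -\frac{1}{T_x} & \frac{c_{x1} - c_{x2}}{T_x}
\end{bmatrix},
\qquad
\begin{bmatrix} X \\ Y \\ Z \\ W \end{bmatrix}
= Q \begin{bmatrix} x \\ y \\ d \\ 1 \end{bmatrix},
\qquad
\text{3D point} = \left(\frac{X}{W}, \frac{Y}{W}, \frac{Z}{W}\right)
$$

Expanding the last two rows gives the depth $Z/W = f T_x / (c_{x1} - c_{x2} - d)$, i.e. $-f T_x / d$ when `cv2.CALIB_ZERO_DISPARITY` makes $c_{x1} = c_{x2}$ ($T_x$ is typically negative for a left/right camera ordering, so the depth comes out positive). One consequence: in that case, feeding the raw disparity (16 times too large) instead of the divided one scales $X$, $Y$ and $Z$ all by the same factor of 1/16, so the shape of the point cloud does not change.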

The results are presented here:

the rectified and cropped images

the obtained disparity map (variant)

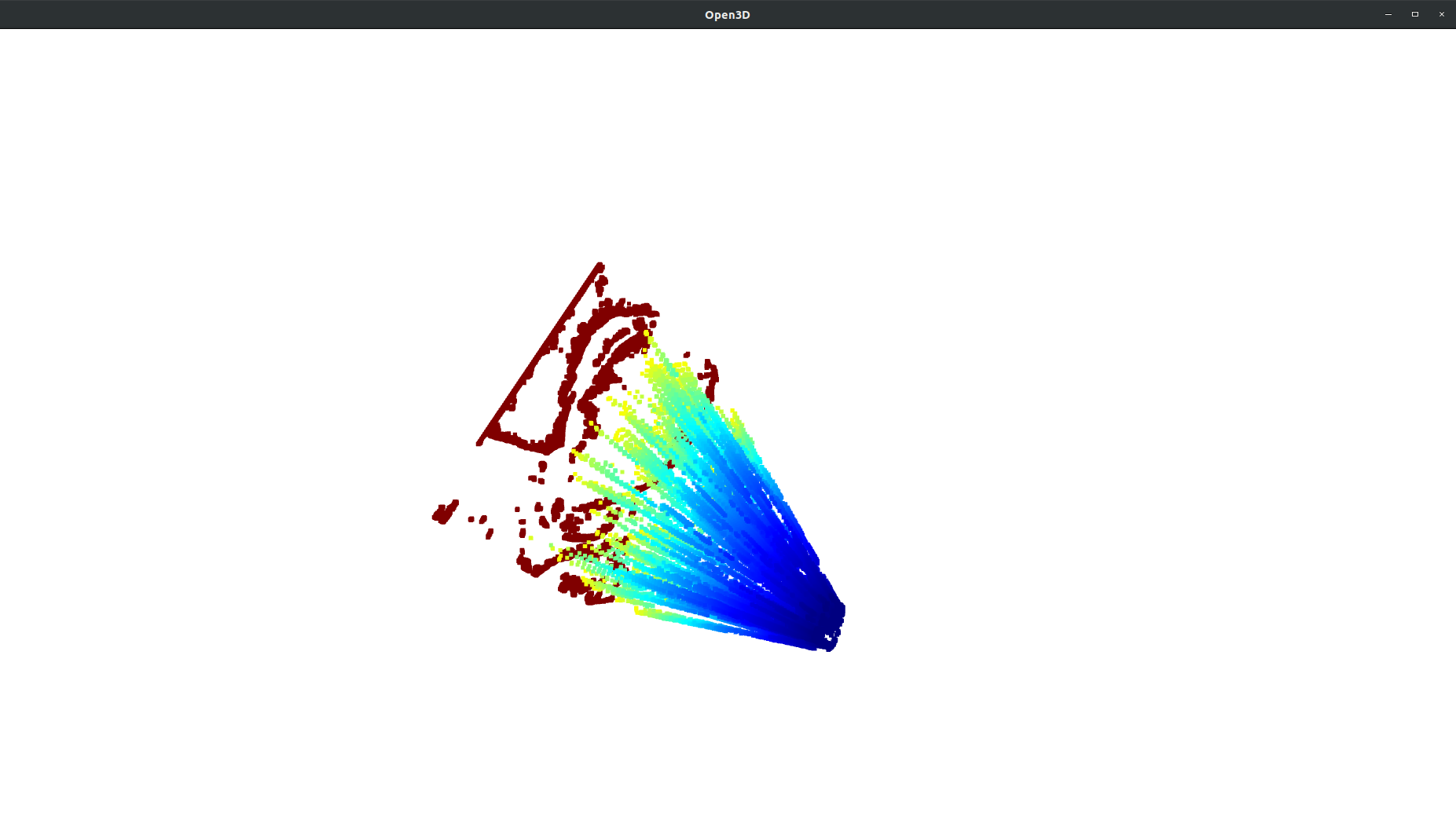

the point cloud visualized in open3d (it looks like the disparity map seen from a very specific angle)

I also tried reimplementing `cv2.reprojectImageTo3D`, without success. Following the advice from here, I also converted the disparity map to float and divided it by 16, which improved the disparity map somewhat, but the point cloud still looks the same. I should also mention that with a `minDisparity` of 0, I get -16 as the minimum value in the disparity map, which I found very weird.
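Since a reimplementation of `cv2.reprojectImageTo3D` came up, here is a minimal NumPy sketch of the per-pixel computation the OpenCV documentation describes: build $[x, y, d, 1]$ for every pixel, multiply by Q, then divide by W. It assumes the disparity is already a float in pixels (divided by 16) and that x and y are the column and row indices of the full rectified image; the function name `reproject_to_3d` is hypothetical, and unlike the OpenCV function it has no `handleMissingValues` option.

```python
import numpy as np


def reproject_to_3d(disp, Q):
    """NumPy sketch of the math behind cv2.reprojectImageTo3D.

    disp: float disparity map in pixels (already divided by 16), shape (H, W).
    Q: 4x4 reprojection matrix from cv2.stereoRectify.
    Returns an (H, W, 3) array of (X, Y, Z); pixels where W == 0 become inf/nan.
    """
    h, w = disp.shape
    # Row (y) and column (x) index of every pixel, as floats.
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    # Homogeneous vectors [x, y, d, 1] for every pixel, shape (H, W, 4).
    homog = np.stack([xs, ys, disp.astype(np.float64), np.ones_like(xs)], axis=-1)
    # [X, Y, Z, W]^T = Q @ [x, y, d, 1]^T, applied to all pixels at once.
    xyzw = homog @ Q.T
    # Divide by W; zero W (e.g. d == 0 with aligned principal points) gives inf/nan.
    with np.errstate(divide="ignore", invalid="ignore"):
        return xyzw[..., :3] / xyzw[..., 3:4]
```

A quick sanity check is to run it and `cv2.reprojectImageTo3D(disp, Q)` on the same float disparity map and compare the valid pixels.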

I also tried using `cv2.CALIB_USE_INTRINSIC_GUESS` in `cv2.stereoCalibrate`, but that resulted in worse rectified images (and consequently a worse disparity map). Following the link above, I also tried not cropping the images and instead using `cv2.getValidDisparityROI` to crop the disparity map afterwards, but then the images don't really look rectified (corresponding points don't appear to lie on the same rows), and the resulting disparity map is much worse.
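On the cropping question: Q from `cv2.stereoRectify` describes pixel coordinates in the full-size rectified images, so cropping the left and right images with different offsets changes both the pixel coordinates and the measured disparities relative to what Q expects. The sketch below shows that bookkeeping in NumPy. The helper name `uncrop_stereo_coords` is hypothetical, and the `(x0, y0, w, h)` layout is an assumption modelled on the ROIs that `cv2.stereoRectify` returns. It is only an illustration of the arithmetic, not a confirmed diagnosis of the problem in this thread.

```python
import numpy as np


def uncrop_stereo_coords(disp_cropped, left_roi, right_roi):
    """Map a disparity computed on cropped rectified images back to the
    full rectified frame that Q from cv2.stereoRectify refers to.

    Hypothetical helper. left_roi / right_roi are (x0, y0, w, h) crop
    rectangles taken from the full-size rectified left/right images.
    disp_cropped is a float disparity map (already divided by 16); mask
    invalid pixels (e.g. set them to NaN) before calling this.
    Returns (x_full, y_full, d_full), each with the shape of disp_cropped.
    """
    lx0, ly0 = left_roi[0], left_roi[1]
    rx0, ry0 = right_roi[0], right_roi[1]
    if ly0 != ry0:
        # Different row offsets break the row-to-row correspondence that
        # the matcher relies on; no disparity offset can undo that.
        raise ValueError("left and right crops must start on the same row")
    h, w = disp_cropped.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Column x' in the cropped left image was column x' + lx0 before cropping.
    x_full = xs + lx0
    y_full = ys + ly0
    # Matched columns: d' = (xL - lx0) - (xR - rx0) = d - (lx0 - rx0).
    d_full = disp_cropped + (lx0 - rx0)
    return x_full, y_full, d_full
```

Cropping both images with the same rectangle keeps the disparity offset at zero and the rows aligned; only the shift of x and y relative to Q's principal point then remains.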

What am I doing wrong? Can you please help me with this?

The output of the stereo disparity computation is a 2D Mat (a NumPy array in Python) of 16-bit signed values corresponding to the rectified image of the first input array (typically the left camera). The least significant 4 bits are a fraction, so you are correct to convert the array to float and divide by 16.0. The -16 value (-1.0 after division) is the 'not a number' value: it means 'the disparity could not be determined at this cell'. More precisely, `StereoSGBM` marks such cells with (minDisparity - 1) * 16, which is -16 for a minDisparity of 0. The only valid disparities then range from minDisparity up to (but not including) minDisparity + numDisparities.
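In code, the conversion and the invalid-cell check described above might look like the following minimal NumPy sketch. The function name `decode_sgbm_disparity` is hypothetical; it only assumes `raw` is the int16 array returned by `compute()` and that you pass in the same `minDisparity` the matcher was created with.

```python
import numpy as np


def decode_sgbm_disparity(raw, min_disparity=0):
    """Convert the fixed-point int16 output of StereoSGBM.compute() to
    disparities in pixels, plus a mask of valid cells.

    raw: int16 array returned by matcher.compute(left, right).
    min_disparity: the minDisparity the matcher was created with.
    """
    # 4 fractional bits: dividing by 16 gives the disparity in pixels.
    disp = raw.astype(np.float32) / 16.0
    # Invalid cells hold (min_disparity - 1) * 16 in the raw output,
    # i.e. min_disparity - 1 after scaling, so they fall below min_disparity.
    valid = disp >= min_disparity
    return disp, valid
```

Only the points at `valid` cells should go into the point cloud. A disparity of exactly 0 is a valid match but reprojects to infinity when the principal points are aligned (`cv2.CALIB_ZERO_DISPARITY`), so it is usually worth filtering out as well.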

You might try googling for 'opencv stereo depth to point cloud example' and reading through example code to look for inconsistencies with your implementation.