Fewer, larger features with ORB?

I am using ORB in Python to detect features in images, in order to match a new image against a set of reference images. The new images have sub-images of interest against unknown backgrounds.

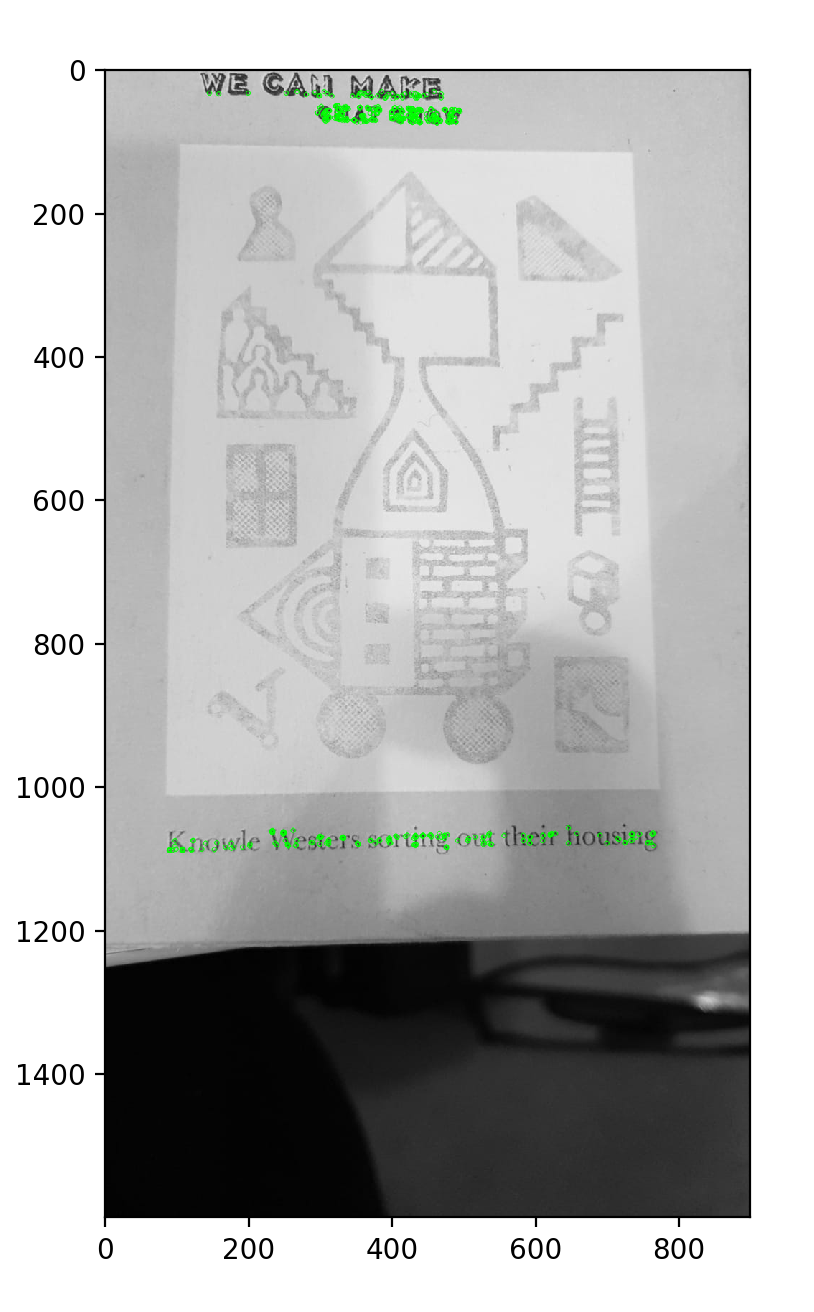

I am finding that, in some cases, many keypoints are being detected to the exclusion of larger keypoints of interest. Take the following example:

If I request the (default) 500 keypoints, none of them is in the region of interest. The writing in the image is actually 'background' to what I am trying to match, which is the large sub-image against a paler background. If I request (a lot) more keypoints, I start to get some in the region of interest, but now everything runs correspondingly more slowly: the detection and the matching.

In this case, looking for larger features would help. What parameter(s) can I provide to cv2.ORB_create() to increase the size of the features I am interested in as a proportion of the overall image size? (The images vary in size.) Or is this not possible?

And a bigger question (I am pretty new to computer vision): are there any heuristics people have tried to help match objects somewhere inside user-taken snapshots (on mobiles) with unknown objects in the background?

Thanks for your help.

I'm afraid feature detection does not work the way you intend it to.

It is NOT meant to detect or match objects (it does not even know about those); it is meant to find a homography between two rotated / translated / scaled views of the same scene.

Thanks, I'm aware of all of that and I am using homography. But those processes are a function of the detected keypoints/features in the first place, and my question is relevant to that aspect alone.

Did you find a solution to your problem? If not, could you please add the original image so I can run some tests and see whether I can find one?

Thanks for your offer. I haven't got it working yet, but one approach I thought of was to (a) request far more keypoints, so that there is a reasonable number in all areas of the image, and then (b) use something like a non-maximal suppression algorithm to filter them down to a subset with a more-or-less uniform distribution across the image.
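A rough sketch of step (b), for illustration only. It uses simple grid bucketing rather than true non-maximal suppression: the image is split into cells and only the strongest few keypoints per cell are kept. The function name `filter_keypoints_by_grid` and the `grid` and `per_cell` defaults are made up for this example; the only assumption about the keypoints is that they have `.pt` and `.response` attributes, as `cv2.KeyPoint` does.

```python
from collections import defaultdict


def filter_keypoints_by_grid(keypoints, image_shape, grid=(8, 8), per_cell=5):
    """Keep the strongest `per_cell` keypoints in each cell of a grid.

    Hypothetical helper, not part of OpenCV. `keypoints` is any sequence of
    objects with `.pt` (x, y) and `.response`; `image_shape` is
    (height, width, ...), e.g. `img.shape`.
    """
    height, width = image_shape[:2]
    rows, cols = grid
    cells = defaultdict(list)
    for kp in keypoints:
        x, y = kp.pt
        # Clamp so points on the right/bottom border land in the last cell.
        col = min(int(x * cols / width), cols - 1)
        row = min(int(y * rows / height), rows - 1)
        cells[(row, col)].append(kp)

    kept = []
    for bucket in cells.values():
        # Strongest detector response first.
        bucket.sort(key=lambda kp: kp.response, reverse=True)
        kept.extend(bucket[:per_cell])
    return kept
```

With OpenCV this would sit between detection and description: create the detector with a large `nfeatures` (for example `cv2.ORB_create(nfeatures=5000)`), call `orb.detect(img, None)`, pass the result through `filter_keypoints_by_grid(kps, img.shape)`, and then call `orb.compute(img, kps)` on the reduced set. Detection still pays for the larger keypoint count, but descriptor computation and matching only see the filtered subset.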

The original image is here

Hi, sorry if I am late. I have tried your image with different feature detectors (ORB, SIFT, ...) and I am getting the same result as you: the keypoints are detected only at the top and bottom of the image. I think this is because these detectors apply some kind of filter that blurs the image (for example, SIFT uses a Gaussian filter). The center of your image is already blurred, so after filtering the contours in that region become too soft for the feature detector to find any keypoints there.