Delay-induced performance hit - context switching costs?

Hi folks, I'm seeing odd behaviour in calls to cv_stereoBM: the time a call takes to do its work depends on the time between calls. I don't think this is necessarily specific to cv_stereoBM; it is simply one place where the behaviour shows up. (I'm using OpenCV 3.4, though I do not believe the issue is version specific.)

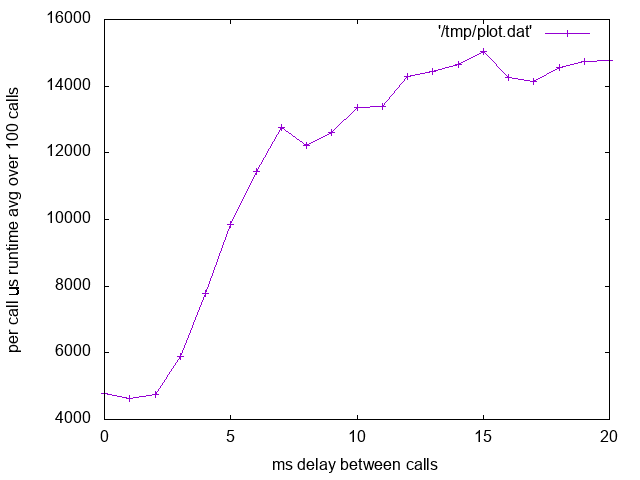

First, here is what I see. If I call out to cv_stereoBM as a sequence of 100 calls, all passing the same images and parameters, then the average time per call is 4772 microseconds. However, if I systematically increase the delay between calls, one millisecond at a time, and compute the average runtime of the call (taking into account the delay), I observe an increasing time-per-call that eventually flat-lines at approximately 14600 microseconds. Here is a plot of the observed behaviour:

I began investigating this because OpenCV ran noticeably slower on more recent hardware (i7-7820X) than it did on older hardware (an old i5).

I thought it might be related to AVX/AVX2 context switching overhead, but the cv_stereoBM algorithm only uses SSE, which I believe does not suffer the same hit. To be sure, I also recompiled OpenCV (gcc 5.5) with -mno-avx and -mno-avx2, with no change.

Has anyone else experienced this kind of behaviour, or does anyone have an idea of what its root cause might be?

Thank you so much for any suggestions you might have.

Sam

You should add the code and the data to reproduce the issue.
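
For instance, a minimal reproduction of the measurement described in the question might look something like the sketch below. It is only an illustration, not the original poster's code: `time_calls`, `sweep`, and `stand_in_workload` are hypothetical names, the workload is a placeholder to be replaced with the real stereo call, and it assumes that only the call itself is timed (the sleep between calls is excluded).

```python
import time


def time_calls(workload, delay_s, n_calls=100):
    """Return the average wall-clock time of workload() in microseconds.

    Sleeps delay_s seconds before each call. Only the call itself is
    timed; the sleep is excluded (an assumption about the measurement).
    """
    total = 0.0
    for _ in range(n_calls):
        if delay_s > 0:
            time.sleep(delay_s)
        start = time.perf_counter()
        workload()
        total += time.perf_counter() - start
    return total / n_calls * 1e6


def stand_in_workload():
    # Hypothetical placeholder: replace with the actual stereo
    # block-matching call on a fixed pair of images.
    return sum(i * i for i in range(20000))


def sweep(workload, max_delay_ms=5, n_calls=20):
    """Measure average call time for delays of 0..max_delay_ms milliseconds."""
    return [
        (delay_ms, time_calls(workload, delay_ms / 1000.0, n_calls))
        for delay_ms in range(max_delay_ms + 1)
    ]


if __name__ == "__main__":
    for delay_ms, avg_us in sweep(stand_in_workload):
        print(f"{delay_ms} ms delay: {avg_us:.0f} us per call")
```

Posting something of this shape, together with the two input images, would let others check whether the same curve appears on their hardware.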

Maybe a profiler (Gprof, Intel VTune, ...) could give more insight into the problem.