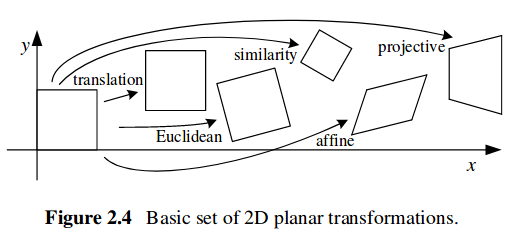

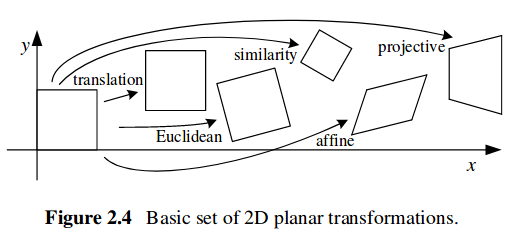

See the book *Computer Vision: Algorithms and Applications* by Richard Szeliski, which has a section on 2D transformations:

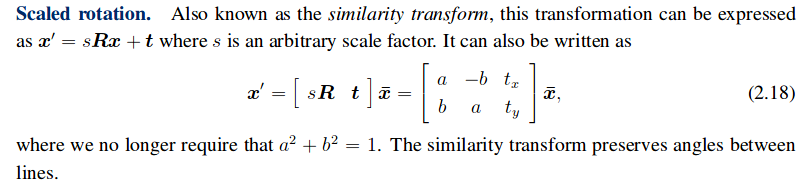

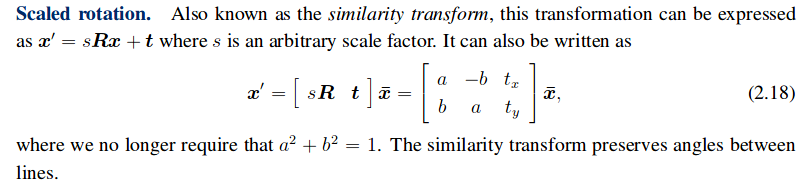

If you can assume that the two objects are related by a similarity transformation, you have:
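In homogeneous coordinates (standard form, with rotation angle $\theta$, uniform scale $s$ and translation $(t_x, t_y)$), this reads:

$$
\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
=
\begin{bmatrix}
s\cos\theta & -s\sin\theta & t_x \\
s\sin\theta & s\cos\theta & t_y \\
0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix}
$$

So, given an estimated $H = [h_{ij}]$ of this form, the scale and angle can be read back as $s = \sqrt{h_{11}^2 + h_{21}^2}$ and $\theta = \operatorname{atan2}(h_{21}, h_{11})$.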

You can then use `findHomography()` with a robust method (e.g. RANSAC) to discard the outliers. The estimated H should correspond to this transformation, with its last row close to [0, 0, 1].
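To show what the robust scheme does without depending on OpenCV, here is a minimal pure-Python sketch that fits a similarity directly with RANSAC. It is not what `findHomography()` does internally. It treats points as complex numbers, so a similarity is z' = a·z + b with a = s·e^{iθ}. The helper names (`fit_similarity`, `ransac_similarity`), the threshold and the iteration count are illustrative assumptions.

```python
import cmath
import math
import random


def fit_similarity(src, dst):
    """Least-squares fit of dst ~ a*src + b, with points as complex numbers.

    a = s * exp(i*theta) encodes scale s and rotation theta; b is the translation.
    """
    n = len(src)
    mu_s = sum(src) / n
    mu_d = sum(dst) / n
    num = sum((q - mu_d) * (p - mu_s).conjugate() for p, q in zip(src, dst))
    den = sum(abs(p - mu_s) ** 2 for p in src)
    a = num / den
    b = mu_d - a * mu_s
    return a, b


def ransac_similarity(src, dst, thresh=1.0, iters=200, seed=0):
    """Hypothetical helper: RANSAC over minimal 2-point samples, then refit on inliers."""
    rng = random.Random(seed)
    idx = range(len(src))
    best = []
    for _ in range(iters):
        i, j = rng.sample(idx, 2)
        if src[i] == src[j]:  # degenerate sample: scale/rotation undefined
            continue
        a, b = fit_similarity([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in idx if abs(a * src[k] + b - dst[k]) < thresh]
        if len(inliers) > len(best):
            best = inliers
    # Least-squares refit on the consensus set of the best hypothesis.
    a, b = fit_similarity([src[k] for k in best], [dst[k] for k in best])
    return abs(a), math.degrees(cmath.phase(a)), b, best


# Synthetic check: scale 2, rotation 30 deg, translation (5, -3), two outliers.
true_a = 2 * cmath.exp(1j * math.radians(30))
true_b = 5 - 3j
src = [complex(x, y) for x in range(4) for y in range(4)]
dst = [true_a * z + true_b for z in src]
dst[0] += 10   # outlier
dst[5] -= 8j   # outlier
scale, angle, t, inliers = ransac_similarity(src, dst)
print(scale, angle, t, len(inliers))
```

The equivalent 3×3 matrix is `[[a.real, -a.imag, b.real], [a.imag, a.real, b.imag], [0, 0, 1]]`. If your OpenCV version provides it, `cv2.estimateAffinePartial2D()` performs this kind of robust 4-DOF fit directly.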

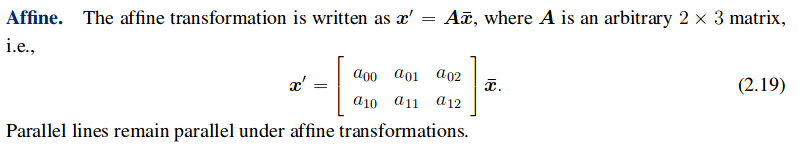

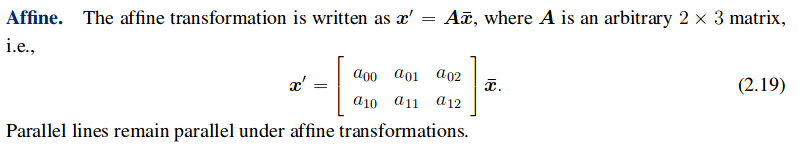

Or, more generally, an affine transformation, which can be built from a sequence of specific elementary operations:

In this case, you should be able to decompose the affine transformation into those operations, see e.g. here or here.
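As a sketch of one possible decomposition (not necessarily the one used in the linked answers), the 2×2 linear part A = [[a, b], [c, d]] of the affine matrix can be factored QR-style as A = R(θ) · [[s_x, m], [0, s_y]]: a shear-plus-scale upper-triangular factor followed by a rotation. The function names are hypothetical; the translation is simply the third column and is left out here.

```python
import math


def decompose_affine(a, b, c, d):
    """Factor A = [[a, b], [c, d]] as R(theta) @ [[sx, m], [0, sy]]."""
    sx = math.hypot(a, c)            # length of the first column
    theta = math.atan2(c, a)         # rotation that aligns the first column
    m = (a * b + c * d) / sx         # shear term
    sy = (a * d - b * c) / sx        # det(A) / sx; negative means a reflection
    return theta, sx, sy, m


def compose_affine(theta, sx, sy, m):
    """Inverse operation: return (a, b, c, d) of R(theta) @ [[sx, m], [0, sy]]."""
    ct, st = math.cos(theta), math.sin(theta)
    return (ct * sx, ct * m - st * sy, st * sx, st * m + ct * sy)


# Round trip: build A from known parameters, then recover them.
A = compose_affine(math.radians(20), 1.5, 0.8, 0.3)
theta, sx, sy, m = decompose_affine(*A)
print(math.degrees(theta), sx, sy, m)
```

This factorization is unique as long as A is invertible and s_x is taken positive; other conventions (e.g. shear applied before or after scaling, or an SVD-based rotation-scale-rotation split) give different but equally valid parameters.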

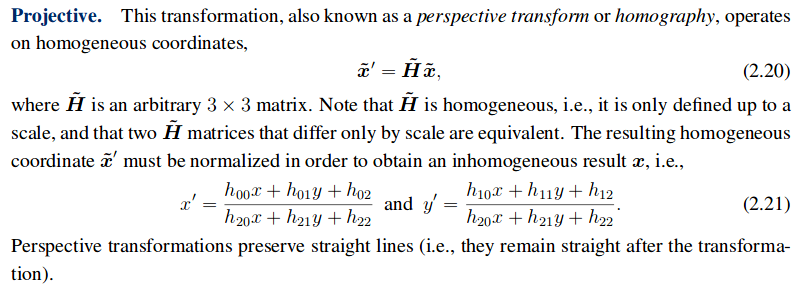

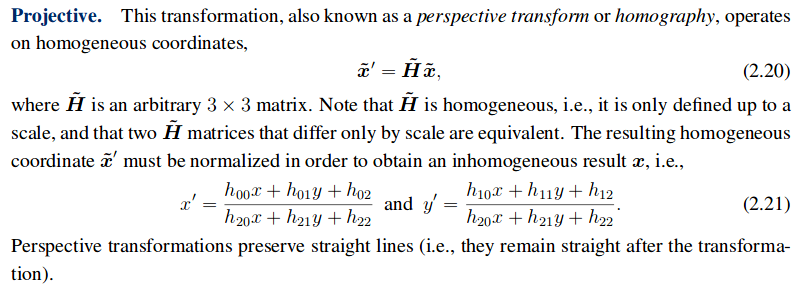

Most likely, you are dealing with pictures of an object taken from different viewpoints. In this case, the transformation induced in the image plane is a perspective transformation, or homography:
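To make the difference with the previous models concrete, here is a minimal plain-Python sketch (hypothetical helper names) that maps a point through a 3×3 H, including the division by the third homogeneous coordinate, and checks whether H is in fact affine (last row [0, 0, 1] after normalization).

```python
def apply_homography(H, x, y):
    """Map (x, y) through a 3x3 homography H (list of rows), with the perspective divide."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return xh / w, yh / w


def is_affine(H, tol=1e-9):
    """True if, after normalizing by H[2][2], the last row is [0, 0, 1] within tol."""
    return abs(H[2][0] / H[2][2]) < tol and abs(H[2][1] / H[2][2]) < tol


H_aff = [[2.0, 0.0, 3.0], [0.0, 2.0, -1.0], [0.0, 0.0, 1.0]]
H_persp = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.001, 0.0, 1.0]]
print(apply_homography(H_aff, 1.0, 1.0), is_affine(H_aff))
print(apply_homography(H_persp, 100.0, 50.0), is_affine(H_persp))
```

With `H_persp`, the divisor w grows with x, so points farther to the right are shrunk more: a position-dependent scaling that no affine transform can produce. The tolerance is an arbitrary assumption; since h31 and h32 have units of 1/pixel, their effect grows with image size.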

For some specific camera motions, the homography reduces to one of the simpler transformations above, but in general this is not the case. Moreover, the homography model is only valid if:

- the considered object is planar

- or the camera motion is purely a rotation
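A short derivation with the pinhole model shows why these are the two cases. Conventions assumed here: intrinsics $K_1, K_2$, camera motion $\mathbf{X}_2 = R\,\mathbf{X}_1 + \mathbf{t}$, and a plane $\mathbf{n}^\top \mathbf{X}_1 = d$ expressed in camera-1 coordinates. Since $\mathbf{n}^\top \mathbf{X}_1 / d = 1$ for points on the plane:

$$
\mathbf{X}_2 = R\,\mathbf{X}_1 + \mathbf{t}\,\frac{\mathbf{n}^\top \mathbf{X}_1}{d}
= \left(R + \frac{\mathbf{t}\,\mathbf{n}^\top}{d}\right)\mathbf{X}_1
\quad\Longrightarrow\quad
\mathbf{x}_2 \simeq K_2 \left(R + \frac{\mathbf{t}\,\mathbf{n}^\top}{d}\right) K_1^{-1}\,\mathbf{x}_1 = H\,\mathbf{x}_1 .
$$

With $\mathbf{t} = \mathbf{0}$ (pure rotation), $H = K_2 R K_1^{-1}$ no longer depends on the plane, so it holds for any scene. For a non-planar scene with $\mathbf{t} \neq \mathbf{0}$, the image of $\mathbf{X}_1$ depends on its depth, so no single $H$ maps all points. With the plane written as $\mathbf{n}^\top \mathbf{X} + d = 0$ instead, the sign of the $\mathbf{t}$ term flips.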

For more information, see this tutorial: Basic concepts of the homography explained with code.