Is there any way to thin out the characters after Otsu thresholding?

Hi

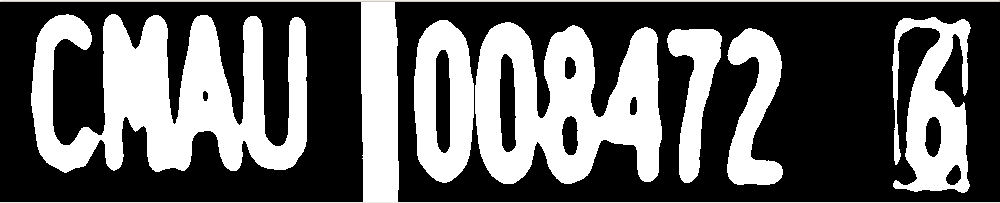

I am trying to segment all the characters (see the image above), and there is one issue I still can't solve. THRESH_OTSU works best, except that it merges some adjacent characters.

When I tried adaptive thresholding, the characters no longer merged, but I could not find parameters that work for most images.

I know that some combination of erosion and dilation might work in such cases. The trouble is that, even though the characters get thinner after erosion, the areas between the characters only grow. I am probably misusing or misunderstanding erosion.

Thanks in advance for any suggestions.

Here are the two source images.

Unlike the more classical case of car number plates, the characters here are often black on a light background. This is why I count the contours (or the rectangles obtained from minAreaRect) to decide when the image has to be inverted.

Could you add the original images?

@sturkmen, sure. I added two source images.

Have you tried segmenting with k-means clustering? Adjusting the number of clusters might give you the segmentation you want.

You can skeletonize the image to thin it out: http://felix.abecassis.me/2011/09/ope...