net.forward() crash in Faster-RCNN Object Detection Sample

Hello,

I'm trying to run the Object Detection Sample (object_detection.cpp) from the opencv repo. I'm using the "faster_rcnn_inception_v2_coco_2018_01_28" model from open model zoo, but I get an error when my code reaches net.forward()->...->initInfEngine().

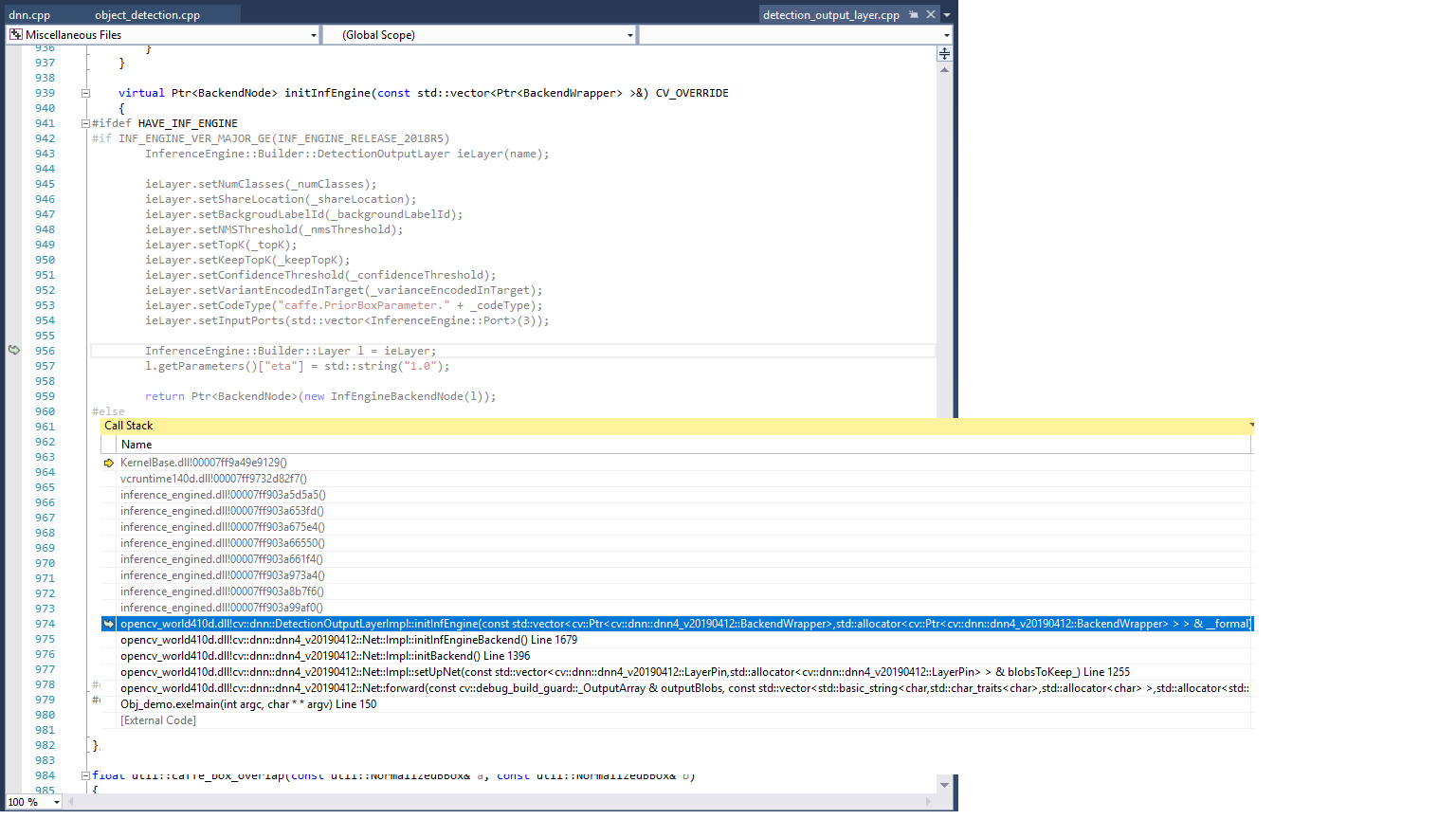

Stack Trace:

opencv_world410d.dll!cv::dnn::DetectionOutputLayerImpl::initInfEngine(const std::vector<cv::ptr<cv::dnn::dnn4_v20190412::backendwrapper>,std::allocator<cv::ptr<cv::dnn::dnn4_v20190412::backendwrapper> > > & __formal) Line 956 C++

opencv_world410d.dll!cv::dnn::dnn4_v20190412::Net::Impl::initInfEngineBackend() Line 1679 C++

opencv_world410d.dll!cv::dnn::dnn4_v20190412::Net::Impl::initBackend() Line 1396 C++

opencv_world410d.dll!cv::dnn::dnn4_v20190412::Net::Impl::setUpNet(const std::vector<cv::dnn::dnn4_v20190412::layerpin,std::allocator<cv::dnn::dnn4_v20190412::layerpin> > & blobsToKeep_) Line 1255 C++

opencv_world410d.dll!cv::dnn::dnn4_v20190412::Net::forward(const cv::debug_build_guard::_OutputArray & outputBlobs, const std::vector<std::basic_string<char,std::char_traits<char>,std::allocator<char> >,std::allocator<std::basic_string<char,std::char_traits<char>,std::allocator<char> > > > & outBlobNames) Line 2844 C++

Obj_demo.exe!main(int argc, char * * argv) Line 150 C++

OS: Windows 10

IDE: Visual Studio 2015

OpenCV 4.1.0 (opencv-master)

OpenVINO 2019 R1

Test 1: crash

modelPath = frozen_inference_graph.pb from faster_rcnn_inception_v2_coco_2018_01_28.tar.gz

configPath = faster_rcnn_inception_v2_coco_2018_01_28.pbtxt

Test 2: Same as Test 1, except I put the model through OpenVINO's model optimizer. Still crashes.

python "C:\Program Files (x86)\IntelSWTools\openvino_2019.1.087\deployment_tools\model_optimizer/mo_tf.py" --input_model=C:\models\faster_rcnn_inception_v2_coco_2018_01_28\frozen_inference_graph.pb --tensorflow_use_custom_operations_config "C:\Program Files (x86)\IntelSWTools\openvino_2019.1.087\deployment_tools\model_optimizer\extensions\front\tf\faster_rcnn_support.json" --tensorflow_object_detection_api_pipeline_config C:\models\faster_rcnn_inception_v2_coco_2018_01_28\pipeline.config

And then I feed the xml and bin to dnn::readNet().

Test 3: Use DNN_BACKEND_OPENCV. This works (just for information).

net.setPreferableBackend(DNN_BACKEND_OPENCV);

Appreciate any help!

Hmm, it's a bit strange, because OpenVINO 2019R1 has a bug which doesn't let me run the Faster-RCNN network; it fails with the following error:

ConfidenceThreshold parameter is wrong in layer detection_out. It should be > 0.

Good to know someone else is having problems too, lol. I've also tried the "ssd_mobilenet_v2_coco" model with both the (pb/pbtxt) and (xml/bin) versions and it works. So it could be just an isolated case. Hmm.

I have the same problem. SSD works, but FasterRCNN and MaskRCNN don't work.

Hi.

I'm having the same issue:

Tested with xml/bin, converted using OpenVINO 2019 R2 tools.

-- Faster-RCNN and R-FCN crash with this message:

Traceback (most recent call last):
  File "detector_v0.1/ocv-fotodetector_r-fcn.py", line 61, in <module>
    cvOut = cvNet.forward()
cv2.error: OpenCV(4.1.1-openvino) /home/jenkins/workspace/OpenCV/OpenVINO/build/opencv/modules/dnn/src/dnn.cpp:2182: error: (-215:Assertion failed) inp.total() in function 'allocateLayers'

-- Models made from SSD via the TFOD API (tf 1.12.3 and tfod_1.12), or pre-trained with Caffe, work OK in native TF (pb and pbtxt), in Caffe, or converted to Intel's xml/bin.

Seems like it's an open bug: https://github.com/opencv/opencv/issu...
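
Until that is fixed, the only thing reported to work in this thread is Test 3 (DNN_BACKEND_OPENCV). Below is a rough sketch of how one might fall back to it automatically. Note that forwardWithFallback is a hypothetical helper, not an OpenCV function, and it only helps when the failure surfaces as an exception (like the 'allocateLayers' assertion above). A hard crash inside initInfEngine(), as in the original stack trace, would not be caught this way. I'm also assuming the net can be re-initialized on another backend after a failed forward(); I haven't verified that against every OpenCV build.

```cpp
#include <exception>
#include <iostream>

// Hypothetical helper (not part of OpenCV): run a forward pass on the
// preferred backend and retry once on a fallback backend if it throws.
// Net is expected to provide setPreferableBackend(int), setInput(blob)
// and forward(), as cv::dnn::Net does.
template <typename Net, typename Blob>
auto forwardWithFallback(Net& net, const Blob& blob,
                         int preferredBackend, int fallbackBackend)
    -> decltype(net.forward())
{
    net.setPreferableBackend(preferredBackend);
    net.setInput(blob);
    try {
        return net.forward();
    } catch (const std::exception& e) {
        // cv::Exception derives from std::exception, so assertion
        // failures raised inside the dnn module land here.
        std::cerr << "Preferred backend failed: " << e.what()
                  << "\nRetrying with fallback backend.\n";
    }
    // Second attempt; if this one throws too, the exception propagates.
    net.setPreferableBackend(fallbackBackend);
    net.setInput(blob);
    return net.forward();
}
```

With OpenCV this would be called as forwardWithFallback(net, blob, cv::dnn::DNN_BACKEND_INFERENCE_ENGINE, cv::dnn::DNN_BACKEND_OPENCV), where net is a cv::dnn::Net and blob comes from cv::dnn::blobFromImage(). The helper is a template only so that it compiles without OpenCV headers.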