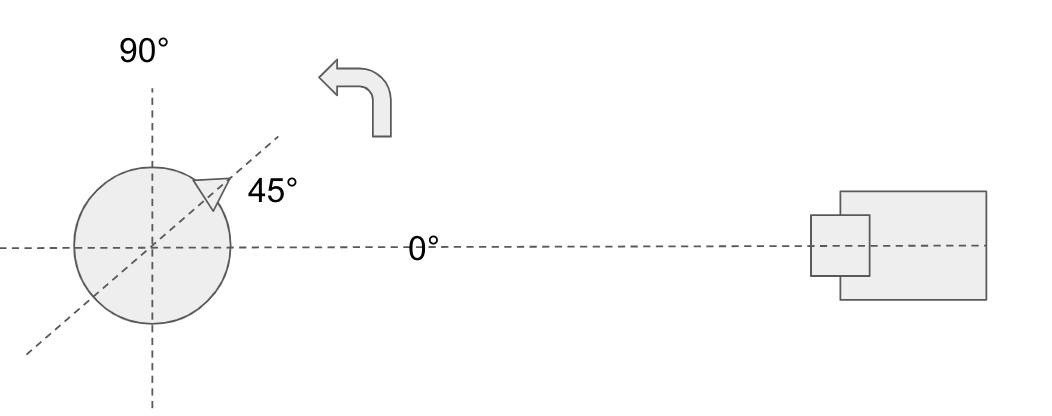

i still think, you want a head pose estimation here. the gist is: you can use solvePnP to get an rvec and a tvec, containing rotation / translation wrt. the camera.

for this, you will need 2d landmarks (e.g from dlib, openpose, or from opencv's landmark models) and corresponding 3d points. i'll try with FacemarkLBF here:

// create and load the facemarks model

cv::Ptr<cv::face::Facemark> facemark;

facemark = cv::face::createFacemarkLBF();

facemark->loadModel(landmarksModel);

// load the 68 3d points from file (see below !)

std::vector<cv::Point3d> pts3d;

cv::FileStorage fs2("points3d.yml",0);

fs2["points"] >> pts3d;

fs2.release();

then for each image, detect a face, then get the current landmarks:

std::vector<cv::Rect> rects;

face_cascade.detectMultiScale(gray_img, faces, 1.4, 2, cv::CASCADE_SCALE_IMAGE, cv::Size(30, 30));

std::vector<cv::Rect> faces(1,rects[0]);

std::vector< std::vector<cv::Point2f> > shapes;

facemark->fit(gray_img,faces,shapes)

std::vector<cv::Point2d> &pts2d;

for(size_t k=0; k<shapes[0].size(); k++)

pts2d.push_back(shapes[0][k]);

now we can apply solvePnP:

// if you did not calibrate it, use a camMatrix based on img size:

cv::Mat rvec,tvec;

cv::Mat camMatrix;

int max_d = std::max(s.width,s.height);

camMatrix = (cv::Mat_<double>(3,3) <<

max_d, 0, s.width/2.0,

0, max_d, s.height/2.0,

0, 0, 1.0);

// 2d -> 3d correspondence

cv::solvePnP(pts3d, pts2d, camMatrix, cv::Mat(1,4,CV_64F,0.0), rvec, tvec, false, cv::SOLVEPNP_EPNP);

the whole code is here

and, for your convenience, here's points3d.yml:

%YAML:1.0

---

points: [ -7.4077796936035156e+001, 4.5610500335693359e+001,

1.7611330032348633e+001, -7.6078399658203125e+001,

2.4455335617065430e+001, 1.4652364253997803e+000,

-7.0680282592773438e+001, 3.8770267963409424e+000,

1.6104341506958008e+001, -6.9542381286621094e+001,

-1.8663349151611328e+001, -5.0522098541259766e+000,

-6.0891132354736328e+001, -3.7201663970947266e+001,

-4.9577393531799316e+000, -4.7551403045654297e+001,

-5.1671474456787109e+001, 2.1515935897827148e+001,

-3.3833507537841797e+001, -6.4209655761718750e+001,

3.3763854980468750e+001, -1.8493196487426758e+001,

-7.5527656555175781e+001, 4.2787197113037109e+001,

-2.5200850963592529e+000, -7.7372253417968750e+001,

4.5473331451416016e+001, 1.3970505714416504e+001,

-7.3213897705078125e+001, 6.4313529968261719e+001,

3.0962856292724609e+001, -6.6279350280761719e+001,

3.5533737182617188e+001, 4.6108547210693359e+001,

-5.3055961608886719e+001, 1.9751256942749023e+001,

5.1060363769531250e+001, -3.2454692840576172e+001,

6.6386039733886719e+001, 5.8377300262451172e+001,

-1.4232730865478516e+001, 5.9445739746093750e+001,

6.3227752685546875e+001, 4.3665418624877930e+000,

4.6228359222412109e+001, 7.0979812622070312e+001,

2.6926740646362305e+001, 4.6090355515480042e-001,

6.5315643310546875e+001, 4.6220058441162109e+001,

4.7723823547363281e+001, -5.8375602722167969e+001,

5.7522071838378906e+001, 7.4911415100097656e+001,

-4.7820030212402344e+001, 6.2965705871582031e+001,

9.6279922485351562e+001, -3.4796894073486328e+001,

6.4059089660644531e+001, 1.0583456420898438e+002,

-2.3467020034790039e+001, 6.1613960266113281e+001,

1.1014395904541016e+002, -1.2404515266418457e+001,

5.8514854431152344e+001, 1.1110581970214844e+002,

9.6587600708007812e+000, 5.9617969512939453e+001,

1.1002123260498047e+002, 2.0711694717407227e+001,

6.3654747009277344e+001, 1.0981579589843750e+002,

3.2074752807617188e+001, 6.5145515441894531e+001,

1.0512077331542969e+002, 4.5245258331298828e+001,

6.3173934936523438e+001, 9.4144226074218750e+001,

5.4559543609619141e+001, 5.6469257354736328e+001,

7.4634750366210938e+001, -1 ...

(more)

maybe use dlib to get face landmark then caculate the angle like: https://youtu.be/j49RbZf_SgY

i think, you need a "head pose estimation", maybe something like this

I used this tensorflow code for head pose estimation a while back and it worked well for me