Optimization for single-board PCs (like Orange Pi, Raspberry Pi...) on Linux

Hello.

My problem in short: my application works well on a desktop, but on a single-board PC it freezes. Even plain video output from the webcam (imshow()) glitches.

I developed the application on a desktop; it reads from a USB webcam and processes the video stream. Desktop parameters:

OS: Win7 64-bit

CPU: AMD A4-4000 (2 cores @ 3.00 GHz)

GPU: integrated Radeon HD 7480D

RAM: 8 GB

On this PC my application runs without issues (I didn't measure the actual FPS, but the video looks smooth enough).

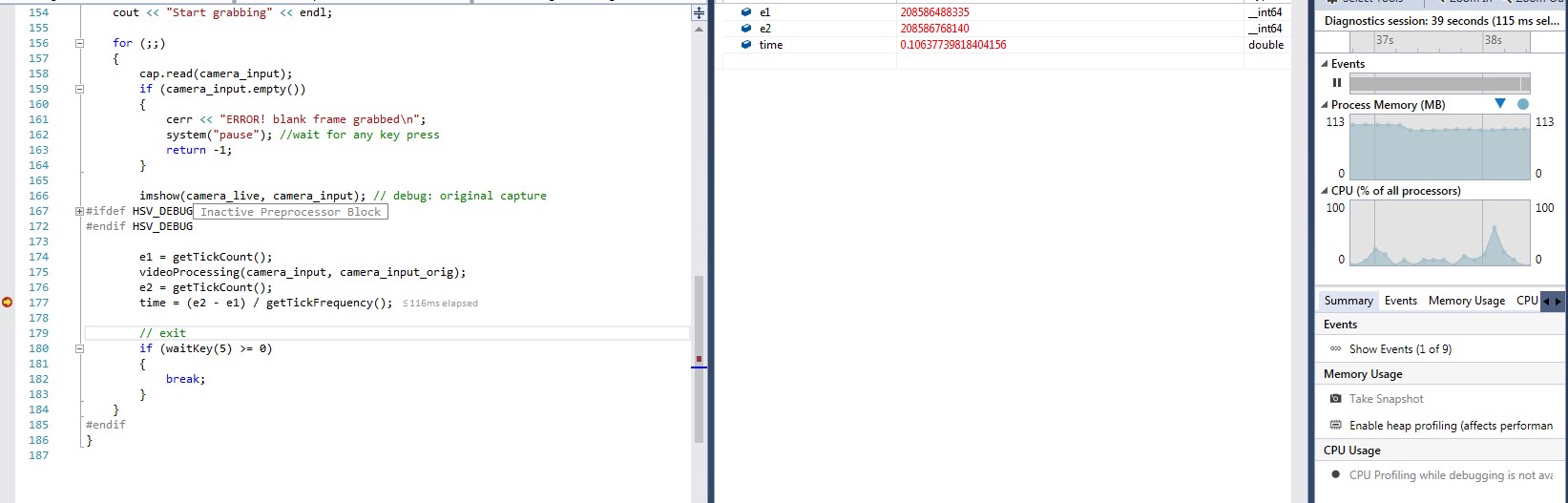

My video processing algorithm consumes ~120 MB of RAM and loads the CPU to about 50%.

It also takes about 70-120 ms to process each frame.
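Since the actual FPS was not measured, here is a minimal sketch of how a per-frame time could be measured and turned into a frame rate. It uses `std::chrono` rather than OpenCV's tick counter so that it stands alone. The helper names `msToFps` and `averageFrameMs` are made up for illustration, and `work` stands in for whatever grabs and processes one frame.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <thread>

// Convert a per-frame processing time in milliseconds to frames per second.
// Returns 0 for non-positive input instead of dividing by zero.
double msToFps(double ms_per_frame) {
    return ms_per_frame > 0.0 ? 1000.0 / ms_per_frame : 0.0;
}

// Call `work` (a hypothetical stand-in for "grab and process one frame")
// `frames` times and return the average wall-clock milliseconds per call.
template <typename Work>
double averageFrameMs(Work work, int frames) {
    assert(frames > 0);
    using clock = std::chrono::steady_clock;
    const auto start = clock::now();
    for (int i = 0; i < frames; ++i) {
        work();
    }
    const std::chrono::duration<double, std::milli> elapsed = clock::now() - start;
    return elapsed.count() / frames;
}
```

At the quoted 70 to 120 ms per frame, `msToFps` gives roughly 14.3 and 8.3 FPS, so processing alone would limit the desktop to about 8-14 frames per second regardless of what the camera delivers.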

The goal is to port my algorithm to a single-board PC. My SBPC is a Sopine. Parameters:

CPU: ARM A64 (4 cores @ 1.1 GHz, 64-bit)

GPU: ARM Mali-400 MP2 (dual-core)

RAM: 2 GB

I have tested it on the following operating systems: Armbian and Xenial Mate. Even when I only display the webcam output in OpenCV, the application freezes and the CPU load climbs to 100%.

I also tested the webcam output with a simple Linux application, cheese. On the Xenial Mate distribution it shows the stream smoothly, while my application in "imshow only" mode still freezes. On Armbian both cheese and my application freeze.

I'm not an advanced Linux user, but from my experience with Windows it looks like some drivers are missing.

The code is below. Maybe there are some issues with it? In general, it selects a specific color range in HSV, finds contours with large areas, and then prints whether an object of that color was found.

CODE:

```cpp
void videoProcessing(Mat& camera_input, Mat& original_img){
    camera_input.copyTo(original_img);

    // object color
    // H: 25 - 90
    // S: 0 - 255
    // V: 50 - 255
    cvtColor(camera_input, camera_input, COLOR_BGR2HSV); //Convert the captured frame
    inRange(camera_input, Scalar(25, 0, 50), Scalar(90, 255, 255), camera_input);
    //imshow("camera_ranged", camera_input); // debug: HSV colorspace image

    //morphological operations
    erode(camera_input, camera_input, getStructuringElement(MORPH_ELLIPSE, Size(5, 5)));
    dilate(camera_input, camera_input, getStructuringElement(MORPH_RECT, Size(10, 10)));
    dilate(camera_input, camera_input, getStructuringElement(MORPH_ELLIPSE, Size(10, 10)));
    //imshow("camera_morph", camera_input); // debug: morph image

    vector<vector<Point>> contours;
    findContours(camera_input, contours, RETR_LIST, CHAIN_APPROX_NONE, Point(0, 0));

    Mat drawing = Mat::zeros(camera_input.size(), CV_8UC3);
    for (size_t i = 0; i < contours.size(); i++)
    {
        Scalar color = Scalar(121, 113, 96);
        drawContours(drawing, contours, i, color, -1);
        //imshow("contour", drawing); // debug: show contours
    }

    gl_obj_decected = false;

    // Find areas
    vector<double> areas;
    for (size_t i = 0; i < contours.size(); i++)
    {
        areas.push_back(contourArea(contours[i], false));
        //printf("--------------contour #%d area:%f\n", i, areas[i]); // debug
        if (areas[i] >= 40000)
        {
            if (counterFound > 3)
            {
                gl_obj_decected = true;
            }
        }
    }

    // prevent bouncing
    if (counterFound > 3)
    {
        if ((gl_obj_decected == true) && (gl_obj_last_state == false))
        {
            printf("[FOUND] Open solenoid...\n");
            gl_obj_last_state = true;
        }
        else if ((gl_obj_decected == false) && (gl_obj_last_state == true))
        {
            printf("[NOT FOUND] Close solenoid\n");
            gl_obj_last_state = false;
        }
        counterFound = 0;
    }
    counterFound++;

    if (gl_obj_decected)
    {
        // show some text on initial image
        printVideoOverlay(original_img);
    }
}

int main(int argc, char* argv[]){
    Mat camera_input, camera_input_orig;
    VideoCapture cap;

    //----------------------
    int64 e1, e2; // testing clock counts for operations
    double time;
    //----------------------

    cap.open(0);
    if (!cap.isOpened())
    {
        cerr << ...
```
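The "prevent bouncing" part of `videoProcessing` can be pulled out of the OpenCV code and exercised on its own. The sketch below is one reading of that logic with the globals moved into a hypothetical `SampledDebouncer` struct. The struct, the enum, and the `update` method are illustrative names and not part of the original program.

```cpp
#include <cassert>

// What the caller should do with the solenoid after a frame.
enum class SolenoidEvent { None, Open, Close };

// Mirrors the counterFound / gl_obj_last_state logic from videoProcessing.
struct SampledDebouncer {
    int counter = 0;          // plays the role of counterFound
    bool last_state = false;  // plays the role of gl_obj_last_state

    // `large_contour_seen` is true when some contour area is >= the threshold.
    SolenoidEvent update(bool large_contour_seen) {
        // Detection only counts on frames where the counter has passed 3.
        const bool detected = large_contour_seen && counter > 3;
        SolenoidEvent event = SolenoidEvent::None;
        if (counter > 3) {
            if (detected && !last_state) {
                event = SolenoidEvent::Open;
                last_state = true;
            } else if (!detected && last_state) {
                event = SolenoidEvent::Close;
                last_state = false;
            }
            counter = 0;
        }
        ++counter;
        return event;
    }
};
```

Read this way, the state is evaluated on the fifth frame and then on every fourth frame after that, and each decision depends only on that single frame. The text overlay is likewise drawn only on those evaluated frames.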

I think the performance of the Pine64 is much worse than your PC's, so you should reduce the resolution of your image or find some other way to speed things up.
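To make the resolution suggestion concrete, here is a rough sketch of the arithmetic, not a measurement. It assumes the camera delivers 640x480 frames (the question does not state the resolution) and that the per-pixel steps (cvtColor, inRange, erode, dilate) cost time roughly in proportion to the pixel count. `FrameSize`, `downscale` and `scaleAreaThreshold` are names made up for this example.

```cpp
#include <cassert>

// Frame dimensions in pixels.
struct FrameSize {
    int width;
    int height;
    long pixels() const { return static_cast<long>(width) * height; }
};

// Shrink both dimensions by an integer divisor (2 means half width, half height).
FrameSize downscale(FrameSize s, int divisor) {
    assert(divisor > 0);
    return FrameSize{s.width / divisor, s.height / divisor};
}

// Rescale an area threshold (in pixels) chosen at resolution `from` so that it
// covers the same fraction of the frame at resolution `to`.
double scaleAreaThreshold(double area, FrameSize from, FrameSize to) {
    return area * static_cast<double>(to.pixels()) / static_cast<double>(from.pixels());
}
```

With the assumed 640x480 input and a divisor of 2, the frame drops from 307200 to 76800 pixels, a quarter of the per-pixel work, and the 40000 contour-area threshold from the question would become 10000. In OpenCV the capture size is usually requested with `cap.set(CAP_PROP_FRAME_WIDTH, ...)` and `cap.set(CAP_PROP_FRAME_HEIGHT, ...)`, though whether the camera driver honors the request depends on the driver.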

But when I test the camera stream with a default Linux program (cheese is about the most lightweight and simple one), the video looks smooth on a certain distribution. So I assume the problem is not weak hardware.

Is there any info on how to enable Mali GPU support in OpenCV?