Camera position in world coordinate is not working but object pose in camera co ordinate system is working properly

I am working on the camera (iphone camera) pose estimation for head mount device (Hololens) using LEDs as a marker, using the solvepnp. I have calibrated the camera below is the camera intrinsic parameters

/* approx model*/

double focal_length = image.cols;

Point2d center = cv::Point2d(image.cols/2,image.rows/2);

iphone_camera_matrix = (cv::Mat_<double>(3,3) << focal_length, 0, center.x, 0 , focal_length, center.y, 0, 0, 1); iphone_dist_coeffs = cv::Mat::zeros(4,1,cv::DataType<double>::type);

/* caliberated(usng opencv) model */

iphone_camera_matrix = (cv::Mat_<double>(3,3) <<839.43920487140315, 0, 240, 0, 839.43920487140315, 424, 0, 0, 1);

iphone_dist_coeffs = (cv::Mat_<double>(5,1) <<4.6476561543838640e-02, -2.0580084834071521, 0, 0 ,2.0182662261396342e+01);

usng solvpnp am able to get the proper object pose in camera co-ordinate system below is the code

cv::solvePnP(world_points, image_points, iphone_camera_matrix, iphone_dist_coeffs, rotation_vector, translation_vector, true, SOLVEPNP_ITERATIVE);

the ouput is

rotation_vector :

[-65.41956646885059;

-52.49185328449133;

36.82917796058498]

translation_vector :

[94.1158604375937;

-164.2178023980637;

580.5666657301058]

using this rotation_vector and translation_vector am visualizing pose by projecting the trivector whose points are

points_to_project :

[0, 0, 0;

20, 0, 0;

0, 20, 0;

0, 0, 20]

projectPoints(points_to_project, rotation_vector, translation_vector, iphone_camera_matrix, iphone_dist_coeffs, projected_points);

the output of projectedPoints given as

projected_points :

[376.88803, 185.15131;

383.05768, 195.77643;

406.46454, 175.12997;

372.67371, 155.56181]

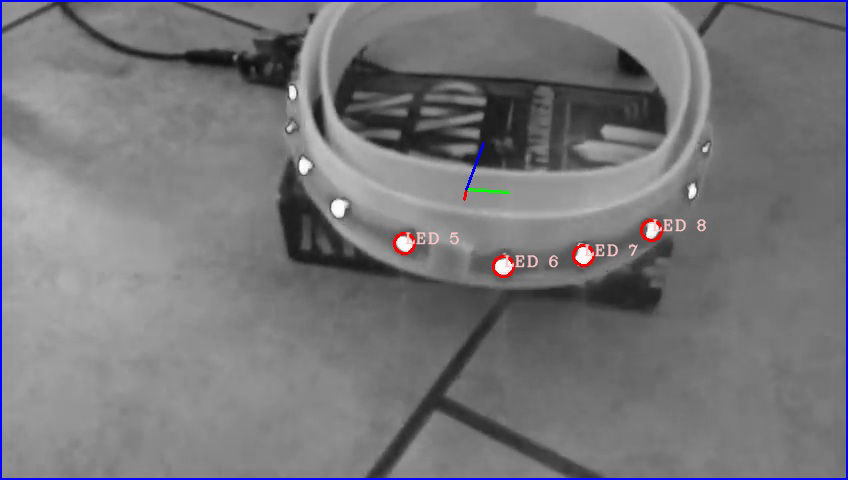

which seems correct as shown below

I try to find the camera pose in world/object coordinate system by using the transformation of rotation_vector and translation_vector given by solvepnp as

cv::Rodrigues(rotation_vector, rotation_matrix);

rot_matrix_wld = rotation_matrix.t();

translation_vec_wld = -rot_matrix_wld * translation_vector;

I used the rot_matrix_wld, translation_vec_wld to visualize the pose (same way as how I visualized the pose of the object in the camera coordinate system as said in the above)

projectPoints(points_to_project, rot_matrix_wld, translation_vec_wld, iphone_camera_matrix, iphone_dist_coeffs, projected_points);

with

points_to_project :

[0, 0, 0;

20, 0, 0;

0, 20, 0;

0, 0, 20]

am getting wrong translation vector (below 2 projected_points are for 2 different image frames of a video)

projected_points :

[-795.11768, -975.85846;

-877.84937, -932.39697;

-868.5517, -1197.4443;

projected_points :

[589.42999, 3019.0732;

590.64789, 2665.5835;

479.49728, 2154.8057;

187.78407, 3333.3054]

-593.41058, -851.74432]

I have used the approx camera model and calibrated camera model both are giving the wrong translation vector.

I have gone through the link here and verified my calibration procedure, I did it correctly.

I am not sure where am doing wrong can anyone please help me with this.

thanks in advance.

If I understand what you're doing correctly, you must also transform points_to_project the same way you did the translation_vector.

As it is now, it seems like you are trying to display the points that are on, to the right, below, and behind the camera. Of course, those can't be projected onto the focal plane, because three of them are in line with it, and the other is behind.

@Tetragramm, Thankyou for the response. You understood my problem correctly. do you mean I need to transform the each of points_to_project by multiplying with rotataton_matrix_wld as below points_to_project_wld[0] = -rot_matrix_wld * points_to_project[0] can you please explain (or suggest any reference) me why to do this?

I know that the points are outside of the image, but how you are telling some of the poins behind the camera?

The camera coordinate system is defined as being centered at (0,0,0) with +X to the right, +Y is down, and +Z is "out" of the image, towards the viewer. So the point (0,0,20) is "behind" the camera.

When you change the coordinate system to be the camera coordinate system, the 3d points you are projecting are no longer in the same locations. By transforming them too, you put them into the coordinate system you are now using.

OK got it, will try transforming the poins (points_to_project_wld[0] = -rot_matrix_wld * points_to_project[0])as you suggested and let you know. Thanks

I have tried as you suggested but still am getting the wrong projected points

each coloumn of orientation_vector_points is a 3D point

orientation_vector_points : 0 20 0 0 0 0 20 0 0 0 0 20 rotation_matrix : [0.1323273224250383, -0.7805876706490585, -0.6108783579162851; 0.8601572321893868, 0.3966910684964114, -0.3205709470421; 0.4925637173524999, -0.4830311424803294, 0.7239211971907122]

have I understood something wrong?

@Tetragramm, you write: "+Z is 'out' of the image, towards the viewer. So the point (0,0,20) is "behind" the camera." Why then do all the standard diagrams of the pinhole camera model show the cameraspace z axis pointing TOWARDS the image plane, i.e. into the scene?

This is exactly what I am asking in my Q1 here: https://answers.opencv.org/question/2...

Many thanks.

You are correct. +Z should be in front of the camera. Not sure what I was thinking.