Getting error using SVM with SURF

Below is part of my code. It runs fine for a while, but after a long processing time it shows a runtime error.

Initialization part

std::vector< DMatch > matches;

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("FlannBased");

Ptr<DescriptorExtractor> extractor = new SurfDescriptorExtractor();

SurfFeatureDetector detector(500);

std::vector<KeyPoint> keypoints;

int dictionarySize = 1500;

TermCriteria tc(CV_TERMCRIT_ITER, 10, 0.001);

int retries = 1;

int flags = KMEANS_PP_CENTERS;

BOWKMeansTrainer bow(dictionarySize, tc, retries, flags);

BOWImgDescriptorExtractor dextract(extractor,matcher);

// Initialize constant values

const int nb_cars = files.size();

const int not_cars = files_no.size();

const int num_img = nb_cars + not_cars; // Get the number of images

I think the error starts from here:

// Initialize your training set.

cv::Mat training_mat(num_img,image_area,CV_32FC1);

cv::Mat labels(num_img,1,CV_32FC1);

std::vector<string> all_names;

all_names.assign(files.begin(),files.end());

all_names.insert(all_names.end(), files_no.begin(), files_no.end());

// Load image and add them to the training set

int count = 0;

Mat unclustered;

vector<string>::const_iterator i;

string Dir;

for (i = all_names.begin(); i != all_names.end(); ++i)

{

Dir=( (count < files.size() ) ? YourImagesDirectory : YourImagesDirectory_2);

tmp_img = cv::imread( Dir +*i, 0 );

resize( tmp_img, tmp_dst, tmp_dst.size() );

Mat row_img = tmp_dst; // get a one line image.

detector.detect( row_img, keypoints);

extractor->compute( row_img, keypoints, descriptors_1);

unclustered.push_back(descriptors_1);

//bow.add(descriptors_1);

++count;

}

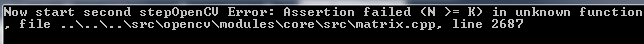

cout<<"second part";

int count_2=0;

vector<string>::const_iterator k;

Mat vocabulary = bow.cluster(unclustered);

dextract.setVocabulary(vocabulary);

for (k = all_names.begin(); k != all_names.end(); ++k)

{

Dir=( (count_2 < files.size() ) ? YourImagesDirectory : YourImagesDirectory_2);

tmp_img = cv::imread( Dir +*k, 0 );

resize( tmp_img, tmp_dst, tmp_dst.size() );

Mat row_img = tmp_dst; // get a one line image.

detector.detect( row_img, keypoints);

dextract.compute( row_img, keypoints, descriptors_1);

training_mat.push_back(descriptors_1);

labels.at<float>(count_2, 0) = (count_2 < nb_cars) ? 1 : -1; // 1 for car, -1 otherwise

++count_2;

}

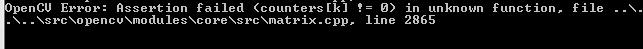

After processing for some time, it shows me the runtime error below.

Error :

Edit: I changed

Mat training_mat(0,dictionarySize,CV_32FC1);

to

Mat training_mat(1,extractor->descriptorSize(),extractor->descriptorType());

but that didn't solve the problem.

You are not willing to learn, are you? Your "question" is still a heap of code with neither proper formatting nor a precise problem description. sigh

(this question is a copy-paste from http://answers.opencv.org/question/19344/getting-error-using-svm-with-surf/ and http://answers.opencv.org/question/19325/how-to-know-my-svm-is-trained-accordingly/)

@SR How would I know where my mistake is? It's a runtime logic error. If it were a syntax error, I would paste only that part. If I knew where I made the mistake, why would I post the question here? And if I post one small piece of code that has no error, do I then post the second part, and so on? I wrote the code on my own, and I am here for help, not to have it coded for me; that's why I mentioned the error here. I think my question is short and clear. I just want to know the cause of the error, that's all.
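One thing worth checking, offered as a likely cause and not a confirmed diagnosis: the second loop calls training_mat.push_back(descriptors_1), and cv::Mat::push_back requires the appended rows to have the same number of columns (and the same type) as the destination matrix. A BOW image descriptor from dextract.compute is a single row with one column per vocabulary word, so it should be dictionarySize (1500) wide. That matches neither image_area nor extractor->descriptorSize(), which for default SURF is 64. Also, push_back appends rows, so a matrix created with num_img rows (or with 1 row, as in the edit) ends up with more rows than labels has. The sketch below models only that shape rule in plain C++ without OpenCV; RowMatrix, appendSucceeds and rowsAfterAppending are hypothetical names made up for this illustration and are not part of any library.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for a row-major float matrix. This is NOT cv::Mat;
// it only models the rule that appended rows must match the matrix width.
struct RowMatrix {
    std::size_t rows;
    std::size_t cols;
    std::vector<float> data;

    RowMatrix(std::size_t r, std::size_t c) : rows(r), cols(c), data(r * c, 0.0f) {}

    // Append one row; reject rows whose width differs from the matrix width.
    void push_back(const std::vector<float>& row) {
        if (row.size() != cols) {
            throw std::invalid_argument("row width does not match matrix width");
        }
        data.insert(data.end(), row.begin(), row.end());
        ++rows;
        assert(data.size() == rows * cols);  // invariant: storage stays rectangular
    }
};

// True if a row of width rowWidth can be appended to an
// initialRows x matrixWidth matrix.
bool appendSucceeds(std::size_t initialRows, std::size_t matrixWidth, std::size_t rowWidth) {
    RowMatrix m(initialRows, matrixWidth);
    try {
        m.push_back(std::vector<float>(rowWidth, 0.0f));
    } catch (const std::invalid_argument&) {
        return false;
    }
    return true;
}

// Row count after appending one row per image to a matrix that was
// created with initialRows rows already in place.
std::size_t rowsAfterAppending(std::size_t initialRows, std::size_t width, std::size_t numImages) {
    RowMatrix m(initialRows, width);
    for (std::size_t i = 0; i < numImages; ++i) {
        m.push_back(std::vector<float>(width, 0.0f));
    }
    return m.rows;
}
```

In this model, appending a 1500-wide row to a matrix created as 0 x 1500 succeeds, while appending it to a 64-wide matrix is rejected, and preallocating 10 rows before appending 10 more gives 20 rows instead of 10. If the same thing is happening in the real code, the options would be to start training_mat empty with dictionarySize columns and push_back each BOW descriptor, or to keep the preallocated num_img x dictionarySize matrix and copy each descriptor into row count_2 instead of appending.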