Background subtraction for vein detection

Hello, I'm asking about this topic again, but in a different way. I'm currently working on a project in which I have to build a vein detector using IR images. To get a better segmentation, my teacher and I want to make a mask that covers only the arm area; everything else doesn't matter. We want to isolate the arm area and do the processing (thresholding, erosion, etc.) only inside it. A good example of the idea is this video that I found on YouTube: EXAMPLE VIDEO

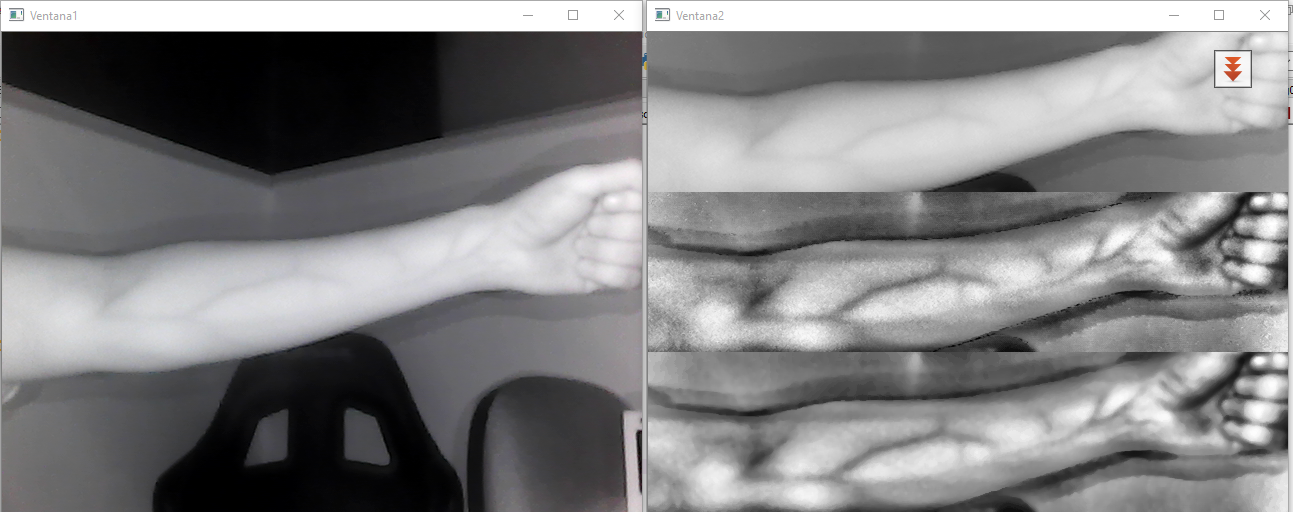

My current results are shown in this image.

    import matplotlib.pyplot as plt
    import matplotlib.image as img
    import numpy as np
    import cv2

    #IM=img.imread("img01.jpg")
    #nF,nC=IM.shape  # Get the image size

    camera = cv2.VideoCapture(1)
    fourcc = cv2.VideoWriter_fourcc(*'XVID')
    out = None  # created once the size of the stacked frame is known
    cv2.namedWindow('Ventana1')

    while cv2.waitKey(1) == -1:
        retval, frame = camera.read()
        if not retval:  # no frame available
            break
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Define the ROI: the middle third of the rows
        nf, nc = gray.shape
        nf3 = round(nf / 3)
        gray = gray[nf3:2 * nf3, :]
        CAP1 = gray.copy()

        # Equalization (CLAHE)
        clahe = cv2.createCLAHE(clipLimit=20.0, tileGridSize=(8, 8))
        gray = clahe.apply(gray)
        CAP2 = gray.copy()

        # Median filter
        gray = cv2.medianBlur(gray, 5)
        CAP3 = gray.copy()

        # Binarization (Otsu). Note: this thresholds CAP2, the image before
        # the median filter; pass CAP3 to threshold the filtered image instead.
        ret, gray = cv2.threshold(CAP2, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)
        CAP4 = 255 - gray

        # Show on screen and record
        stacked = np.concatenate((CAP1, CAP2, CAP3, CAP4), axis=0)
        if out is None:
            # The writer must match the stacked frame: (width, height), grayscale
            size = (stacked.shape[1], stacked.shape[0])
            out = cv2.VideoWriter('output3.avi', fourcc, 20.0, size, False)
        cv2.imshow('Ventana1', CAP3)
        out.write(stacked)
        cv2.imshow('Ventana2', stacked)

    # Close
    cv2.destroyAllWindows()
    camera.release()
    if out is not None:
        out.release()

I hope you can help me. Thank you

Can you afford a depth camera? You can subtract the background quite easily: there is a discontinuity there, between the arm depth and the background depth(s); a jump.
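A minimal sketch of that depth-based subtraction, assuming the depth frame is already available as a 2-D `uint16` NumPy array in millimetres with 0 meaning "no reading" (an assumption about the sensor output; check your driver). The function name `depth_mask` and the range limits are hypothetical.

```python
import numpy as np

def depth_mask(depth_mm, near_mm, far_mm):
    """Return a 0/255 mask of pixels whose depth lies in [near_mm, far_mm].

    Pixels with depth 0 (assumed to mean "no reading") are rejected.
    """
    valid = depth_mm > 0
    in_range = (depth_mm >= near_mm) & (depth_mm <= far_mm)
    return np.where(valid & in_range, 255, 0).astype(np.uint8)

# Synthetic depth frame: background at 2 m, an "arm" band at 0.7 m,
# and one pixel with no reading.
depth = np.full((120, 160), 2000, dtype=np.uint16)
depth[40:80, :] = 700
depth[0, 0] = 0

arm = depth_mask(depth, near_mm=500, far_mm=900)
```

Because the arm and the background sit at clearly different depths, a fixed range is enough here; the resulting mask can then be applied to the IR image, provided the depth and IR frames are aligned.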

May I recommend the Kinect sensor? There is C++ code for both 360-era Kinects and One-era Kinects, from Microsoft. It has a depth camera. Works just like you'd expect.

Converting between a data pointer and a Mat is trivial: I have C++ code for both versions of the Kinect sensor: https://github.com/sjhalayka/kinect_o...
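For readers staying in Python, a rough analogue of the pointer-to-Mat conversion is wrapping a raw byte buffer as a NumPy array, which OpenCV's Python bindings accept directly. This is only a sketch and not the code from the linked repository; the frame size, the `uint16` pixel type, and the name `buffer_to_image` are assumptions.

```python
import numpy as np

def buffer_to_image(raw, height, width, dtype=np.uint16):
    """Wrap a raw byte buffer as a (height, width) array without copying."""
    image = np.frombuffer(raw, dtype=dtype)
    if image.size != height * width:
        raise ValueError("buffer size does not match the requested shape")
    return image.reshape(height, width)

# Round trip with a synthetic 4 x 6 depth frame.
original = np.arange(24, dtype=np.uint16).reshape(4, 6)
raw_bytes = original.tobytes()
restored = buffer_to_image(raw_bytes, 4, 6)
```

The array returned by `np.frombuffer` on a `bytes` object is read-only; call `.copy()` on it before passing it to functions that modify the image in place.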

I've only tested the 360-era Kinect. The One-era Kinect is still too expensive. Don't forget that the PC adaptor costs extra (like $30).

https://docs.opencv.org/master/db/d5c...

Do you want to solve https://www.youtube.com/watch?v=NS68e... ? Try using something like a pulse oximeter: a typical pulse oximeter uses an electronic processor and a pair of small light-emitting diodes (LEDs) facing a photodiode through a translucent part of the patient's body, usually a fingertip or an earlobe. One LED is red, with a wavelength of 660 nm, and the other is infrared, with a wavelength of 940 nm.