How to improve the performance of a Haar cascade classifier during training?

Hi everyone!

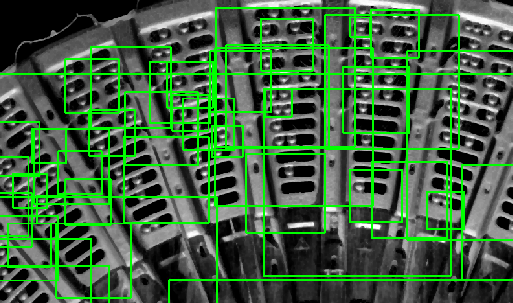

I'm trying to train a Haar cascade classifier to detect the "balls" of a SAG mill. The images are grayscale views of the lateral faces of the mill. Each original image has a resolution of approximately 2000x1500 pixels, and each "ball" fits inside a bounding box of 20x20 pixels.

ROI of an Original Image:

After some pre-processing, I manually annotated every ball in the original images, which gave me a positive dataset of approximately 2300 images of 20x20 pixels each.

Positive Dataset Generation:

Positive Dataset Example:

The negative dataset was generated from the original images after filling the regions that contain balls with black. Specifically, I ran a sliding window over those images and cut out approximately 13,000 images of 50x50 pixels without balls (I am not sure whether this last step is correct).
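To make the sliding-window step concrete, here is a minimal sketch of how the 50x50 window positions could be chosen. It is an assumption about the procedure, not the exact script I used: it skips any window that overlaps an annotated ball box instead of relying on the black fill, and the names `overlaps` and `negative_windows`, as well as the stride value, are hypothetical.

```python
from typing import Iterator, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def overlaps(a: Box, b: Box) -> bool:
    """Return True if the two axis-aligned boxes share at least one pixel."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def negative_windows(img_w: int, img_h: int, ball_boxes: List[Box],
                     win: int = 50, stride: int = 50) -> Iterator[Box]:
    """Yield window boxes that fit inside the image and touch no ball box."""
    for y in range(0, img_h - win + 1, stride):
        for x in range(0, img_w - win + 1, stride):
            window = (x, y, win, win)
            if not any(overlaps(window, box) for box in ball_boxes):
                yield window


# Toy example: a 200x100 image with a single 20x20 ball annotation.
windows = list(negative_windows(200, 100, [(60, 10, 20, 20)]))
print(len(windows), "candidate negative windows")
```

Each yielded box can then be cropped from the image and saved as one negative sample. A smaller stride gives more (overlapping) negatives from the same images.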

Negative Dataset Example:

Then I used the opencv_createsamples tool with the -w and -h parameters set to 15 pixels (all ball bounding boxes are 20 pixels). I read in the documentation and in some books, such as OpenCV 3 Blueprints, that this sets the minimum detectable object size. I ran tests both with the remaining parameters at their defaults and with maxxangle, maxyangle and maxzangle set to zero, since the balls always have the same perspective and size in the original images.

I read in the same book that "data augmentation" is not advisable when the positive images do not have a "clean" background that can be treated as transparent, as is the case here.
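For reference, the opencv_createsamples step without augmentation could be assembled roughly as follows. This is a sketch under assumptions: the file names (`positives.txt`, `balls.vec`, `pos/ball_0001.png`) are hypothetical, each positive is treated as an already-cropped 20x20 image listed in an annotation file passed with `-info`, and the count of 2240 is inferred from numPos = 1904 / 0.85 rather than counted.

```python
from typing import List


def info_lines(image_paths: List[str], size: int = 20) -> List[str]:
    """One annotation line per image: path, object count, then x y w h."""
    return [f"{path} 1 0 0 {size} {size}" for path in image_paths]


def createsamples_command(info_file: str, vec_file: str,
                          num: int, w: int, h: int) -> List[str]:
    """Argument list for opencv_createsamples using an annotation file."""
    return [
        "opencv_createsamples",
        "-info", info_file,   # annotation file with the positive crops
        "-vec", vec_file,     # output file consumed by opencv_traincascade
        "-num", str(num),     # number of positive samples to pack
        "-w", str(w),
        "-h", str(h),
    ]


# Hypothetical paths; 2240 is an inferred total, not a measured one.
lines = info_lines(["pos/ball_0001.png", "pos/ball_0002.png"])
cmd = createsamples_command("positives.txt", "balls.vec", 2240, 15, 15)
print("\n".join(lines))
print(" ".join(cmd))
```

The list can be passed to `subprocess.run(cmd, check=True)` once the annotation file has been written. As far as I understand the documentation, when `-info` is used the tool only crops and resizes the samples, so the angle parameters have no effect in this mode.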

Finally, I ran the opencv_traincascade tool with the following parameters:

- -numStages 20 (the value commonly used in the documentation)

- -minHitRate 0.999 (recommended in the documentation)

- -maxFalseAlarmRate 0.5 (recommended in the documentation)

- -numPos 1904 (total_positive_images * 0.85, as recommended in the book mentioned above)

- -numNeg 650 (total_negative_images / num_of_stages, since negative images are no longer considered in training once a stage classifies them correctly)

- -w 15

- -h 15

- -mode ALL

- -precalcValBufSize 4096

- -precalcIdxBufSize 4096
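The arithmetic behind numPos and numNeg, and the overall rates these per-stage settings aim for, can be sketched as below. The helper names are hypothetical, the positive total of 2240 is inferred from 1904 / 0.85 (I only know it is approximately 2300), and the overall targets assume the usual rule that per-stage rates multiply across stages.

```python
from typing import Tuple


def derive_counts(total_pos: int, total_neg: int, num_stages: int,
                  pos_percent: int = 85) -> Tuple[int, int]:
    """numPos as a percentage of the positives, numNeg as negatives per stage."""
    num_pos = total_pos * pos_percent // 100  # integer math avoids float rounding
    num_neg = total_neg // num_stages
    return num_pos, num_neg


def cascade_targets(min_hit_rate: float, max_false_alarm: float,
                    num_stages: int) -> Tuple[float, float]:
    """Overall hit rate and false alarm rate targeted by the whole cascade."""
    return min_hit_rate ** num_stages, max_false_alarm ** num_stages


# Assumed totals: 2240 positives (inferred) and 13,000 negatives.
num_pos, num_neg = derive_counts(2240, 13000, 20)
hit_rate, false_alarm = cascade_targets(0.999, 0.5, 20)
print(f"numPos={num_pos} numNeg={num_neg}")
print(f"overall hit rate target ~ {hit_rate:.3f}")
print(f"overall false alarm target ~ {false_alarm:.2e}")
```

With these settings the cascade aims for an overall hit rate of about 0.980 and an overall false alarm rate of about 9.5e-7, provided all 20 stages actually train to their per-stage targets.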

Even after varying some parameters, such as numPos and numNeg, my results are no better than what is shown in the following image:

Obtained Results:

What recommendations would you give me to improve the performance of the classifier? Am I doing something wrong? Or should I use a different approach, perhaps deep learning?