ToF and RGB stereo vision system

Hello!

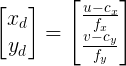

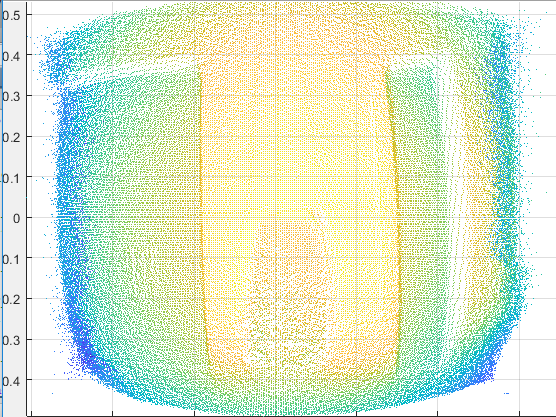

I have a hybrid stereo system that consists of an RGB camera and a ToF camera. Both cameras are set to a resolution of 320x240. Right now I have one variable containing a 76800x3 array of points with [x, y, z] values (one point per ToF pixel, since 320 × 240 = 76800).

I also have a 240x320x3 color image of the scene, which looks something like this:

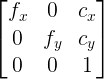

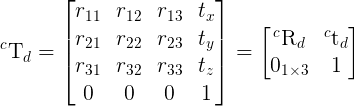

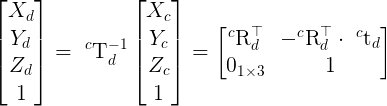

As you can see, the cameras are a bit misaligned. However, I have done stereo calibration and obtained the intrinsic parameters of both the RGB and ToF cameras, as well as the extrinsic parameters of the ToF camera relative to the RGB camera. The problem is: how do I align these two views properly in MATLAB using OpenCV, and how do I get a colored point cloud as the result? Any information will be much appreciated.
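One common way to do this alignment is to move each ToF point into the RGB camera frame with the extrinsics, project it with the RGB intrinsics, and read the color of the pixel it lands on. The sketch below shows that in Python/NumPy rather than MATLAB, under stated assumptions: `R` and `t` are assumed to map ToF coordinates into the RGB frame as `X_rgb = R @ X_tof + t` (if your calibration reports the opposite direction or MATLAB's row-vector convention, invert or transpose accordingly), `t` is in the same units as the points, `K_rgb` is the usual 3x3 matrix `[[fx, 0, cx], [0, fy, cy], [0, 0, 1]]`, and lens distortion and occlusion are ignored. The function name `colorize_tof_points` is hypothetical.

```python
import numpy as np


def colorize_tof_points(points_tof, rgb_image, K_rgb, R, t):
    """Attach RGB colors to ToF points by projecting them into the RGB image.

    points_tof: (N, 3) XYZ points in the ToF camera frame.
    rgb_image:  (H, W, 3) image from the RGB camera (rows = y, columns = x).
    K_rgb:      (3, 3) RGB intrinsic matrix.
    R, t:       assumed ToF -> RGB extrinsics, X_rgb = R @ X_tof + t.
    Returns (N, 3) float colors (NaN where a point has no pixel) and a
    boolean mask marking which points received a color.
    """
    pts = np.asarray(points_tof, dtype=float)
    R = np.asarray(R, dtype=float)
    t = np.asarray(t, dtype=float).reshape(1, 3)
    K = np.asarray(K_rgb, dtype=float)
    h, w = rgb_image.shape[:2]

    # Rigid transform into the RGB camera frame (row-vector form of R @ X + t).
    pts_rgb = pts @ R.T + t

    colors = np.full((pts.shape[0], 3), np.nan)
    valid = np.zeros(pts.shape[0], dtype=bool)

    # Only points in front of the RGB camera can be projected.
    idx = np.flatnonzero(pts_rgb[:, 2] > 0)

    # Pinhole projection (no distortion): [u*z, v*z, z] = K @ X_rgb.
    uvw = pts_rgb[idx] @ K.T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)

    # Keep projections that fall inside the image.
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx, u, v = idx[inside], u[inside], v[inside]

    # Nearest-pixel lookup: row index is v (y), column index is u (x).
    colors[idx] = rgb_image[v, u]
    valid[idx] = True
    return colors, valid
```

The 76800x3 array can be passed directly as `points_tof`; `colors` keeps the same row order, so stacking `points_tof[valid]` with `colors[valid]` gives a colored point cloud. If lens distortion matters, OpenCV's `cv2.projectPoints` (with `rvec` obtained from `cv2.Rodrigues(R)` and the RGB distortion coefficients) can replace the manual projection step. Points hidden from the RGB camera by nearer geometry will still pick up a color here; handling that would need a depth test such as a z-buffer.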

Regards, Andris