Getting inaccurate results using a TensorFlow net and OpenCV dnn

I'm trying to use a TensorFlow frozen graph to detect hands in an image. I get some weird extra boxes when I load the net with OpenCV.

I am using the frozen graph from this handtracking GitHub repo, for which I generate the text graph using tf_text_graph_ssd.py.

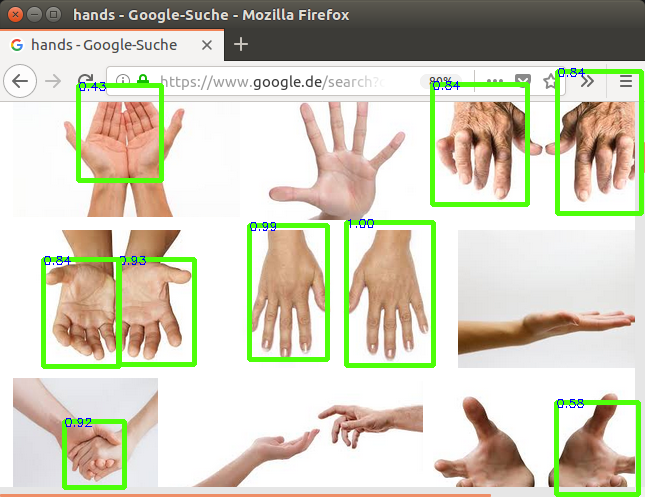

Result from Tensorflow:

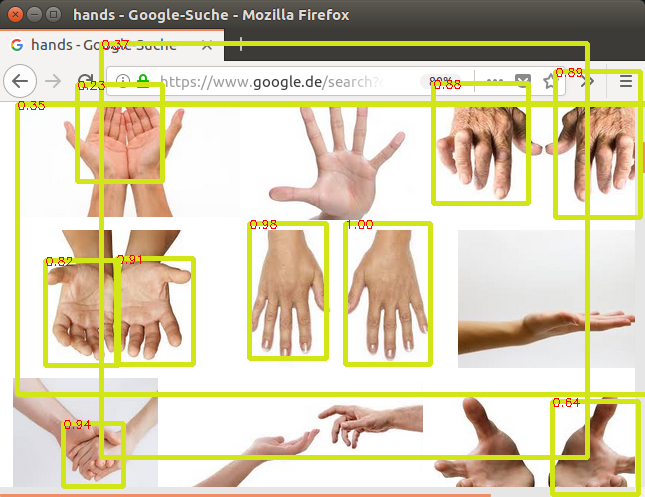

Result from OpenCV:

The code and files are here: GitHub

System configuration:

- Ubuntu 16.04

- Python 2.7

- OpenCV 3.4.1

- TensorFlow 1.6.0

The only difference between the two methods that I can think of is the graph generation. TensorFlow loads the *.pb file directly, whereas OpenCV also needs the *.pbtxt text graph. Maybe the tool generating the text graph isn't completely accurate? Any suggestions are welcome.
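For reference, here is a minimal sketch of the OpenCV side in Python, assuming the usual `cv.dnn` workflow: the frozen `*.pb` and the generated `*.pbtxt` are both passed to `cv.dnn.readNetFromTensorflow`, and the output is filtered with the same `if score > threshold` check used in this thread. The function names, the 300x300 input size, `swapRB=True` and `crop=False` are assumptions that mirror OpenCV's SSD MobileNet samples, not values taken from the linked repo.

```python
def parse_detections(detections, width, height, threshold=0.2):
    """Convert OpenCV DetectionOutput rows into pixel boxes.

    Each row is [image_id, class_id, score, left, top, right, bottom],
    with the box corners normalized to [0, 1].
    """
    boxes = []
    for row in detections:
        score = float(row[2])
        if score > threshold:  # same check as `if score > 0.2:` in the thread
            left = int(row[3] * width)
            top = int(row[4] * height)
            right = int(row[5] * width)
            bottom = int(row[6] * height)
            boxes.append((int(row[1]), score, (left, top, right, bottom)))
    return boxes


def detect_hands(image_path, pb_path, pbtxt_path, threshold=0.2):
    """Hypothetical helper: run the SSD graph with OpenCV dnn on one image."""
    # Imported here so parse_detections can be used without OpenCV installed.
    import cv2 as cv

    net = cv.dnn.readNetFromTensorflow(pb_path, pbtxt_path)
    img = cv.imread(image_path)
    height, width = img.shape[:2]
    # Assumed preprocessing, as in OpenCV's TensorFlow SSD samples.
    blob = cv.dnn.blobFromImage(img, size=(300, 300), swapRB=True, crop=False)
    net.setInput(blob)
    out = net.forward()  # shape [1, 1, N, 7]
    return parse_detections(out[0, 0], width, height, threshold)
```

A call such as `detect_hands('hand.jpg', 'frozen_inference_graph.pb', 'graph.pbtxt')` would return a list of `(class_id, score, (left, top, right, bottom))` tuples; all three file names are placeholders.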

@dan01, may I ask you to compare your case with the following one? http://answers.opencv.org/question/18... Did you use a default *.config file (https://github.com/tensorflow/models/...) with a modified number of classes, or did you change `min_scale`/`max_scale`? Your case is very useful for fixing this. Thank you!

Hi @dkurt, thanks for the fast reply. I didn't train the model myself; I just wanted to use it, so I didn't actually use the config file, only the frozen graph. The config file is from here.

Here's the diff between the original config and the one in the TensorFlow GitHub repository: out.diff
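The question about `min_scale`/`max_scale` matters because, in the TensorFlow Object Detection API, these fields of the SSD anchor generator determine the anchor (prior box) sizes, and the generated text graph has to reproduce them exactly. Below is a sketch of how the per-layer scales are spaced linearly, assuming the defaults of the stock SSD MobileNet config (`min_scale: 0.2`, `max_scale: 0.95`, `num_layers: 6`); `ssd_anchor_scales` and `anchor_size` are hypothetical helper names.

```python
import math


def ssd_anchor_scales(min_scale=0.2, max_scale=0.95, num_layers=6):
    """Scales spaced linearly from min_scale (first layer) to max_scale (last layer)."""
    return [min_scale + (max_scale - min_scale) * i / (num_layers - 1)
            for i in range(num_layers)]


def anchor_size(scale, aspect_ratio):
    """Normalized (width, height) of one anchor; width / height == aspect_ratio."""
    ratio_sqrt = math.sqrt(aspect_ratio)
    return scale * ratio_sqrt, scale / ratio_sqrt


if __name__ == "__main__":
    for layer, scale in enumerate(ssd_anchor_scales()):
        print("layer %d: scale %.3f" % (layer, scale))
```

With the defaults the six scales are 0.2, 0.35, 0.5, 0.65, 0.8 and 0.95, so a config whose `min_scale` or `max_scale` differs from what the text graph assumes would shift every prior box.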

TL;DR:

@dan01, thank you! The model is correct. All you need to do is use a higher confidence threshold (it is currently 0.2).

Hmm, I don't think that's right... I've printed the scores and they differ between TensorFlow and OpenCV (see the updated images in the original post), which led me to try another image. Now I get results similar to this post. Please see the image.

P.S.: The TensorFlow method yields correct results; see here.
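To make the score comparison between the two frameworks concrete, one option is to pair each TensorFlow detection with the OpenCV detection that overlaps it most (by intersection over union) and print both scores side by side. This is a minimal sketch, assuming the TensorFlow boxes come from the `detection_boxes`/`detection_scores` outputs of an Object Detection API graph, which store boxes as `(ymin, xmin, ymax, xmax)`, while OpenCV rows are `[image_id, class_id, score, xmin, ymin, xmax, ymax]`. The helper names and the 0.5 IoU cutoff are illustrative choices.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def tf_to_xyxy(box):
    """Reorder a TF Object Detection API box (ymin, xmin, ymax, xmax)."""
    ymin, xmin, ymax, xmax = box
    return (xmin, ymin, xmax, ymax)


def match_detections(tf_boxes, tf_scores, cv_rows, min_iou=0.5):
    """Return (tf_score, cv_score or None, iou) for every TensorFlow detection."""
    matches = []
    for box, score in zip(tf_boxes, tf_scores):
        box = tf_to_xyxy(box)
        best, best_iou = None, min_iou
        for row in cv_rows:
            overlap = iou(box, tuple(row[3:7]))
            if overlap >= best_iou:
                best, best_iou = row, overlap
        if best is None:
            matches.append((score, None, 0.0))
        else:
            matches.append((score, float(best[2]), best_iou))
    return matches
```

A large gap between the two scores for well-overlapping boxes points at the network itself (for example the prior boxes) rather than at the threshold.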

@dkurt, with `if score > 0.8:` the correct box is filtered out, since its score is only 0.26. Here's a higher-resolution image.

@dan01, besides trying to increase the confidence threshold from 0.2 to 0.6 (`if score > 0.2:` -> `if score > 0.6:`), may I ask you to test the first image again, but in .png format rather than .jpg? You can convert it using OpenCV's `cv.imwrite('/path/to/png', cv.imread('/path/to/jpg'))`.

@dkurt, done, but the results are the same. Please see below:

`if score > 0.2:` result1

`if score > 0.6:` result2

@dan01, I found the problem. This SSD graph uses a format that has been deprecated since TensorFlow 1.4. You need to modify every `PriorBox_*` layer (except `PriorBox_0`) as follows: in the `width` and `height` attributes, move the last `float_val` to the second position (`float_val[0], float_val[1], ..., float_val[N-1]` -> `float_val[0], float_val[N-1], float_val[1], ..., float_val[N-2]`). This reverts the change mentioned here.

@dkurt Wow, that fixed it! Thank you! So if I re-train the model, this problem shouldn't come up?

@dan01, it's not necessary if you use TensorFlow >= 1.4 and train the model from scratch. However, if you want to fine-tune this one, I think you'll need to apply the same fix again.
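The `PriorBox_*` fix described in this thread is a simple list rotation, sketched below in Python. The `layers` dictionary is a hypothetical in-memory stand-in for the `width`/`height` `float_val` lists in the `.pbtxt`; parsing and writing the text graph itself is not shown.

```python
def reorder_prior_box_values(values):
    """Move the last value to the second position:
    [v0, v1, ..., vN-1] -> [v0, vN-1, v1, ..., vN-2]."""
    if len(values) < 2:
        return list(values)
    return [values[0], values[-1]] + list(values[1:-1])


def fix_prior_boxes(layers):
    """Reorder width/height of every PriorBox_* layer except PriorBox_0.

    `layers` is a hypothetical dict: {layer_name: {"width": [...], "height": [...]}}.
    Other layers and other attributes are passed through unchanged.
    """
    fixed = {}
    for name, attrs in layers.items():
        if name.startswith("PriorBox_") and name != "PriorBox_0":
            attrs = {key: reorder_prior_box_values(vals) if key in ("width", "height") else vals
                     for key, vals in attrs.items()}
        fixed[name] = attrs
    return fixed
```

For example, a `width` list `[1, 2, 3, 4, 5]` on `PriorBox_1` becomes `[1, 5, 2, 3, 4]`, while `PriorBox_0` is left untouched.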