Finding the real-world distance of an object from pixel coordinates

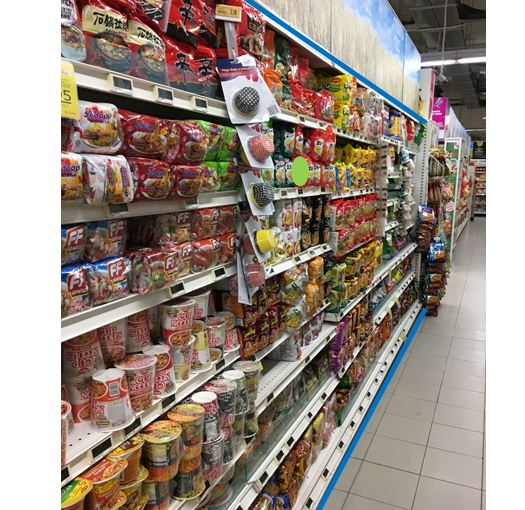

I have a picture of a supermarket shelf in which the top-most and bottom-most rows have been detected (shown as blue lines).

I know the height of the shelf (say 2.5 meters), and that it is constant along the entire shelf (which also implies that the two blue lines are parallel in the real world). The pixel coordinates of the blue lines are known.

I have marked a point (in green) for which I only have pixel coordinates. This point always lies between the top-most and bottom-most rows.

Given this information, is there a way to calculate the real-world distance (in meters) from the green point to the top-most shelf?
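Measuring "distance to the top-most shelf" means relating the green point G to the points directly above and below it on the shelf. A minimal sketch in Python with NumPy, using homogeneous coordinates: the image line through G and the vertical vanishing point V is intersected with each blue line to get T (top) and B (bottom). The helper names and the two-points-per-line input format are hypothetical. V is an assumption that would have to be estimated separately (for example by intersecting two shelf uprights); passing `None` instead assumes the shelf's vertical edges appear as vertical columns in the image.

```python
import numpy as np


def to_h(p):
    """Lift a pixel (x, y) to homogeneous coordinates (x, y, 1)."""
    return np.array([p[0], p[1], 1.0])


def line_through(p, q):
    """Homogeneous line through two homogeneous points (their cross product)."""
    return np.cross(p, q)


def intersect(l1, l2):
    """Intersection of two homogeneous lines, returned as a pixel (x, y)."""
    x = np.cross(l1, l2)
    if abs(x[2]) < 1e-12:
        raise ValueError("lines are parallel in the image")
    return x[:2] / x[2]


def foot_points(top_line, bottom_line, green, v_vert=None):
    """Return pixels (T, B) where the shelf vertical through `green` meets the blue lines.

    top_line, bottom_line: two pixel points on each blue line (hypothetical input format).
    v_vert: vertical vanishing point in pixels, or None to use the image's y direction.
    """
    g = to_h(green)
    # (0, 1, 0) is the point at infinity in the image y direction.
    v = np.array([0.0, 1.0, 0.0]) if v_vert is None else to_h(v_vert)
    vertical = line_through(g, v)
    l_top = line_through(to_h(top_line[0]), to_h(top_line[1]))
    l_bot = line_through(to_h(bottom_line[0]), to_h(bottom_line[1]))
    return intersect(vertical, l_top), intersect(vertical, l_bot)
```

For example, with the top line through (0, 100) and (1000, 150), the bottom line through (0, 600) and (1000, 500), and G = (500, 300), the vertical through G is the column x = 500, giving T = (500, 125) and B = (500, 550).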

I am thinking of using information such as the vanishing point, but I can't figure out how to do that.
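One standard way to use a vanishing point here is the cross-ratio, which perspective projection preserves for four collinear points. Note that the two blue lines meet at the *horizontal* vanishing point, which does not by itself give the vertical one. A minimal sketch in Python with NumPy, assuming T and B (where the shelf vertical through the green point crosses the blue lines) are already known in pixels, that the vertical vanishing point V has been estimated separately, and that lens distortion has been removed, since the cross-ratio only holds for straight image lines. The function name `distance_from_top` is hypothetical.

```python
import numpy as np


def distance_from_top(top, bottom, green, height, v_vert=None):
    """Real distance from the top blue line to the green point, along the shelf vertical.

    top, bottom, green: pixel (x, y) of T, B and G, assumed collinear in the image.
    height: real distance between the two blue lines (e.g. 2.5 m).
    v_vert: pixel (x, y) of the vertical vanishing point V, or None if the
            shelf's vertical edges are parallel in the image.
    """
    t, b, g = (np.asarray(p, dtype=float) for p in (top, bottom, green))
    axis = (b - t) / np.linalg.norm(b - t)  # unit direction from T towards B

    def coord(p):
        # Signed 1D position of p along the image line, with T at 0.
        return float(np.dot(np.asarray(p, dtype=float) - t, axis))

    st, sb, sg = 0.0, coord(b), coord(g)
    if v_vert is None:
        # V at infinity: the world-to-image map on this line is affine,
        # so ratios of lengths are preserved.
        return height * (sg - st) / (sb - st)

    sv = coord(v_vert)
    # Image cross-ratio Cr(T, B; G, V) = (G-T)(V-B) / ((G-B)(V-T)).
    r = ((sg - st) * (sv - sb)) / ((sg - sb) * (sv - st))
    # In the world T = 0, B = height, G = d and V is at infinity,
    # so the same cross-ratio equals d / (d - height); solve for d.
    return r * height / (r - 1.0)
```

As a made-up check: if the column x = 500 maps a world depth w (meters below the top line) to image row 100 + 400w / (1 + 0.2w), then T = (500, 100), B = (500, 100 + 2000/3), G = (500, 100 + 1000/3) for w = 1, and V = (500, 2100). With `height=2.5` and that V, the function returns approximately 1.0, while plain linear interpolation (`v_vert=None`) would give 1.25. The `None` case is only exact when the image plane is parallel to the shelf's vertical direction (no camera pitch).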

https://www.pyimagesearch.com/2015/01...

@berak Unfortunately, I do not know the distance to the camera; I only know the actual distance between the two blue lines.

Well, you'll have to calibrate your camera somehow; that link was more to show a cheap way of doing so.

See Multiple View Geometry in Computer Vision (Hartley and Zisserman), p. 222.