findContours() does not accurately find contours (Python) [closed]

I am using OpenCV with Python to detect and extract eyeglasses from a face image. I followed the line of reasoning of this post (https://stackoverflow.com/questi...), which is the following:

1) Detect the face

2) Find the largest contour in the face area which must be the outer frame of the glasses

3) Find the second and third largest contours in the face area which must be the frame of the two lenses

4) Extract the area between these contours which essentially represents the eyeglasses

However, findContours() does find the outer frame of the glasses as a contour, but it does not accurately find the frames of the two lenses.

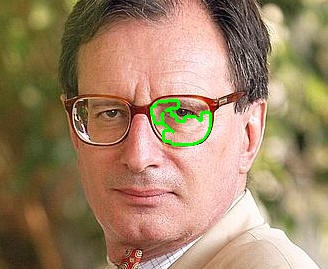

My results are the following:

The original image is here:

What can I do to fix this?

    import cv2
    import numpy as np
    import matplotlib.pyplot as plt

    img = cv2.imread('Luc_Marion.jpg')
    RGB_img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # mask is not defined in the posted code; assumed here to be a blank
    # single-channel image of the same size as the input
    mask = np.zeros_like(gray_img)

    # Detect the face in the image
    haar_face_cascade = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')
    faces = haar_face_cascade.detectMultiScale(gray_img, scaleFactor=1.1, minNeighbors=8)

    # Loop over all detected faces - in our case there is only one
    for (x, y, w, h) in faces:
        cv2.rectangle(RGB_img, (x, y), (x+w, y+h), (255, 0, 0), 1)

        # Focus on the face as a region of interest
        # (taken from RGB_img so that the drawn contour appears in the plot below)
        roi = RGB_img[int(y+h/4):int(y+2.3*h/4), x:x+w]
        roi_gray = gray_img[int(y+h/4):int(y+2.3*h/4), x:x+w]

        # Apply smoothing to roi
        roi_blur = cv2.GaussianBlur(roi_gray, (5, 5), 0)

        # Use Canny to detect edges
        edges = cv2.Canny(roi_gray, 250, 300, apertureSize=3)

        # Dilate and erode to thicken the edges
        kernel = np.ones((3, 3), np.uint8)
        edg_dil = cv2.dilate(edges, kernel, iterations=3)
        edg_er = cv2.erode(edg_dil, kernel, iterations=3)

        # Thresholding instead of Canny does not really make things better
        # ret, thresh = cv2.threshold(roi_blur, 127, 255, cv2.THRESH_BINARY+cv2.THRESH_OTSU)
        # thresh = cv2.adaptiveThreshold(roi_blur, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY, 115, 1)

        # Find and sort contours by contour area
        # ([-2:] works with both the 3-value return of OpenCV 3.x and the 2-value return of 4.x)
        contours, hierarchy = cv2.findContours(edg_er, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)[-2:]
        print("Number of contours: ", len(contours))
        cont_sort = sorted(contours, key=cv2.contourArea, reverse=True)

        # Draw n-th contour on original roi
        cv2.drawContours(roi, [cont_sort[0]], -1, (0, 255, 0), 2)
        mask_roi = mask[int(y+h/4):int(y+2.3*h/4), x:x+w]
        cv2.drawContours(mask_roi, [cont_sort[0]], -1, (255, 255, 255), 2)

    plt.imshow(RGB_img)
    plt.show()

An entirely silly idea. What do you think this is based upon?

I found it here: https://stackoverflow.com/questi... and it worked very well for the image in that post. But on other images, where the contrast between the eyeglasses and the face is lower, it does not work so well. What else do you suggest, then?

I agree with berak. You can't just use the size of the contours. This is not robust. Just an idea: You could draw a template of glasses and try to use the function matchTemplate to get the best match. If I have the time I will give it a try. Could you just add the original image (without the green markers)?

Thank you for your response. Yes, this is obviously a better idea, but keep in mind that I want to process various types of glasses that vary in size and shape, so I am not sure that this idea will fare significantly better. Perhaps I am wrong. (P.S. I uploaded the original image.)

Also keep in mind that I do not only want to detect eyeglasses but also to accurately localise them and extract them from a face image, so I am not sure that matchTemplate is really suitable for this task.

The XML can be found in the folder:

opencv/data/haarcascades/haarcascade_eye_tree_eyeglasses.xml

If not found....eyeglasses.xml

By the way, I like the fact that @berak said that this solution is sort of silly but he did not suggest anything better...haha...cool...