What's the issue with Perspective Transformation?

I am trying the perspective transformation example from the OpenCV documentation, but I am getting a different result from the one shown there.

```python
import cv2
import matplotlib.pyplot as plt
import numpy as np

img = cv2.imread('sudoku.png')
rows, cols, ch = img.shape

# Four source corners in the input image and the output corners they should map to
pts1 = np.float32([[56, 65], [368, 52], [28, 387], [389, 390]])
pts2 = np.float32([[0, 0], [300, 0], [0, 300], [300, 300]])

# Compute the 3x3 perspective matrix, then warp into a 300x300 output image
M = cv2.getPerspectiveTransform(pts1, pts2)
dst = cv2.warpPerspective(img, M, (300, 300))

plt.subplot(121), plt.imshow(img), plt.title('Input')
plt.subplot(122), plt.imshow(dst), plt.title('Output')
plt.show()
```

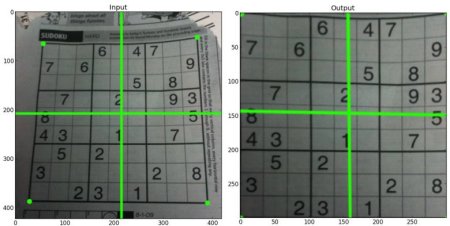

According to the example, the result should look like this (ignore the green lines):

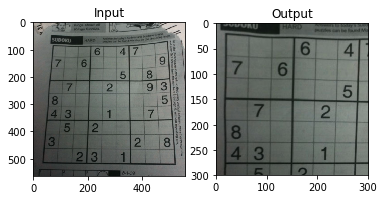

What I got instead looks like this:

Any ideas what's going on here? I am on macOS 10.13, OpenCV 3.3.1 and Python 3.6.
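For reference, the matrix `M` depends only on the eight coordinates in `pts1` and `pts2`, not on the image content. Here is a NumPy-only sketch of that computation. It assumes the usual formulation, where the bottom-right entry of the 3x3 matrix is fixed to 1 and the remaining eight unknowns come from an 8x8 linear system. The helper names `perspective_matrix` and `apply_perspective` are hypothetical and are not part of OpenCV.

```python
import numpy as np

def perspective_matrix(src, dst):
    """Hypothetical helper: solve for the 3x3 homography H (H[2, 2] = 1) mapping src[i] -> dst[i]."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    A = np.zeros((8, 8))
    b = np.zeros(8)
    for i, ((x, y), (u, v)) in enumerate(zip(src, dst)):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        A[2 * i] = [x, y, 1, 0, 0, 0, -u * x, -u * y]
        b[2 * i] = u
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1), rearranged to be linear in h
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -v * x, -v * y]
        b[2 * i + 1] = v
    h = np.linalg.solve(A, b)  # requires no three of the four points to be collinear
    return np.append(h, 1.0).reshape(3, 3)

def apply_perspective(H, pts):
    """Hypothetical helper: map 2D points through H, dividing by the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Same point pairs as in the question
pts1 = np.float32([[56, 65], [368, 52], [28, 387], [389, 390]])
pts2 = np.float32([[0, 0], [300, 0], [0, 300], [300, 300]])

H = perspective_matrix(pts1, pts2)
print(np.round(apply_perspective(H, pts1), 3))
```

If OpenCV is available, `cv2.getPerspectiveTransform(pts1, pts2)` should agree with `H` up to floating-point rounding. Because only the coordinates matter, the warp can only frame the puzzle correctly if those pixel positions fall on the grid corners of the image that `cv2.imread` actually loaded.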