Reverse projection from 2D to 3D: I have the intrinsic parameters of the camera but no extrinsic parameters. I do have multiple images from the same camera.

I am trying to back-project pixels to lines that originate from the camera and travel through the image plane. I have the camera center C, a direction vector v for each line originating from the camera center, and the plane equation ax + by + cz + d = 0. Substituting the ray into the plane equation gives a(C_x + v_x t) + b(C_y + v_y t) + c(C_z + v_z t) + d = 0. Solving this for t and substituting it back into C + t v gives the 3D position. Since I do not have the extrinsic parameters, I am doing the calculation with estimates, trying different values. My algorithm is below.
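The ray-plane step described above can be sketched in Python as follows. This is only a minimal illustration under stated assumptions: the function name `intersect_ray_plane`, the tuple layout `(a, b, c, d)` for the plane, and the choice to reject intersections behind the camera are mine, not part of the original algorithm.

```python
def intersect_ray_plane(center, direction, plane, eps=1e-12):
    """Intersect the ray center + t * direction with the plane ax + by + cz + d = 0.

    Returns the 3D point, or None if the ray is parallel to the plane
    or the intersection lies behind the camera (t < 0).
    """
    a, b, c, d = plane
    normal = (a, b, c)
    # Coefficient of t after substituting the ray into the plane equation.
    denom = sum(n_i * v_i for n_i, v_i in zip(normal, direction))
    if abs(denom) < eps:
        return None  # ray is (numerically) parallel to the plane
    t = -(sum(n_i * c_i for n_i, c_i in zip(normal, center)) + d) / denom
    if t < 0:
        return None  # assumption: points behind the camera are not wanted
    return tuple(c_i + t * v_i for c_i, v_i in zip(center, direction))


# Hypothetical example: camera at the origin, plane z = 2, i.e. (0, 0, 1, -2).
point = intersect_ray_plane((0.0, 0.0, 0.0), (0.5, -0.25, 1.0), (0.0, 0.0, 1.0, -2.0))
```

The parallel-ray check matters in practice because a small error in the guessed rotation can make the denominator close to zero and the resulting point meaningless.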

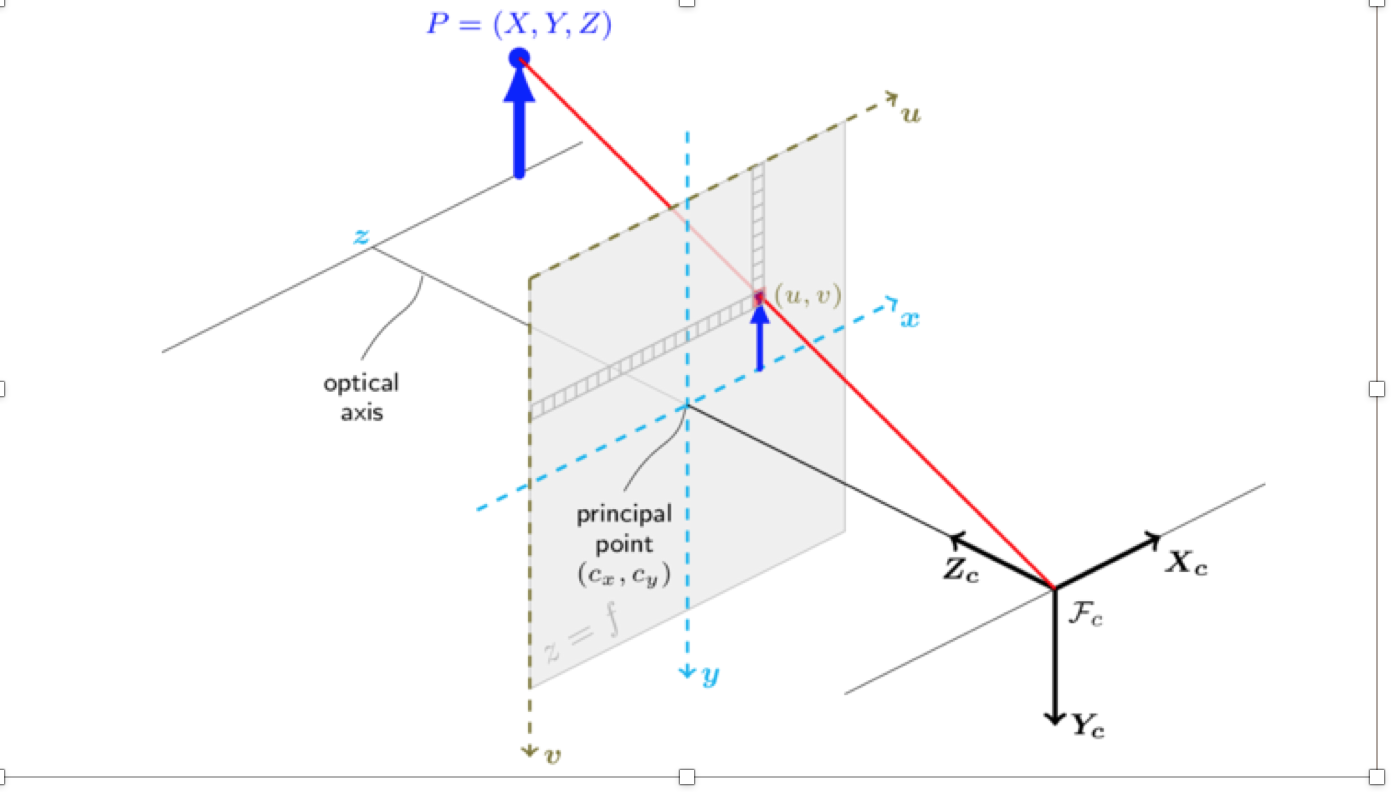

Here, O_c = focal point = camera center, the camera opening through which light enters.

F = focal length: the distance between the optical center and the center of the image plane (the image plane is the plane where the 2D version of the image is formed).

C_x, C_y = center of the image plane (principal point).

R = rotation matrix.

n = direction of the back-projected ray.

X, Y, Z = coordinates of the point P.

We are assuming that P lies on the plane Z = 1.

(u, v) = pixel coordinates on the image plane, from which we will do the back projection.

We take the center of the crucible as the origin of the universal coordinate system and look for the ray-plane intersection. We have the pixel coordinates on the image plane, and we back-project them to find the rays that intersect the plane. First we find a vector from the focal point through the pixel.

When doing the back projection, consider the line connecting O_c and (u,v). The distance between the focal point and the center of the image plane (the principal point) is F, so for a pixel (u,v) the ray direction in camera coordinates is:

n(0) = (u - C_x) / F; // pixels / pixels, so dimensionless

n(1) = (v - C_y) / F;

n(2) = 1.0;
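As a small runnable sketch of the three lines above, the back projection of one pixel could look like this in Python. It assumes square pixels, a single focal length in pixels, and no lens distortion; the function name `pixel_to_ray` and the example numbers are hypothetical.

```python
def pixel_to_ray(u, v, f_px, c_x, c_y):
    """Back-project pixel (u, v) to a ray direction in camera coordinates.

    f_px is the focal length in pixels and (c_x, c_y) is the principal point.
    The result is scaled so that its third component is 1 (it is not a unit vector).
    """
    return ((u - c_x) / f_px, (v - c_y) / f_px, 1.0)


# Hypothetical 1920x1080 image with the principal point at the image center.
n_center = pixel_to_ray(960, 540, 1000.0, 960.0, 540.0)   # ray along the optical axis
n_right = pixel_to_ray(1460, 540, 1000.0, 960.0, 540.0)   # ray 500 pixels to the right
```

The vector does not need to be normalized for the ray-plane intersection, because the parameter t absorbs the scale.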

Here, I convert F from mm to pixels using focal_pixel = (focal_mm / sensor_width_mm) * image_width_in_pixels.
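For reference, the conversion above as a tiny Python helper. The function name and the example sensor values are made up for illustration; the formula is the one stated in the text and assumes the image covers the full sensor width.

```python
def focal_length_in_pixels(focal_mm, sensor_width_mm, image_width_px):
    """Convert a focal length in millimetres to pixels along the image width."""
    return (focal_mm / sensor_width_mm) * image_width_px


# Hypothetical camera: 4 mm lens, 8 mm wide sensor, 2000 pixel wide image.
f_px = focal_length_in_pixels(4.0, 8.0, 2000)
```

If the image was cropped or resized after capture, the effective image width in this formula would change accordingly.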

Now we express the ray directions in the universal coordinate system (centered on the crucible):

n = transpose(R) * n,

which is the coordinate transformation from the pinhole camera coordinate system to the crucible-centered one. Here, I am trying different values of R (different roll, pitch, and yaw angles), mostly changing the pitch while keeping the yaw small and the roll at 0.

Then we use the ray-plane intersection to find t. Here we assume the plane is Z = 1:
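A sketch of how a trial R could be built from roll, pitch, and yaw and applied as transpose(R) * n. The angle convention here, R = Rz(yaw) * Ry(pitch) * Rx(roll) with angles in radians, is an assumption on my part; the post does not say which convention is used, and a different axis order gives a different R. Both function names are hypothetical.

```python
import math


def rotation_from_rpy(roll, pitch, yaw):
    """Build R = Rz(yaw) * Ry(pitch) * Rx(roll); angles in radians (assumed convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate_to_world(R, n):
    """Compute transpose(R) * n: component i is the dot product of column i of R with n."""
    return tuple(sum(R[k][i] * n[k] for k in range(3)) for i in range(3))


# Hypothetical trial: roll 0, pitch 90 degrees, yaw 0, applied to the optical axis.
n_world = rotate_to_world(rotation_from_rpy(0.0, math.pi / 2, 0.0), (0.0, 0.0, 1.0))
```

Because R is orthonormal, transpose(R) is its inverse, so this maps directions back from the camera frame to the frame that R rotates from.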

O_c + t * n = (X,Y,Z)

Equating the third coordinate gives t = (1 - O_c(2)) / n(2). After that, the coordinates (X, Y) are calculated; they will be used later to find the circle center.

My questions are:

Is my algorithm correct? Is there any way to do this conversion (2D to 3D) and recover the extrinsic parameters of the camera using images from the same camera? In my case Z is not equal to 0. I have read all the answers posted here and have been stuck on this problem for a very long time. Any suggestions would be highly appreciated.
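The last step, written out as a short Python sketch. It assumes O_c and n are already expressed in the crucible-centered frame; the function name `intersect_with_z_plane`, the `z_plane` parameter, and the example numbers are illustrative only.

```python
def intersect_with_z_plane(o_c, n, z_plane=1.0, eps=1e-12):
    """Intersect the ray o_c + t * n with the plane Z = z_plane and return (X, Y).

    Returns None if the ray is parallel to the plane or the plane is behind the camera.
    """
    if abs(n[2]) < eps:
        return None  # ray never reaches the plane
    # From the third coordinate: o_c[2] + t * n[2] = z_plane
    t = (z_plane - o_c[2]) / n[2]
    if t <= 0:
        return None  # assumption: only intersections in front of the camera count
    return (o_c[0] + t * n[0], o_c[1] + t * n[1])


# Hypothetical camera 3 units above the origin, looking down towards Z = 1.
xy = intersect_with_z_plane((0.0, 0.0, 3.0), (0.5, 0.25, -1.0))
```

Running this over many pixels for each trial R gives the set of (X, Y) points that the later circle-center step would work on.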