LSVM translation invariance

Hi,

I am using LatentSVM in OpenCV 2.4 with the models from opencv_extra, as suggested here.

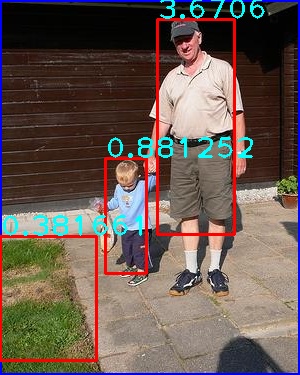

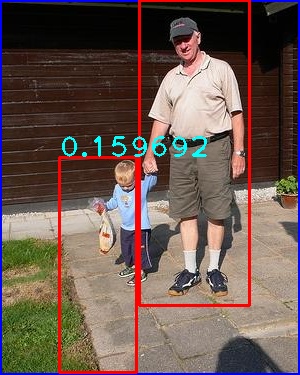

This is a VOC sample test image; the two results differ by a 1-pixel shift of the input.

I noticed different scores and bounding boxes for each 1-pixel shift. What causes this variation, given that the object is fully present in every case? Here is the code

- Why do different translations or ROI image sizes produce different scores and predictions? And is there any way to know which object placements give the best detection (e.g. the object in the middle versus at the border of the image)?

- Are there any downsampling factors other than the HOG block calculation, such as a stride in the convolution and score calculation step? Or is scoring dense, with overlapping windows at a 1-pixel stride?

- To speed up detection, I changed LAMBDA from 10 to 5. Other than missed detections from the different pyramid scale sizes, are there any miscalculations or dependent parameters in the LSVM code to be aware of? Or is it safe to change LAMBDA alone, on the assumption that it only limits the pyramid scale sizes?
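To make the LAMBDA question concrete, here is a minimal sketch of how I understand the pyramid density. It assumes that LAMBDA is the number of pyramid levels per octave, so adjacent levels differ by a factor of 2^(1/LAMBDA). That is my reading and I have not verified it against the LSVM source. The function names and the `num_octaves` parameter are hypothetical and not part of OpenCV.

```python
def pyramid_scales(levels_per_octave, num_octaves=3):
    """Return the downscale factors of a pyramid with the given density.

    Assumption: adjacent levels differ by 2 ** (1 / levels_per_octave).
    """
    step = 2.0 ** (1.0 / levels_per_octave)
    count = levels_per_octave * num_octaves + 1
    return [1.0 / step ** i for i in range(count)]


def worst_case_scale_mismatch(levels_per_octave):
    """Largest ratio between an object's true scale and the nearest level.

    The worst case lies halfway, in log scale, between two adjacent levels.
    """
    return 2.0 ** (1.0 / (2 * levels_per_octave))


if __name__ == "__main__":
    for lam in (10, 5):
        scales = pyramid_scales(lam)
        print("LAMBDA =", lam,
              "levels =", len(scales),
              "worst mismatch =", round(worst_case_scale_mismatch(lam), 4))
```

Under this assumption, halving LAMBDA roughly halves the number of levels to evaluate and doubles the log-scale gap between them, which is the scale-related misdetection I mention above. Whether other constants in the code depend on LAMBDA is exactly what I am unsure about.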

Edit 1: Here are some important points in more detail:

- The sliding window I apply is only there to illustrate how the output varies for different ROIs/inputs. LatentSVM in OpenCV already applies a sliding window over the input image, so I am not trying to implement one here. I am just evaluating output stability with respect to translation.

- My main interest is detecting people in general, not pedestrians, since a general person detector has to handle far more structure and deformation than a pedestrian detector (for example, DPM achieves 88% on INRIA pedestrians but 50% on the VOC person class).

- Finally, I am interested in a non-deep-learning method. As far as I know, LSVM/DPM is the state-of-the-art classical method (is there any other high-accuracy classical method?).
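On the translation-stability point above, my current guess is that the variation comes from the HOG cell grid. The 1-D toy sketch below illustrates the idea and is not the OpenCV implementation. It assumes a cell size of 8 pixels and non-overlapping cells, both unverified assumptions, and `cell_sums` and `shift_right` are hypothetical helpers.

```python
CELL = 8  # assumed HOG cell side in pixels (not checked against the source)


def cell_sums(row, cell=CELL):
    """Sum values over non-overlapping cells; a trailing partial cell is dropped."""
    n = len(row) // cell
    return [sum(row[i * cell:(i + 1) * cell]) for i in range(n)]


def shift_right(row, dx):
    """Shift a row right by dx pixels, padding with zeros on the left."""
    return [0] * dx + row[:len(row) - dx]


# Synthetic "gradient magnitude" row: one object pattern on an empty background.
row = [0] * 16 + [3, 1, 4, 1, 5, 9, 2, 6] + [0] * 16

base = cell_sums(row)                         # pattern aligned with one cell
by_one = cell_sums(shift_right(row, 1))       # pattern now straddles two cells
by_cell = cell_sums(shift_right(row, CELL))   # pattern aligned with the next cell

if __name__ == "__main__":
    print("no shift     :", base)
    print("1-pixel shift:", by_one)
    print("8-pixel shift:", by_cell)
```

In this toy case a shift by a whole cell only moves the features to the next cell, while a 1-pixel shift splits the pattern across two cells and changes the feature values themselves. If the real detector behaves similarly, that would explain different scores for 1-pixel shifts, but I would like confirmation.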