Register IR image to distorted RGB

Hi guys, I need some help/hints with the following problem. I have an RGB and an IR image of the same scene, but at different resolutions.

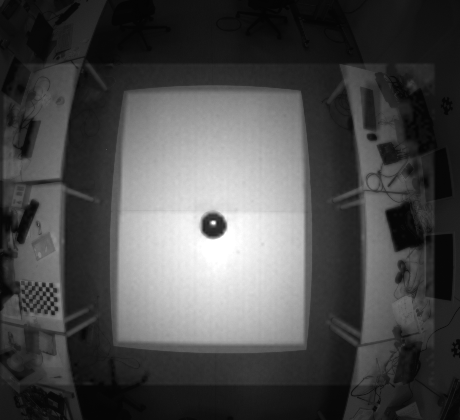

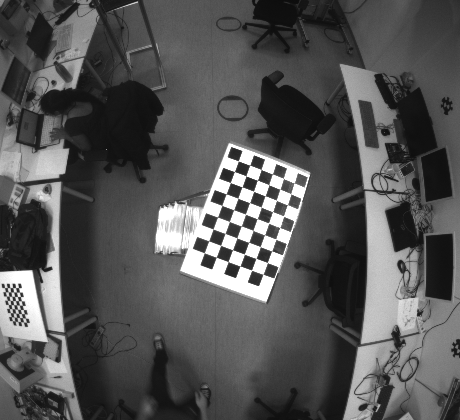

RGB image (420x460):

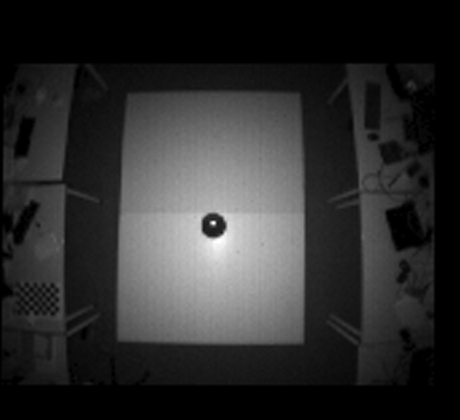

and the corresponding IR image (120x160):

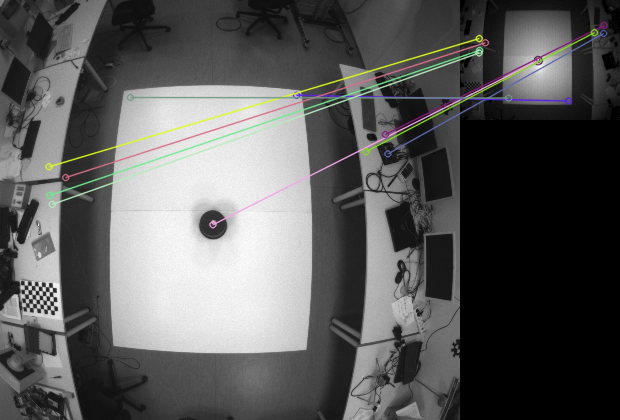

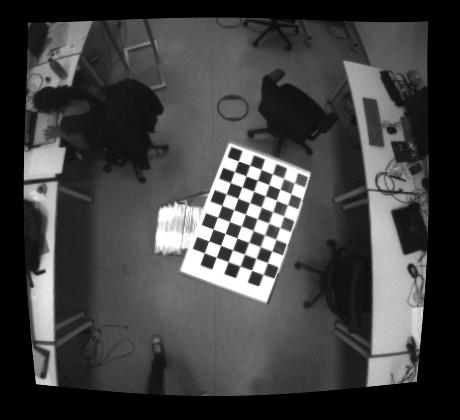

Now I want to register the IR image to the distorted RGB one. At the moment I do this by estimating the homography between the two images and then applying that transformation to the IR image. My ultimate goal is to apply this transformation to the depth output: since the IR image and the depth output are already aligned, I work with the IR images because they carry more visual information. At first I tried an automatic keypoint detector such as SURF (it seems to perform a bit better than the others), but the result is not that good, because the points it finds are not very accurate, as you can see below:

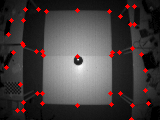
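To make "applying the transformation to the IR image" concrete, here is a dependency-free sketch of a perspective warp with nearest-neighbour sampling. It assumes grayscale images stored as row-major byte vectors; the names `Gray`, `applyH` and `warpNearest` are hypothetical and not OpenCV API. In the actual program this is a single `cv::warpPerspective(ir, out, H, rgb.size())` call, which likewise fills each destination pixel by mapping it back into the source (passing `WARP_INVERSE_MAP` tells OpenCV the matrix is already destination-to-source).

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Row-major 3x3 homography.
using Mat3 = std::array<double, 9>;

// Grayscale image, row-major, width * height bytes (hypothetical helper type).
struct Gray {
    int width, height;
    std::vector<std::uint8_t> px;
    std::uint8_t at(int x, int y) const { return px[static_cast<std::size_t>(y) * width + x]; }
};

// Map (x, y) through H, including the projective division by w.
// Returns false if the point maps to infinity (w == 0).
bool applyH(const Mat3& H, double x, double y, double& u, double& v) {
    const double w = H[6] * x + H[7] * y + H[8];
    if (std::abs(w) < 1e-12) return false;
    u = (H[0] * x + H[1] * y + H[2]) / w;
    v = (H[3] * x + H[4] * y + H[5]) / w;
    return true;
}

// Warp src onto a dstW x dstH canvas. Hinv maps DESTINATION pixels back to SOURCE
// pixels, so every destination pixel receives exactly one value (no holes).
// Nearest-neighbour sampling; destination pixels that fall outside src stay 0.
Gray warpNearest(const Gray& src, const Mat3& Hinv, int dstW, int dstH) {
    Gray dst{dstW, dstH, std::vector<std::uint8_t>(static_cast<std::size_t>(dstW) * dstH, 0)};
    for (int y = 0; y < dstH; ++y) {
        for (int x = 0; x < dstW; ++x) {
            double u, v;
            if (!applyH(Hinv, x, y, u, v)) continue;
            const int sx = static_cast<int>(std::lround(u));
            const int sy = static_cast<int>(std::lround(v));
            if (sx >= 0 && sx < src.width && sy >= 0 && sy < src.height)
                dst.px[static_cast<std::size_t>(y) * dstW + x] = src.at(sx, sy);
        }
    }
    return dst;
}
```

Note that the resolution change between the IR and RGB images is itself just a scaling homography, so it folds into the same 3x3 matrix.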

Keypoints detected:

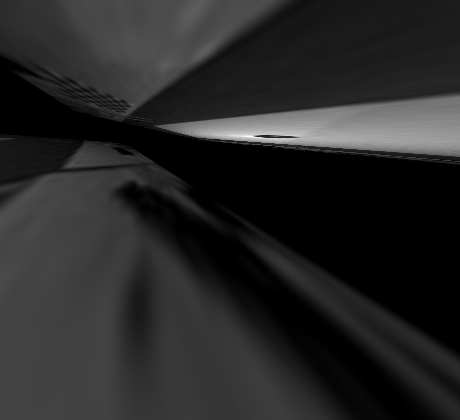

Registration based on the above keypoints (not good at all):

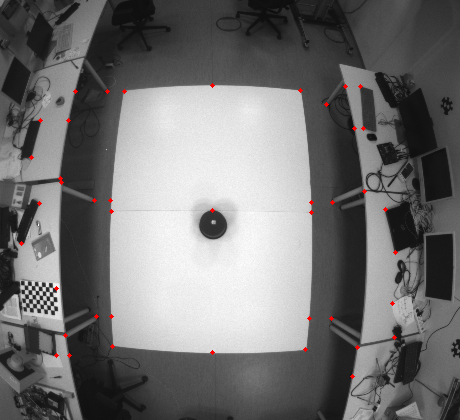

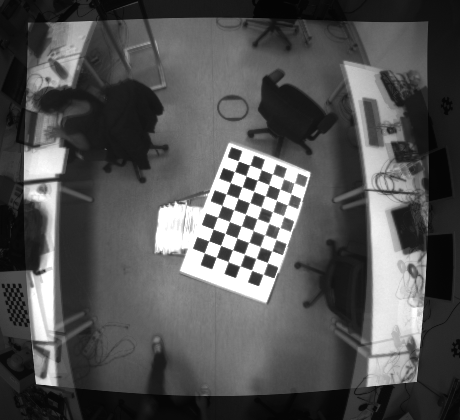

I tried other keypoint detection algorithms as well, and although the result may have improved, it was still not acceptable. Therefore, I decided to provide the matching points manually:

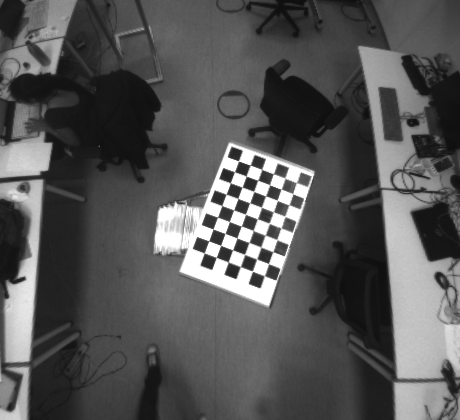
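With exactly four hand-picked pairs, the homography follows from an 8x8 linear system (fixing h33 = 1); this is the case `cv::getPerspectiveTransform` handles, while `cv::findHomography` accepts more than four pairs and fits them in a least-squares (or robust) sense, which can average out clicking errors. The sketch below solves the four-point system without OpenCV to show the underlying math; `Pt`, `solve8` and `homographyFrom4` are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>
#include <utility>

using Mat3 = std::array<double, 9>;  // row-major, H[8] fixed to 1
struct Pt { double x, y; };

// Solve A h = b (augmented 8x9 matrix [A | b]) by Gaussian elimination with partial pivoting.
static std::array<double, 8> solve8(std::array<std::array<double, 9>, 8> M) {
    for (int c = 0; c < 8; ++c) {
        int piv = c;
        for (int r = c + 1; r < 8; ++r)
            if (std::abs(M[r][c]) > std::abs(M[piv][c])) piv = r;
        if (std::abs(M[piv][c]) < 1e-12)
            throw std::runtime_error("degenerate points (e.g. three of them collinear)");
        std::swap(M[c], M[piv]);
        for (int r = c + 1; r < 8; ++r) {
            const double f = M[r][c] / M[c][c];
            for (int k = c; k < 9; ++k) M[r][k] -= f * M[c][k];
        }
    }
    std::array<double, 8> h{};
    for (int r = 7; r >= 0; --r) {  // back substitution
        double s = M[r][8];
        for (int k = r + 1; k < 8; ++k) s -= M[r][k] * h[k];
        h[r] = s / M[r][r];
    }
    return h;
}

// Homography mapping src[i] -> dst[i] for four correspondences.
// Each pair gives two equations from u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), same for v.
Mat3 homographyFrom4(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst) {
    std::array<std::array<double, 9>, 8> M{};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        M[2 * i]     = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
        M[2 * i + 1] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
    }
    const auto h = solve8(M);
    return {h[0], h[1], h[2], h[3], h[4], h[5], h[6], h[7], 1.0};
}
```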

The registered image is now much better. If I overlay the two images:

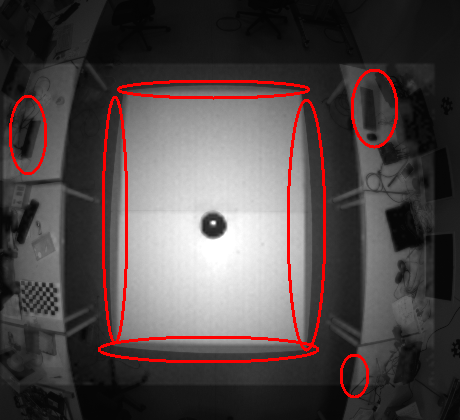

However, you will notice some misalignment (i.e. imperfect registration) in a few spots:

So I was wondering whether there is a way to get a better result than what I am getting now. Note that I need to keep the distortion in the RGB image, so the usual procedure (chessboard calibration, extracting intrinsic/extrinsic coefficients, undistorting, rectifying, etc.) is out of the question, at least for the moment.
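One way to see where a single homography breaks down is to measure, for each manually picked pair, the distance in RGB pixels between the warped IR point and the clicked RGB point. The sketch assumes the pairs are stored as plain coordinate lists; `reprojectionErrors` and `rms` are hypothetical helper names. If the large errors cluster on objects off the dominant plane, or near the image borders where lens distortion is usually strongest, that suggests parallax or distortion rather than clicking noise.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Mat3 = std::array<double, 9>;  // row-major homography (IR -> RGB)
struct Pt { double x, y; };

// Distance, in destination (RGB) pixels, between H * src[i] and dst[i] for each pair.
std::vector<double> reprojectionErrors(const Mat3& H, const std::vector<Pt>& src,
                                       const std::vector<Pt>& dst) {
    std::vector<double> err;
    err.reserve(src.size());
    for (std::size_t i = 0; i < src.size() && i < dst.size(); ++i) {
        const double x = src[i].x, y = src[i].y;
        const double w = H[6] * x + H[7] * y + H[8];
        const double u = (H[0] * x + H[1] * y + H[2]) / w;
        const double v = (H[3] * x + H[4] * y + H[5]) / w;
        err.push_back(std::hypot(u - dst[i].x, v - dst[i].y));
    }
    return err;
}

// Root-mean-square of the per-pair errors (0 for an empty list).
double rms(const std::vector<double>& e) {
    if (e.empty()) return 0.0;
    double s = 0.0;
    for (double d : e) s += d * d;
    return std::sqrt(s / static_cast<double>(e.size()));
}
```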

Thanks.

Additional code:

```cpp
#include <iostream>
#include <stdio.h>
#include <opencv2/opencv.hpp>
#include "opencv2/core.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/features2d.hpp"
#include "opencv2/highgui.hpp"
#include "opencv2/calib3d.hpp"
#include "opencv2/xfeatures2d.hpp"  // SURF is in the opencv_contrib xfeatures2d module

using namespace std;
using namespace cv;
using namespace cv::xfeatures2d;

int main(int argc, char *argv[])
{
    cout << "Hello World!" << endl;

    Mat rgb = imread("../frame_5.png", IMREAD_GRAYSCALE);
    Mat ir = imread("../frame_5a.png", IMREAD_GRAYSCALE);

    if (rgb.empty())
    {
        cerr << "Problem loading rgb image!!!" << endl;
        return -1;
    }
    if (ir.empty())
    {
        cerr << "Problem loading ir image!!!" << endl;
        return -1;
    }

    // Brightened (x2, saturating) copy of the IR image, for display only
    Mat ir2 = ir * 2;

    imshow("rgb", rgb);
    imshow("ir", ir);
    imshow("ir2", ir2);
    waitKey();

    //-- Step 1: Detect the keypoints and extract descriptors using SURF
    int minHessian = 600;  // Hessian threshold: higher keeps fewer, stronger keypoints
    Ptr<SURF> detector = SURF::create(minHessian);
    // Ptr<BRISK> detector = BRISK::create(100);

    std::vector<KeyPoint> keypoints_object, keypoints_scene;
    Mat descriptors_object, descriptors_scene;

    detector->detectAndCompute( rgb, Mat(), keypoints_object, descriptors_object );
    detector->detectAndCompute( ir, Mat(), keypoints_scene, descriptors_scene );

    //-- Step 2: Match descriptor vectors with a brute-force L2 matcher
    //   (crossCheck = true keeps only mutual nearest neighbours)
    BFMatcher matcher(NORM_L2, true);
    // FlannBasedMatcher matcher;
    std::vector< DMatch > matches;
    matcher.match( descriptors_object, descriptors_scene, matches );

    double max_dist = 0; double min_dist = 100;

    //-- Quick calculation of max and min distances between keypoints
    // for( int i = 0; i < descriptors_object.rows; i++ )
    for( size_t i = 0; i < matches.size(); i++ )
    { double ...
```
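The posted code constructs `BFMatcher(NORM_L2, true)`; the second argument is `crossCheck`, which makes the matcher return a pair (i, j) only when j is the nearest neighbour of i and i is also the nearest neighbour of j. The dependency-free sketch below implements that rule for float descriptors so its effect is easy to inspect; `Desc`, `Match` and `crossCheckMatch` are hypothetical names. Cross-checking discards some ambiguous matches, but on its own it cannot rescue descriptors that respond differently to IR and RGB appearance.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Desc = std::vector<float>;           // one descriptor (all assumed the same length)
struct Match { int query, train; float dist; };

// Squared L2 distance; monotone in L2, so it is enough for nearest-neighbour search.
static float sqDist(const Desc& a, const Desc& b) {
    float s = 0.f;
    for (std::size_t k = 0; k < a.size(); ++k) { const float d = a[k] - b[k]; s += d * d; }
    return s;
}

// Index of the descriptor in `set` nearest to `d` (-1 if `set` is empty).
static int nearest(const Desc& d, const std::vector<Desc>& set) {
    int best = -1;
    float bestD = std::numeric_limits<float>::max();
    for (std::size_t j = 0; j < set.size(); ++j) {
        const float dj = sqDist(d, set[j]);
        if (dj < bestD) { bestD = dj; best = static_cast<int>(j); }
    }
    return best;
}

// Keep (i, j) only if j is i's nearest neighbour in B AND i is j's nearest neighbour in A.
std::vector<Match> crossCheckMatch(const std::vector<Desc>& A, const std::vector<Desc>& B) {
    std::vector<Match> out;
    for (std::size_t i = 0; i < A.size(); ++i) {
        const int j = nearest(A[i], B);
        if (j >= 0 && nearest(B[j], A) == static_cast<int>(i))
            out.push_back({static_cast<int>(i), j, std::sqrt(sqDist(A[i], B[j]))});
    }
    return out;
}
```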

In general, a homography relates two views of the same plane. There is also the so-called "infinite homography", which applies when the scene is very far away or when the camera motion is (mostly) a pure rotation: if I am not wrong, this is the assumption made for image stitching. This is why you should try to rotate around the camera's optical center when taking pictures for a panorama, to get the best results.
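For reference, the standard pinhole-camera expressions behind this comment. The notation is mine, not the thread's: $K_1$ and $K_2$ are the intrinsics of the first (IR) and second (RGB) camera, a 3D point transforms as $\mathbf{X}_2 = R\,\mathbf{X}_1 + \mathbf{t}$, and the scene plane satisfies $\mathbf{n}^\top \mathbf{X}_1 = d$ in the first camera's frame. The infinite homography is exact for any scene when $\mathbf{t} = 0$ (pure rotation) and approximately valid when the scene is far away. Lens distortion is not part of this model, so no single $H$ can reproduce a distorted RGB image exactly.

```latex
\[
\mathbf{X}_2 = R\,\mathbf{X}_1 + \mathbf{t}, \qquad \mathbf{n}^\top \mathbf{X}_1 = d
\;\Rightarrow\;
\mathbf{X}_2 = \Big(R + \frac{\mathbf{t}\,\mathbf{n}^\top}{d}\Big)\mathbf{X}_1
\]
\[
\mathbf{x}_2 \simeq H\,\mathbf{x}_1, \qquad
H = K_2\Big(R + \frac{\mathbf{t}\,\mathbf{n}^\top}{d}\Big)K_1^{-1}, \qquad
H_\infty = \lim_{d\to\infty} H = K_2\,R\,K_1^{-1}
\]
```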

Unfortunately, the sensors are fixed in position, so it is not possible to rotate or move them even slightly (if that is what you mean).

It is more a general comment: the homography may not be well estimated because the chosen points do not lie on the same plane (points on the floor, points on the tables, ...). Also, I am not sure whether the warping will be correct in this case (multiple planes). I cannot tell, as I don't have much experience with homographies and image warping.
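To put a rough number on the multi-plane issue, assume (for illustration only) no rotation between the two cameras, a purely horizontal baseline b, a fronto-parallel plane at depth d, and no lens distortion. Substituting n = (0, 0, 1) and t = (b, 0, 0) into the standard plane-induced homography shows that a point at depth Z lands f·b·|1/Z − 1/d| pixels away from where the homography puts it, where f is the RGB focal length in pixels. `parallaxError` is a hypothetical helper.

```cpp
#include <cmath>

// Horizontal misalignment (RGB pixels) left by a homography fitted to a fronto-parallel
// plane at depth planeDepth, for a scene point at depth pointDepth.
// Assumptions: no rotation between cameras, purely horizontal baseline, no lens distortion.
// fPx: RGB focal length in pixels; baseline and depths in the same metric unit.
double parallaxError(double fPx, double baseline, double planeDepth, double pointDepth) {
    return fPx * baseline * std::abs(1.0 / pointDepth - 1.0 / planeDepth);
}
```

With hypothetical values f = 500 px, b = 0.05 m, a plane at 2 m and a point at 1 m, `parallaxError(500, 0.05, 2.0, 1.0)` gives about 12.5 px, while points on the plane itself get 0.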

What I am sure of is that a homography relates two views of a planar object/scene.

Actually, @Tetragramm has a nice link to a paper here, but I am not sure how directly it relates to what I want to achieve. I also tried to find their code, without success.

@theodore I have edited my answer with a sample code to align a color image to a depth/IR image.
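Eduardo's sample code is not reproduced in this thread; the sketch below only illustrates the general depth-based idea under stated assumptions. It needs the IR intrinsics, the RGB intrinsics, the RGB camera's distortion coefficients in OpenCV's (k1, k2, p1, p2, k3) model, and the IR-to-RGB rotation R and translation t, all assumed to come from some prior calibration, and it assumes the IR image itself has negligible distortion. Because it applies the RGB distortion model instead of removing it, the output lands directly on the distorted RGB image. `cv::projectPoints` performs steps 2 to 5 for a batch of 3D points; the struct and function names here are hypothetical.

```cpp
#include <array>

struct Intrinsics { double fx, fy, cx, cy; };
struct Distortion { double k1, k2, p1, p2, k3; };  // OpenCV-style coefficients
struct Pixel { double u, v; };

// Map IR/depth pixel (u, v) with metric depth z into the DISTORTED RGB image.
// R (row-major 3x3) and t take points from the IR camera frame to the RGB camera frame.
// Returns false if the point ends up behind the RGB camera.
bool irPixelToDistortedRgb(double u, double v, double z, const Intrinsics& ir,
                           const std::array<double, 9>& R, const std::array<double, 3>& t,
                           const Intrinsics& rgb, const Distortion& dist, Pixel& out) {
    // 1) Back-project the IR pixel to a 3D point in the IR camera frame.
    const double X = (u - ir.cx) * z / ir.fx;
    const double Y = (v - ir.cy) * z / ir.fy;
    const double Z = z;
    // 2) Rigid transform into the RGB camera frame.
    const double Xc = R[0] * X + R[1] * Y + R[2] * Z + t[0];
    const double Yc = R[3] * X + R[4] * Y + R[5] * Z + t[1];
    const double Zc = R[6] * X + R[7] * Y + R[8] * Z + t[2];
    if (Zc <= 0) return false;
    // 3) Ideal normalized image coordinates.
    const double x = Xc / Zc, y = Yc / Zc;
    // 4) Radial + tangential distortion (OpenCV's 5-coefficient model).
    const double r2 = x * x + y * y;
    const double radial = 1 + dist.k1 * r2 + dist.k2 * r2 * r2 + dist.k3 * r2 * r2 * r2;
    const double xd = x * radial + 2 * dist.p1 * x * y + dist.p2 * (r2 + 2 * x * x);
    const double yd = y * radial + dist.p1 * (r2 + 2 * y * y) + 2 * dist.p2 * x * y;
    // 5) Distorted RGB pixel coordinates.
    out = {rgb.fx * xd + rgb.cx, rgb.fy * yd + rgb.cy};
    return true;
}
```

This still depends on calibration data, but it does not require undistorting the RGB image.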

@Eduardo thanks. I'll have a look.