Mapping not working as expected when using remap

I am trying to warp a 640x360 image via the OpenCV remap function (in Python 2.7). The steps executed are the following:

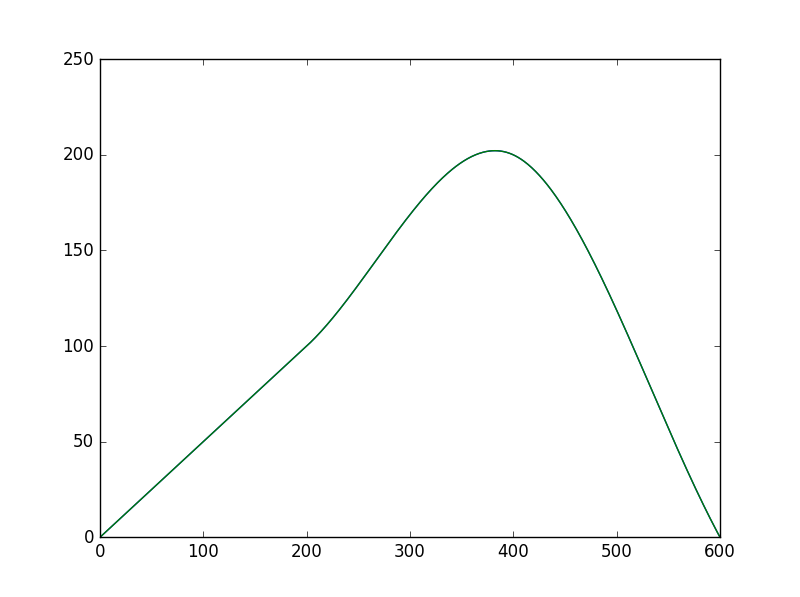

Generate a curve and store its x and y coordinates in two seperate arrays, curve_x and curve_y.I am attaching the generated curve as an image(using pyplot):
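The exact curve is not given in the post, so the following is only a minimal sketch of this step under assumed values: a half-sine bump that is zero outside a chosen column range, which matches the later check for columns where the curve is 0. The names `bump_start`, `bump_end` and `bump_height` are hypothetical and chosen purely for illustration.

```python
import math

x_size, y_size = 640, 360  # image width and height stated in the question

# Hypothetical curve parameters (not from the original post)
bump_start, bump_end, bump_height = 200, 560, 100

curve_x = list(range(x_size))   # one x-coordinate per image column
curve_y = [0.0] * x_size        # curve height per column, 0 where there is no curve

# Fill the columns strictly inside the bump with a half sine wave
for x in range(bump_start + 1, bump_end):
    t = (x - bump_start) / float(bump_end - bump_start)  # position in (0, 1)
    curve_y[x] = bump_height * math.sin(math.pi * t)
```

The two lists can be passed to pyplot's `plot` to produce a figure like the one above, or wrapped in NumPy arrays if array arithmetic is needed.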

Load the image via the OpenCV imread function:

    original = cv2.imread('C:\\Users\\User\\Desktop\\alaskan-landscaps3.jpg')

Implement a mapping function to translate the y-coordinate of each pixel upwards by a distance proportional to the curve height. As each column of y-coordinates must be squeezed into a smaller space, a number of pixels are removed during the mapping.
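To make the squeezing concrete, here is a small worked example for a single column, assuming a hypothetical curve height of 120 pixels (this value is not from the post). It forces float division because on Python 2.7 `/` between two integers truncates; whether the curve values in the actual code are integers or floats is not stated.

```python
import math

y_size = 360         # image height stated in the question
curve_height = 120   # hypothetical curve height at one column

# Rows left between the top of the curve and the top of the picture
height_above_curve = (y_size - 1) - curve_height

# Source rows per destination row; float() avoids integer truncation on Python 2.7
mapping_factor = (y_size - 1) / float(height_above_curve)

# Rows for which the modulo rule fires, i.e. rows that advance the counter
kept_rows = [i for i in range(y_size) if math.floor(i % mapping_factor) == 0]
kept_fraction = len(kept_rows) / float(y_size)
```

With these numbers the factor is about 1.5, so roughly two thirds of the source rows advance the counter and the rest are skipped.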

Code:

    # array to store previous y-coordinate, used as a counter during mapping process
    floor_y=np.zeros((x_size),np.float32)

    # for each row and column of picture
    for i in range(0, y_size):
        for j in range(0,x_size):
            # calculate distance between top of the curve at given x coordinate and top
            height_above_curve = (y_size-1) - curve_y_points[j]
            # calculate a mapping factor, using total height of picture and distance above curve
            mapping_factor = (y_size-1)/height_above_curve
            # if there was no curve at given x-coordinate then do not change the pixel coordinate
            if(curve_y_points[j]==0):
                map_y[i][j]=j
            # if this is the first time the column is traversed, save the curve y-coordinate
            elif (floor_y[j]==0):
                # the pixel is translated upwards according to the height of the curve at that point
                floor_y[j]=i+curve_y_points[j]
                map_y[i][j]=i+curve_y_points[j] # new coordinate saved
            # use a modulo operation to only translate each nth pixel where n is the mapping factor.
            # the idea is that in order to fit all pixels from the original picture into a new smaller space
            # (because the curve squashes the picture upwards) a number of pixels must be removed
            elif ((math.floor(i % mapping_factor))==0):
                # increment the "floor" counter so that the next group of pixels from the original image
                # are mapped 1 pixel higher up than the previous group in the new picture
                floor_y[j]=floor_y[j]+1
                map_y[i][j]=floor_y[j]
            else:
                # for pixels that must be skipped map them all to the last pixel actually translated to the new image
                map_y[i][j]=floor_y[j]
            # all x-coordinates remain unchanged as we only translate pixels upwards
            map_x[i][j] = j

    # printout function to test mappings at x=383
    for j in range(0, 360):
        print('At x=383,y='+str(j)+'for curve_y_points[383]='+str(curve_y_points[383])+' and floor_y[383]='+str(floor_y[383])+' mapping is:'+str(map_y[j][383]))
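For reference when reading the maps built above: the OpenCV documentation describes remap as a lookup from destination to source, dst(x, y) = src(map_x(x, y), map_y(x, y)). The sketch below imitates that rule on a tiny one-column image in plain Python. `remap_nearest` is a hypothetical, simplified stand-in (nearest-neighbour lookup, constant border) and not the OpenCV implementation.

```python
def remap_nearest(src, map_x, map_y, border=0):
    """Simplified stand-in for remap: dst[y][x] = src[map_y[y][x]][map_x[y][x]]."""
    rows, cols = len(map_y), len(map_y[0])
    dst = [[border] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            sx = int(round(map_x[y][x]))
            sy = int(round(map_y[y][x]))
            # Lookups that fall outside the source keep the border value
            if 0 <= sy < len(src) and 0 <= sx < len(src[0]):
                dst[y][x] = src[sy][sx]
    return dst

src = [[1], [2], [3], [4]]          # one column, rows labelled 1..4 from the top
map_x = [[0], [0], [0], [0]]        # x is left unchanged

# Maps written as "row y goes to y - 2" are read as "row y comes from y - 2"
shifted_down = remap_nearest(src, map_x, [[-2], [-1], [0], [1]])

# To move content up by 2 rows, each destination row must read from y + 2
shifted_up = remap_nearest(src, map_x, [[2], [3], [4], [5]])
```

In this toy case only the second set of maps leaves border-coloured rows at the bottom, which is the behaviour expected for an upward translation.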

The original and final pictures are shown below

I have two issues:

Since all the pixels are translated upwards, I would expect the bottom part of the picture to be black (or some other background colour), and this blank area to match the area below the curve.

There is a hugely exaggerated upwards warping effect in the picture which I cannot explain. For example, a pixel that in the original picture was at around y=140 is now ...