Converting 2D image coordinates to 3D world coordinates

Hello,

I have been assigned the task of converting 2D pixel coordinates to the corresponding 3D world coordinates. I have a bit of image processing experience from my school projects and zero experience with OpenCV.

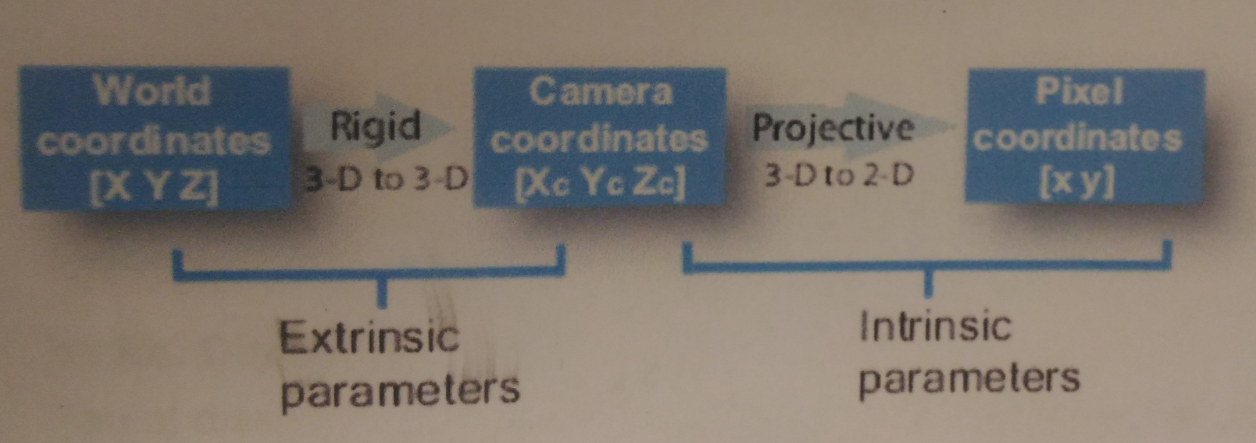

I started going through the pinhole camera model and understood that I need to do inverse perspective projection to find the 3D world point corresponding to a 2D pixel coordinate.

I am a bit confused and think that I am not following the learning path I am supposed to. I have a few questions:

1) I guess I need to do camera calibration first to estimate the extrinsic and intrinsic parameters, so that I know how my camera projects a 3D scene into 2D pixel values. (Reference: https://www.mathworks.com/help/vision...)

Is this the proper approach for my problem statement, i.e. first understanding and finding the extrinsic and intrinsic matrices and then moving on to inverse perspective projection?

2) In some references I see world coordinates in mm, and in others I see (lat, long, alt) as world coordinates.

Which one should I pick as world coordinates?

Since we will be considering the focal point as the origin, are the world coordinates (X mm, Y mm, Z mm) defined w.r.t. the focal point?

There are tons of resources available online, and I think I'm being misled and wandering here and there. If you guys know of any particular resource that is quite straightforward to learn from, please let me know.

~ Ashish

Quick answer: you cannot convert a 2D image coordinate from a single image to a 3D coordinate, because the depth information is lost in the perspective projection.
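To make the depth ambiguity concrete, here is a minimal sketch, assuming an ideal pinhole camera with no lens distortion. The intrinsics (`FX`, `FY`, `CX`, `CY`) and the helper names `project` and `back_project` are made up for illustration and do not come from any real calibration. It shows that a pixel only defines a viewing ray: every depth along that ray gives a different 3D point, yet they all project to the same pixel.

```python
# Hypothetical intrinsics, for illustration only (units: pixels).
FX, FY = 800.0, 800.0   # focal lengths
CX, CY = 320.0, 240.0   # principal point


def project(point_cam):
    """Project a 3D point (X, Y, Z) in camera coordinates to a pixel (u, v)."""
    x, y, z = point_cam
    return (FX * x / z + CX, FY * y / z + CY)


def back_project(pixel, depth):
    """Return the camera-frame 3D point on the pixel's viewing ray at depth Z."""
    u, v = pixel
    return ((u - CX) * depth / FX, (v - CY) * depth / FY, depth)


pixel = (400.0, 300.0)

# Each assumed depth yields a different 3D point on the same ray...
candidates = [back_project(pixel, z) for z in (1.0, 2.0, 5.0)]

# ...but all of them land on the same pixel when projected again.
reprojected = [project(p) for p in candidates]

for p, uv in zip(candidates, reprojected):
    print(p, "->", uv)
```

Without an extra constraint on the depth, nothing in the single image tells you which of these candidates is the real point.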

That's why we need a stereo rig, or structure-from-motion techniques, to reconstruct the depth.
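As a rough sketch of how a second view recovers depth, assume an ideal rectified stereo pair: two identical pinhole cameras with parallel optical axes, separated by a known baseline along the x axis, and an already matched pixel pair. Under those assumptions the depth follows from the horizontal disparity as Z = f * B / d. The function names and all numbers below are hypothetical.

```python
def stereo_depth(u_left, u_right, focal_px, baseline_m):
    """Depth Z in metres from the horizontal disparity of a rectified pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return focal_px * baseline_m / disparity


def triangulate(u_left, v_left, u_right, focal_px, cx, cy, baseline_m):
    """3D point in the left camera frame from one matched pixel pair."""
    z = stereo_depth(u_left, u_right, focal_px, baseline_m)
    x = (u_left - cx) * z / focal_px
    y = (v_left - cy) * z / focal_px
    return (x, y, z)


# Made-up example: 800 px focal length, 10 cm baseline, 40 px disparity.
point = triangulate(
    u_left=400.0, v_left=300.0, u_right=360.0,
    focal_px=800.0, cx=320.0, cy=240.0, baseline_m=0.1,
)
print(point)
```

The result is expressed in the left camera's frame, in the same unit as the baseline. A real rig would also need calibration, rectification, and a matching step, none of which is shown here.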

Otherwise, calibrating the camera is a good starting point in general in computer vision.
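For reference, this is what calibration estimates under the standard pinhole model, ignoring lens distortion. The symbols are the usual textbook ones, not taken from the question: $K$ holds the intrinsics, $[R \mid t]$ the extrinsics, and $s$ is the unknown scale (the depth in the camera frame).

$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K \, [R \mid t] \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

Here $(X, Y, Z)$ is expressed in whatever world frame and unit you choose (for example mm relative to a calibration target). The extrinsics $[R \mid t]$ map it into the camera frame, whose origin is the camera's center of projection. So the world origin only coincides with the camera center if you pick $R = I$ and $t = 0$.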

If you have only a single image/camera, look at Wikipedia again (you'll need at least 2).