Reading from RGB24 buffer is producing weird results

Hi, I am trying to get Unity3D to work with OpenCV. This looks good so far, but now I need to get some image data from Unity3D into OpenCV. I created an RGB24 buffer in Unity and am reading it in OpenCV, but the output is not what I expect. Here is how I read it:

Mat fromunity(imageSize, CV_8UC3, buffer, Mat::AUTO_STEP);

I am checking the result by writing it into a file, like this:

imwrite("test.png", fromunity);

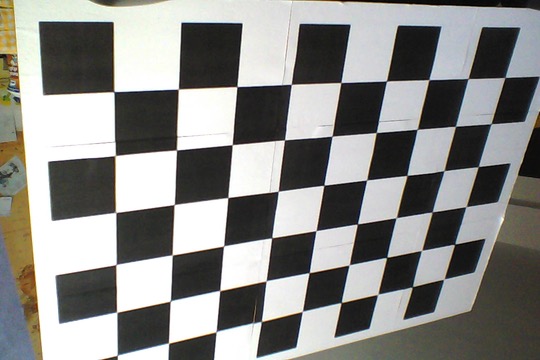

This is a sample image that I get:

But it should look like this (taken directly from the camera, without unity) EDIT> This image is actually upside-down:

This is how the buffers are created in Unity:

Texture2D left = new Texture2D (640, 480, TextureFormat.RGB24, false, true);

left.SetPixels (((WebCamTexture)leftImage.texture).GetPixels ());

left.Apply ();

detectCheckerboard (left.GetRawTextureData()); //call to native plugin with OpenCV

I know that OpenCV expects the channels to be in BGR order, but the next step would be to convert to grayscale anyway, so I do not care if the colors are right.
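To make the channel-order point concrete, here is a minimal sketch of a weighted grayscale conversion, assuming the usual 0.299 R + 0.587 G + 0.114 B weights that OpenCV documents for its RGB-to-gray conversion. The helper names `luma`, `lumaSwapped` and `grayFromRgb24` are made up for illustration and are not OpenCV functions. Swapping R and B changes the gray value of colored pixels, but leaves neutral pixels (black, white, gray) unchanged, which is probably why the order should matter little for a black-and-white checkerboard.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Weighted gray value of one pixel, using the common 0.299/0.587/0.114 weights.
std::uint8_t luma(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;
    return static_cast<std::uint8_t>(std::lround(y));
}

// Same conversion, but with the red and blue channels mistaken for each other,
// as happens when RGB data is interpreted as BGR.
std::uint8_t lumaSwapped(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    return luma(b, g, r);
}

// Hypothetical helper: gray value of a pixel stored as three bytes in R, G, B order.
std::uint8_t grayFromRgb24(const std::uint8_t* px) {
    assert(px != nullptr);
    return luma(px[0], px[1], px[2]);
}
```

For a pure red pixel the two functions disagree, while for any pixel with equal R, G and B they return the same value.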

Does this have anything to do with the step argument? I have never set it explicitly; AUTO_STEP has always done the job. What other values could I try?
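For context on what the step argument means: it is the number of bytes from the start of one row to the start of the next, and with AUTO_STEP OpenCV assumes no padding, so the step is the width times the bytes per pixel. The sketch below uses plain C++ without OpenCV to show that arithmetic for a 640x480 RGB24 buffer. The function names are hypothetical, and it is an assumption that the Unity buffer is tightly packed and that imageSize was built with the width first, as in cv::Size(640, 480). It illustrates the layout only and does not by itself identify the cause of the distorted image.

```cpp
#include <cassert>
#include <cstddef>

// Bytes per row of a tightly packed image: the value AUTO_STEP resolves to.
std::size_t packedStep(int width, int channels) {
    assert(width > 0 && channels > 0);
    return static_cast<std::size_t>(width) * static_cast<std::size_t>(channels);
}

// Byte offset of pixel (row, col) from the start of the buffer for a given step.
std::size_t pixelOffset(std::size_t step, int row, int col, int channels) {
    assert(row >= 0 && col >= 0 && channels > 0);
    return static_cast<std::size_t>(row) * step +
           static_cast<std::size_t>(col) * static_cast<std::size_t>(channels);
}

// True if a buffer of `bytes` bytes can hold `height` rows of `width` pixels
// when consecutive rows start `step` bytes apart.
bool bufferFits(std::size_t bytes, int width, int height, int channels,
                std::size_t step) {
    assert(height > 0);
    const std::size_t rowBytes = packedStep(width, channels);
    if (step < rowBytes) {
        return false;  // rows would overlap
    }
    // The last row only needs its pixel bytes, not a full step.
    const std::size_t required =
        static_cast<std::size_t>(height - 1) * step + rowBytes;
    return bytes >= required;
}
```

With these numbers, a 640-pixel-wide RGB24 row is 1920 bytes, and the whole frame is 640 * 480 * 3 = 921600 bytes. A larger step such as 2048 would only be right if each row were padded, in which case a 921600-byte buffer would be too small.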