| 1 | initial version |

A quick update, since I am short on time at the moment:

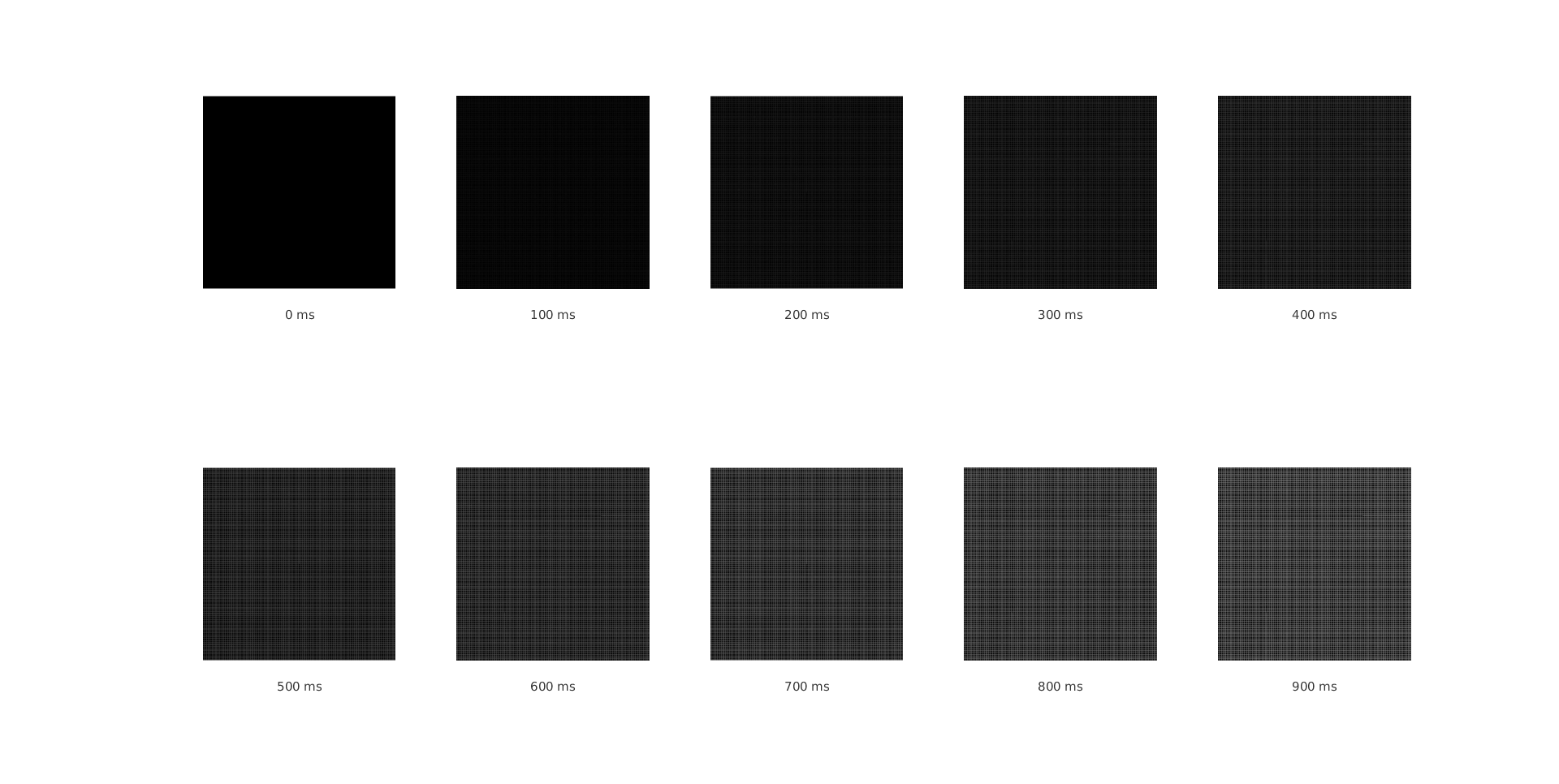

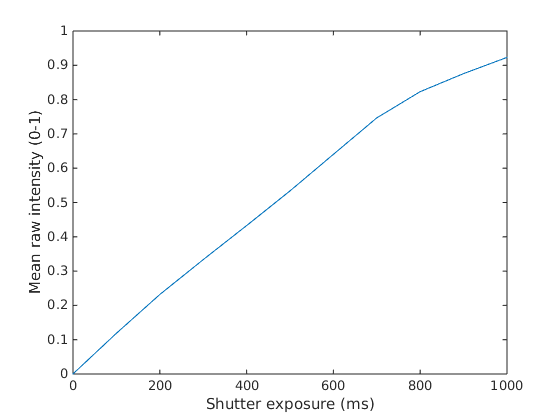

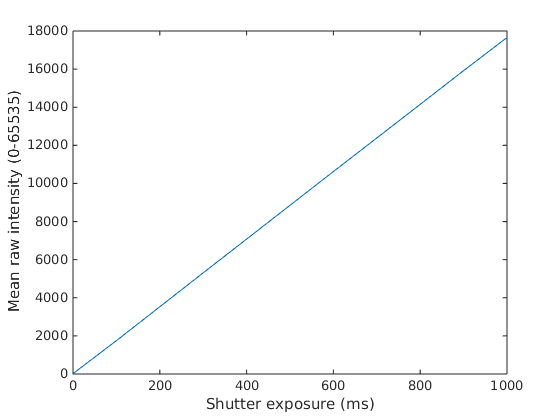

First, following the advice of @pklab, I checked the linearity of my sensor:

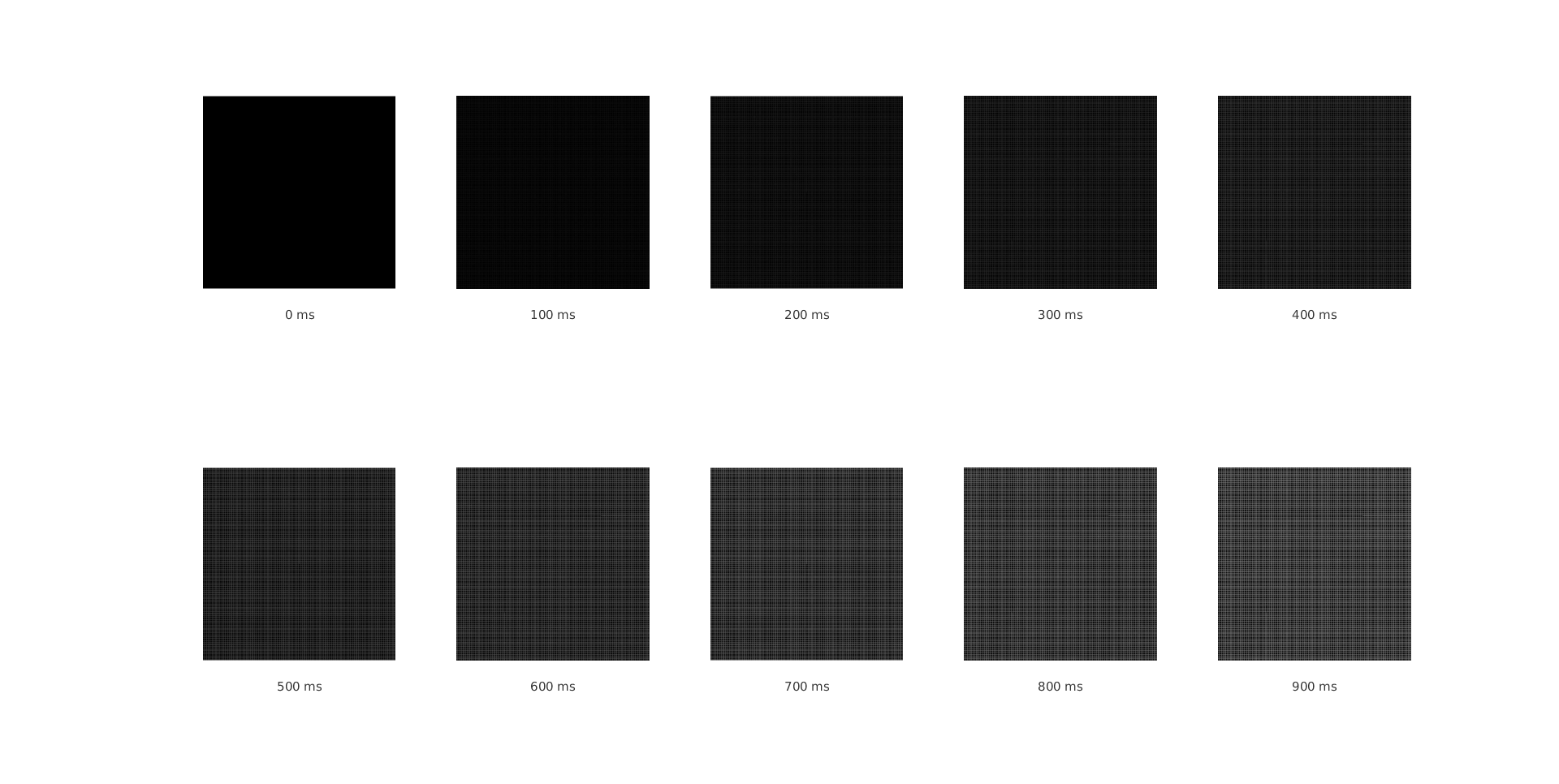

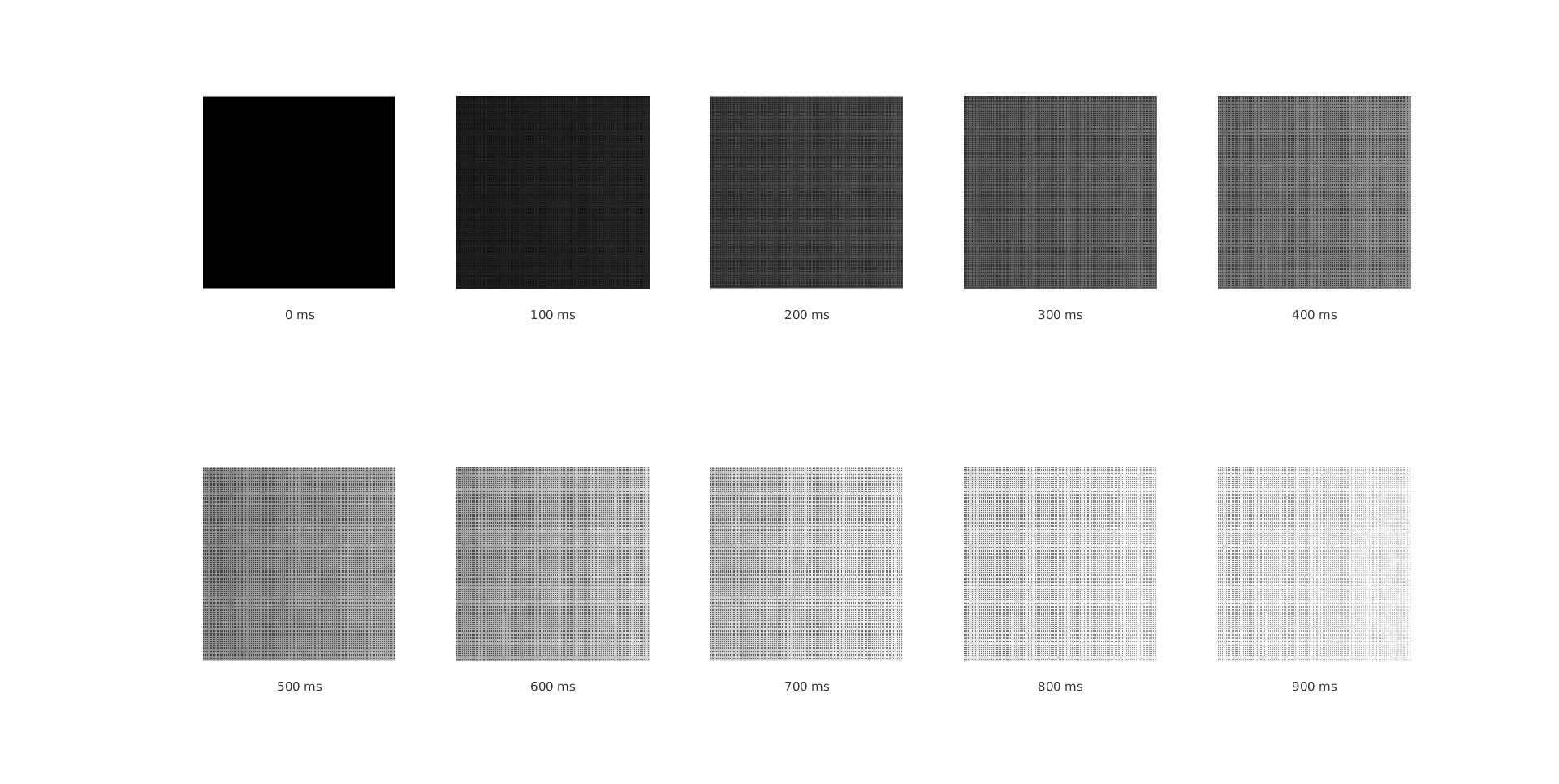

Under fixed illumination I captured raw images (16-bit, .dng) at different exposure times. I then took the mean value of a selected ROI in each image and plotted it against the exposure time.

You can even see the Bayer tiles in the ROIs above (quite cool, I would say :-)).

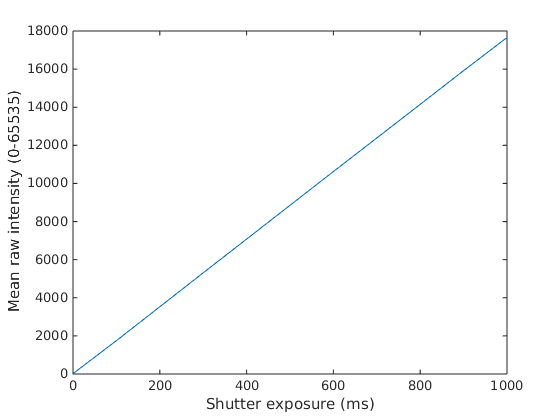

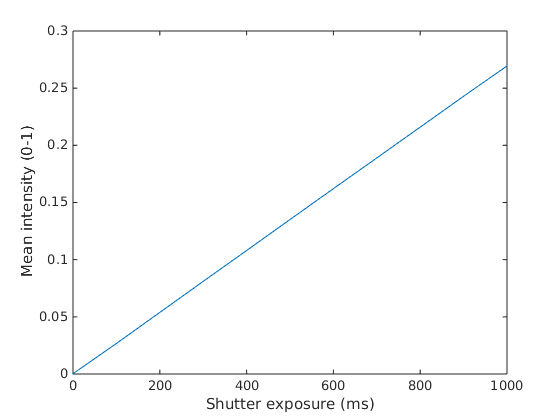

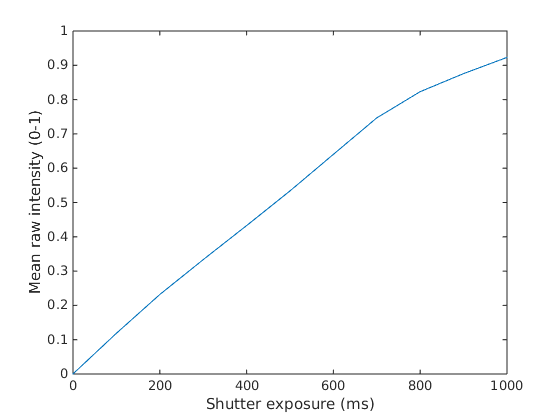

The result can be seen below:

And here with normalized values:

As you can see, my sensor is linear.
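
The linearity check described above (mean ROI value against exposure time) can also be quantified with a straight-line fit. This is only a minimal sketch: the function name `linearity_fit` and the numbers are made up for illustration and are not the measurements from this post. It assumes NumPy is available and that the ROI means have already been computed.

```python
import numpy as np

def linearity_fit(exposure_ms, roi_means):
    """Fit mean = slope * exposure + offset and return (slope, offset, R^2)."""
    t = np.asarray(exposure_ms, dtype=np.float64)
    m = np.asarray(roi_means, dtype=np.float64)
    slope, offset = np.polyfit(t, m, 1)          # least-squares line
    residuals = m - (slope * t + offset)
    ss_res = np.sum(residuals ** 2)
    ss_tot = np.sum((m - m.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot                   # 1.0 means a perfect line
    return slope, offset, r2

# Synthetic stand-in data (NOT from the post): an ideal sensor with a
# constant offset of 64 counts and a gain of 30 counts per millisecond.
exposures = [10, 20, 50, 100, 200, 400]
means = [64 + 30.0 * t for t in exposures]

slope, offset, r2 = linearity_fit(exposures, means)
print(slope, offset, r2)
```

An R² close to 1 over the unsaturated exposures supports the "linear" reading of the plot, and the fitted offset gives a rough idea of the dark level of the ROI.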

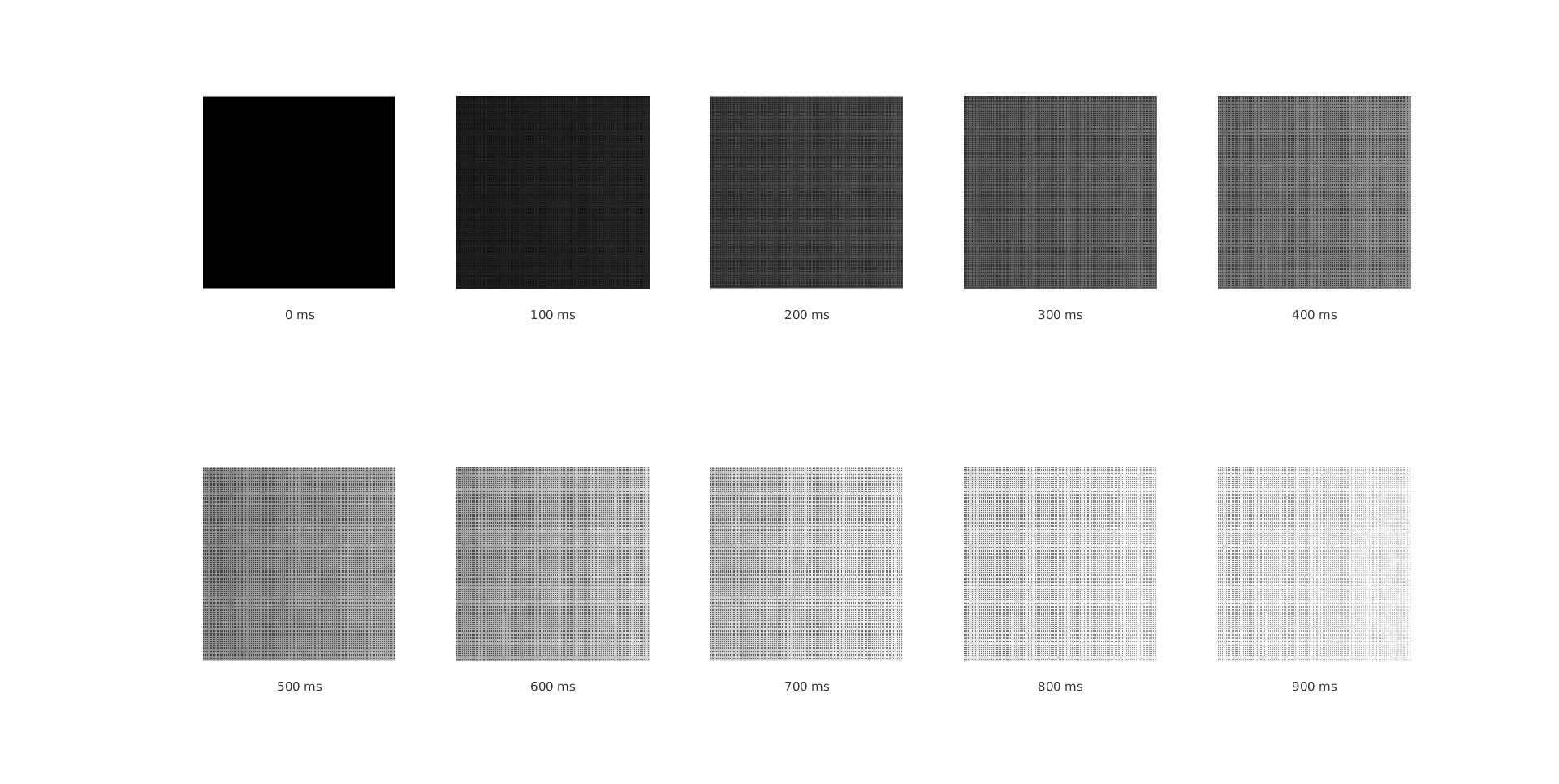

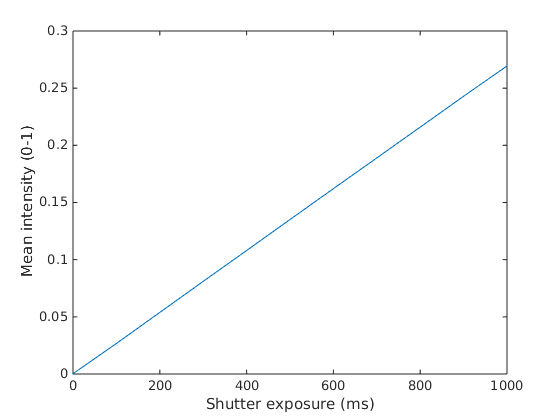

I also did the same experiment with natural light from a window in the lab:

As you can see, it still behaves linearly despite the noise, until, of course, saturation sets in for pixels in part of the ROI (at exposures of 800 ms and above).

I also have more results to share; I will post them once I find some time.

Thanks again to both @LBerger and @pklab :-).
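
Since the ROI mean stops following the exposure time once some of its pixels clip, it can help to flag saturated pixels before averaging. The sketch below is an assumption-laden illustration: `saturated_fraction` is a hypothetical helper, and the white level of 65535 is only a guess for a 16-bit container (the real value for a given camera is recorded in the DNG `WhiteLevel` tag and may be lower).

```python
import numpy as np

def saturated_fraction(roi, white_level=65535, margin=0.98):
    """Fraction of ROI pixels at or above margin * white_level.

    white_level and margin are assumptions; take the actual white level
    from the raw file's metadata when it is available.
    """
    roi = np.asarray(roi)
    return float(np.mean(roi >= margin * white_level))

# Synthetic 2x2 ROI (NOT data from the post): two of four pixels are clipped.
roi = np.array([[100, 65535],
                [65000, 200]], dtype=np.uint16)
frac = saturated_fraction(roi)
print(frac)  # 0.5
```

Exposures where this fraction is above zero can then be left out of the linearity plot.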

| 2 | No.2 Revision |

Update:

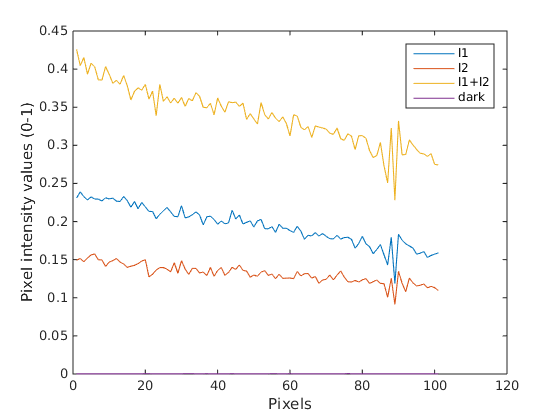

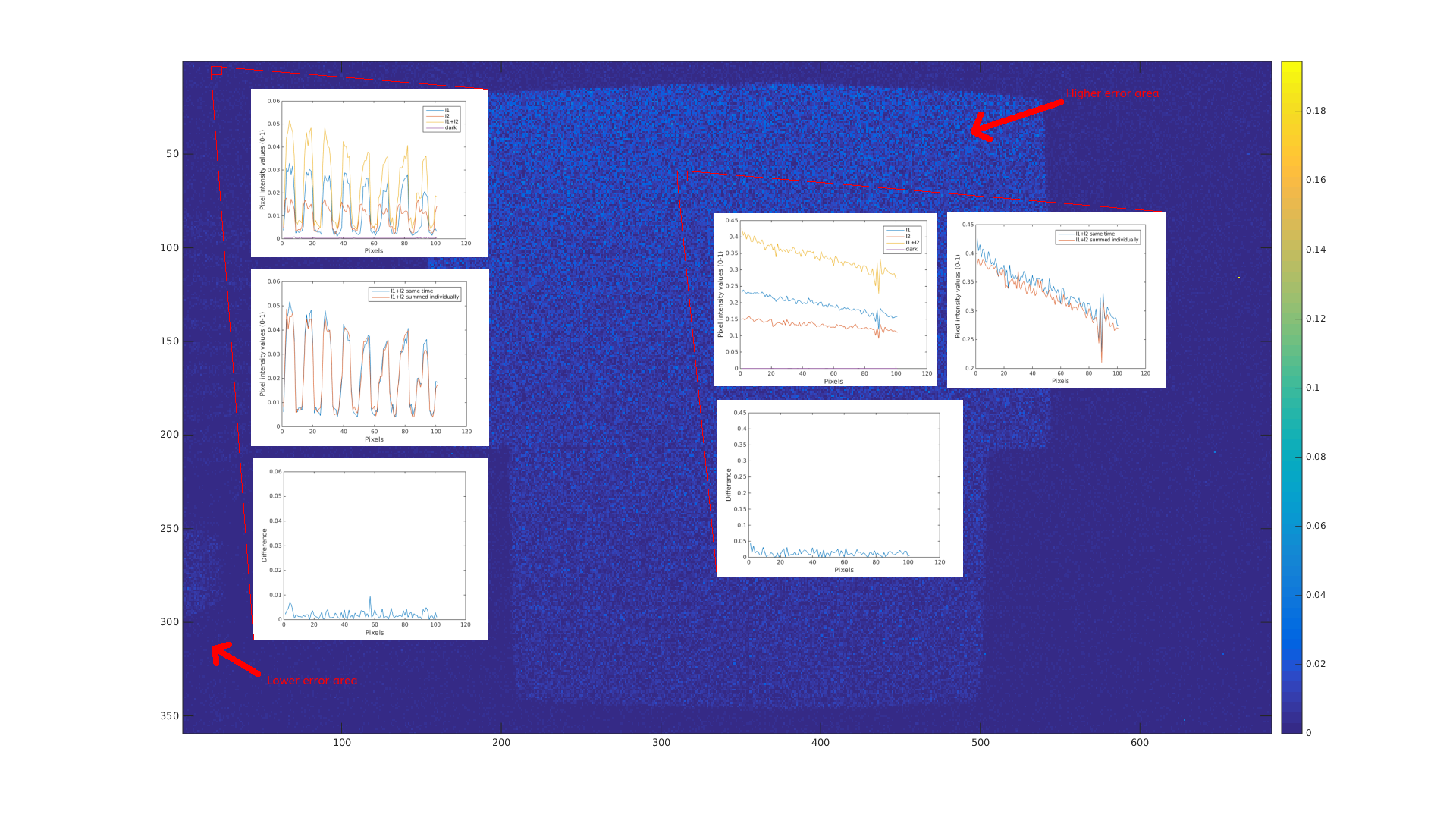

OK, let's share some more results from the experiments. Now that I am sure my sensor is linear, I repeated the experiment from my initial post: some frames in total darkness, some with only the first light source on, some with only the second light source on, and finally some with both lights switched on at the same time. In the ideal case, the pixel intensities with both light sources on (L12) should equal the sum of the intensities under each individual source (L1 and L2):

L12 = L1 + L2
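
A minimal sketch of this additivity check per pixel, assuming NumPy and assuming that the dark frames are used to subtract a constant offset first. Whether the frames in this post already had the dark level removed is not stated, so treat the subtraction as an assumption; the function name `additivity_residual` and the tiny 2x2 arrays are invented for illustration.

```python
import numpy as np

def additivity_residual(l1, l2, l12, dark):
    """Return (L12 - D) - [(L1 - D) + (L2 - D)] per pixel, in raw counts."""
    l1, l2, l12, dark = (np.asarray(a, dtype=np.float64)
                         for a in (l1, l2, l12, dark))
    predicted = (l1 - dark) + (l2 - dark)   # ideal sum of the two lights
    measured = l12 - dark                   # both lights on
    return measured - predicted

# Synthetic, noise-free frames (NOT data from the post) with a dark level of 64.
dark = np.full((2, 2), 64.0)
l1 = dark + np.array([[100.0, 200.0], [300.0, 400.0]])
l2 = dark + np.array([[50.0, 60.0], [70.0, 80.0]])
l12 = dark + (l1 - dark) + (l2 - dark)

residual = additivity_residual(l1, l2, l12, dark)
print(residual)            # all zeros for this ideal data
print(l1 + l2 - l12)       # equals the dark level when it is not subtracted
```

The second print shows why the dark frame matters: a constant offset is counted twice in the plain sum L1 + L2 but only once in L12, so skipping the subtraction leaves a residual equal to the offset.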

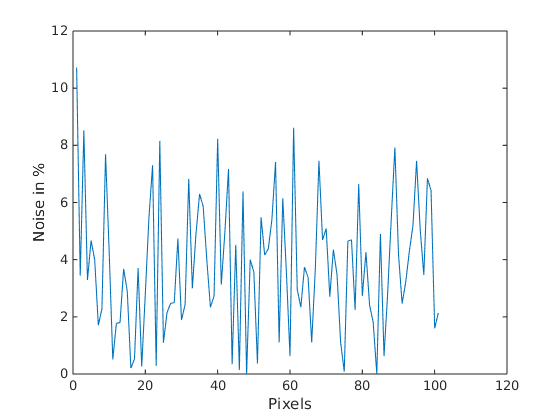

However, due to different types of noise (sensor noise, shot noise, thermal noise, etc.), inter-reflections and so on, some error is to be expected. Below is what I mean, for a ROI of 100 pixels:

Here are the pixel intensity values under each lighting condition:

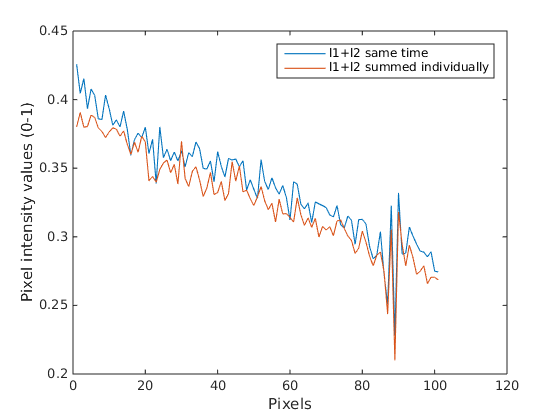

And here is the sum of the images taken under each individual light, compared with the image where both lights are on at the same time:

As you can see, the two diverge somewhat, which is easier to see in the difference between the two curves above:

Expressed as a percentage, the error reaches as much as 10% in some cases:

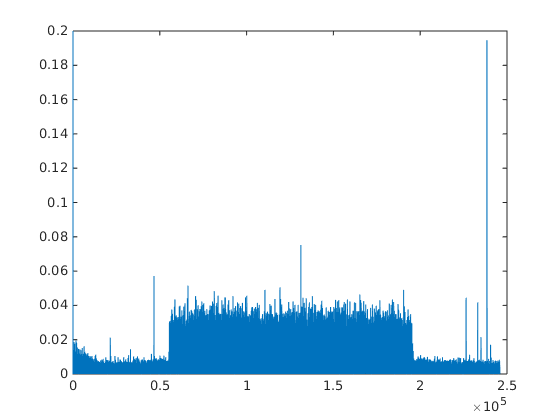

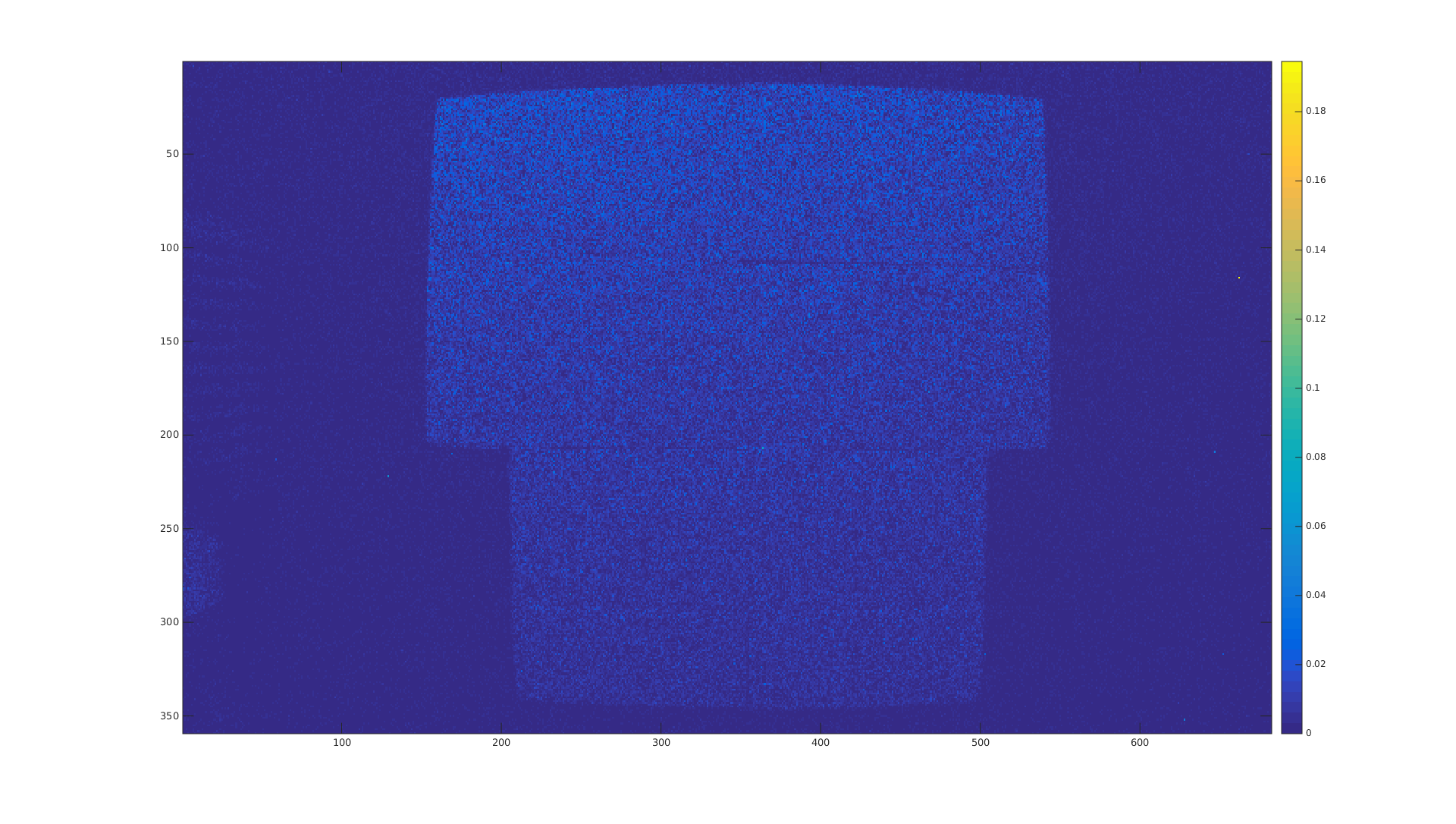

To be honest, this seems quite high to me, but I am not sure whether it really is. So I looked at how it behaves over the whole image:

As you can see, one part of the image has a higher error, and this is actually where a different object sits; that object is also brighter than the rest of the scene, which is darker (the isolated spikes are hot pixels, so don't worry about them). This can be seen better in the following heatmap:

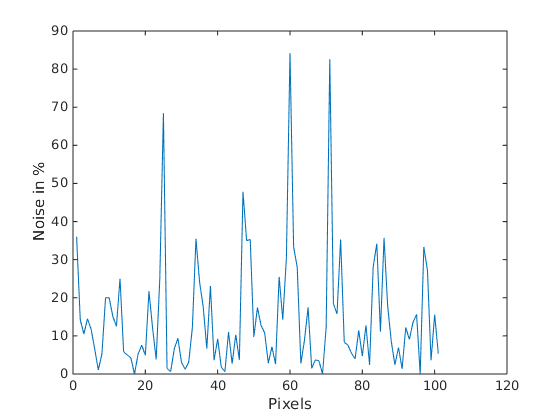

So I calculated the noise in the darker part:

and I noticed that there the noise reaches as much as 80%?!
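
One thing worth keeping in mind when reading such percentages: a relative error divides by the signal, so the same deviation in raw counts looks much larger on a dark pixel than on a bright one. The sketch below only illustrates that arithmetic with invented numbers; it is not a diagnosis of the images in this post. `percent_error` and `min_signal` are hypothetical names, and the dark frame is set to zero here for simplicity.

```python
import numpy as np

def percent_error(l1, l2, l12, dark, min_signal=1.0):
    """|L12 - (L1 + L2)| as a percentage of dark-subtracted L12.

    Pixels whose dark-subtracted signal is below min_signal are returned
    as NaN instead of dividing by a value close to zero.
    """
    l1, l2, l12, dark = (np.asarray(a, dtype=np.float64)
                         for a in (l1, l2, l12, dark))
    measured = l12 - dark
    predicted = (l1 - dark) + (l2 - dark)
    out = np.full(measured.shape, np.nan)
    valid = measured >= min_signal
    out[valid] = (100.0 * np.abs(measured[valid] - predicted[valid])
                  / measured[valid])
    return out

# Synthetic example (NOT data from the post): the same deviation of
# 8 counts on one bright pixel and one dark pixel.
dark = np.array([0.0, 0.0])
l1 = np.array([500.0, 5.0])
l2 = np.array([500.0, 5.0])
l12 = np.array([1008.0, 18.0])

err = percent_error(l1, l2, l12, dark)
print(err)   # under 1 % on the bright pixel, over 40 % on the dark one
```

Comparing the error in raw counts next to the percentage can show whether the darker region is really noisier or whether the small denominator is inflating the number.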

So my question is: how can this be happening, or am I missing something? Is this much noise plausible, and is there a way to isolate its source? At the moment I can only guess.

What do you think? @pklab, @LBerger, any ideas?