This forum is disabled, please visit https://forum.opencv.org

| 1 | initial version |

ok I think I am ready to provide some solutions. So, let's say that we have the following image as input:

The image bellow is converted to uchar values for visualization, so have in mind that. In order to get the correct result you should load the actual matrix with the depth values. You can still apply the solution on this image but the output will not be that smooth.

Following instructions from this SO thread mentioned in the comments above as well. It is a kind of shortcut to cross production method.

if(depth.type() != CV_32FC1)

depth.convertTo(depth, CV_32FC1);

Mat normals(depth.size(), CV_32FC3);

for(int x = 0; x < depth.rows; ++x)

{

for(int y = 0; y < depth.cols; ++y)

{

// use float instead of double otherwise you will not get the correct result

// check my updates in the original post. I have not figure out yet why this

// is happening.

float dzdx = (depth.at<float>(x+1, y) - depth.at<float>(x-1, y)) / 2.0;

float dzdy = (depth.at<float>(x, y+1) - depth.at<float>(x, y-1)) / 2.0;

Vec3f d(-dzdx, -dzdy, 1.0f);

Vec3f n = normalize(d);

normals.at<Vec3f>(x, y) = n;

}

}

imshow("normals", normals);

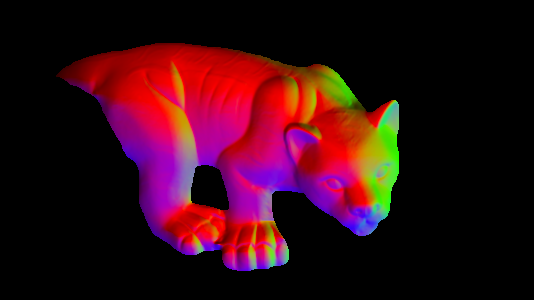

Outuput:

Applying the actual cross production explicitly. It is based on @berak's suggestion/comment and provided solution from above. (@berak if you have any problem with it or you do not feel confident having your solution here just tell me to remove it, no problem with it).

if(depth.type() != CV_32FC1)

depth.convertTo(depth, CV_32FC1);

Mat normals(depth.size(), CV_32FC3);

for(int x = 1; x < depth.cols - 1; ++x)

{

for(int y = 1; y < depth.rows - 1; ++y)

{

// 3d pixels, think (x,y, depth)

/* * * * *

* * t * *

* l c * *

* * * * */

Vec3f t(x,y-1,depth.at<float>(y-1, x));

Vec3f l(x-1,y,depth.at<float>(y, x-1));

Vec3f c(x,y,depth.at<float>(y, x));

Vec3f d = (l-c).cross(t-c);

Vec3f n = normalize(d);

nor.at<Vec3f>(y,x) = n;

}

}

imshow("explicitly cross_product normals", normals);

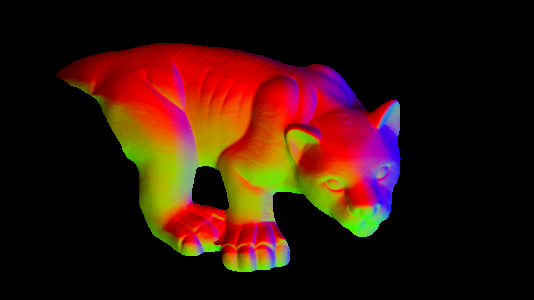

Output:

For some reason the output slightly differs, I do not really understand yet why but I am looking on it.

A convolution based approach. Based on this paper where the method is described in the supplemental work (1st paragraph).

if(depth.type() != CV_32FC1)

depth.convertTo(depth, CV_32FC1);

// filters

Mat_<float> f1 = (Mat_<float>(3, 3) << 1, 2, 1,

0, 0, 0,

-1, -2, -1) / 8;

Mat_<float> f2 = (Mat_<float>(3, 3) << 1, 0, -1,

2, 0, -2,

1, 0, -1) / 8;

/* Other filters that could be used:

% f1 = [0, 0, 0;

% 0, 1, 1;

% 0,-1,-1]/4;

%

% f2 = [0, 0, 0;

% 0, 1,-1;

% 0, 1,-1]/4;

or

% f1 = [0, 1, 0;

% 0, 0, 0;

% 0,-1, 0]/2;

%

% f2 = [0, 0, 0;

% 1, 0, -1;

% 0, 0, 0]/2;

*/

Mat f1m, f2m;

flip(f1, f1m, 0);

flip(f2, f2m, 1);

Mat n1, n2;

filter2D(depth, n1, -1, f1m, Point(-1, -1), 0, BORDER_CONSTANT);

filter2D(depth, n2, -1, f2m, Point(-1, -1), 0, BORDER_CONSTANT);

n1 *= -1;

n2 *= -1;

Mat temp = n1.mul(n1) + n2.mul(n2) + 1;

cv::sqrt(temp, temp);

Mat N3 = 1 / temp;

Mat N1 = n1.mul(N3);

Mat N2 = n2.mul(N3);

vector<Mat> N;

N.push_back(N1);

N.push_back(N2);

N.push_back(N3);

Mat surface_normals;

merge(N, surface_normals);

imshow("convolution_based_normals", surface_normals);

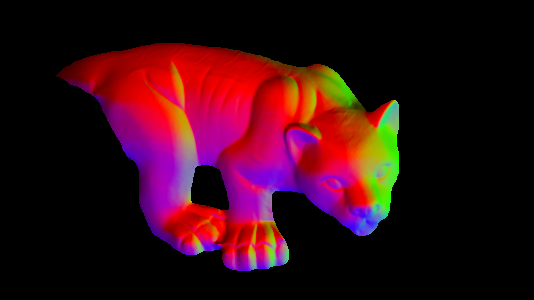

Output:

as we can see result is the same as in Solution 1. We should be getting the same result in Solution 2 but apparently something is missing (working on it).