
| 1 | initial version |

An example in C++ that can easily ported in python is the following:

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main()

{

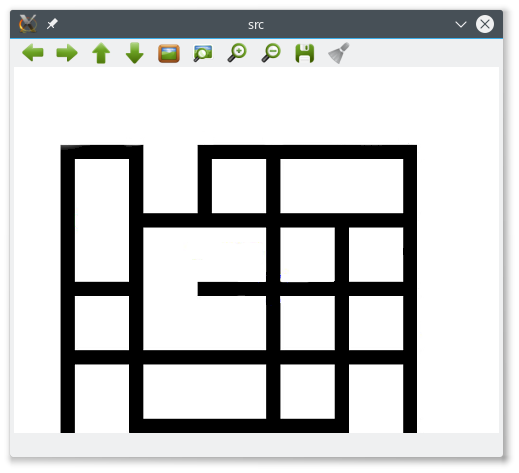

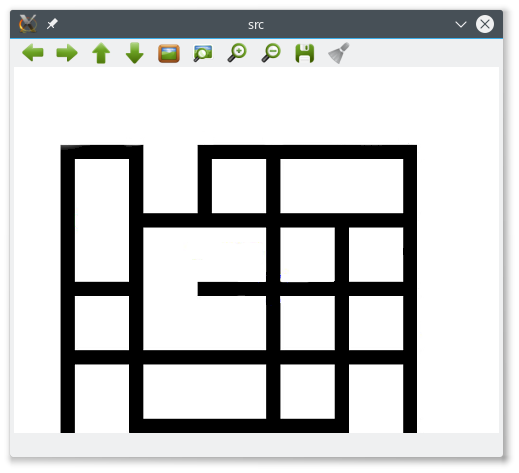

// Load source image

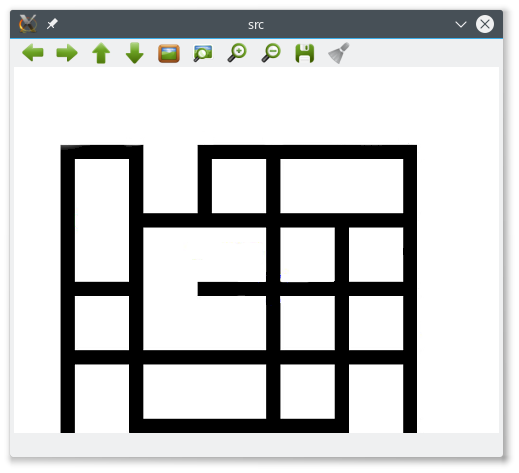

string filename = "nodes.bmp";

Mat src = imread(filename);

// Check if image is loaded fine

if(!src.data)

cerr << "Problem loading image!!!" << endl;

// Show source image

imshow("src", src);

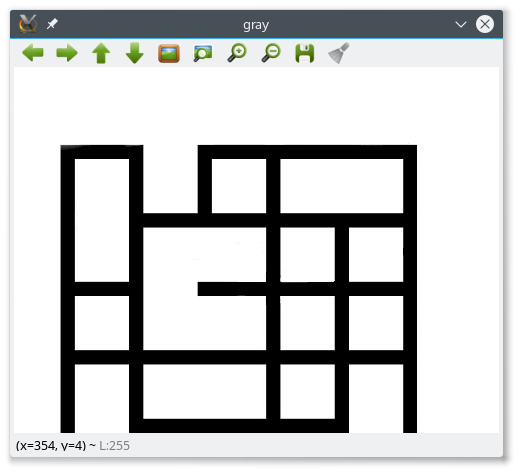

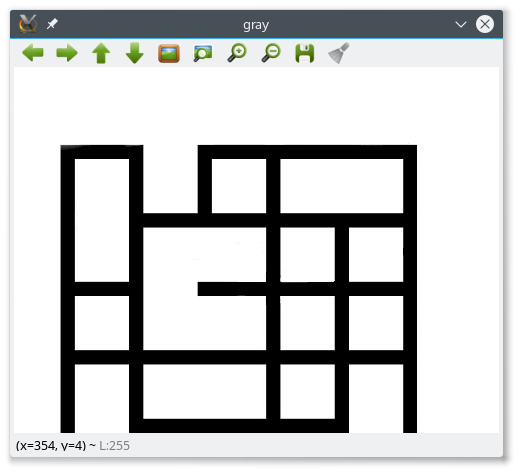

// Transform source image to gray if it is not

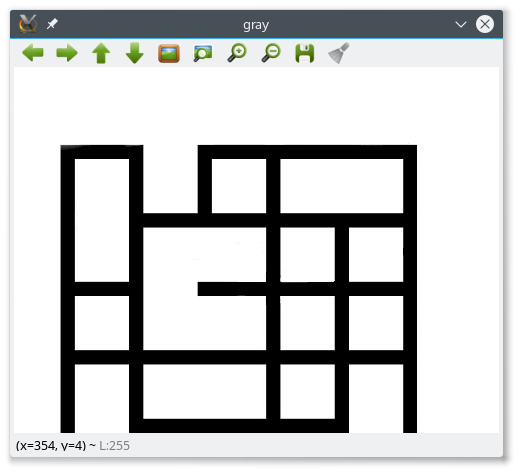

Mat gray;

if (src.channels() == 3)

{

cvtColor(src, gray, CV_BGR2GRAY);

}

else

{

gray = src;

}

// Show gray image

imshow("gray", gray);

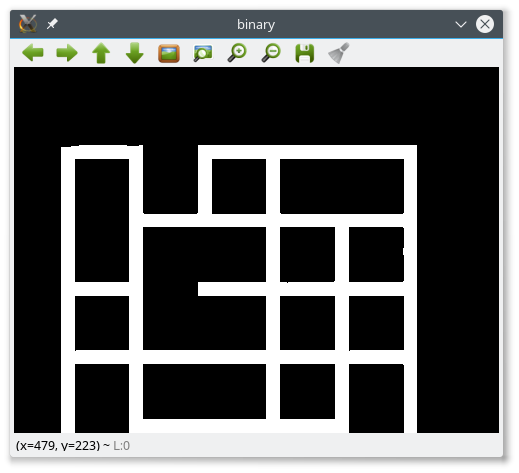

// Apply threshold to grayscale image

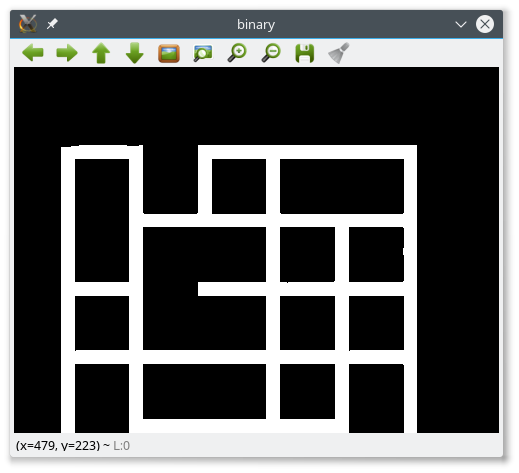

Mat bw;

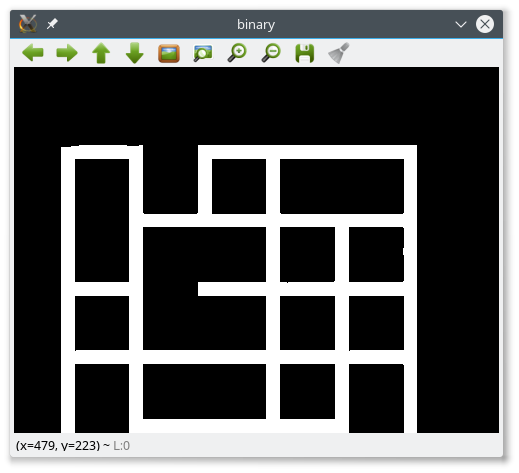

threshold(gray, bw, 50, 255, THRESH_BINARY || THRESH_OTSU);

// Show binary image

imshow("binary", bw);

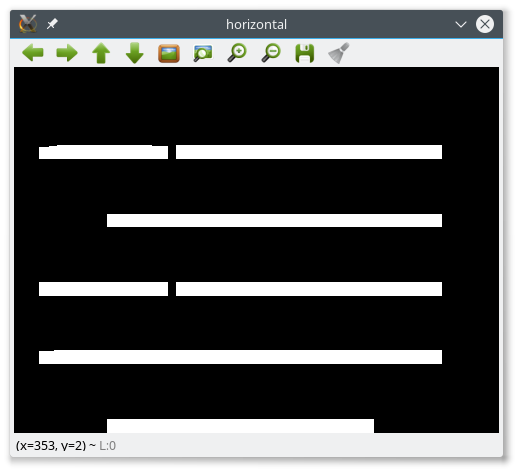

// Create the images that will use to extract the horizonta and vertical lines

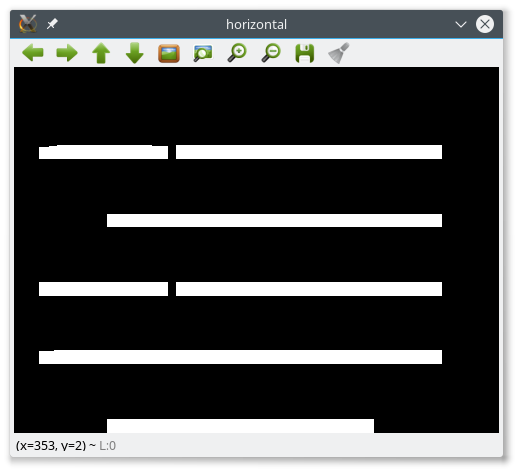

Mat horizontal = bw.clone();

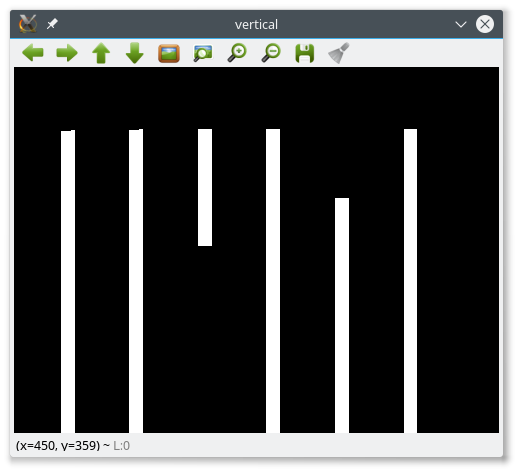

Mat vertical = bw.clone();

// Specify size on horizontal axis

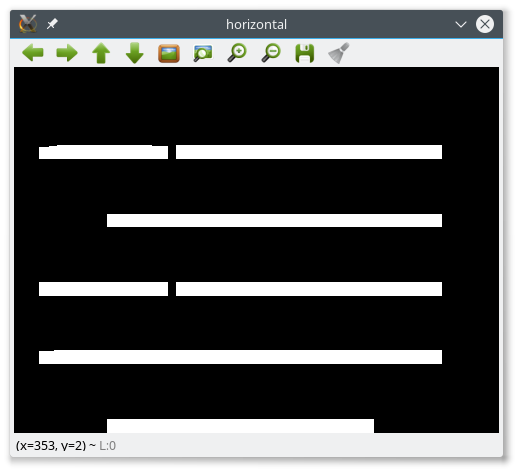

int horizontalsize = horizontal.cols / 10;

// Create structure element for extracting horizontal lines through morphology operations

Mat horizontalStructure = getStructuringElement(MORPH_RECT, Size(horizontalsize,1));

// Apply morphology operations

erode(horizontal, horizontal, horizontalStructure, Point(-1, -1));

dilate(horizontal, horizontal, horizontalStructure, Point(-1, -1));

dilate(horizontal, horizontal, horizontalStructure, Point(-1, -1)); // expand horizontal lines

// Show extracted horizontal lines

imshow("horizontal", horizontal);

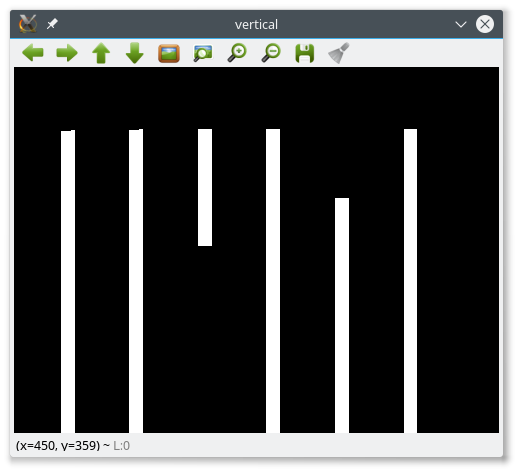

// Specify size on vertical axis

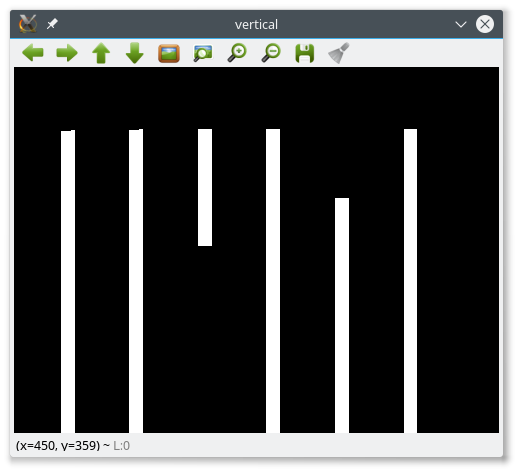

int verticalsize = vertical.rows / 10;

// Create structure element for extracting vertical lines through morphology operations

Mat verticalStructure = getStructuringElement(MORPH_RECT, Size( 1,verticalsize));

// Apply morphology operations

erode(vertical, vertical, verticalStructure, Point(-1, -1));

dilate(vertical, vertical, verticalStructure, Point(-1, -1));

dilate(vertical, vertical, verticalStructure, Point(-1, -1)); // expand vertical lines

// Show extracted vertical lines

imshow("vertical", vertical);

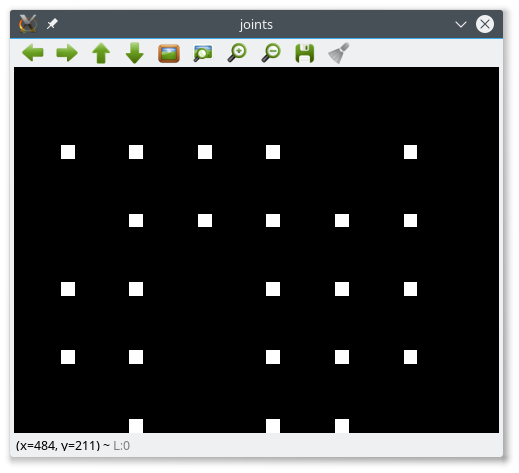

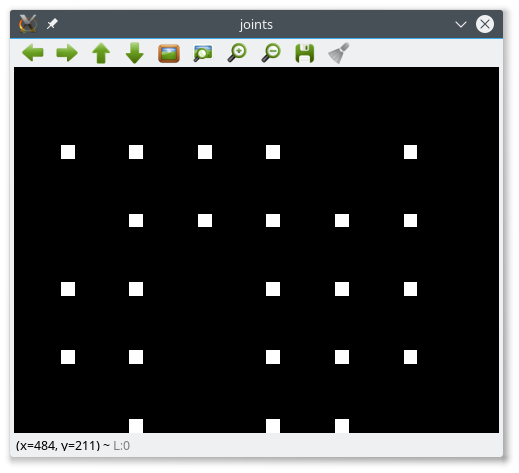

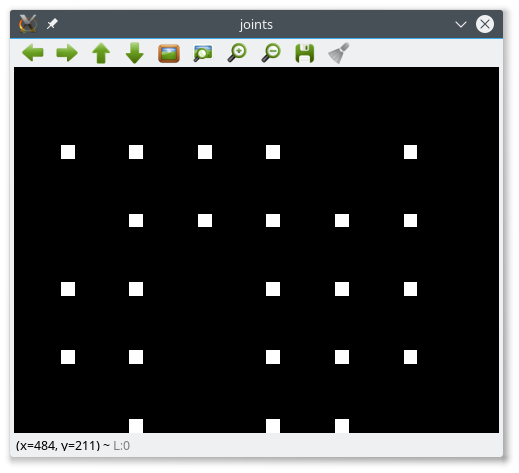

Mat joints;

bitwise_and(horizontal, vertical, joints);

imshow("joints", joints);

vector<vector<Point> > contours;

findContours(joints, contours, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

/// Get the moments

vector<Moments> mu(contours.size() );

for( size_t i = 0; i < contours.size(); i++ )

{ mu[i] = moments( contours[i], false ); }

/// Get the mass centers:

vector<Point2f> mc( contours.size() );

for( size_t i = 0; i < contours.size(); i++ )

{ mc[i] = Point2f( mu[i].m10/mu[i].m00 , mu[i].m01/mu[i].m00 ); }

struct Less

{

bool operator () (const Point2f &a, const Point2f &b)

{

return (a.x + a.y*10000) < (b.x + b.y*10000);

}

};

std::sort(mc.begin(), mc.end(), Less());

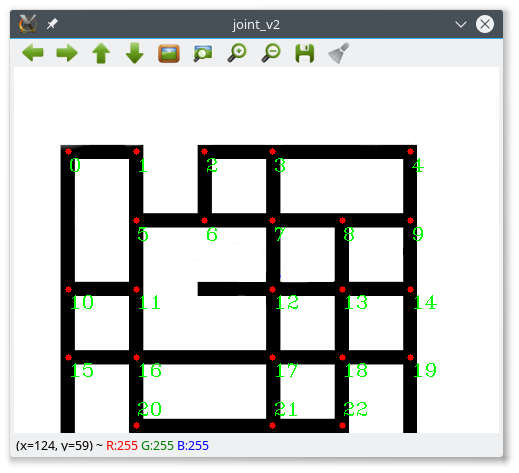

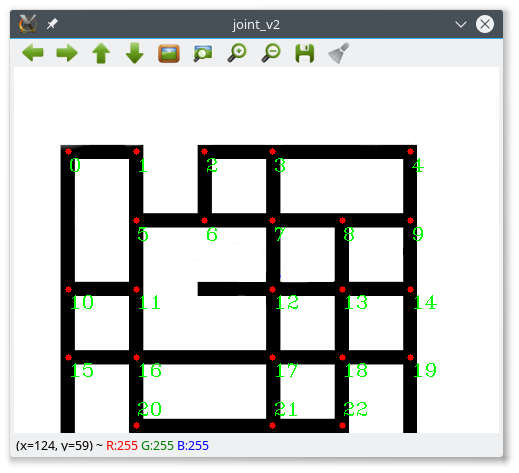

for( size_t i = 0; i< contours.size(); i++ )

{

string text = to_string(static_cast<int>(i));

Point origin = Point(mc[i].x + 1, mc[i].y + 20);

if((mc[i].x + 20) > src.cols/* && mc[i].y < src.rows*/)

origin = Point(mc[i].x - 25, mc[i].y + 22);

if ((mc[i].y + 20) > src.rows/* && mc[i].x < src.cols*/)

origin = Point(mc[i].x + 1, mc[i].y - 10);

if (((mc[i].x + 20) > src.cols) && ((mc[i].y + 20) > src.rows))

origin = Point(mc[i].x - 27, mc[i].y - 10);

putText(src, text, origin, FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar( 0, 255, 0 ));

circle( src, mc[i], 3, Scalar( 0, 0, 255 ), -1, 8, 0 );

}

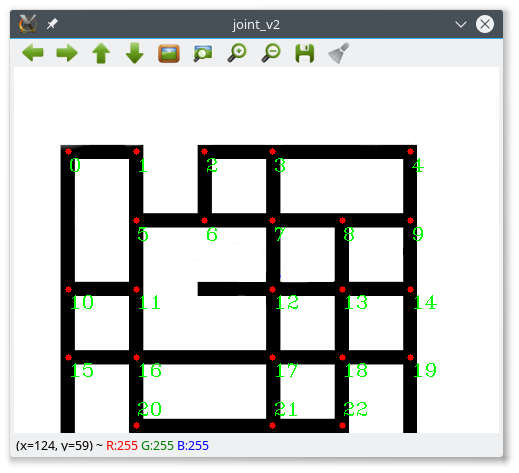

imshow("joint_v2", src);

waitKey(0);

return 0;

}

if you also want to find the joints in the spots where the horizontal and vertical lines are not cross each other then you can make a small trick by expanding the lines in the exported horizontal and vertical images, in such a way that they finally every vertical lines crosses the corresponding horizontal line. As I said it should be quite straight forward to port the above code to python. Moreover, some optimization might be needed. Enjoy!!!

| 2 | No.2 Revision |

An example in C++ that can easily ported in python is the following:following (bear in mind that there are quite some techniques that you could follow, such as to apply a skeletonize algorithm first, etc...):

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int main()

{

// Load source image

string filename = "nodes.bmp";

Mat src = imread(filename);

// Check if image is loaded fine

if(!src.data)

cerr << "Problem loading image!!!" << endl;

// Show source image

imshow("src", src);

// Transform source image to gray if it is not

Mat gray;

if (src.channels() == 3)

{

cvtColor(src, gray, CV_BGR2GRAY);

}

else

{

gray = src;

}

// Show gray image

imshow("gray", gray);

// Apply threshold to grayscale image

Mat bw;

threshold(gray, bw, 50, 255, THRESH_BINARY || THRESH_OTSU);

// Show binary image

imshow("binary", bw);

// Create the images that will use to extract the horizonta and vertical lines

Mat horizontal = bw.clone();

Mat vertical = bw.clone();

// Specify size on horizontal axis

int horizontalsize = horizontal.cols / 10;

// Create structure element for extracting horizontal lines through morphology operations

Mat horizontalStructure = getStructuringElement(MORPH_RECT, Size(horizontalsize,1));

// Apply morphology operations

erode(horizontal, horizontal, horizontalStructure, Point(-1, -1));

dilate(horizontal, horizontal, horizontalStructure, Point(-1, -1));

dilate(horizontal, horizontal, horizontalStructure, Point(-1, -1)); // expand horizontal lines

// Show extracted horizontal lines

imshow("horizontal", horizontal);

// Specify size on vertical axis

int verticalsize = vertical.rows / 10;

// Create structure element for extracting vertical lines through morphology operations

Mat verticalStructure = getStructuringElement(MORPH_RECT, Size( 1,verticalsize));

// Apply morphology operations

erode(vertical, vertical, verticalStructure, Point(-1, -1));

dilate(vertical, vertical, verticalStructure, Point(-1, -1));

dilate(vertical, vertical, verticalStructure, Point(-1, -1)); // expand vertical lines

// Show extracted vertical lines

imshow("vertical", vertical);

Mat joints;

bitwise_and(horizontal, vertical, joints);

imshow("joints", joints);

vector<vector<Point> > contours;

findContours(joints, contours, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

/// Get the moments

vector<Moments> mu(contours.size() );

for( size_t i = 0; i < contours.size(); i++ )

{ mu[i] = moments( contours[i], false ); }

/// Get the mass centers:

vector<Point2f> mc( contours.size() );

for( size_t i = 0; i < contours.size(); i++ )

{ mc[i] = Point2f( mu[i].m10/mu[i].m00 , mu[i].m01/mu[i].m00 ); }

struct Less

{

bool operator () (const Point2f &a, const Point2f &b)

{

return (a.x + a.y*10000) < (b.x + b.y*10000);

}

};

std::sort(mc.begin(), mc.end(), Less());

for( size_t i = 0; i< contours.size(); i++ )

{

string text = to_string(static_cast<int>(i));

Point origin = Point(mc[i].x + 1, mc[i].y + 20);

if((mc[i].x + 20) > src.cols/* && mc[i].y < src.rows*/)

origin = Point(mc[i].x - 25, mc[i].y + 22);

if ((mc[i].y + 20) > src.rows/* && mc[i].x < src.cols*/)

origin = Point(mc[i].x + 1, mc[i].y - 10);

if (((mc[i].x + 20) > src.cols) && ((mc[i].y + 20) > src.rows))

origin = Point(mc[i].x - 27, mc[i].y - 10);

putText(src, text, origin, FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar( 0, 255, 0 ));

circle( src, mc[i], 3, Scalar( 0, 0, 255 ), -1, 8, 0 );

}

imshow("joint_v2", src);

waitKey(0);

return 0;

}

if you also want to find the joints in the spots where the horizontal and vertical lines are not cross each other then you can make a small trick by expanding the lines in the exported horizontal and vertical images, in such a way that they finally every vertical lines crosses the corresponding horizontal line. As I said it should be quite straight forward to port the above code to python. Moreover, some optimization might be needed. Enjoy!!!

| 3 | No.3 Revision |

An example in C++ that can easily be ported to Python is the following (bear in mind that there are several other techniques you could follow, such as applying a skeletonization algorithm first):

```cpp
#include <algorithm>
#include <iostream>
#include <string>
#include <vector>

#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int main()
{
    // Load source image
    string filename = "nodes.bmp";
    Mat src = imread(filename);

    // Check if image is loaded fine; stop instead of working on an empty image
    if (src.empty())
    {
        cerr << "Problem loading image!!!" << endl;
        return -1;
    }

    // Show source image
    imshow("src", src);

    // Transform source image to gray if it is not
    Mat gray;
    if (src.channels() == 3)
    {
        cvtColor(src, gray, COLOR_BGR2GRAY);
    }
    else
    {
        gray = src;
    }

    // Show gray image
    imshow("gray", gray);

    // Apply threshold to grayscale image.
    // Flags must be combined with bitwise '|', not logical '||':
    // THRESH_BINARY || THRESH_OTSU evaluates to 1, i.e. THRESH_BINARY_INV without Otsu.
    // The morphology below needs white lines on black, so this assumes dark lines on a
    // light background (use THRESH_BINARY for bright lines). With THRESH_OTSU the 50 is ignored.
    Mat bw;
    threshold(gray, bw, 50, 255, THRESH_BINARY_INV | THRESH_OTSU);

    // Show binary image
    imshow("binary", bw);

    // Create the images that will be used to extract the horizontal and vertical lines
    Mat horizontal = bw.clone();
    Mat vertical = bw.clone();

    // Specify size on horizontal axis
    int horizontalsize = horizontal.cols / 10;

    // Create structuring element for extracting horizontal lines through morphology operations
    Mat horizontalStructure = getStructuringElement(MORPH_RECT, Size(horizontalsize, 1));

    // Apply morphology operations (erode + dilate keeps only long horizontal runs)
    erode(horizontal, horizontal, horizontalStructure, Point(-1, -1));
    dilate(horizontal, horizontal, horizontalStructure, Point(-1, -1));

    // Expand horizontal lines; 500 is an arbitrary value, choose whatever suits your image
    horizontalStructure = getStructuringElement(MORPH_RECT, Size(500, 1));
    dilate(horizontal, horizontal, horizontalStructure, Point(-1, -1));

    // Show extracted horizontal lines
    imshow("horizontal", horizontal);

    // Specify size on vertical axis
    int verticalsize = vertical.rows / 10;

    // Create structuring element for extracting vertical lines through morphology operations
    Mat verticalStructure = getStructuringElement(MORPH_RECT, Size(1, verticalsize));

    // Apply morphology operations (erode + dilate keeps only long vertical runs)
    erode(vertical, vertical, verticalStructure, Point(-1, -1));
    dilate(vertical, vertical, verticalStructure, Point(-1, -1));

    // Expand vertical lines; the same applies to the value 500 here as above
    verticalStructure = getStructuringElement(MORPH_RECT, Size(1, 500));
    dilate(vertical, vertical, verticalStructure, Point(-1, -1));

    // Show extracted vertical lines
    imshow("vertical", vertical);

    // The joints are where the horizontal and vertical lines overlap
    Mat joints;
    bitwise_and(horizontal, vertical, joints);
    imshow("joints", joints);

    vector<vector<Point> > contours;
    findContours(joints, contours, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

    /// Get the mass center of each joint from its moments
    vector<Point2f> mc;
    for (size_t i = 0; i < contours.size(); i++)
    {
        Moments mu = moments(contours[i], false);
        if (mu.m00 == 0) // skip zero-area contours to avoid dividing by zero
            continue;
        mc.push_back(Point2f(mu.m10 / mu.m00, mu.m01 / mu.m00));
    }

    // Sort the centers top to bottom, then left to right
    // (left to right only holds if the centers of a row share the same y)
    struct Less
    {
        bool operator()(const Point2f &a, const Point2f &b) const
        {
            return (a.x + a.y * 10000) < (b.x + b.y * 10000);
        }
    };
    std::sort(mc.begin(), mc.end(), Less());

    // Label each joint with its index and mark its center
    for (size_t i = 0; i < mc.size(); i++)
    {
        string text = to_string(static_cast<int>(i));

        // Put the label next to the center, shifting it inwards near the right/bottom border
        Point origin = Point(mc[i].x + 1, mc[i].y + 20);
        if ((mc[i].x + 20) > src.cols)
            origin = Point(mc[i].x - 25, mc[i].y + 22);
        if ((mc[i].y + 20) > src.rows)
            origin = Point(mc[i].x + 1, mc[i].y - 10);
        if (((mc[i].x + 20) > src.cols) && ((mc[i].y + 20) > src.rows))
            origin = Point(mc[i].x - 27, mc[i].y - 10);

        putText(src, text, origin, FONT_HERSHEY_COMPLEX_SMALL, 1, Scalar(0, 255, 0));
        circle(src, mc[i], 3, Scalar(0, 0, 255), -1, 8, 0);
    }

    imshow("joint_v2", src);
    waitKey(0);

    return 0;
}
```

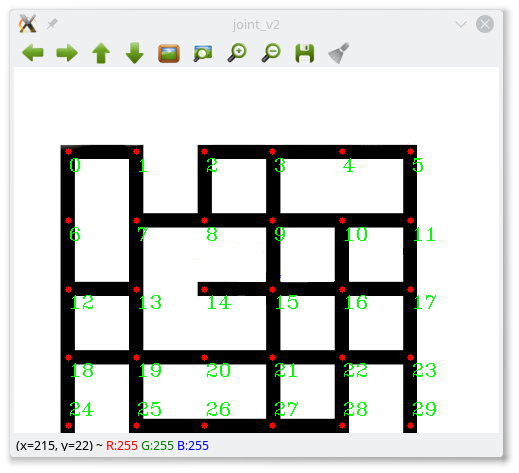
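The `Less` comparator in the code above sorts by `x + y*10000`, so a row's joints only come out left to right if their centers have (almost) exactly the same `y`; blob centroids usually differ by fractions of a pixel, and then the order within a row follows those small `y` differences instead of `x`. Below is a minimal, standard-library-only sketch of a more tolerant row-major sort. It assumes that grid rows are separated vertically by more than a chosen tolerance `rowTol`; `Pt`, `sortRowMajor` and `rowTol` are hypothetical names for illustration, not OpenCV API.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f so the sketch needs only the standard library.
struct Pt {
    float x;
    float y;
};

// Sort points row by row (top to bottom), then left to right within each row.
// Assumption: a point belongs to the current row if its y is at most rowTol
// pixels below the y of the row's first (topmost) point.
std::vector<Pt> sortRowMajor(std::vector<Pt> pts, float rowTol)
{
    // First order everything top to bottom.
    std::sort(pts.begin(), pts.end(),
              [](const Pt &a, const Pt &b) { return a.y < b.y; });

    std::vector<Pt> out;
    out.reserve(pts.size());
    std::size_t rowStart = 0;
    while (rowStart < pts.size()) {
        // Extend the current row while points stay within rowTol of its first y.
        std::size_t rowEnd = rowStart + 1;
        while (rowEnd < pts.size() && pts[rowEnd].y - pts[rowStart].y <= rowTol)
            ++rowEnd;

        // Within the row, order left to right and append to the result.
        std::sort(pts.begin() + rowStart, pts.begin() + rowEnd,
                  [](const Pt &a, const Pt &b) { return a.x < b.x; });
        out.insert(out.end(), pts.begin() + rowStart, pts.begin() + rowEnd);
        rowStart = rowEnd;
    }
    return out;
}
```

To use it in the program above you could copy `mc` into a `std::vector<Pt>` (or swap `Pt` for `cv::Point2f`, which also has float `x` and `y` members) and pick `rowTol` clearly smaller than the spacing between grid rows.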

Update

If you also want to find the joints at spots where the horizontal and vertical lines do not actually cross each other, you can use a small trick: expand the lines in the extracted horizontal and vertical images so that every vertical line eventually crosses the corresponding horizontal line (this is what the extra dilations with the 500-pixel structuring elements in the code above do). As I said, it should be quite straightforward to port the above code to Python, although some optimization might be needed. Enjoy!!!
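The line-expansion trick can be seen on a toy example without OpenCV. The following is a minimal sketch using only the standard library on a small binary grid (1 = line pixel), assuming odd kernel lengths; `Grid`, `dilateRows`, `dilateCols`, `bitwiseAnd` and `countOnes` are hypothetical helpers that mimic, in simplified form, what `dilate` with a centered 1 x k or k x 1 `MORPH_RECT` element and `bitwise_and` do. A horizontal segment that stops short of a vertical line gives an empty AND; after dilating each mask along its own axis, the two overlap at the joint.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

using Grid = std::vector<std::vector<std::uint8_t>>; // 1 = line pixel, 0 = background

// Dilate along each row with a centered 1 x k rectangle (k odd): a pixel becomes 1
// if any pixel within k/2 columns on the same row is 1.
Grid dilateRows(const Grid &g, int k)
{
    Grid out = g;
    const int r = k / 2;
    for (std::size_t y = 0; y < g.size(); ++y) {
        const int w = static_cast<int>(g[y].size());
        for (int x = 0; x < w; ++x) {
            std::uint8_t v = 0;
            for (int dx = -r; dx <= r && !v; ++dx) {
                const int xx = x + dx;
                if (xx >= 0 && xx < w)
                    v = g[y][xx];
            }
            out[y][x] = v;
        }
    }
    return out;
}

// Swap rows and columns so the row dilation can be reused for columns.
Grid transpose(const Grid &g)
{
    Grid t(g.empty() ? 0 : g[0].size(), std::vector<std::uint8_t>(g.size()));
    for (std::size_t y = 0; y < g.size(); ++y)
        for (std::size_t x = 0; x < g[y].size(); ++x)
            t[x][y] = g[y][x];
    return t;
}

// Dilate along each column with a centered k x 1 rectangle (k odd).
Grid dilateCols(const Grid &g, int k) { return transpose(dilateRows(transpose(g), k)); }

// Pixel-wise AND of two equally sized masks: the candidate joints.
Grid bitwiseAnd(const Grid &a, const Grid &b)
{
    Grid out = a;
    for (std::size_t y = 0; y < a.size(); ++y)
        for (std::size_t x = 0; x < a[y].size(); ++x)
            out[y][x] = a[y][x] & b[y][x];
    return out;
}

// Number of set pixels in a mask.
int countOnes(const Grid &g)
{
    int n = 0;
    for (const auto &row : g)
        for (std::uint8_t p : row)
            n += p;
    return n;
}
```

For example, with a 7 x 7 grid holding a horizontal segment in row 3, columns 0 to 2, and a full vertical line in column 5, the plain AND is empty, while `bitwiseAnd(dilateRows(h, 7), dilateCols(v, 7))` has exactly one pixel set, at row 3, column 5. Here `k = 7` plays the role of the arbitrary 500 in the OpenCV code: it only has to bridge the largest gap between a line's end and the line it should meet. Keep in mind that the dilation also extends lines past genuine ends, so a line that is meant to stop short of another one can produce a spurious joint.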