I tried this C++ code and adapted it to Java as shown below. I removed some lines that were not needed, so what follows is the final Java version. I used an *.mp4 file, but I resize each frame before processing, because above 640x480 the processing is too slow. I prefer to resize the frame dynamically to 320x240, or at most 640x480, before processing:

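```java
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;

import org.opencv.core.Core;
import org.opencv.core.CvType;
import org.opencv.core.Mat;
import org.opencv.core.MatOfPoint;
import org.opencv.core.MatOfRect;
import org.opencv.core.Point;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.highgui.VideoCapture; // OpenCV 2.4.x; moved to org.opencv.videoio in 3.x
import org.opencv.imgproc.Imgproc;
import org.opencv.objdetect.CascadeClassifier;
import org.opencv.video.BackgroundSubtractorMOG2;

// Fields...
Mat fg;
Mat blurred;
Mat thresholded;
Mat gray;
Mat blob;
Mat bgmodel; // Not used in this snippet
BackgroundSubtractorMOG2 bgs;
List<MatOfPoint> contours = new ArrayList<MatOfPoint>();
CascadeClassifier body;
```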

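```java
// Initializer part (you may put it in a private method and call it from the constructor)...
// System.loadLibrary(Core.NATIVE_LIBRARY_NAME) must have been called once before this point.
bgs = new BackgroundSubtractorMOG2();
bgs.setInt("nmixtures", 3);        // Maximum number of Gaussian components per pixel
bgs.setInt("history", 1000);       // Length of the history used to learn the background
bgs.setInt("varThresholdGen", 15); // Squared Mahalanobis distance that decides whether a sample fits an existing component
//bgs.setBool("bShadowDetection", true); // This parameter does not exist in OpenCV 2.4.11
bgs.setInt("nShadowDetection", 0); // Mask value written for shadow pixels: 0 treats them as background
bgs.setDouble("fTau", 0.5);        // Shadow threshold: how much darker than the background a shadow may be

body = new CascadeClassifier("mapbase/hogcascade_pedestrians.xml"); // Standard sample pedestrian cascade file
if (body.empty()) {
    System.out.println("--(!)Error loading hogcascade file");
    return;
} else {
    System.out.println("Pedestrian classifier loaded up");
}
```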

```java
// Frame reader part...
// VideoCapture source: int 0 = default webcam, a file path, or "rtsp://IP:PORT/PATH" for an IP camera
VideoCapture cam = new VideoCapture("D:\\Sample_Videos\\pedestrian-much.mp4");
Mat cam_image = new Mat();
Mat resizeImage = new Mat();

if (cam.isOpened()) {
    Thread.sleep(500); // One-time delay that lets a webcam initialize itself (throws InterruptedException)
    while (true) {
        cam.read(cam_image);
        if (!cam_image.empty()) {
            // Thread.sleep(50); // If the computation is too heavy, you may give the CPU some time
            Imgproc.resize(cam_image, resizeImage, new Size(320, 240)); // Downscale before processing

            // Apply the classifier (faceDetector is the author's object that owns detect() below)
            Mat renderImage = faceDetector.detect(resizeImage);

            // Display the image (facePanel is the author's Swing panel, not shown here)
            BufferedImage img = facePanel.matToBufferedImage(renderImage);
            facePanel.repaint();
            renderImage.release();
        } else {
            System.out.println(" --(!) No captured frame from video source!");
            break;
        }
    }
}
cam.release(); // Release the video source
```
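The reader loop always resizes to a fixed 320x240, which distorts frames that are not 4:3. For the dynamic resizing mentioned at the start (320x240, or at most 640x480), one option is to compute a target size that fits inside a maximum box while keeping the aspect ratio. This is a minimal pure-Java sketch; the class `FrameSizing` and method `fitWithin` are hypothetical names, not part of OpenCV:

```java
import java.util.Arrays;

class FrameSizing {

    /**
     * Returns {width, height} for a frame scaled down so it fits inside
     * maxWidth x maxHeight while keeping its aspect ratio.
     * Frames that already fit are returned unchanged (never upscaled).
     */
    static int[] fitWithin(int width, int height, int maxWidth, int maxHeight) {
        if (width <= 0 || height <= 0) {
            throw new IllegalArgumentException("Frame size must be positive");
        }
        // The smaller ratio is the one that makes both sides fit; cap at 1.0 to avoid upscaling
        double scale = Math.min(1.0, Math.min((double) maxWidth / width, (double) maxHeight / height));
        int w = Math.max(1, (int) Math.round(width * scale));
        int h = Math.max(1, (int) Math.round(height * scale));
        return new int[] {w, h};
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(fitWithin(1920, 1080, 640, 480))); // [640, 360]
        System.out.println(Arrays.toString(fitWithin(1280, 960, 640, 480)));  // [640, 480]
        System.out.println(Arrays.toString(fitWithin(320, 240, 640, 480)));   // [320, 240]
    }
}
```

In the reader loop you could then replace the fixed `new Size(320, 240)` with something like `int[] sz = FrameSizing.fitWithin(cam_image.cols(), cam_image.rows(), 640, 480);` followed by `Imgproc.resize(cam_image, resizeImage, new Size(sz[0], sz[1]));`.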

```java
// Detect method, called once per frame from the read loop above
public Mat detect(Mat inputframe) {
    // inputframe has already been resized by the caller, so it is not resized again here

    Mat mRgba = new Mat();
    inputframe.copyTo(mRgba); // The frame read from the video source (camera, *.mp4, *.avi file etc.)

    blurred = new Mat();
    fg = new Mat();
    thresholded = new Mat();
    blob = new Mat();
    gray = new Mat();

    Imgproc.cvtColor(mRgba, gray, Imgproc.COLOR_BGR2GRAY); // Convert to grayscale before processing
    Imgproc.GaussianBlur(gray, blurred, new Size(3, 3), 0, 0, Imgproc.BORDER_DEFAULT); // Suppress pixel noise
    bgs.apply(blurred, fg); // Foreground mask: pixels that differ from the learned background
    Imgproc.threshold(fg, thresholded, 70.0, 255, Imgproc.THRESH_BINARY); // Binarize the mask

    Mat elementCLOSE = new Mat(5, 5, CvType.CV_8U, new Scalar(255, 255, 255));
    // Closing fills small gaps so one moving body becomes one connected region
    Imgproc.morphologyEx(thresholded, thresholded, Imgproc.MORPH_CLOSE, elementCLOSE);

    Mat v = new Mat();
    // Outer outlines of the moving regions
    Imgproc.findContours(thresholded, contours, v, Imgproc.RETR_EXTERNAL, Imgproc.CHAIN_APPROX_SIMPLE);

    Imgproc.equalizeHist(gray, gray); // Balance the contrast before running the cascade

    int cmin = 500;   // Minimum contour area (pixels) considered a possible person
    int cmax = 80000; // Maximum contour area (pixels)

    //if (contours.size() >= 50) { // You may add a limit like this if you get too many contours
    //Imgproc.drawContours(mRgba, contours, -1, new Scalar(0, 0, 255), 2); // Optionally draw the moving regions in red

    // Run the cascade only if at least one moving region has a plausible size
    boolean motionInRange = false;
    for (MatOfPoint contour : contours) {
        double contourarea = Imgproc.contourArea(contour);
        if (contourarea > cmin && contourarea < cmax) {
            motionInRange = true;
            break;
        }
    }

    MatOfRect rects = new MatOfRect();
    if (motionInRange) {
        // detectMultiScale scans the whole frame, so one call per frame is enough
        body.detectMultiScale(gray, rects);
        for (Rect rc : rects.toArray()) {
            Core.rectangle(mRgba, new Point(rc.x, rc.y),
                    new Point(rc.x + rc.width, rc.y + rc.height),
                    new Scalar(0, 255, 0), 4); // Draw each detected body in green
        }
    }

    inputframe.release(); // Note: this releases the caller's resizeImage
    v.release();
    elementCLOSE.release();
    rects.release();
    releaseAll(); // Very important: free native memory every frame to avoid an OutOfMemoryError

    return mRgba;
}
```
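To see what the threshold and `MORPH_CLOSE` steps in `detect()` do to the MOG2 mask, here is a small pure-Java sketch that reimplements them on a toy `int[][]` mask, so it runs without OpenCV. It is an illustration only, not OpenCV's implementation: the class `BinaryClose` is hypothetical, it uses a 3x3 square kernel instead of the 5x5 one above (the toy mask is tiny), and pixels outside the image are simply skipped.

```java
import java.util.Arrays;

class BinaryClose {

    // Like Imgproc.THRESH_BINARY: maxVal where value > thresh, otherwise 0
    static int[][] threshold(int[][] src, int thresh, int maxVal) {
        int[][] dst = new int[src.length][];
        for (int y = 0; y < src.length; y++) {
            dst[y] = new int[src[y].length];
            for (int x = 0; x < src[y].length; x++) {
                dst[y][x] = src[y][x] > thresh ? maxVal : 0;
            }
        }
        return dst;
    }

    // Dilation (anyOn = true) or erosion (anyOn = false) with a k x k square kernel.
    // Pixels outside the image are skipped, so the border neither adds nor removes foreground.
    static int[][] morph(int[][] src, int k, boolean anyOn) {
        int rows = src.length, cols = src[0].length, r = k / 2;
        int[][] dst = new int[rows][cols];
        for (int y = 0; y < rows; y++) {
            for (int x = 0; x < cols; x++) {
                boolean any = false, all = true;
                for (int dy = -r; dy <= r; dy++) {
                    for (int dx = -r; dx <= r; dx++) {
                        int yy = y + dy, xx = x + dx;
                        if (yy < 0 || yy >= rows || xx < 0 || xx >= cols) continue;
                        boolean on = src[yy][xx] != 0;
                        any |= on;
                        all &= on;
                    }
                }
                dst[y][x] = (anyOn ? any : all) ? 255 : 0;
            }
        }
        return dst;
    }

    // Closing = dilation followed by erosion with the same kernel
    static int[][] close(int[][] src, int k) {
        return morph(morph(src, k, true), k, false);
    }

    public static void main(String[] args) {
        // Toy foreground mask: two blobs split by a column that is weak (40) or empty (0)
        int[][] fg = {
            {0, 0,   0,   0,  0,   0,   0, 0, 0},
            {0, 0,   0,   0,  0,   0,   0, 0, 0},
            {0, 0, 255, 255,  0, 255, 255, 0, 0},
            {0, 0, 255, 255, 40, 255, 255, 0, 0},
            {0, 0, 255, 255,  0, 255, 255, 0, 0},
            {0, 0,   0,   0,  0,   0,   0, 0, 0},
            {0, 0,   0,   0,  0,   0,   0, 0, 0},
        };
        int[][] thresholded = threshold(fg, 70, 255); // The 40 drops to 0, leaving a gap
        int[][] closed = close(thresholded, 3);       // The gap is filled: one blob instead of two
        for (int[] row : closed) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

Without the closing step, `findContours` would report two separate regions here, each with roughly half the area, which matters for the `cmin`/`cmax` check.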

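```java
private void releaseAll() {
    blurred.release();
    fg.release();
    thresholded.release();
    blob.release();
    gray.release();
    for (MatOfPoint c : contours) {
        c.release(); // Contours hold native memory too; free it now instead of waiting for the GC
    }
    contours.clear();
}
```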
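The area gate in `detect()` only lets the cascade run when some moving region's contour area lies strictly between `cmin = 500` and `cmax = 80000`. The pure-Java sketch below shows that check together with the shoelace formula, which gives the same enclosed polygon area that `Imgproc.contourArea()` returns for a simple (non-self-intersecting) contour. Assumptions: the class `ContourGate` is hypothetical, and contours are given as `int[][]` arrays of `{x, y}` points instead of `MatOfPoint`.

```java
import java.util.ArrayList;
import java.util.List;

class ContourGate {

    // Area enclosed by a closed polygon (shoelace formula).
    // This is a polygon area, not a count of pixels.
    static double polygonArea(int[][] pts) {
        double twiceArea = 0;
        for (int i = 0; i < pts.length; i++) {
            int[] p = pts[i];
            int[] q = pts[(i + 1) % pts.length]; // Wrap around to close the polygon
            twiceArea += (double) p[0] * q[1] - (double) q[0] * p[1];
        }
        return Math.abs(twiceArea) / 2.0;
    }

    // True if at least one contour's area lies strictly between cmin and cmax
    static boolean anyInRange(List<int[][]> contours, double cmin, double cmax) {
        for (int[][] c : contours) {
            double area = polygonArea(c);
            if (area > cmin && area < cmax) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        int[][] noise = {{0, 0}, {10, 0}, {10, 10}, {0, 10}};             // 10 x 10 square
        int[][] person = {{100, 40}, {130, 40}, {130, 120}, {100, 120}}; // 30 x 80 rectangle

        List<int[][]> onlyNoise = new ArrayList<>();
        onlyNoise.add(noise);
        List<int[][]> withPerson = new ArrayList<>(onlyNoise);
        withPerson.add(person);

        System.out.println(polygonArea(noise));                 // 100.0
        System.out.println(polygonArea(person));                // 2400.0
        System.out.println(anyInRange(onlyNoise, 500, 80000));  // false: cascade skipped
        System.out.println(anyInRange(withPerson, 500, 80000)); // true: cascade runs
    }
}
```

Because the outline found by `findContours` runs through the centres of the boundary pixels, a filled w x h block yields a contour area slightly smaller than w*h. The areas are also measured in pixels of the frame passed to `detect()`, so `cmin` and `cmax` need retuning if you change the 320x240 resize target.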

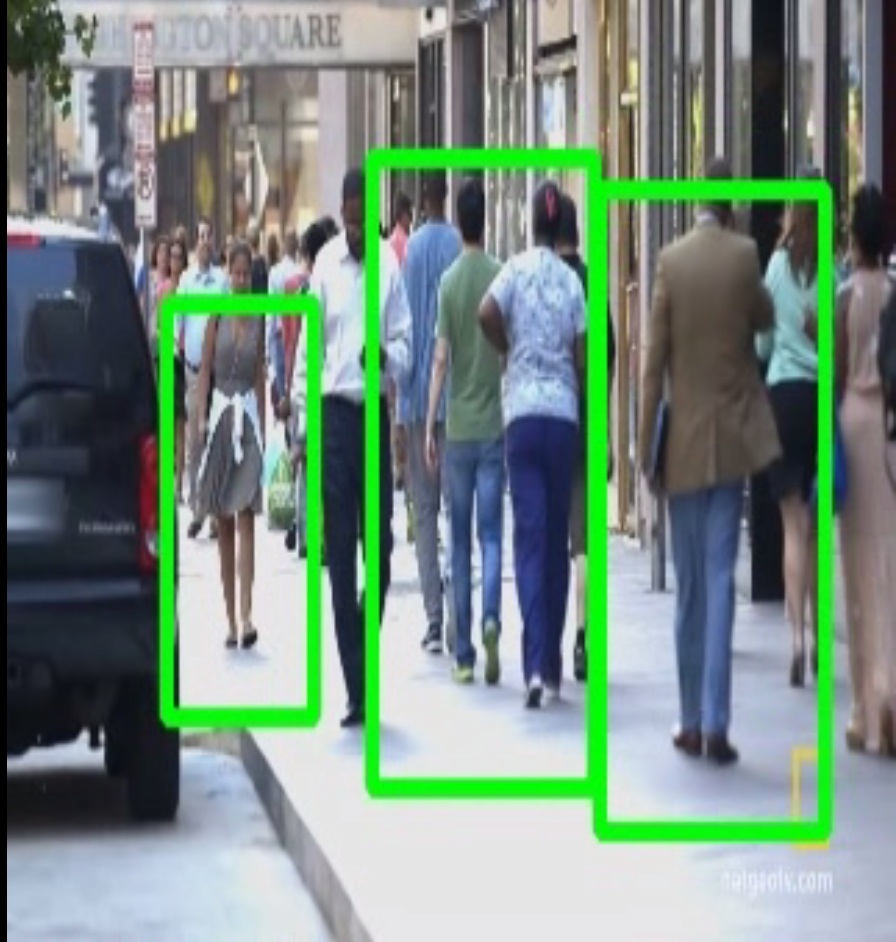

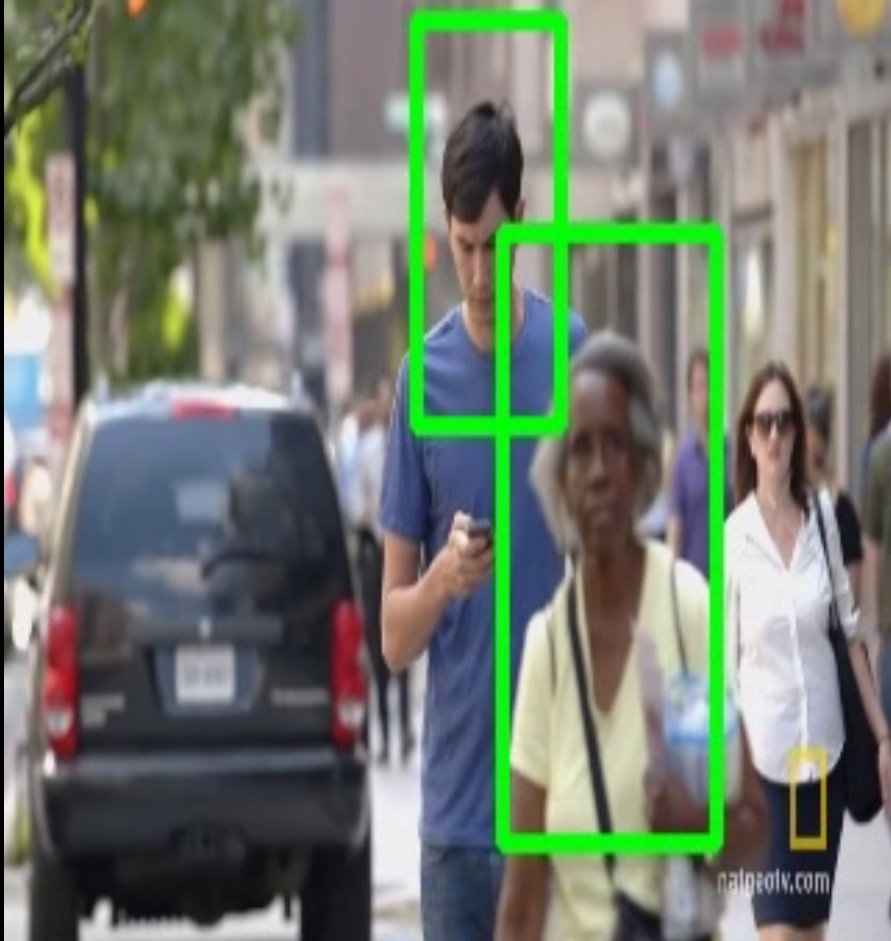

Here are two sample pictures. This is a basic approach, so keep in mind that for good results you need to develop the algorithm further and train your own HOG cascade file on the INRIA or MIT pedestrian database.