This forum is disabled, please visit https://forum.opencv.org

| 1 | initial version |

The registered depth data is given in a cv::Mat structure with data in several types. If you retrieve it by using CV_CAP_OPENNI_DEPTH_MAP or CV_CAP_OPENNI_POINT_CLOUD_MAP, this matrix will be respectively of CV_16UC1 or CV_32FC3. The first is a 16-bit integer with the depth in millimetres, and the second each element will be an X,Y,Z vector of the relative 3D position of the point to the camera in metres . You can easily access the data by accessing the individual elements of the Mat object.

see the example:

cv:.Mat image3D, imageBGR;

cv::VideoCapture videoReader;

//Init camera

videoReader.open( CV_CAP_OPENNI );

videoReader.set( CV_CAP_OPENNI_IMAGE_GENERATOR_OUTPUT_MODE, CV_CAP_OPENNI_VGA_30HZ );

videoReader.set(CV_CAP_OPENNI_DEPTH_GENERATOR_REGISTRATION,1);

//grab a frame

if (videoReader.grab()){

videoReader.retrieve(imageBGR,CV_CAP_OPENNI_BGR_IMAGE);

videoReader.retrieve(image3D,CV_CAP_OPENNI_POINT_CLOUD_MAP);

//Here you get the depth

int y = 200;

int x = 100;

cv::Vec3f pt_3d = image3D.at<cv::Vec3f>(y,x); //0 - X, 1 - Y, 2 - Z

else{

std::cerr << "Error capturing frame !" << std::endl;

}

I hope that it helps.

| 2 | No.2 Revision |

The registered depth data is given in a cv::Mat structure with data in several types. If you retrieve it by using CV_CAP_OPENNI_DEPTH_MAP or CV_CAP_OPENNI_POINT_CLOUD_MAP, this matrix will be respectively of CV_16UC1 or CV_32FC3. The first is a 16-bit integer with the depth in millimetres, and the second each element will be an X,Y,Z vector of the relative 3D position of the point to the camera in metres . You can easily access the data by accessing the individual elements of the Mat object.

see the example:

cv:.Mat image3D, imageBGR;

cv::VideoCapture videoReader;

//Init camera

videoReader.open( CV_CAP_OPENNI );

videoReader.set( CV_CAP_OPENNI_IMAGE_GENERATOR_OUTPUT_MODE, CV_CAP_OPENNI_VGA_30HZ );

videoReader.set(CV_CAP_OPENNI_DEPTH_GENERATOR_REGISTRATION,1);

//grab a frame

if (videoReader.grab()){

videoReader.retrieve(imageBGR,CV_CAP_OPENNI_BGR_IMAGE);

videoReader.retrieve(image3D,CV_CAP_OPENNI_POINT_CLOUD_MAP);

//Here you get the depth

int y = 200;

int x = 100;

cv::Vec3f pt_3d = image3D.at<cv::Vec3f>(y,x); //0 - X, 1 - Y, 2 - Z

else{

std::cerr << "Error capturing frame !" << std::endl;

}

I hope that it helps.

About the alignment of the images:

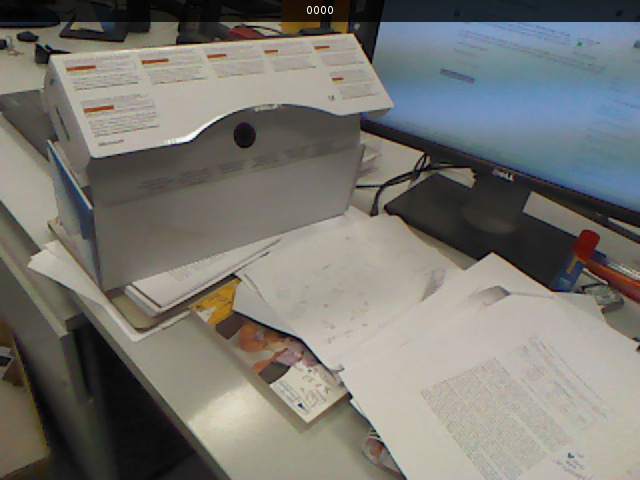

The flags sent in the "set" command to the "VideoCapture " structure in the example above (more specifically "CV_CAP_OPENNI_DEPTH_GENERATOR_REGISTRATION") ensure that the RGB and the depth frame will be properly registered/aligned by the OpenNI backend. As side effect of the registration, there will be a black border around the scene which corresponds to regions where the field of view of the IR sensor does not overlap with the RGB sensor.

See the example bellow. As you can see, there is a surrounding area where there isn't any depth information (the black regions):