| 1 | initial version |

I tried to do the same using a basic video.

There may be a better solution, but this is how I stitched the images (maybe it will help you):

To copy/paste the images, in pseudo-code:

for each new image {

//Get the new image

capture >> img_cur;

//Copy the current panorama into panoramaCur

cv::Mat panoramaCur;

panorama.copyTo(panoramaCur);

//panoramaSize: new panorama size

//Warp and copy img_cur into panoramaCur using the homography H

cv::warpPerspective(img_cur, panoramaCur, H_curto1, panoramaSize);

//ROI for the previous panorama

cv::Mat half(panoramaCur, cv::Rect(0, 0, panorama.cols, panorama.rows));

panorama.copyTo(half);

//Get the new panorama result

panoramaCur.copyTo(panorama);

}

Finally, the result in video.

| 2 | No.2 Revision |

Edit:

First of all, this is the first time I have "played" with image stitching, so the method I present is not necessarily good or optimal. I think the problem you are running into is that some pixels of the original image are warped to negative coordinates.

In your case, the view seems to be shot from a UAV. I think the easiest solution is to divide the mosaic image into a 3x3 grid. The central cell will always show the current image, and there will always be some free space around it for the result of the warping.

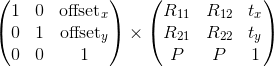

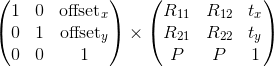
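
To make the 3x3 grid idea a bit more concrete, here is a minimal sketch in plain C++ (no OpenCV needed) of how the canvas size and the central cell could be computed from the frame size. The names `GridSize`, `GridRect`, `mosaicSize` and `centralCell` are made up for this illustration, and it assumes every frame has the same size:

```cpp
// Hypothetical helper types for this sketch; with OpenCV you would
// use cv::Size and cv::Rect instead.
struct GridSize { int width, height; };
struct GridRect { int x, y, width, height; };

// The mosaic canvas is three frames wide and three frames tall.
GridSize mosaicSize(GridSize frame) {
    return { 3 * frame.width, 3 * frame.height };
}

// The current frame goes into the middle cell, which leaves one
// frame of free space on every side for warped pixels.
GridRect centralCell(GridSize frame) {
    return { frame.width, frame.height, frame.width, frame.height };
}
```

With a 640x480 frame this gives a 1920x1440 canvas with the current image pasted at (640, 480). The top-left corner of the central cell is also the translation that would have to be applied to the warped image so that it lands around that cell.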

Some tests I made (tl;dr). For example, with the two images below:

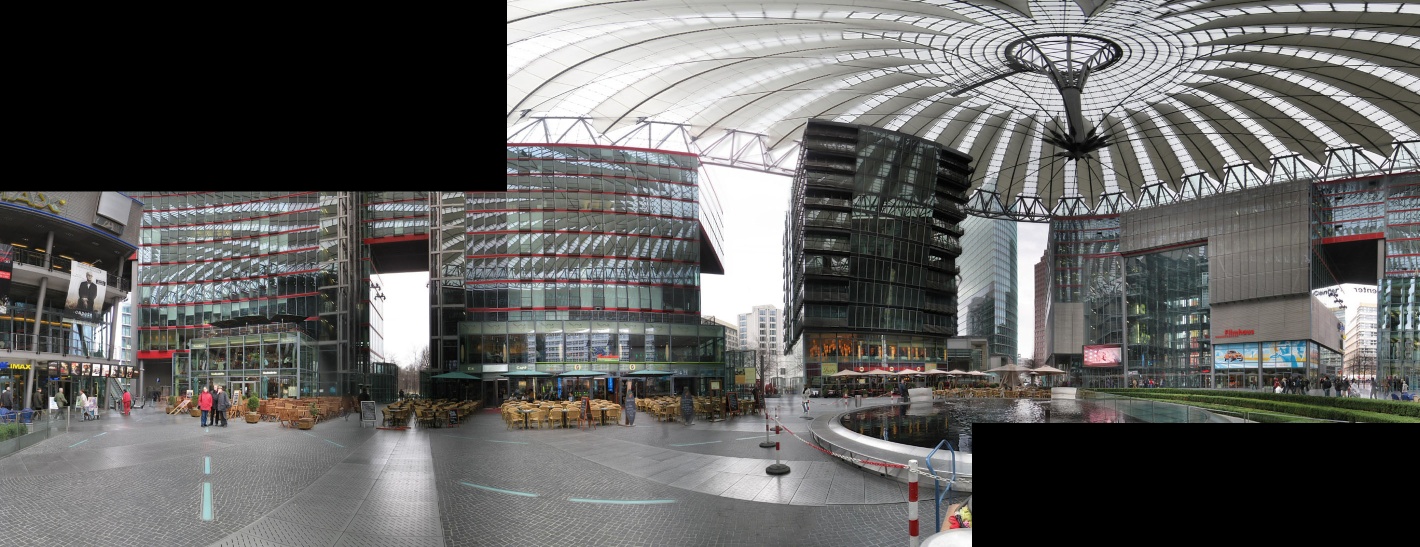

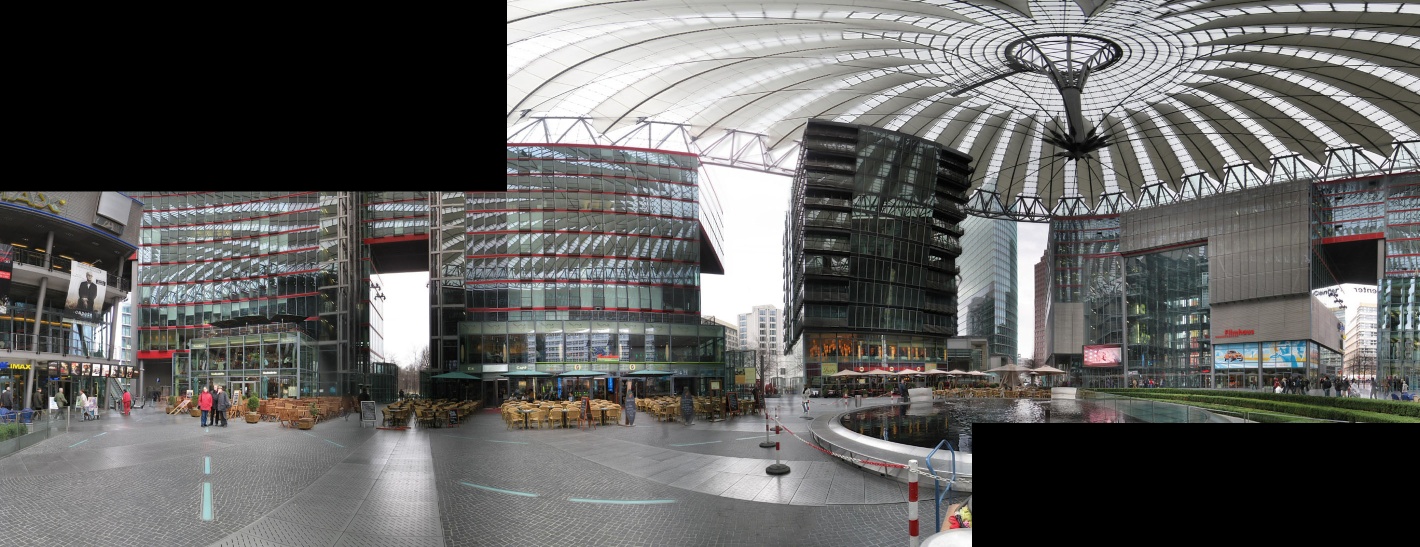

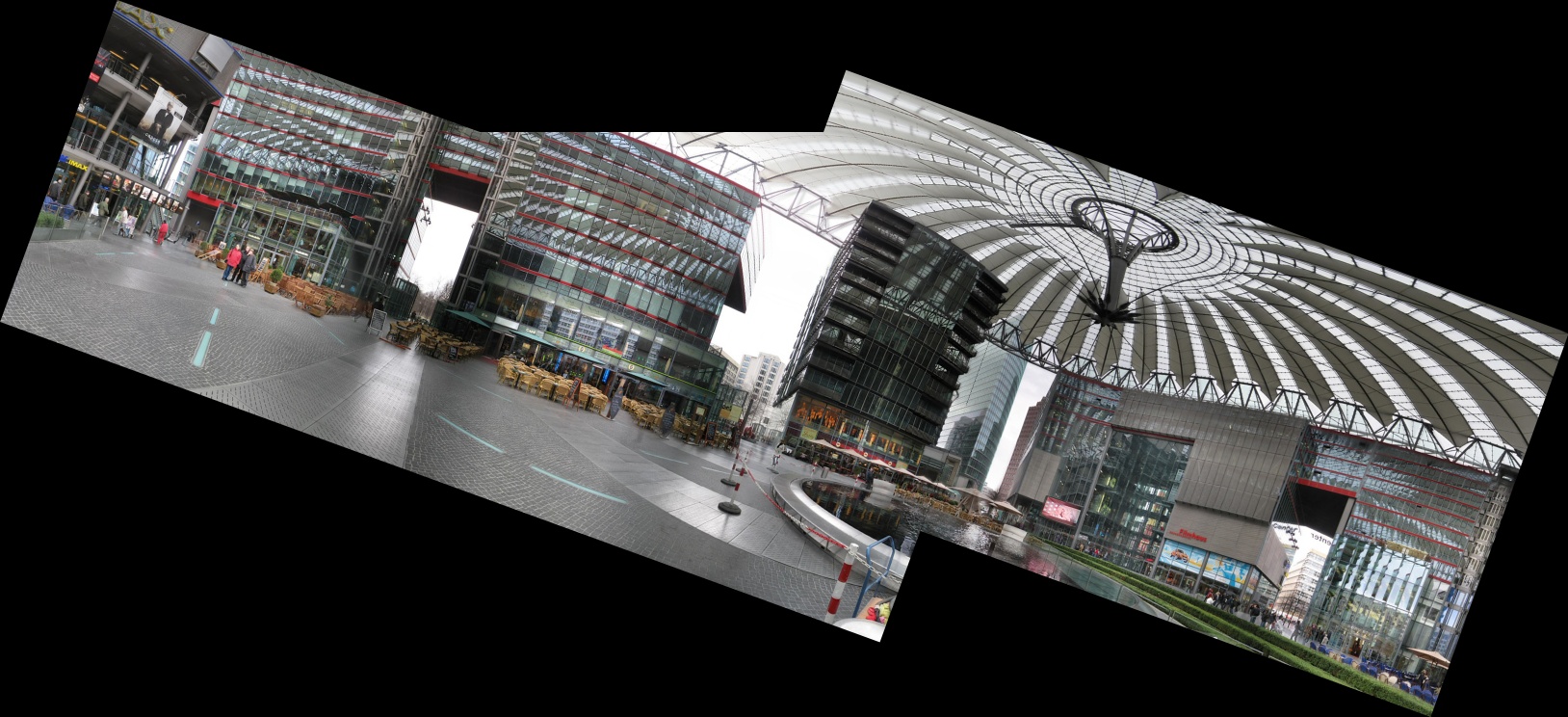

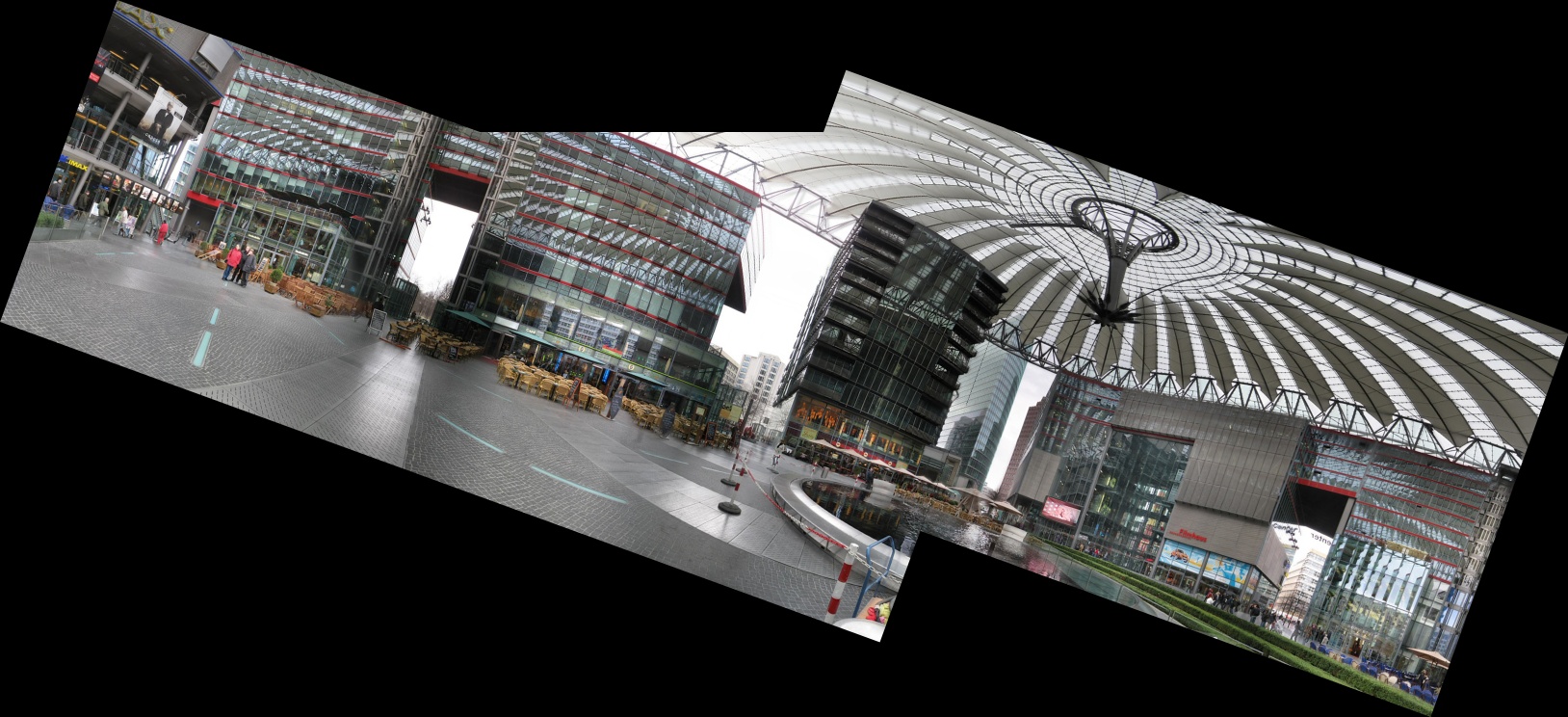

If we warp image 2, some pixels will not be shown (e.g. the roof):

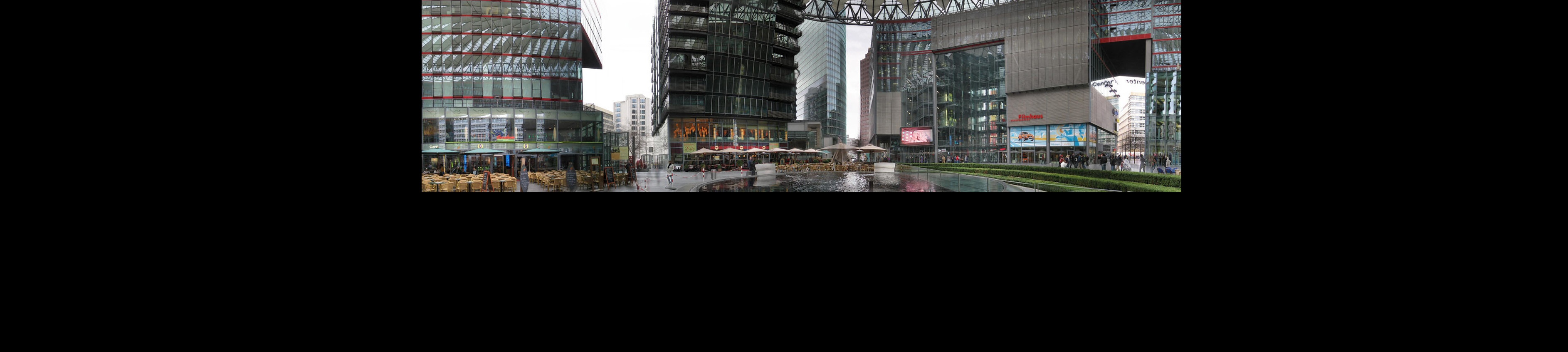

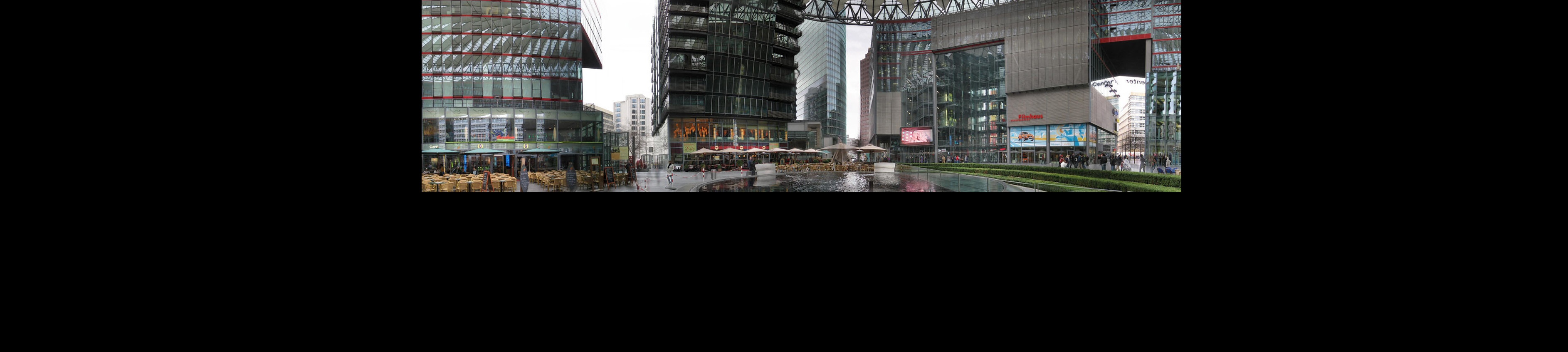

The stitching will look like this:

In fact, if we print the homography matrix:

double homography[3][3] = {

{0.999953365335864, -0.0001669222180845182, 507.0299576823942},

{5.718816824900338e-05, 0.9999404263126825, -191.9941904903286},

{1.206803293748564e-08, -1.563550523469747e-07, 1},

};

we can see that the translation in y is negative.

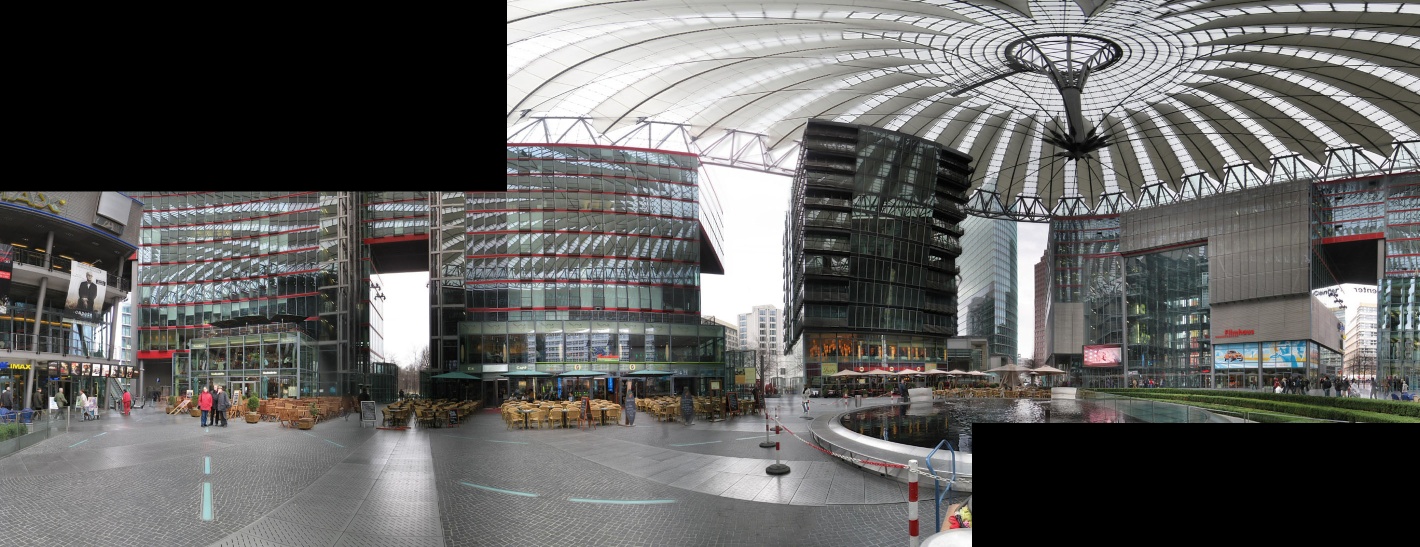

My solution would be to set t_x or t_y to 0 in the homography matrix when it is negative, and to use the modified matrix to warp the image. Afterwards, I paste the first image not at (0,0) but at (offsetX, offsetY):

You can also calculate the new coordinates of the image after the warping using perspectiveTransform:

std::vector<cv::Point2f> corners(4);

corners[0] = cv::Point2f(0, 0);

corners[1] = cv::Point2f(0, img2.rows);

corners[2] = cv::Point2f(img2.cols, 0);

corners[3] = cv::Point2f(img2.cols, img2.rows);

std::vector<cv::Point2f> cornersTransform(4);

cv::perspectiveTransform(corners, cornersTransform, H);

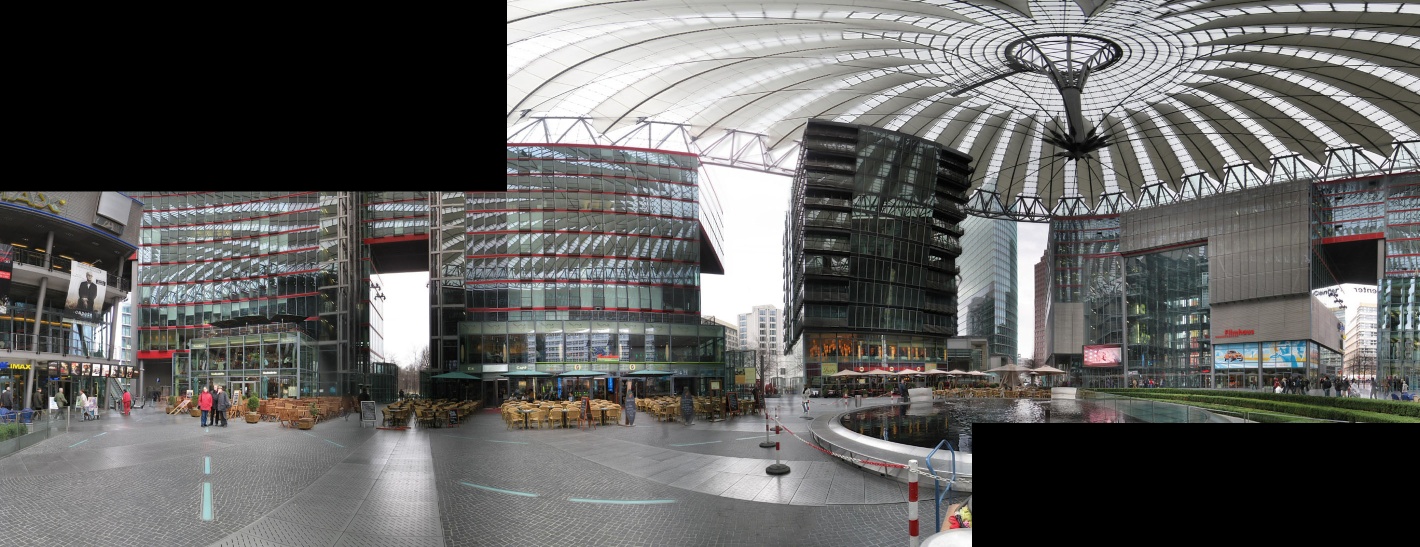

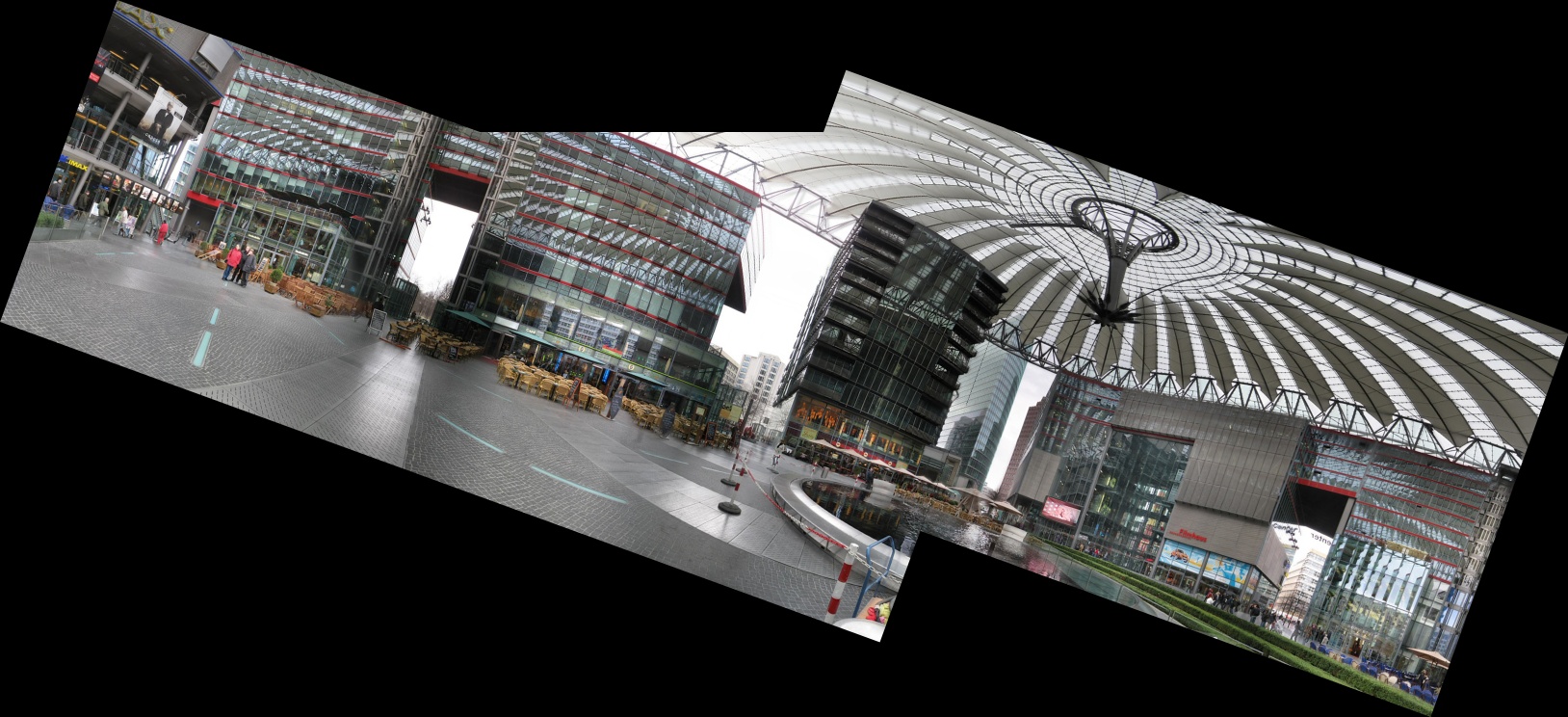

Finally, the stitching result I managed to obtain:

| 3 | No.3 Revision |

Edit 2:

In fact, setting the translation part of the homography matrix to zero is not right. It worked in my previous case because there was almost no rotation, only translation. The correct way is to first use perspectiveTransform to calculate the maximum offset in x and y (the most negative values) of the part that falls outside the image.

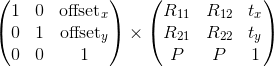

Then, we can premultiply the homography matrix H by another matrix, H2, so that all the pixel coordinates become positive:

cv::warpPerspective(img2, panorama, H2*H, size_warp);

| 4 | No.4 Revision |

Edit 3:

Here is the code I used for my image stitching tests. It is not perfect, but it does almost what I want (it should be fully functional):

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

cv::Mat stitch(const cv::Mat &img1, const cv::Mat &img2, cv::Mat &mask, const cv::Mat &H) {

//Coordinates of the 4 corners of the image

std::vector<cv::Point2f> corners(4);

corners[0] = cv::Point2f(0, 0);

corners[1] = cv::Point2f(0, img2.rows);

corners[2] = cv::Point2f(img2.cols, 0);

corners[3] = cv::Point2f(img2.cols, img2.rows);

std::vector<cv::Point2f> cornersTransform(4);

cv::perspectiveTransform(corners, cornersTransform, H);

double offsetX = 0.0;

double offsetY = 0.0;

//Get max offset outside of the image

for(size_t i = 0; i < 4; i++) {

std::cout << "cornersTransform[" << i << "]=" << cornersTransform[i] << std::endl;

if(cornersTransform[i].x < offsetX) {

offsetX = cornersTransform[i].x;

}

if(cornersTransform[i].y < offsetY) {

offsetY = cornersTransform[i].y;

}

}

offsetX = -offsetX;

offsetY = -offsetY;

std::cout << "offsetX=" << offsetX << " ; offsetY=" << offsetY << std::endl;

//Get max width and height for the new size of the panorama

double maxX = std::max((double) img1.cols+offsetX, (double) std::max(cornersTransform[2].x, cornersTransform[3].x)+offsetX);

double maxY = std::max((double) img1.rows+offsetY, (double) std::max(cornersTransform[1].y, cornersTransform[3].y)+offsetY);

std::cout << "maxX=" << maxX << " ; maxY=" << maxY << std::endl;

cv::Size size_warp(maxX, maxY);

cv::Mat panorama(size_warp, CV_8UC3);

//Create the transformation matrix to be able to have all the pixels

cv::Mat H2 = cv::Mat::eye(3, 3, CV_64F);

H2.at<double>(0,2) = offsetX;

H2.at<double>(1,2) = offsetY;

cv::warpPerspective(img2, panorama, H2*H, size_warp);

//ROI for img1

cv::Rect img1_rect(offsetX, offsetY, img1.cols, img1.rows);

cv::Mat half;

//First iteration

if(mask.empty()) {

//Copy img1 in the panorama using the ROI

cv::Mat half = cv::Mat(panorama, img1_rect);

img1.copyTo(half);

//Create the new mask matrix for the panorama

mask = cv::Mat::ones(img2.size(), CV_8U)*255;

cv::warpPerspective(mask, mask, H2*H, size_warp);

cv::rectangle(mask, img1_rect, cv::Scalar(255), -1);

} else {

cv::Mat maskTmp = cv::Mat::zeros(size_warp, img1.type());

half = cv::Mat(maskTmp, img1_rect);

img1.copyTo(half);

//Copy only pixel with intensity != 0 in panorama

maskTmp.copyTo(panorama, maskTmp);

//Create a mask for the warped part

maskTmp = cv::Mat::ones(img2.size(), CV_8U)*255;

cv::warpPerspective(maskTmp, maskTmp, H2*H, size_warp);

cv::Mat maskTmp2 = cv::Mat::zeros(size_warp, CV_8U);

half = cv::Mat(maskTmp2, img1_rect);

//Copy the old mask in maskTmp2

mask.copyTo(half);

//Merge the old mask with the new one

maskTmp += maskTmp2;

maskTmp.copyTo(mask);

}

return panorama;

}

int main(int argc, char **argv) {

double H_1to3[3][3] = {

{0.9397222389550625, -0.3417130056282905, -244.3182439813799},

{0.3420693933107188, 0.9399119699575031, -137.2934907810936},

{-2.105164197050072e-08, 5.938357135572661e-07, 1.0}

};

cv::Mat matH_1_to_3(3, 3, CV_64F, H_1to3);

double H_2toPan[3][3] = {

{0.9368203321472403, -0.3454438491707963, 662.6735928838605},

{0.3407072775400232, 0.9356103255435544, -6.647965498116199},

{-1.969823553341344e-06, -6.793479233220533e-06, 1.0}

};

cv::Mat matH_2toPan(3, 3, CV_64F, H_2toPan);

cv::Mat img1, img2, img3;

cv::VideoCapture capture("http://answers.opencv.org/upfiles/14298087802018564.jpg");

if(!capture.isOpened()) {

return -1;

}

capture >> img1;

capture = cv::VideoCapture("http://answers.opencv.org/upfiles/14298088061356162.jpg");

if(!capture.isOpened()) {

return -1;

}

capture >> img2;

capture = cv::VideoCapture("http://answers.opencv.org/upfiles/14303473366969024.jpg");

if(!capture.isOpened()) {

return -1;

}

capture >> img3;

if(img1.empty() || img2.empty() || img3.empty()) {

return -1;

}

cv::resize(img1, img1, cv::Size(), 0.5, 0.5);

cv::resize(img2, img2, cv::Size(), 0.5, 0.5);

cv::resize(img3, img3, cv::Size(), 0.5, 0.5);

cv::Mat mask;

cv::Mat panorama = stitch(img3, img1, mask, matH_1_to_3);

panorama = stitch(panorama, img2, mask, matH_2toPan);

cv::imshow("panorama", panorama);

cv::waitKey(0);

return 0;

}

| 5 | No.5 Revision |

Edit 3:

Here is the code I used for my image stitching tests. It is not perfect, but it does almost what I want (it should be fully functional):

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

cv::Mat stitch(const cv::Mat &img1, const cv::Mat &img2, cv::Mat &mask, const cv::Mat &H) {

//Coordinates of the 4 corners of the image

std::vector<cv::Point2f> corners(4);

corners[0] = cv::Point2f(0, 0);

corners[1] = cv::Point2f(0, img2.rows);

corners[2] = cv::Point2f(img2.cols, 0);

corners[3] = cv::Point2f(img2.cols, img2.rows);

std::vector<cv::Point2f> cornersTransform(4);

cv::perspectiveTransform(corners, cornersTransform, H);

double offsetX = 0.0;

double offsetY = 0.0;

//Get max offset outside of the image

for(size_t i = 0; i < 4; i++) {

std::cout << "cornersTransform[" << i << "]=" << cornersTransform[i] << std::endl;

if(cornersTransform[i].x < offsetX) {

offsetX = cornersTransform[i].x;

}

if(cornersTransform[i].y < offsetY) {

offsetY = cornersTransform[i].y;

}

}

offsetX = -offsetX;

offsetY = -offsetY;

std::cout << "offsetX=" << offsetX << " ; offsetY=" << offsetY << std::endl;

//Get max width and height for the new size of the panorama

double maxX = std::max((double) img1.cols+offsetX, (double) std::max(cornersTransform[2].x, cornersTransform[3].x)+offsetX);

double maxY = std::max((double) img1.rows+offsetY, (double) std::max(cornersTransform[1].y, cornersTransform[3].y)+offsetY);

std::cout << "maxX=" << maxX << " ; maxY=" << maxY << std::endl;

cv::Size size_warp(maxX, maxY);

cv::Mat panorama(size_warp, CV_8UC3);

//Create the transformation matrix to be able to have all the pixels

cv::Mat H2 = cv::Mat::eye(3, 3, CV_64F);

H2.at<double>(0,2) = offsetX;

H2.at<double>(1,2) = offsetY;

cv::warpPerspective(img2, panorama, H2*H, size_warp);

//ROI for img1

cv::Rect img1_rect(offsetX, offsetY, img1.cols, img1.rows);

cv::Mat half;

//First iteration

if(mask.empty()) {

//Copy img1 in the panorama using the ROI

cv::Mat half = cv::Mat(panorama, img1_rect);

img1.copyTo(half);

//Create the new mask matrix for the panorama

mask = cv::Mat::ones(img2.size(), CV_8U)*255;

cv::warpPerspective(mask, mask, H2*H, size_warp);

cv::rectangle(mask, img1_rect, cv::Scalar(255), -1);

} else {

//Create an image with the final size to paste img1

cv::Mat maskTmp = cv::Mat::zeros(size_warp, img1.type());

half = cv::Mat(maskTmp, img1_rect);

img1.copyTo(half);

//Copy img1 into panorama using a mask

cv::Mat maskTmp2 = cv::Mat::zeros(size_warp, CV_8U);

half = cv::Mat(maskTmp2, img1_rect);

//Copy the old mask in maskTmp2

mask.copyTo(half);

maskTmp.copyTo(panorama, maskTmp2);

//Create a mask for the warped part

maskTmp = cv::Mat::ones(img2.size(), CV_8U)*255;

cv::warpPerspective(maskTmp, maskTmp, H2*H, size_warp);

//Merge the old mask with the new one

maskTmp += maskTmp2;

maskTmp.copyTo(mask);

}

return panorama;

}

int main(int argc, char **argv) {

double H_1to3[3][3] = {

{0.9397222389550625, -0.3417130056282905, -244.3182439813799},

{0.3420693933107188, 0.9399119699575031, -137.2934907810936},

{-2.105164197050072e-08, 5.938357135572661e-07, 1.0}

};

cv::Mat matH_1_to_3(3, 3, CV_64F, H_1to3);

double H_2toPan[3][3] = {

{0.9368203321472403, -0.3454438491707963, 662.6735928838605},

{0.3407072775400232, 0.9356103255435544, -6.647965498116199},

{-1.969823553341344e-06, -6.793479233220533e-06, 1.0}

};

cv::Mat matH_2toPan(3, 3, CV_64F, H_2toPan);

cv::Mat img1, img2, img3;

cv::VideoCapture capture("http://answers.opencv.org/upfiles/14298087802018564.jpg");

if(!capture.isOpened()) {

return -1;

}

capture >> img1;

capture = cv::VideoCapture("http://answers.opencv.org/upfiles/14298088061356162.jpg");

if(!capture.isOpened()) {

return -1;

}

capture >> img2;

capture = cv::VideoCapture("http://answers.opencv.org/upfiles/14303473366969024.jpg");

if(!capture.isOpened()) {

return -1;

}

capture >> img3;

if(img1.empty() || img2.empty() || img3.empty()) {

return -1;

}

cv::resize(img1, img1, cv::Size(), 0.5, 0.5);

cv::resize(img2, img2, cv::Size(), 0.5, 0.5);

cv::resize(img3, img3, cv::Size(), 0.5, 0.5);

cv::Mat mask;

cv::Mat panorama = stitch(img3, img1, mask, matH_1_to_3);

panorama = stitch(panorama, img2, mask, matH_2toPan);

cv::imshow("panorama", panorama);

cv::waitKey(0);

return 0;

}
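
As a side note on the offset and size computation in stitch(), here is a minimal standalone sketch in plain C++ (no OpenCV) of the same bookkeeping. It is a variation, not the code above: it takes the bounding box over all four warped corners instead of only some of them, and it rounds up to whole pixels. `Pt`, `Layout` and `panoramaLayout` are names invented for this sketch:

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Plain 2D point; stands in for cv::Point2f in this sketch.
struct Pt { double x, y; };

// Where to paste the base image and how large the panorama must be.
struct Layout { int offsetX, offsetY, width, height; };

// warpedCorners: corners of the new image after applying H.
// baseCols/baseRows: size of the image (or panorama) that stays fixed.
Layout panoramaLayout(const std::vector<Pt> &warpedCorners,
                      int baseCols, int baseRows) {
    // Start from the base image rectangle [0, baseCols] x [0, baseRows].
    double minX = 0.0, minY = 0.0;
    double maxX = baseCols, maxY = baseRows;
    for (const Pt &p : warpedCorners) {
        minX = std::min(minX, p.x);
        minY = std::min(minY, p.y);
        maxX = std::max(maxX, p.x);
        maxY = std::max(maxY, p.y);
    }
    // The offsets bring the most negative coordinates back to zero.
    const int offsetX = static_cast<int>(std::ceil(-minX));
    const int offsetY = static_cast<int>(std::ceil(-minY));
    // The final size covers both the shifted base image and the
    // shifted warped image.
    const int width  = offsetX + static_cast<int>(std::ceil(maxX));
    const int height = offsetY + static_cast<int>(std::ceil(maxY));
    return { offsetX, offsetY, width, height };
}
```

The returned offsets play the role of offsetX and offsetY in H2, and width and height correspond to size_warp. Using all four corners should be a bit safer when the rotation is large, since the extreme corner is then not always the one you expect.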