One way is to apply a perspective transform that stretches the image so it fills the black region.

Another is to apply inpainting, but it only gives a realistic result when the area to fill is small.

For the second method, create a mask by thresholding the source image and then call inpaint(), like this:

    Mat src = imread("a.jpg");
    Mat thr, dst;
    cvtColor(src, thr, COLOR_BGR2GRAY);               // imread() returns BGR data
    threshold(thr, thr, 20, 255, THRESH_BINARY_INV);  // dark pixels (<= 20) become the mask
    inpaint(src, thr, dst, 10, INPAINT_NS);           // fill the masked area, radius 10
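To make the mask step concrete, here is a minimal standalone sketch of what an inverse binary threshold does to a row of gray values, without using OpenCV. The name `inverse_binary_threshold` is made up for this illustration; in the snippet above, threshold() with THRESH_BINARY_INV does this job. Pixels at or below the threshold become non-zero, and inpaint() treats the non-zero mask pixels as the area to fill.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical helper for illustration only (not an OpenCV function).
// Mirrors the THRESH_BINARY_INV rule: value > thresh -> 0, otherwise -> maxval.
std::vector<std::uint8_t> inverse_binary_threshold(const std::vector<std::uint8_t>& gray,
                                                   std::uint8_t thresh,
                                                   std::uint8_t maxval)
{
    std::vector<std::uint8_t> mask;
    mask.reserve(gray.size());
    for (std::uint8_t v : gray) {
        mask.push_back(v > thresh ? std::uint8_t(0) : maxval);
    }
    return mask;
}
```

With a threshold of 20 and a maximum of 255, the gray values {0, 20, 21, 200} give the mask {255, 255, 0, 0}, so only the two dark pixels would be inpainted.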

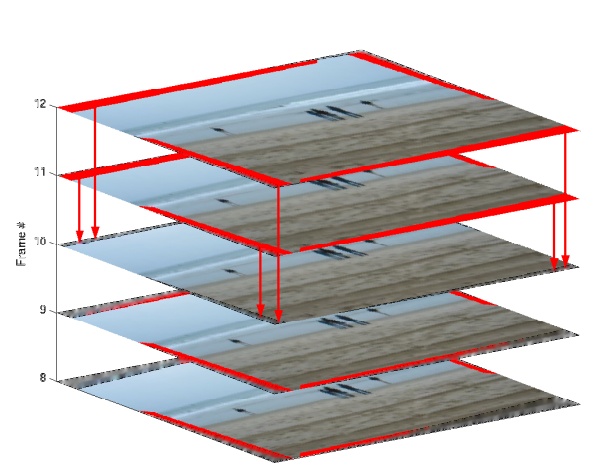

See the inpainting result:

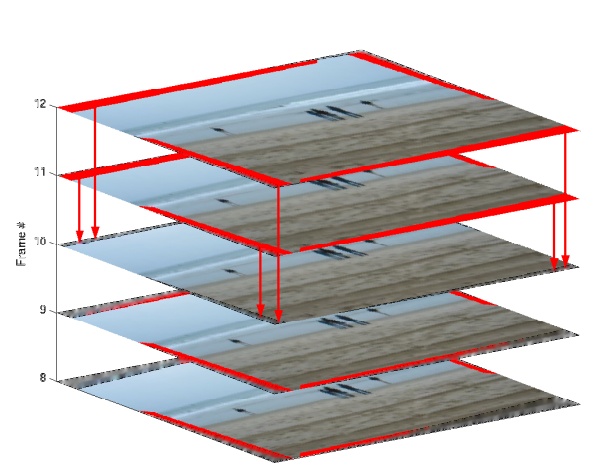

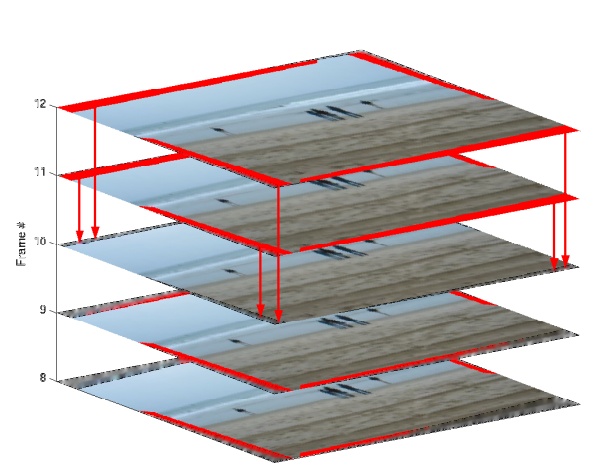

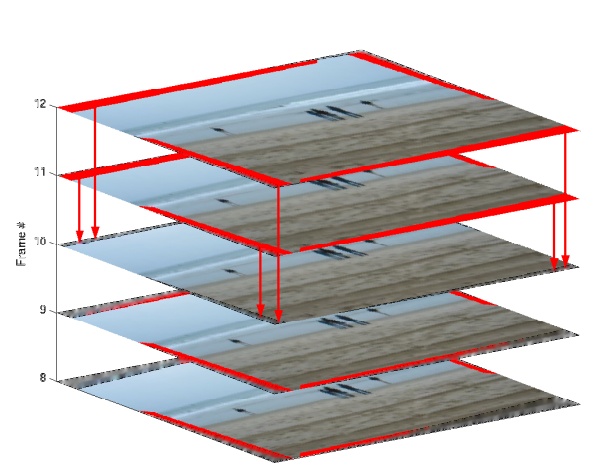

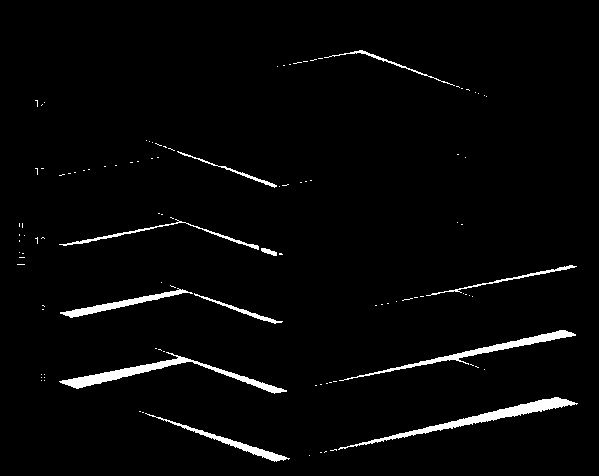

Using a perspective transform

Here you need to find the source and destination points (four corners each) for the transformation.

In the image above, the source points are the four corners of the black boundary.

The destination points are the four corners of the source image.

In the image below, red marks the source points and green marks the points they will be transformed to.

To get the source coordinates:

-> Threshold the image.

-> Find the biggest contour.

-> Run approxPolyDP on it.

This should give you four coordinates, which are the source points for the perspective transform.
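If approxPolyDP returns more or fewer than four points, its epsilon argument usually needs tuning. One common heuristic, which is not part of the original answer, is to set epsilon to a small fraction of the contour perimeter (in OpenCV, arcLength() computes that perimeter). The standalone sketch below only shows the arithmetic; `Pt`, `closed_perimeter`, `epsilon_from_perimeter` and the 2% default are assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical point type for this sketch (stands in for cv::Point).
struct Pt { double x, y; };

// Perimeter of a closed polygon: sum of edge lengths, including last -> first.
double closed_perimeter(const std::vector<Pt>& poly)
{
    double total = 0.0;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const Pt& a = poly[i];
        const Pt& b = poly[(i + 1) % poly.size()];
        total += std::hypot(b.x - a.x, b.y - a.y);
    }
    return total;
}

// Epsilon as a fraction of the perimeter; 0.02 is an assumed starting value.
double epsilon_from_perimeter(const std::vector<Pt>& poly, double fraction = 0.02)
{
    assert(fraction > 0.0);
    return fraction * closed_perimeter(poly);
}
```

For a 100 x 50 rectangle the perimeter is 300, so the sketch suggests an epsilon of 6 pixels. Treat the fraction as a starting point and adjust it until exactly four corners come back.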

The destination points are simply the corners of the source image.

Sort the co-ordinates from top left to bottom right.
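The ordering matters because getPerspectiveTransform pairs the i-th source point with the i-th destination point. As an alternative to a weighted sort, one common approach (an assumption here, not the method used in the code below) picks corners by the sum and difference of their coordinates: the top-left corner has the smallest x + y, the bottom-right the largest x + y, the top-right the largest x - y, and the bottom-left the smallest x - y. `Corner` and `order_corners` are hypothetical names, and the sketch assumes a roughly upright quadrilateral in image coordinates.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical point type for this sketch (stands in for cv::Point2f).
struct Corner { float x, y; };

// Returns the corners as {top-left, top-right, bottom-left, bottom-right}.
// Assumes image coordinates (y grows downward) and a roughly upright quad.
std::array<Corner, 4> order_corners(const std::vector<Corner>& pts)
{
    assert(pts.size() == 4);
    std::size_t tl = 0, br = 0, tr = 0, bl = 0;
    for (std::size_t i = 1; i < pts.size(); ++i) {
        const float sum = pts[i].x + pts[i].y;
        const float diff = pts[i].x - pts[i].y;
        if (sum < pts[tl].x + pts[tl].y) tl = i;   // smallest x + y
        if (sum > pts[br].x + pts[br].y) br = i;   // largest x + y
        if (diff > pts[tr].x - pts[tr].y) tr = i;  // largest x - y
        if (diff < pts[bl].x - pts[bl].y) bl = i;  // smallest x - y
    }
    std::array<Corner, 4> ordered = {{pts[tl], pts[tr], pts[bl], pts[br]}};
    return ordered;
}
```

For example, the corners (10, 20), (300, 5), (15, 210) and (310, 200), passed in any order, come back in exactly that order. The weighted key x + 500*y from the code below would instead rank (300, 5) first, since 300 + 500*5 = 2800 is smaller than 10 + 500*20 = 10010, so a tilted quadrilateral can end up paired with the wrong destination corners.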

Apply warpPerspective.

See the transformed result:

Code:

    #include <opencv2/opencv.hpp>
    #include <algorithm>
    #include <iostream>
    #include <vector>

    using namespace cv;

    // Sort key: mainly by y, then by x, so points run from top left to bottom right.
    // The weight 500 is a heuristic; it can misorder strongly tilted corners.
    struct point_sorter
    {
        bool operator()(const Point2f& a, const Point2f& b) const
        {
            return (a.x + 500 * a.y) < (b.x + 500 * b.y);
        }
    };

    int main()
    {
        Mat src = imread("warp.jpg");
        if (src.empty()) {
            std::cout << "Could not read warp.jpg" << std::endl;
            return 1;
        }

        // Mask the image content: pixels brighter than 30 become white.
        Mat thr;
        cvtColor(src, thr, COLOR_BGR2GRAY);
        threshold(thr, thr, 30, 255, THRESH_BINARY);

        std::vector<std::vector<Point> > contours; // Vector for storing contours
        std::vector<Vec4i> hierarchy;
        findContours(thr.clone(), contours, hierarchy, RETR_EXTERNAL, CHAIN_APPROX_SIMPLE);
        if (contours.empty()) {
            std::cout << "No contours found" << std::endl;
            return 1;
        }

        // Find the index of the largest contour.
        size_t largest_contour_index = 0;
        double largest_area = 0;
        for (size_t i = 0; i < contours.size(); i++) {
            double a = contourArea(contours[i], false);
            if (a > largest_area) {
                largest_area = a;
                largest_contour_index = i;
            }
        }

        // Approximate the contour with a polygon (epsilon = 5 pixels).
        std::vector<std::vector<Point> > contours_poly(1);
        approxPolyDP(Mat(contours[largest_contour_index]), contours_poly[0], 5, true);

        if (contours_poly[0].size() == 4) {
            std::vector<Point2f> src_pts;
            std::vector<Point2f> dst_pts;

            // Source points: the four corners of the image content.
            for (size_t i = 0; i < contours_poly[0].size(); i++)
                src_pts.push_back(contours_poly[0][i]);

            // Destination points: the four corners of the full image.
            dst_pts.push_back(Point2f(0, 0));
            dst_pts.push_back(Point2f(0, src.rows));
            dst_pts.push_back(Point2f(src.cols, 0));
            dst_pts.push_back(Point2f(src.cols, src.rows));

            // Put both sets in the same order so corresponding corners pair up.
            std::sort(dst_pts.begin(), dst_pts.end(), point_sorter());
            std::sort(src_pts.begin(), src_pts.end(), point_sorter());

            Mat transmtx = getPerspectiveTransform(src_pts, dst_pts);
            Mat transformed = Mat::zeros(src.rows, src.cols, CV_8UC3);
            warpPerspective(src, transformed, transmtx, src.size());

            imshow("transformed", transformed);
            imshow("src", src);
            waitKey();
        }
        else {
            std::cout << "Make sure you are getting 4 corners from approxPolyDP; adjust 'epsilon' and try again" << std::endl;
        }

        return 0;
    }