
| 1 | initial version |

As the definition above says, segmentation is partitioning the image into different regions.

You can think of these regions as parts of the image that represent, for example, different objects.

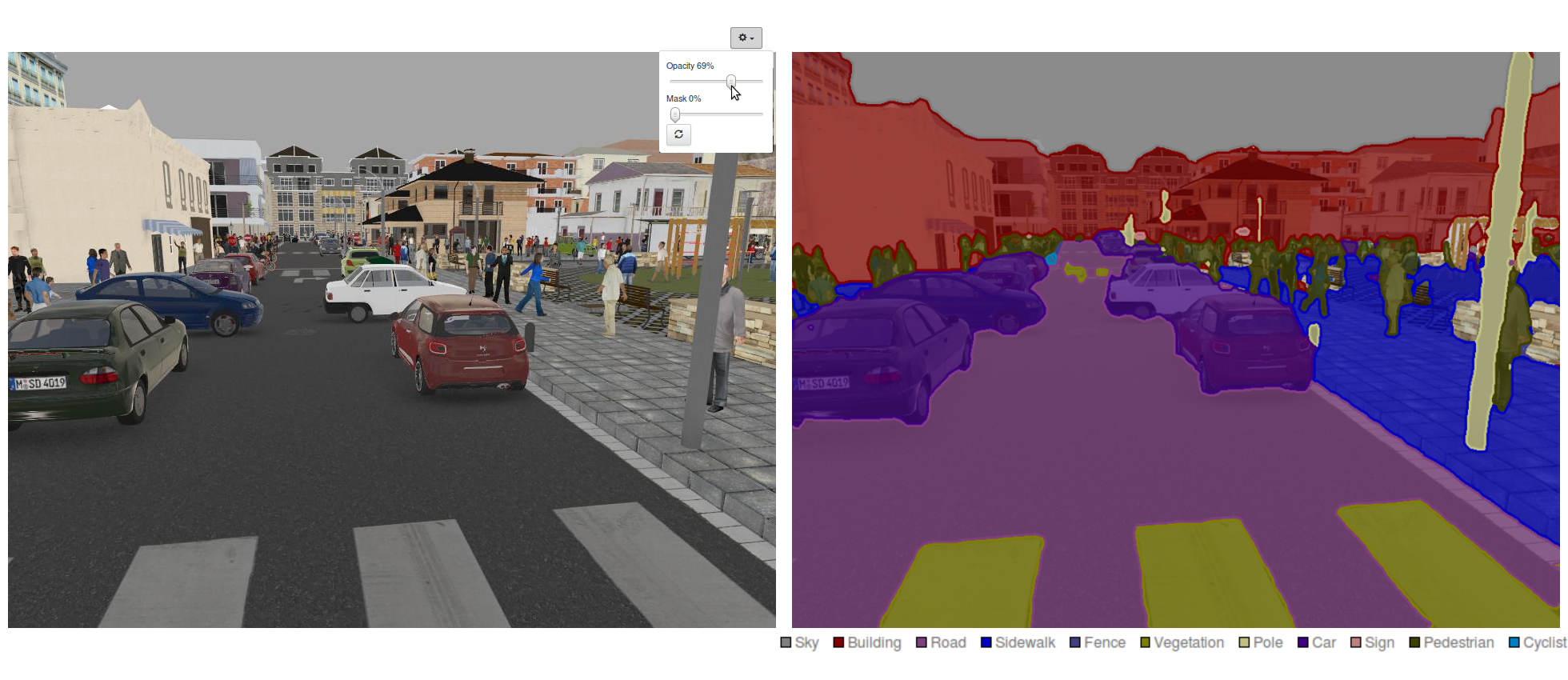

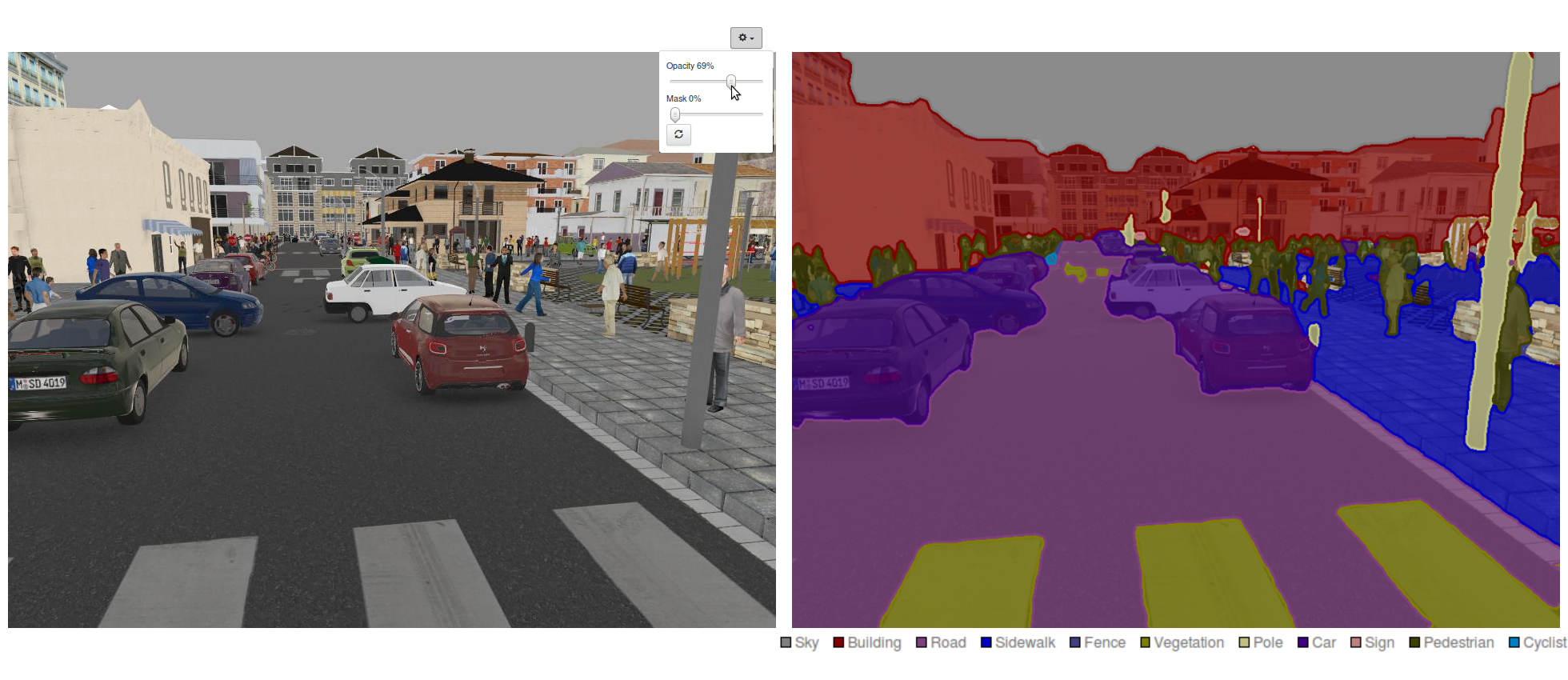

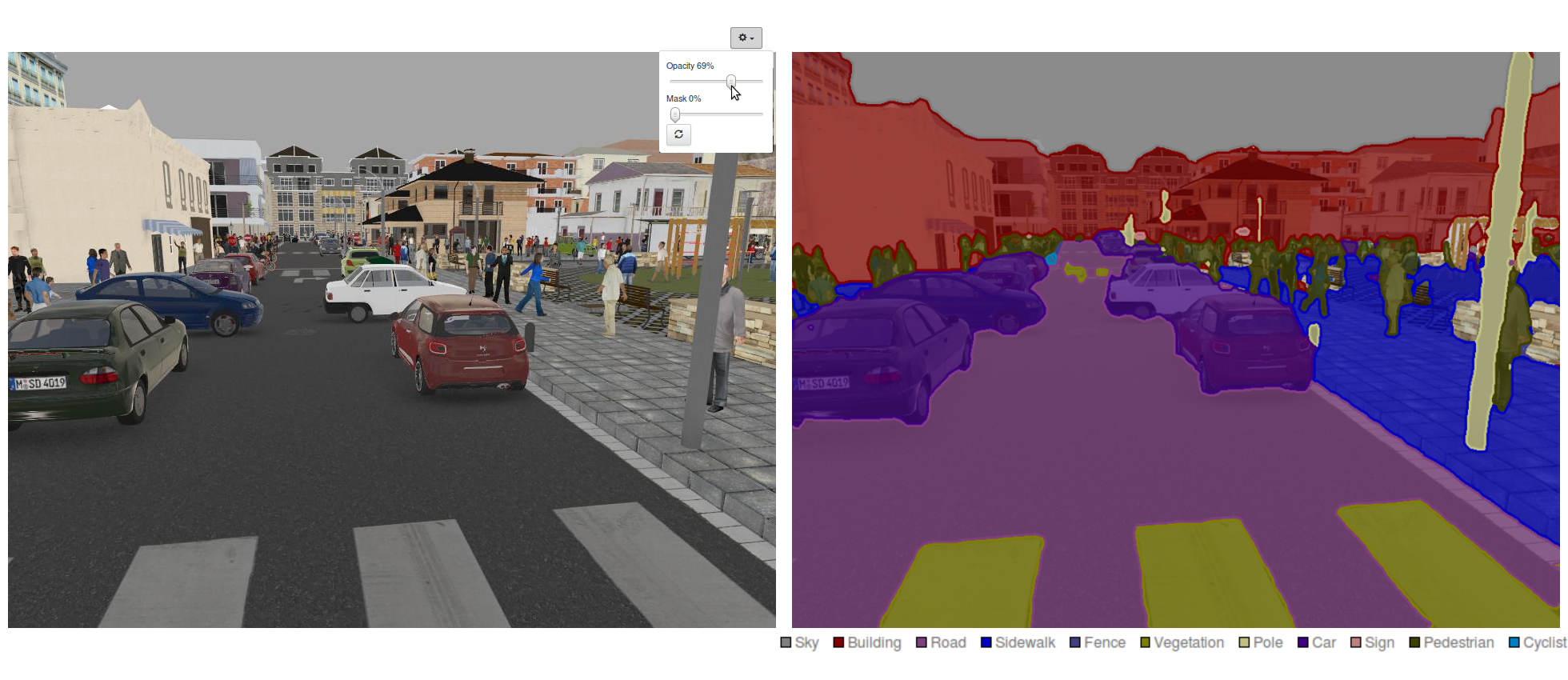

This can be exhaustive (all the objects are identified), for example when analyzing the environment of self-driving cars: the road, sidewalk, traffic signs, pedestrians, cars, bicycles, trees, buildings etc. are identified. Here's an example from the NVIDIA developer blog:

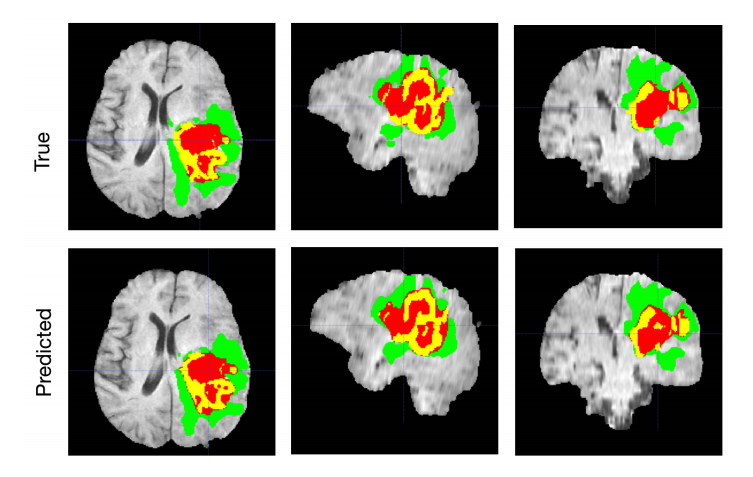

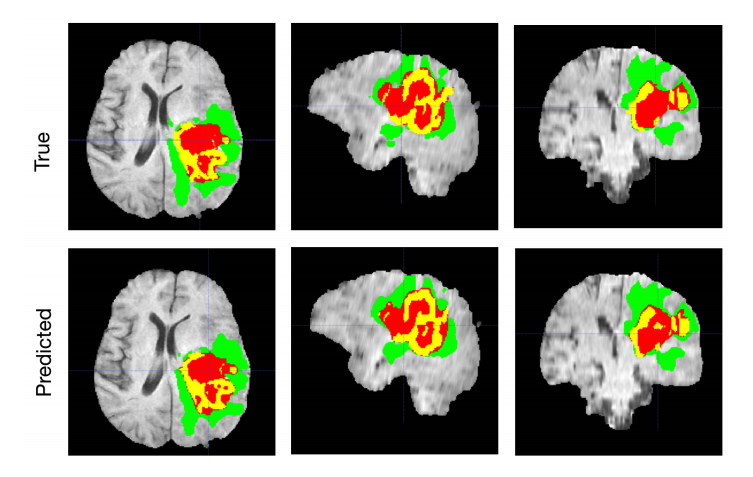

It can also separate a single object from the background (or from anything else), for example when trying to identify a cancer on a radiography image.

The segmented regions don't have to represent objects. You can segment colors (example application: how ripe (red) is an apple?), textures (seismic image processing), or anything else (for example: detect the parts of a photo modified by Photoshop).

As you can see, in simple situations the segmentation can be color-based (see thresholding) or gradient/edge-based (e.g. the Canny edge detector), or you can rely on textures. In complicated situations you'll need deep neural networks (e.g. SegNet) and so on...

| 2 | No.2 Revision |

As the definition above says, segmentation is partitioning the image into different regions.

You can think of these regions as parts of the image that represent, for example, different objects.

This can be exhaustive (all the objects are identified), for example when analyzing the environment of self-driving cars: the road, sidewalk, traffic signs, pedestrians, cars, bicycles, trees, buildings etc. are identified. Here's an example from the NVIDIA developer blog:

It can also separate a single object from the background (or from anything else), for example when trying to identify a cancer on a radiography image. Another image from NVIDIA:

The segmented regions don't have to represent objects. You can segment colors (example application: how ripe (red) is an apple?), textures (seismic image processing), or anything else (for example: detect the parts of a photo modified by Photoshop).

As you can see, in simple situations the segmentation can be color-based (see thresholding) or gradient/edge-based (e.g. the Canny edge detector), or you can rely on textures. In complicated situations you'll need deep neural networks (e.g. SegNet) and so on...

| 3 | No.3 Revision |

As the definition above says, segmentation is partitioning the image into different regions.

You can think of these regions as parts of the image that represent, for example, different objects.

This can be exhaustive (all the objects are identified), for example when analyzing the environment of self-driving cars: the road, sidewalk, traffic signs, pedestrians, cars, bicycles, trees, buildings etc. are identified. Here's an example from the NVIDIA developer blog:

It can also separate a single object from the background (or from anything else), for example when trying to identify a cancer on a radiography image. Another image from NVIDIA:

The segmented regions don't have to represent objects. You can segment colors (example application: how ripe (red) is an apple?), textures (e.g. cracks/defects in a material, seismic image processing), or anything else (e.g. detect the parts of a photo manipulated by Photoshop).
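To make the color case concrete, here is a minimal sketch of the apple example using plain NumPy. The function names `red_mask` and `ripeness` and the `margin` value are made up for illustration, and the "red dominates green and blue" rule is just one simple assumption about what "red" means. In OpenCV you would more likely convert to HSV and use `cv2.inRange`.

```python
import numpy as np

def red_mask(rgb, margin=60):
    """Boolean mask of pixels whose red channel exceeds green and blue by `margin`.

    `margin` is an illustrative value, not a tuned one.
    """
    rgb = rgb.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (r - g > margin) & (r - b > margin)

def ripeness(rgb, margin=60):
    """Fraction of pixels classified as red (a crude, hypothetical ripeness score)."""
    return float(red_mask(rgb, margin).mean())

# Synthetic 4x4 RGB image: left half reddish, right half greenish.
img = np.zeros((4, 4, 3), dtype=np.uint8)
img[:, :2] = (200, 30, 30)
img[:, 2:] = (40, 180, 40)

mask = red_mask(img)
score = ripeness(img)
```

On a real photo you would first isolate the apple from the background, otherwise the score also depends on how much of the frame the apple fills.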

As you can see, in simple situations the segmentation can be color-based (see thresholding) or gradient/edge-based (e.g. the Canny edge detector), or you can rely on textures. In complicated situations you'll need deep neural networks (e.g. SegNet) and so on...
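The two simple approaches can be sketched with plain NumPy on a tiny synthetic image. This is only an illustration: the function names and threshold values are assumptions, and the edge part is a bare gradient-magnitude threshold, not the Canny detector (which adds smoothing, non-maximum suppression and hysteresis). In OpenCV the usual entry points are `cv2.threshold` and `cv2.Canny`.

```python
import numpy as np

def threshold_segment(gray, t):
    """Intensity-based segmentation: True where the pixel is brighter than t."""
    return gray > t

def gradient_edges(gray, t):
    """Gradient-based segmentation: True where the gradient magnitude exceeds t."""
    # np.gradient returns the derivative along rows first, then along columns.
    gy, gx = np.gradient(gray.astype(float))
    return np.hypot(gx, gy) > t

# Synthetic 6x6 grayscale image: dark background with a bright 2x2 square.
gray = np.full((6, 6), 20, dtype=np.uint8)
gray[2:4, 2:4] = 220

foreground = threshold_segment(gray, 128)  # the region itself
edges = gradient_edges(gray, 50)           # the transitions around it
```

Thresholding gives you the region directly, while the gradient approach gives you its boundary, which you would still have to close and fill to get a region.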