|

2020-10-27 18:22:37 -0600

| received badge | ● Nice Answer

(source)

|

|

2020-10-10 14:40:37 -0600

| received badge | ● Nice Answer

(source)

|

|

2020-10-10 14:40:28 -0600

| received badge | ● Nice Question

(source)

|

|

2019-04-24 09:55:16 -0600

| received badge | ● Notable Question

(source)

|

|

2019-01-17 22:42:52 -0600

| received badge | ● Notable Question

(source)

|

|

2018-07-22 13:30:12 -0600

| asked a question | Error on reading directly from camera

When I try to run a video from code, camera = cv2.VideoCapture("E:\\true2.mp4") it r |

|

2018-06-12 14:12:22 -0600

| received badge | ● Popular Question

(source)

|

|

2018-05-19 06:47:42 -0600

| asked a question | How to find pixel per meter

I have a static camera through which I am focusing on the covered area, total covered area b |

|

2018-05-19 06:47:36 -0600

| asked a question | How to find pixel per meter

I have a static camera through which I am focusing on the covered area, total covered area b |

|

2018-05-19 06:47:25 -0600

| asked a question | How to find pixel per meter

I have a static camera through which I am focusing on the covered area, total covered area b |

|

2018-04-30 17:16:00 -0600

| received badge | ● Popular Question

(source)

|

|

2018-04-05 21:49:07 -0600

| received badge | ● Famous Question

(source)

|

|

2018-02-27 05:56:56 -0600

| received badge | ● Famous Question

(source)

|

|

2017-02-25 10:28:56 -0600

| received badge | ● Notable Question

(source)

|

|

2016-09-08 08:38:43 -0600

| received badge | ● Famous Question

(source)

|

|

2016-09-05 00:24:23 -0600

| marked best answer | How to Save frames to directory

I am trying to save the frames of my video file whose entropy is greater than 0.78; there may be more than 100 of them. Below is the code I tried:

if (entropy>0.78)

{

detector.detect(pre_img, keypoint2);

if (keypoint2.size() >= 20)

{

RetainBestKeypoints(keypoint2, 20);

dextract.compute( pre_img, keypoint2, descriptors_2);

Mat my_img_3 = descriptors_2.reshape(1,1);

float response = svm.predict(my_img_3);

if (response==1)

{

count_4++;

cv::imwrite("images.jpg", pre_img);

}

}

}

This code does not save all the images. I want to save them in a specific directory such as D:\images\ |
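A likely cause is that every call writes to the same file name, "images.jpg", so each saved frame replaces the previous one. A minimal sketch of one way around this, assuming a running counter such as `count_4` is available: build a distinct path per frame. The helper name `frameFileName` and the `frame_NNNN.jpg` naming scheme are hypothetical, not part of OpenCV.

```cpp
#include <iomanip>
#include <sstream>
#include <string>

// Build a distinct path such as "D:\images\frame_0007.jpg" for each saved frame.
// `dir` is expected to end with a path separator; `index` is the running count
// of saved frames (count_4 in the question's code).
std::string frameFileName(const std::string& dir, int index)
{
    std::ostringstream name;
    // Zero-pad the index to four digits so the files sort in frame order.
    name << dir << "frame_" << std::setw(4) << std::setfill('0') << index << ".jpg";
    return name.str();
}
```

In the question's code the call would then look like `cv::imwrite(frameFileName("D:\\images\\", count_4), pre_img);`. The target directory has to exist already, since `imwrite` does not create it.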

|

2016-06-25 05:13:48 -0600

| received badge | ● Popular Question

(source)

|

|

2016-04-26 08:05:26 -0600

| received badge | ● Notable Question

(source)

|

|

2016-02-11 01:41:27 -0600

| received badge | ● Notable Question

(source)

|

|

2016-02-04 13:21:58 -0600

| received badge | ● Popular Question

(source)

|

|

2016-01-28 06:55:47 -0600

| marked best answer | Merging two images showing brightness

I am trying to blend two images, that is, to put one image on top of the other. When I apply an overlay blend or simply merge the two images, the result shows extra brightness. Here are my two images (the first image is empty inside, like a Photoshop vignette)

and the other is

The code I wrote is:

int main( int argc, char** argv )

{

Mat img=imread("E:\\vig.png",-1);

Mat ch[4];

split(img,ch);

Mat im2 = ch[3]; // here's the vignette

im2 = 255 - im2; // eventually cure the inversion

Mat img2 = imread("E:\\ew.jpg");

Mat out2;

blending_overlay3(img2 , im2 , out2);

imshow("image",out2);

imwrite("E:\\image.jpg",out2);

waitKey();

}

It shows me a result like

but I require a result like

EDIT: The first image (the vignette) is hollow/empty in the center, but when my program reads it, the center becomes solid (bright). The history behind its implementation is here. The only problem is how the first (vignette) image is read: if it were read as it is, hollow/empty in the center, then whatever merge/blend/weighting I apply with the other image would not affect the center of the image or brighten it. That is what I want to do. |

|

2016-01-26 13:09:04 -0600

| received badge | ● Nice Question

(source)

|

|

2015-12-18 11:31:07 -0600

| marked best answer | Drawing shapes in images

I am trying to draw a circle in the center of the image, like the image below

The images may have many shapes and sizes, but I want to draw the circle/ellipse the same way every time. Below is the code I tried:

Mat img = imread ("E:\\test.jpg");

int center_img = (img.rows*img.cols)/2;

double radius_img = img.rows/2+img.cols/2;

circle(img,Point(img.rows/2,img.cols/2), img.rows/2,1, 8,0);

imshow ("img" , img);

cvWaitKey(0);
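One thing worth checking in the code above: `cv::Point` takes (x, y), that is (column, row), so `Point(img.rows/2, img.cols/2)` lands off-center on any non-square image. The color argument `1` is also close to black, which can make the circle hard to see. A minimal sketch of the geometry in plain C++, with a hypothetical helper `centeredCircle` that is not an OpenCV function:

```cpp
#include <algorithm>

// Center and radius of the largest circle that fits inside a rows x cols image.
struct CenteredCircle {
    int x;      // column of the center (horizontal coordinate)
    int y;      // row of the center (vertical coordinate)
    int radius; // half of the shorter side
};

CenteredCircle centeredCircle(int rows, int cols)
{
    CenteredCircle c;
    c.x = cols / 2;                      // x runs along the columns
    c.y = rows / 2;                      // y runs along the rows
    c.radius = std::min(rows, cols) / 2; // keeps the circle inside the image
    return c;
}
```

With `CenteredCircle c = centeredCircle(img.rows, img.cols);` the drawing call could become `circle(img, Point(c.x, c.y), c.radius, Scalar(0, 0, 255), 2);`, where the red color and thickness 2 are arbitrary choices.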

|

|

2015-12-11 11:29:22 -0600

| marked best answer | How to implement filters ?

I am trying to add filters such as a sepia filter to my app, as other photo editing applications do. So far I have no idea how to do it. Can anyone help me with the algorithms and how to implement them? Can I implement them in OpenCV using native C++, or are there built-in functions for this? |
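For sepia specifically, one common approach is a fixed 3x3 color matrix applied to every pixel. The sketch below shows it for a single RGB pixel in plain C++; the coefficients are the widely circulated sepia values rather than anything defined by OpenCV, and `Rgb`, `toByte` and `sepia` are hypothetical names. In OpenCV the same matrix could probably be applied to a whole image with `cv::transform`, keeping in mind that `imread` returns channels in BGR order.

```cpp
#include <algorithm>
#include <cmath>

struct Rgb { unsigned char r, g, b; };

// Round to the nearest integer and clamp to the 8-bit range.
static unsigned char toByte(double v)
{
    return static_cast<unsigned char>(
        std::min(255.0, std::max(0.0, std::floor(v + 0.5))));
}

// Apply the commonly cited sepia matrix to one pixel.
// Each output channel is a weighted sum of the input R, G and B.
Rgb sepia(const Rgb& p)
{
    Rgb out;
    out.r = toByte(0.393 * p.r + 0.769 * p.g + 0.189 * p.b);
    out.g = toByte(0.349 * p.r + 0.686 * p.g + 0.168 * p.b);
    out.b = toByte(0.272 * p.r + 0.534 * p.g + 0.131 * p.b);
    return out;
}
```

Other filters of this kind differ mainly in the matrix, so the same structure can be reused with different weights.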

|

2015-11-09 06:30:36 -0600

| received badge | ● Popular Question

(source)

|

|

2015-10-03 01:26:34 -0600

| received badge | ● Popular Question

(source)

|

|

2015-08-25 14:57:52 -0600

| received badge | ● Nice Answer

(source)

|

|

2015-08-03 18:22:17 -0600

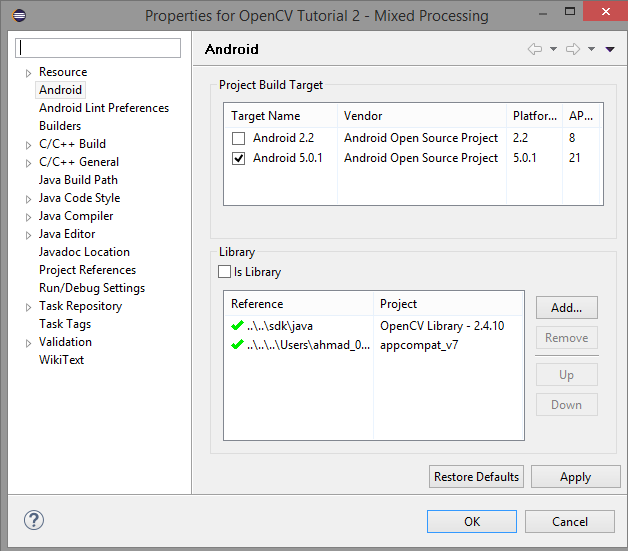

| marked best answer | Getting error using SVM with SURF

Below is part of my code. It runs fine, but after a long period of processing it shows a runtime error.

Initialization part:

std::vector< DMatch > matches;

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("FlannBased");

Ptr<DescriptorExtractor> extractor = new SurfDescriptorExtractor();

SurfFeatureDetector detector(500);

std::vector<KeyPoint> keypoints;

int dictionarySize = 1500;

TermCriteria tc(CV_TERMCRIT_ITER, 10, 0.001);

int retries = 1;

int flags = KMEANS_PP_CENTERS;

BOWKMeansTrainer bow(dictionarySize, tc, retries, flags);

BOWImgDescriptorExtractor dextract(extractor,matcher);

// Initialize constant values

const int nb_cars = files.size();

const int not_cars = files_no.size();

const int num_img = nb_cars + not_cars; // Get the number of images

I think the error starts from here:

// Initialize your training set.

cv::Mat training_mat(num_img,image_area,CV_32FC1);

cv::Mat labels(num_img,1,CV_32FC1);

std::vector<string> all_names;

all_names.assign(files.begin(),files.end());

all_names.insert(all_names.end(), files_no.begin(), files_no.end());

// Load image and add them to the training set

int count = 0;

Mat unclustered;

vector<string>::const_iterator i;

string Dir;

for (i = all_names.begin(); i != all_names.end(); ++i)

{

Dir=( (count < files.size() ) ? YourImagesDirectory : YourImagesDirectory_2);

tmp_img = cv::imread( Dir +*i, 0 );

resize( tmp_img, tmp_dst, tmp_dst.size() );

Mat row_img = tmp_dst; // get a one line image.

detector.detect( row_img, keypoints);

extractor->compute( row_img, keypoints, descriptors_1);

unclustered.push_back(descriptors_1);

//bow.add(descriptors_1);

++count;

}

cout<<"second part";

int count_2=0;

vector<string>::const_iterator k;

Mat vocabulary = bow.cluster(unclustered);

dextract.setVocabulary(vocabulary);

for (k = all_names.begin(); k != all_names.end(); ++k)

{

Dir=( (count_2 < files.size() ) ? YourImagesDirectory : YourImagesDirectory_2);

tmp_img = cv::imread( Dir +*k, 0 );

resize( tmp_img, tmp_dst, tmp_dst.size() );

Mat row_img = tmp_dst; // get a one line image.

detector.detect( row_img, keypoints);

dextract.compute( row_img, keypoints, descriptors_1);

training_mat.push_back(descriptors_1);

labels.at< float >(count_2, 0) = (count_2<nb_cars)?1:-1; // 1 for car, -1 otherwise*/

++count_2;

}

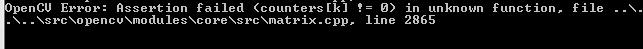

After processing for some time, it shows me the runtime error below

Error :

Edit: I changed Mat training_mat(0,dictionarySize,CV_32FC1);

to Mat training_mat(1,extractor->descriptorSize(),extractor->descriptorType());

but it didn't solve the problem |

|

2015-06-04 12:43:53 -0600

| marked best answer | Trying to translate formula for blending mode

I am using OpenCV C++ to build blending modes like those in Photoshop, and I want to implement the overlay mode. I searched for an equivalent in OpenCV and found this blending approach, but it is not the overlay I want. The overlay formula from this documentation is:

(Target > 0.5) * (1 - (1-2*(Target-0.5)) * (1-Blend)) +

(Target <= 0.5) * ((2*Target) * Blend)

Can anyone please explain this formula so that I can implement it in OpenCV C++? Is there already a built-in function for it, or any other easy way? |
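One way to read the formula: the two comparisons act as 0/1 masks, so for each channel value exactly one of the two products is active. Where Target is above 0.5 the first expression applies, otherwise the second. A minimal per-channel sketch in plain C++, assuming values normalized to [0, 1]; the names `overlay` and `overlay8` are hypothetical, and applying it to a `cv::Mat` would mean looping over pixels and channels.

```cpp
#include <algorithm>
#include <cmath>

// Overlay blend of one channel; both inputs are normalized to [0, 1].
double overlay(double target, double blend)
{
    if (target > 0.5)
        // Bright target: (1 - (1 - 2*(Target - 0.5)) * (1 - Blend))
        return 1.0 - (1.0 - 2.0 * (target - 0.5)) * (1.0 - blend);
    // Dark target: (2*Target) * Blend
    return 2.0 * target * blend;
}

// Convenience wrapper for 8-bit channels: normalize, blend, rescale, round.
unsigned char overlay8(unsigned char target, unsigned char blend)
{
    double v = overlay(target / 255.0, blend / 255.0);
    v = std::min(1.0, std::max(0.0, v));
    return static_cast<unsigned char>(std::floor(v * 255.0 + 0.5));
}
```

With a mid-gray blend value of 0.5 the target passes through unchanged, which is a quick sanity check for any implementation of this mode.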

|

2015-06-04 12:43:53 -0600

| received badge | ● Nice Answer

(source)

|

|

2015-05-21 02:59:51 -0600

| received badge | ● Notable Question

(source)

|

|

2015-04-22 20:37:09 -0600

| marked best answer | Object Detection using Surf

I am trying to detect a vehicle in a video. I will do this in a real-time application eventually, but for the time being, and to understand it well, I am doing it on a video. The code is below:

Mat img_template = imread("images.jpg"); // read template image

void surf_detection(Mat img_1,Mat img_2)

{

if( !img_1.data || !img_2.data )

{

std::cout<< " --(!) Error reading images " << std::endl;

}

//-- Step 1: Detect the keypoints using SURF Detector

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

std::vector< DMatch > good_matches;

do{

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Draw keypoints

Mat img_keypoints_1; Mat img_keypoints_2;

drawKeypoints( img_1, keypoints_1, img_keypoints_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

drawKeypoints( img_2, keypoints_2, img_keypoints_2, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

//-- Step 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Matching descriptor vectors using FLANN matcher

FlannBasedMatcher matcher;

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

double max_dist = 0;

double min_dist = 100;

//-- Quick calculation of max and min distances between keypoints

for( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist )

min_dist = dist;

if( dist > max_dist )

max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist );

printf("-- Min dist : %f \n", min_dist );

//-- Draw only "good" matches (i.e. whose distance is less than 2*min_dist )

//-- PS.- radiusMatch can also be used here.

for( int i = 0; i < descriptors_1.rows; i++ )

{

if( matches[i].distance < 2*min_dist )

{

good_matches.push_back( matches[i]);

}

}

}while(good_matches.size()<100);

//-- Draw only "good" matches

Mat img_matches;

drawMatches( img_1, keypoints_1, img_2, keypoints_2,good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Localize the object

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < good_matches.size(); i++ )

{

//-- Get the keypoints from the good matches

obj.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

scene.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

}

// if H is not found... the old H should be used and drawn in a different color

Mat H = findHomography( obj, scene, CV_RANSAC );

//-- Get the corners from the image_1 ( the object to be "detected" )

std::vector<Point2f> obj_corners(4);

obj_corners[0] = cvPoint(0,0);

obj_corners[1] = cvPoint( img_1.cols, 0 );

obj_corners[2] = cvPoint( img_1.cols, img_1.rows );

obj_corners[3] = cvPoint( 0, img_1.rows );

std::vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H);

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

line( img_matches, scene_corners[0] + Point2f( img_1.cols, 0), scene_corners[1] + Point2f( img_1.cols, 0), Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + Point2f( img_1.cols, 0), scene_corners[2] + Point2f( img_1.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + Point2f( img_1.cols, 0), scene_corners[3] + Point2f( img_1.cols, 0), Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + Point2f( img_1.cols, 0), scene_corners[0] + Point2f( img_1.cols, 0), Scalar( 0, 255, 0), 4 );

I am getting the following output

and

But my question is: why is it not drawing a rectangle on the detected object, like this:

I am doing ... (more) |

|

2015-04-18 21:16:50 -0600

| marked best answer | How to train my data only once

This is the code I am using to train on the dataset. Whenever I run it, it starts vector quantization, feature counting, training, etc. all over again, which takes time on every start. I want it to train once and not spend that time again and again.

char ch[30];

//--------Using SURF as feature extractor and FlannBased for assigning a new point to the nearest one in the dictionary

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("FlannBased");

Ptr<DescriptorExtractor> extractor = new SurfDescriptorExtractor();

SurfFeatureDetector detector(500);

//---dictionary size=number of cluster's centroids

int dictionarySize = 1500;

TermCriteria tc(CV_TERMCRIT_ITER, 10, 0.001);

int retries = 1;

int flags = KMEANS_PP_CENTERS;

BOWKMeansTrainer bowTrainer(dictionarySize, tc, retries, flags);

BOWImgDescriptorExtractor bowDE(extractor, matcher);

void collectclasscentroids() {

IplImage *img;

int i,j;

for(j=1;j<=4;j++)

for(i=1;i<=60;i++){

sprintf( ch,"%s%d%s%d%s","train/",j," (",i,").jpg");

const char* imageName = ch;

img = cvLoadImage(imageName,0);

vector<KeyPoint> keypoint;

detector.detect(img, keypoint);

Mat features;

extractor->compute(img, keypoint, features);

bowTrainer.add(features);

}

return;

}

int _tmain(int argc, _TCHAR* argv[])

{

int i,j;

IplImage *img2;

cout<<"Vector quantization..."<<endl;

collectclasscentroids();

vector<Mat> descriptors = bowTrainer.getDescriptors();

int count=0;

for(vector<Mat>::iterator iter=descriptors.begin();iter!=descriptors.end();iter++)

{

count += iter->rows;

}

cout<<"Clustering "<<count<<" features"<<endl;

//choosing cluster's centroids as dictionary's words

Mat dictionary = bowTrainer.cluster();

bowDE.setVocabulary(dictionary);

cout<<"extracting histograms in the form of BOW for each image "<<endl;

Mat labels(0, 1, CV_32FC1);

Mat trainingData(0, dictionarySize, CV_32FC1);

int k = 0;

vector<KeyPoint> keypoint1;

Mat bowDescriptor1;

//extracting histogram in the form of bow for each image

for(j = 1; j <= 4; j++)

for(i = 1; i <= 60; i++)

{

sprintf( ch,"%s%d%s%d%s","train/",j," (",i,").jpg");

const char* imageName = ch;

img2 = cvLoadImage(imageName, 0);

detector.detect(img2, keypoint1);

bowDE.compute(img2, keypoint1, bowDescriptor1);

trainingData.push_back(bowDescriptor1);

labels.push_back((float) j);

}

//Setting up SVM parameters

CvSVMParams params;

params.kernel_type = CvSVM::RBF;

params.svm_type = CvSVM::C_SVC;

params.gamma = 0.50625000000000009;

params.C = 312.50000000000000;

params.term_crit = cvTermCriteria(CV_TERMCRIT_ITER, 100, 0.000001);

CvSVM svm;

printf("%s\n", "Training SVM classifier");

bool res = svm.train(trainingData, labels, cv::Mat(), cv::Mat(), params);

cout<<"Processing evaluation data..."<<endl;

Mat groundTruth(0, 1, CV_32FC1);

Mat evalData(0, dictionarySize, CV_32FC1);

k = 0;

vector<KeyPoint> keypoint2;

Mat bowDescriptor2;

Mat results(0, 1, CV_32FC1);;

for(j = 1; j <= 4; j++)

for(i = 1; i <= 60; i++)

{

sprintf( ch, "%s%d%s%d%s", "eval/", j, " (",i,").jpg");

const char* imageName = ch;

img2 = cvLoadImage(imageName,0);

detector.detect(img2, keypoint2);

bowDE.compute(img2, keypoint2, bowDescriptor2);

evalData.push_back(bowDescriptor2);

groundTruth.push_back((float) j);

float response = svm.predict(bowDescriptor2);

results.push_back(response);

}

//calculate the number of unmatched classes

double errorRate = (double) countNonZero(groundTruth- results) / evalData.rows;

I just learned that the trained data can be saved to a file such as train.xml and then used for prediction, but I am not clear about how that works or how to use it. Demo code would be preferred ... (more) |
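The general pattern is "load the cached model if it exists, otherwise train once and save it". In OpenCV 2.4, `CvSVM` inherits `save` and `load` from `CvStatModel`, so `svm.save("train.xml")` after training and `svm.load("train.xml")` on later runs should fit here; the vocabulary `Mat` would also need to be stored, for example with `cv::FileStorage`. The sketch below shows only the caching pattern in plain C++. `Model`, `saveModel`, `loadModel`, `loadOrTrain`, `dummyTrain` and `g_trainCalls` are hypothetical stand-ins, not OpenCV API.

```cpp
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical stand-in for a trained model: just a list of weights.
typedef std::vector<double> Model;

// Write the model to a text file, one weight per line.
bool saveModel(const std::string& path, const Model& model)
{
    std::ofstream out(path.c_str());
    if (!out) return false;
    out.precision(17); // enough digits to read the doubles back exactly
    for (std::size_t i = 0; i < model.size(); ++i) out << model[i] << '\n';
    return out.good();
}

// Read the model back; returns false if the file is missing or empty.
bool loadModel(const std::string& path, Model& model)
{
    std::ifstream in(path.c_str());
    if (!in) return false;
    model.clear();
    double w;
    while (in >> w) model.push_back(w);
    return !model.empty();
}

// Load the cached model if present; otherwise run the (slow) training
// function once and cache its result for later runs.
Model loadOrTrain(const std::string& path, Model (*train)())
{
    Model model;
    if (loadModel(path, model)) return model;
    model = train();
    saveModel(path, model);
    return model;
}

// Dummy training function for demonstration; counts how often it runs.
int g_trainCalls = 0;
Model dummyTrain()
{
    ++g_trainCalls;
    Model m;
    m.push_back(0.5);
    m.push_back(-1.25);
    return m;
}
```

Calling `loadOrTrain("model.txt", &dummyTrain)` twice runs `dummyTrain` only on the first call; deleting the cache file forces retraining, which is the step to remember whenever the training images change.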

|

2015-03-17 09:33:33 -0600

| commented question | What are some easy methods to find objects in image processing?

As far as circle detection is concerned: study the curvature of every contour pixel and check whether it fits a circle or ellipse. This check may be done by computing a histogram of edge orientations for the contour pixels, or by checking the gradients of orientations from contour pixel to contour pixel. In the second case, for a circle or ellipse, the gradients should be almost uniform. Reference. For different color detection |

|

2015-03-17 09:32:23 -0600

| commented question | What are some easy methods to find objects in image processing?

What about first detecting the circles and then using the color values to detect green? As far as sunshine is concerned, we can give green a range of values: for example, if green has the value "1" and sunshine makes it lighter, can't we tell the program that if the color in that particular circle goes from 1 to 0.5, it is still green? |

|

2015-03-16 16:55:13 -0600

| commented question | Simple OpenCV program crashes

Did you check the path by making it simple, like cv::Mat image = cv::imread("C://bottle_label.jpg"); ? |

|

2015-03-16 16:37:44 -0600

| commented question | Simple OpenCV program crashes

Did you check it with cv::namedWindow("My Image"); after removing the 1? |

|

2015-03-16 12:16:04 -0600

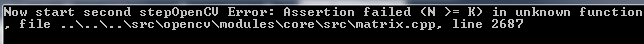

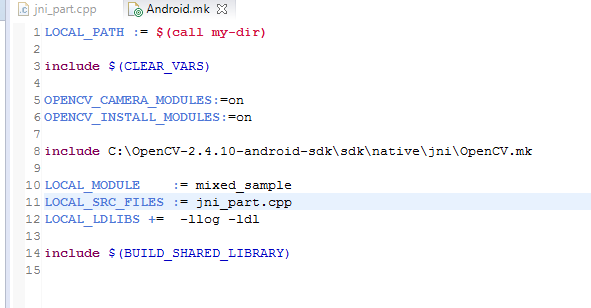

| edited question | OpenCv on Android without using OpenCv manager is not working

I am using OpenCV on Android. When I run the application, it prompts me to install OpenCV Manager, and I don't want to use that option for my application. I followed the steps in this answer from this forum, but it still asks me for OpenCV Manager. Below is my android.mk

and Properties option

I followed this OpenCV link. |

|

2015-03-10 16:05:55 -0600

| received badge | ● Enthusiast

|

|

2015-03-08 11:17:13 -0600

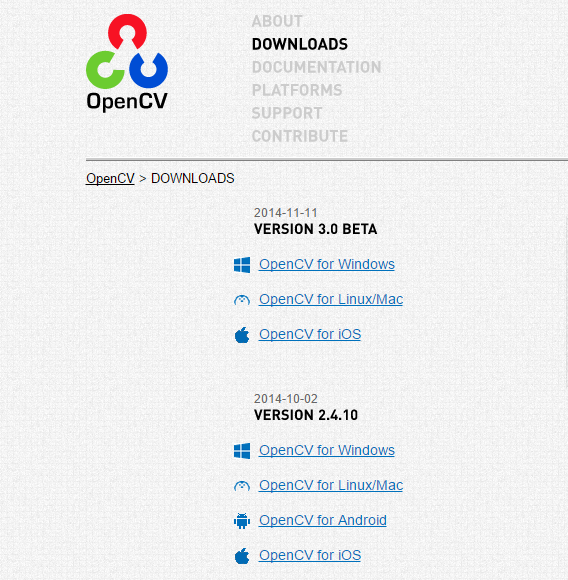

| asked a question | Dos run the .exe file of opencv

I opened the official OpenCV website to download OpenCV,

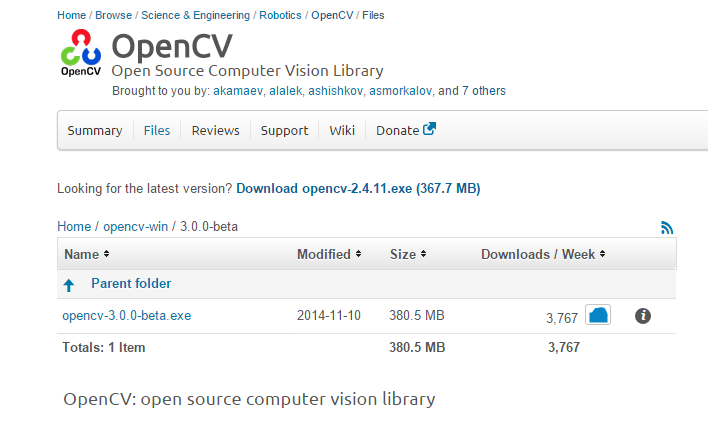

then I clicked on OpenCV for Windows under VERSION 3.0 BETA, and this page opened

and when I click on opencv.2.4.11.exe it starts downloading. After the download completes, a DOS command window runs and I cannot find where my opencv folder is. As suggested by @StevenPuttemans, I cleared my browsing history, but I still get this. |

|

2015-03-06 10:31:23 -0600

| commented question | Windows 7 installation fail: 'Can not open file OpenCV...exe as archive' |

|

2015-03-06 06:02:09 -0600

| commented question | Windows 7 installation fail: 'Can not open file OpenCV...exe as archive'

I will open a new thread with screenshots |

|

2015-03-04 13:06:44 -0600

| commented question | Windows 7 installation fail: 'Can not open file OpenCV...exe as archive'

Thanks @steven. Actually, I used the official link as well: I clicked on the 3.0 beta version (OpenCV for Windows), then the next page displayed "Looking for the latest version? Download opencv-2.4.11.exe (367.7 MB)" and I clicked on it. |