|

2017-02-08 03:56:28 -0600

| received badge | ● Supporter


|

|

2014-10-25 13:44:34 -0600

| received badge | ● Student


|

|

2014-04-09 05:40:18 -0600

| asked a question | Average histogram of multiple histograms

Hello, I calculated H-S histograms of 100 images of the same object captured under different environmental conditions. I now need one average histogram of these 100 histograms. Let's assume the histograms are stored in `vector<MatND> histImages;` as `histImages[i]`, i = 0 to 99.

Thank you in advance. Each histogram is computed like this:

```cpp
// hsv: the input image converted with cvtColor(src, hsv, CV_BGR2HSV) (not shown in the question)
int hbins = 30, sbins = 32;           // bin counts as in the OpenCV calcHist example (assumed)
int histSize[] = { hbins, sbins };
// hue varies from 0 to 179, see cvtColor
float hranges[] = { 0, 180 };
// saturation varies from 0 (black-gray-white) to 255 (pure spectrum color)
float sranges[] = { 0, 256 };
const float* ranges[] = { hranges, sranges };
MatND hist;
// we compute the histogram from the 0-th and 1-st channels
int channels[] = { 0, 1 };
calcHist( &hsv, 1, channels, Mat(), // do not use mask
          hist, 2, histSize, ranges,
          true,  // the histogram is uniform
          false );
double maxVal = 0;
minMaxLoc(hist, 0, &maxVal, 0, 0);
int scale = 10;
Mat histImg = Mat::zeros(sbins*scale, hbins*scale, CV_8UC3);
for( int h = 0; h < hbins; h++ )
    for( int s = 0; s < sbins; s++ )
    {
        float binVal = hist.at<float>(h, s);
        int intensity = cvRound(binVal*255/maxVal);
        rectangle( histImg, Point(h*scale, s*scale),
                   Point( (h+1)*scale - 1, (s+1)*scale - 1),
                   Scalar::all(intensity),
                   CV_FILLED );
    }
```
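One way to get an average histogram is the bin-wise mean: bin (h, s) of the result is the average of bin (h, s) over all 100 histograms. With OpenCV, the same loop can be written directly on the `Mat` objects by summing them into a CV_32F `Mat` and dividing by `histImages.size()`. If the images differ in size, normalizing each histogram first (for example with `cv::normalize` and `NORM_L1`) keeps every image's contribution equal.

The sketch below shows only the arithmetic, on flattened `std::vector<float>` histograms so it compiles without OpenCV. The `Histogram` alias and the function name are illustrative assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical flattened H-S histogram: hbins * sbins float bins, with bin
// (h, s) stored at index h * sbins + s (row-major, like a CV_32F Mat).
using Histogram = std::vector<float>;

// Bin-wise mean of equally sized histograms.
Histogram averageHistogram(const std::vector<Histogram>& hists) {
    assert(!hists.empty());
    Histogram mean(hists[0].size(), 0.0f);
    for (const Histogram& h : hists) {
        assert(h.size() == mean.size());  // all histograms must use the same bins
        for (std::size_t i = 0; i < h.size(); ++i)
            mean[i] += h[i];
    }
    for (float& v : mean)
        v /= static_cast<float>(hists.size());
    return mean;
}
```

For example, averaging {0, 2, 4, 6} and {2, 2, 0, 2} gives {1, 2, 2, 4}.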

|

|

2014-03-31 06:26:55 -0600

| commented answer | vector of vectors

This does not work: if the first file has 20 images and the second has 10, at the end you get a single FilesVector with 30 images.

What I need is FilesVector = [ vect(20), vect(10) ], so that FilesVector[0] gives me the images of the first file. |

|

2014-03-31 05:11:07 -0600

| asked a question | vector of vectors

Hello, I am looping through multiple files to read the images inside each one. I already have the file paths and the number of images in each file. Here is part of the code:

```cpp
vector<Mat> Images;
for( size_t i = 0; i < ImagesCounts.size(); i++ )
{
    Mat img = imread( filename, 1 ); // 1 = load as a 3-channel color image
    Images.push_back( img );
}
```

With this code I read the images of the first file, so Images[0] = img1, Images[1] = img2, .... I am now in the second loop (which has a different filename and ImagesCounts), and I need to save the first vector of images in a global vector: FilesVector(vect[1], vect[2], ..., vect[N]), where N is the number of files, vect[1] holds the images of the first file, vect[2] holds the images of the second file, and so on. How can I define the global vector and push the images from the first loop into vect[1]? I tried this code before going to the second loop, but it didn't work:

```cpp
vector<vector<Mat>> FilesVector;
FilesVector[0] = Images;
```
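`FilesVector[0] = Images;` writes into an element that does not exist yet: a default-constructed `std::vector` is empty, so indexing it is undefined behaviour. The outer vector has to grow first, either with `push_back` after each file or with `resize(N)` before the loop. Each file should also get a fresh inner vector, so the images are not collected into one long list of 30.

Below is a minimal sketch of the `push_back` approach. It assumes a hypothetical `Image` stand-in for `cv::Mat` and a hypothetical `loadImage` in place of `imread`, so it compiles without OpenCV.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for cv::Mat so the sketch compiles without OpenCV.
using Image = std::string;

// Hypothetical loader standing in for imread: names image k of file f.
Image loadImage(std::size_t f, std::size_t k) {
    return "file" + std::to_string(f) + "_img" + std::to_string(k);
}

// Returns one inner vector per file: result[f] holds only the images of file f.
std::vector<std::vector<Image>> readAllFiles(const std::vector<std::size_t>& imagesPerFile) {
    std::vector<std::vector<Image>> filesVector;
    for (std::size_t f = 0; f < imagesPerFile.size(); ++f) {
        std::vector<Image> images;  // fresh vector for this file
        for (std::size_t k = 0; k < imagesPerFile[f]; ++k)
            images.push_back(loadImage(f, k));
        filesVector.push_back(std::move(images));  // grows the outer vector by one
    }
    assert(filesVector.size() == imagesPerFile.size());
    return filesVector;
}
```

With 20 and 10 images, `readAllFiles({20, 10})` returns two inner vectors of sizes 20 and 10. With OpenCV, the same pattern is `vector<vector<Mat> > FilesVector;` followed by `FilesVector.push_back(Images);` after each file's loop.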

|

|

2014-03-03 08:17:25 -0600

| received badge | ● Scholar


|

|

2014-02-25 01:25:55 -0600

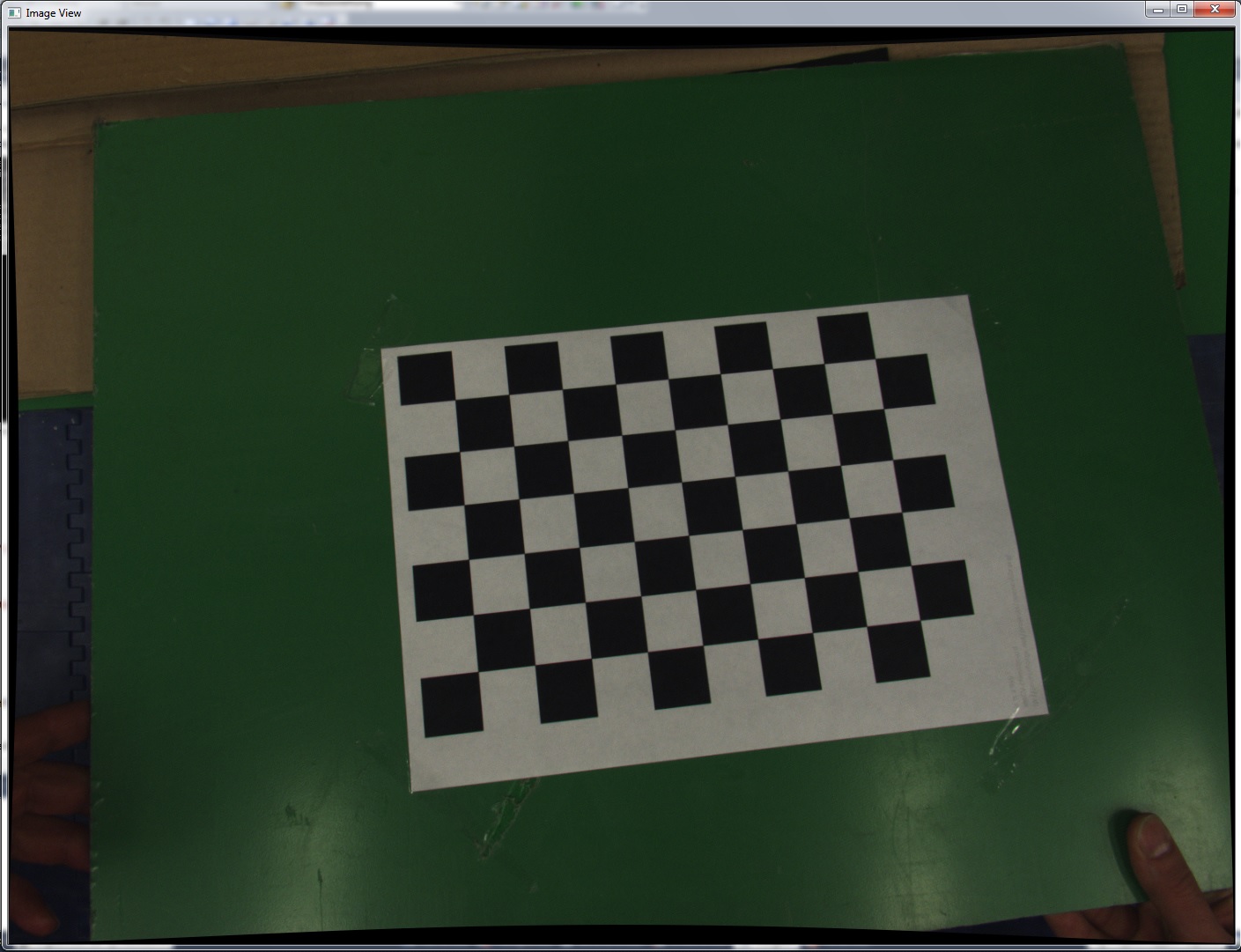

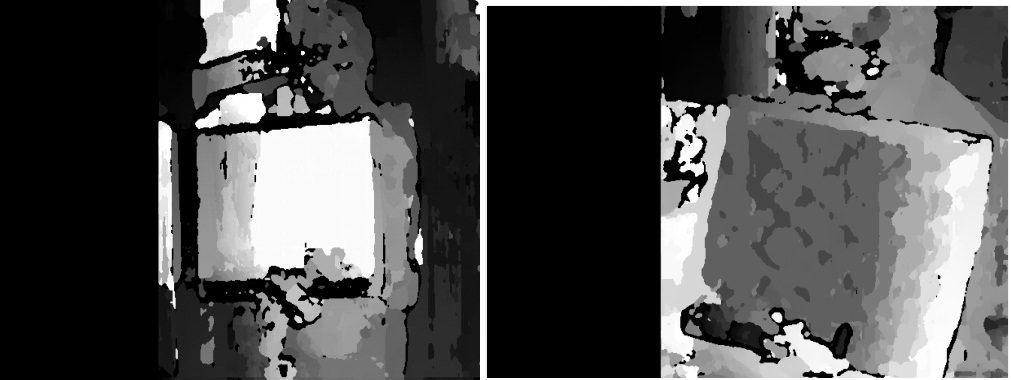

| asked a question | Remove black borders after undistortion Hallo, after calibration and undistortion I have this image and I need to remove the black borders(crop the image)only depending on the camera parameters which I have in the output XML file so how I can define the region of interest?

```xml
<Camera_Matrix type_id="opencv-matrix">
  <rows>3</rows>
  <cols>3</cols>
  <dt>d</dt>
  <data>
    1.3117098501208040e+003 0. 6.9550000000000000e+002 0.
    1.3117098501208040e+003 520. 0. 0. 1.</data></Camera_Matrix>
<Distortion_Coefficients type_id="opencv-matrix">
  <rows>5</rows>
  <cols>1</cols>
  <dt>d</dt>
  <data>
    -2.1400301530776253e-001 4.6169624608789456e-002 0. 0.
    1.8396036701643875e-001</data></Distortion_Coefficients>
<Avg_Reprojection_Error>3.6924789370054928e-001</Avg_Reprojection_Error>
<Per_View_Reprojection_Errors type_id="opencv-matrix">
  <rows>16</rows>
  <cols>1</cols>
  <dt>f</dt>
  <data>
    3.56106251e-001 3.05902541e-001 3.21639299e-001 3.97623330e-001
    4.79688823e-001 3.31597805e-001 2.92068005e-001 3.66955608e-001
    3.19793940e-001 6.19786203e-001 4.36506212e-001 3.26359451e-001
    3.68108034e-001 2.78367579e-001 2.72553772e-001 2.62321293e-001</data></Per_View_Reprojection_Errors>
<!-- a set of 6-tuples (rotation vector + translation vector) for each view -->
<Extrinsic_Parameters type_id="opencv-matrix">
  <rows>16</rows>
  <cols>6</cols>
  <dt>d</dt>
  <data>
    8.2524798674623173e-002 1.1957588136538763e-002
    -5.2492873372617030e-002 -8.7280621973657318e+001
    -3.9451553914075618e+001 5.7852359123958058e+002
    -2.0676032091414645e-001 -6.0286278602077602e-002 ...
<Image_points type_id="opencv-matrix">
  <rows>16</rows>
  <cols>54</cols>
  <dt>"2f"</dt>
  <data>
    4.99537811e+002 4.31490295e+002 5.57311035e+002 4.28100739e+002
    6.15545166e+002 4.24772125e+002 6.74481750e+002 4.21481506e+002
    7.33297974e+002 4.18324432e+002 7.91958740e+002 4.15214600e+002
    8.51019897e+002 4.12198181e+002 9.09479858e+002 4.09300568e+002
    9.67362793e+002 4.06486938e+002 5.02951508e+002 4.89633362e+002
    ...
```
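In OpenCV the usual route to a border-free result is `cv::getOptimalNewCameraMatrix` with `alpha = 0`. It returns a camera matrix for which only valid pixels remain, and it can also report the valid region through its `validPixROI` output.

To illustrate the idea with only the camera matrix and the five distortion coefficients, here is a standard-library sketch. It applies OpenCV's distortion model (k1, k2, p1, p2, k3) to map each undistorted pixel back to the source pixel it samples, reusing the same camera matrix for the output as `cv::undistort` does by default. It then shrinks a rectangle one pixel per side until every border pixel samples inside the source image.

The shrinking search is a heuristic of ours, not OpenCV's algorithm, and it assumes the valid area surrounds the principal point. The struct and function names are hypothetical.

```cpp
#include <cassert>

// Hypothetical container for the XML values: camera matrix + 5 distortion coefficients.
struct Camera {
    double fx, fy, cx, cy;
    double k1, k2, p1, p2, k3;
};

struct CropRect { int x, y, width, height; };

// Maps an undistorted pixel (u, v) to the distorted source pixel it samples.
void distortPixel(const Camera& c, double u, double v, double& su, double& sv) {
    double x = (u - c.cx) / c.fx, y = (v - c.cy) / c.fy;
    double r2 = x * x + y * y;
    double radial = 1 + c.k1 * r2 + c.k2 * r2 * r2 + c.k3 * r2 * r2 * r2;
    double xd = x * radial + 2 * c.p1 * x * y + c.p2 * (r2 + 2 * x * x);
    double yd = y * radial + c.p1 * (r2 + 2 * y * y) + 2 * c.p2 * x * y;
    su = xd * c.fx + c.cx;
    sv = yd * c.fy + c.cy;
}

// True if output pixel (u, v) takes its value from inside the w x h source image.
bool samplesInside(const Camera& c, int u, int v, int w, int h) {
    const double eps = 1e-6;  // tolerance for floating-point rounding
    double su, sv;
    distortPixel(c, u, v, su, sv);
    return su >= -eps && sv >= -eps && su <= w - 1 + eps && sv <= h - 1 + eps;
}

// Heuristic: shrink all four sides until every border pixel is valid.
CropRect validCrop(const Camera& c, int w, int h) {
    assert(w > 0 && h > 0);
    int left = 0, top = 0, right = w - 1, bottom = h - 1;
    while (left < right && top < bottom) {
        bool ok = true;
        for (int u = left; u <= right && ok; ++u)
            ok = samplesInside(c, u, top, w, h) && samplesInside(c, u, bottom, w, h);
        for (int v = top; v <= bottom && ok; ++v)
            ok = samplesInside(c, left, v, w, h) && samplesInside(c, right, v, w, h);
        if (ok) break;
        ++left; ++top; --right; --bottom;
    }
    return CropRect{left, top, right - left + 1, bottom - top + 1};
}
```

The returned rectangle can then be used as the ROI of the undistorted image. With zero distortion it is the full image. Whether it removes the borders in the images above depends on which camera matrix was used for the undistorted output, which the question does not show.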

|

|

2014-02-19 03:48:49 -0600

| asked a question | create Header file of Calibration sample

Hello, I need to create a header file, mainCalib.h, from mainCalib.cpp. mainCalib.cpp contains the OpenCV camera calibration sample, and in the end I want to run the program from main.cpp. This is mainCalib.cpp:

```cpp
#include <iostream>
#include <sstream>
#include <time.h>
#include <stdio.h>

#include <opencv2/core/core.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/calib3d/calib3d.hpp>
#include <opencv2/highgui/highgui.hpp>

#ifndef _CRT_SECURE_NO_WARNINGS
# define _CRT_SECURE_NO_WARNINGS
#endif

#include "mainCalib.h"

using namespace cv;
using namespace std;

void help()
{
    cout << "This is a camera calibration sample." << endl
         << "Usage: calibration configurationFile" << endl
         << "Near the sample file you'll find the configuration file, which has detailed help of "
            "how to edit it. It may be any OpenCV supported file format XML/YAML." << endl;
}

class Settings
{
public:
    Settings() : goodInput(false) {}
    enum Pattern { NOT_EXISTING, CHESSBOARD, CIRCLES_GRID, ASYMMETRIC_CIRCLES_GRID };
    enum InputType { INVALID, CAMERA, VIDEO_FILE, IMAGE_LIST };

    void write(FileStorage& fs) const // Write serialization for this class
    {
        fs << "{" << "BoardSize_Width" << boardSize.width
                  << "BoardSize_Height" << boardSize.height
                  << "Square_Size" << squareSize
                  << "Calibrate_Pattern" << patternToUse
                  << "Calibrate_NrOfFrameToUse" << nrFrames
                  << "Calibrate_FixAspectRatio" << aspectRatio
                  << "Calibrate_AssumeZeroTangentialDistortion" << calibZeroTangentDist
                  << "Calibrate_FixPrincipalPointAtTheCenter" << calibFixPrincipalPoint
                  << "Write_DetectedFeaturePoints" << bwritePoints
                  << "Write_extrinsicParameters" << bwriteExtrinsics
                  << "Write_outputFileName" << outputFileName
                  << "Show_UndistortedImage" << showUndistorsed
                  << "Input_FlipAroundHorizontalAxis" << flipVertical
                  << "Input_Delay" << delay
                  << "Input" << input
           << "}";
    }

    void read(const FileNode& node) // Read serialization for this class
    {
        node["BoardSize_Width" ] >> boardSize.width;
        node["BoardSize_Height"] >> boardSize.height;
        node["Calibrate_Pattern"] >> patternToUse;
        node["Square_Size"] >> squareSize;
        node["Calibrate_NrOfFrameToUse"] >> nrFrames;
        node["Calibrate_FixAspectRatio"] >> aspectRatio;
        node["Write_DetectedFeaturePoints"] >> bwritePoints;
        node["Write_extrinsicParameters"] >> bwriteExtrinsics;
        node["Write_outputFileName"] >> outputFileName;
        node["Calibrate_AssumeZeroTangentialDistortion"] >> calibZeroTangentDist;
        node["Calibrate_FixPrincipalPointAtTheCenter"] >> calibFixPrincipalPoint;
        node["Input_FlipAroundHorizontalAxis"] >> flipVertical;
        node["Show_UndistortedImage"] >> showUndistorsed;
        node["Input"] >> input;
        node["Input_Delay"] >> delay;
        interprate();
    }

    void interprate()
    {
        goodInput = true;
        if (boardSize.width <= 0 || boardSize.height <= 0)
        {
            cerr << "Invalid Board size: " << boardSize.width << " " << boardSize.height << endl;
            goodInput = false;
        }
        if (squareSize <= 10e-6)
        {
            cerr << "Invalid square size " << squareSize << endl;
            goodInput = false;
        }
        if (nrFrames <= 0)
        {
            cerr << "Invalid number of frames " << nrFrames << endl;
            goodInput = false;
        }

        if (input.empty()) // Check for valid input
            inputType = INVALID;
        else
        {
            if (input[0] >= '0' && input[0] <= '9')
            {
                stringstream ss(input);
                ss >> cameraID;
                inputType = CAMERA;
            }
            else
            {
                if (readStringList(input, imageList))
                {
                    inputType = IMAGE_LIST;
                    nrFrames = (nrFrames < (int)imageList.size()) ? nrFrames : (int)imageList.size();
                }
                else
                    inputType = VIDEO_FILE;
            }
            if (inputType == CAMERA)
                inputCapture.open(cameraID);
            if (inputType == VIDEO_FILE)
                inputCapture.open(input);
            if (inputType != IMAGE_LIST && !inputCapture.isOpened())
                inputType = INVALID;
        }
        if (inputType == INVALID)
        {
            cerr << " Inexistent input: " << input;
            goodInput = false;
        }

        flag = 0;
        if(calibFixPrincipalPoint) flag |= CV_CALIB_FIX_PRINCIPAL_POINT;
        if(calibZeroTangentDist)   flag |= CV_CALIB_ZERO_TANGENT_DIST;
        if(aspectRatio)            flag |= CV_CALIB_FIX_ASPECT_RATIO;

        calibrationPattern = NOT_EXISTING;
        if (!patternToUse.compare("CHESSBOARD")) calibrationPattern = CHESSBOARD;
        if (!patternToUse.compare("CIRCLES_GRID")) calibrationPattern = CIRCLES_GRID;
        if (!patternToUse.compare("ASYMMETRIC_CIRCLES_GRID")) calibrationPattern = ASYMMETRIC_CIRCLES_GRID;
        if (calibrationPattern == NOT_EXISTING)
        {
            cerr << " Inexistent camera calibration mode: " << patternToUse << endl;
            goodInput = false;
        }
        atImageList = 0;
    }

    Mat nextImage()
    {
        Mat result;
        if( inputCapture.isOpened() )
        {
            Mat view0;
            inputCapture >> view0;
            view0.copyTo(result);
        }
        else if( atImageList < (int)imageList.size() )
            result = imread(imageList[atImageList++], CV_LOAD_IMAGE_COLOR);

        return result;
    }

    static bool readStringList( const string& filename, vector<string>& l )
    {
        l.clear();
        FileStorage fs(filename, FileStorage::READ);
        if( !fs.isOpened() )
            return false;
        FileNode n = fs.getFirstTopLevelNode();
        if( n.type() != FileNode::SEQ )
            return false;
        FileNodeIterator it = n.begin(), it_end = n.end();
        for( ; it != it_end; ++it )
            l.push_back((string)*it);
        return true;
    }

public:
    Size boardSize; // The size of the board -> Number of items by width and ...
    // ...
```
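One common way to split such a sample, sketched here under assumptions:

- Keep the `Settings` class and the helper functions inside mainCalib.cpp.
- Rename the sample's `main()` to an ordinary function.
- Put only that function's declaration, inside an include guard, in mainCalib.h.
- Include the header from main.cpp and call the function there.

The name `runCalibration` and its signature are hypothetical, and its body is a stub standing in for the sample's original `main()`. The three files are shown in one listing so that it compiles as a single unit; in a real project each part goes in its own file.

```cpp
// ---- mainCalib.h (hypothetical header) ----
#ifndef MAINCALIB_H
#define MAINCALIB_H

#include <string>

// Hypothetical entry point replacing the sample's main(); returns 0 on success.
int runCalibration(const std::string& settingsFile);

#endif // MAINCALIB_H

// ---- mainCalib.cpp (the sample code, with main() renamed) ----
#include <cassert>
#include <iostream>

// Helpers such as help() and the Settings class stay in this file; `static`
// keeps help() private to it, so it cannot clash with symbols in main.cpp.
static void help()
{
    std::cout << "This is a camera calibration sample." << std::endl;
}

int runCalibration(const std::string& settingsFile)
{
    assert(!settingsFile.empty());
    help();
    // Stub: the body of the sample's original main() (reading the settings
    // with FileStorage, the capture and calibration loop, ...) goes here.
    std::cout << "Using settings file: " << settingsFile << std::endl;
    return 0;
}

// ---- main.cpp ----
// #include "mainCalib.h"
//
// int main(int argc, char* argv[])
// {
//     return runCalibration(argc > 1 ? argv[1] : "default.xml");
// }
```

The design point is that the header exposes only what main.cpp needs (one function declaration). Everything else, including the OpenCV includes, stays an implementation detail of mainCalib.cpp.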

|

|

2014-02-19 01:08:31 -0600

| commented answer | Camera Undistortion

Thank you for this explanation. |

|

2014-02-14 06:02:47 -0600

| asked a question | Camera Undistortion

Hello, I have the following camera calibration parameters, and they work well with the undistortion function. How can I transform these parameters if I rotate the camera axes by 90 degrees to the left or to the right?

```cpp
unsigned int w = 1040, h = 1392;
double disto[5] = { -0.205410121, 0.091357057, 0.000740608, 0.000895488, 0.053117702 };
cv::Mat cameraMatrix;
cv::Mat distCoeffs;
cv::Mat cameraIdeal;
cv::Mat ROTATION(3, 3, CV_64F);
// ocv_setcam and vect2ocv are helper functions, not part of OpenCV
ocv_setcam(cameraMatrix, 1286.635225995, 1287.007162383, 520, 730.910560759);
ocv_setcam(cameraIdeal, 1288, 1288, 520, 762);
vect2ocv(distCoeffs, disto, 5);
assert(ROTATION.isContinuous());
double* R = ((double*) ROTATION.data);
R[0] = 1.0; // col=0 at row=0
R[1] = 0.0;
R[2] = 0.0;
R[3] = 0.0;
R[4] = 1.0;
R[5] = 0.0;
R[6] = 0.0;
R[7] = 0.0;
R[8] = 1.0;
```
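Whether the parameters need to change depends on what is rotated. If the camera itself is physically rotated, the intrinsics stay attached to the sensor and remain valid. If instead the images are rotated by 90° in software (for example with a transpose plus a flip), the calibration can be carried over without recalibrating:

- The focal lengths swap.
- The principal point moves with the pixels.
- The radial coefficients k1, k2, k3 are unchanged.
- The tangential coefficients p1, p2 swap with a sign change.

The sketch below encodes these rules. We derived them from the pixel mappings stated in the comments, with pixel centres at integer coordinates as in OpenCV. The struct and function names are hypothetical.

```cpp
#include <cassert>

// Hypothetical container for the calibration values above.
struct Intrinsics {
    int width, height;          // image size in pixels
    double fx, fy, cx, cy;      // camera matrix entries
    double k1, k2, p1, p2, k3;  // distortion coefficients in OpenCV order
};

// Image rotated 90 degrees clockwise: pixel (u, v) -> (height - 1 - v, u).
Intrinsics rotateClockwise(const Intrinsics& c) {
    assert(c.width > 0 && c.height > 0);
    Intrinsics r = c;            // k1, k2, k3 are rotation-invariant
    r.width = c.height;  r.height = c.width;
    r.fx = c.fy;         r.fy = c.fx;
    r.cx = (c.height - 1) - c.cy;
    r.cy = c.cx;
    r.p1 = c.p2;         r.p2 = -c.p1;
    return r;
}

// Image rotated 90 degrees counterclockwise: pixel (u, v) -> (v, width - 1 - u).
Intrinsics rotateCounterClockwise(const Intrinsics& c) {
    assert(c.width > 0 && c.height > 0);
    Intrinsics r = c;
    r.width = c.height;  r.height = c.width;
    r.fx = c.fy;         r.fy = c.fx;
    r.cx = c.cy;
    r.cy = (c.width - 1) - c.cx;
    r.p1 = -c.p2;        r.p2 = c.p1;
    return r;
}
```

Applying one rotation and then the other returns the original focal lengths and tangential coefficients, which is a quick consistency check on the sign rules.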

|

|

2013-05-15 08:38:57 -0600

| answered a question | From 3d point cloud to disparity map

Hello, I would like to know whether you figured out your coordinate system and how to interpret the output of the ReprojectImageTo3D function, as I am working on the same topic. My results look like this: X values are always between 350 and 450, Y values between -80 and -30, and Z values between 230 and 280, sometimes 10000 for points that are far away.

Thank you for your help. |

|

2013-05-15 03:34:22 -0600

| asked a question | StereoSGBM algorithm

Hello, the 3D reprojection of the StereoSGBM result gives the X, Y, Z coordinates of each pixel in the depth image:

```csharp
public void Computer3DPointsFromStereoPair(Image<Gray, Byte> left, Image<Gray, Byte> right, out Image<Gray, short> disparityMap, out MCvPoint3D32f[] points)
{
    // ... (disparity computation with StereoSGBM not shown in the question)
    points = PointCollection.ReprojectImageTo3D(disparityMap, Q);
}
```

Taking the first element of this result, points[0] = { X = 414.580017, Y = -85.03029, Z = 10000.0 }, I'm confused: which pixel does this point refer to, and why isn't it X = 0, Y = 0, Z = 10000.0?
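OpenCV's documentation for `reprojectImageTo3D` gives the mapping [X Y Z W]^T = Q · [x y disparity(x, y) 1]^T, with the 3D point (X/W, Y/W, Z/W). That explains both observations.

First, the output is stored row by row, so points[0] belongs to the top-left pixel (0, 0). Its X and Y are measured from the principal point, not from the image corner, so they are not zero there.

Second, with `handleMissingValues = true`, OpenCV gives pixels with the minimal (outlier) disparity a very large Z, documented as 10000. We assume Emgu's `PointCollection.ReprojectImageTo3D` enables this option, but a Z of exactly 10000.0 is consistent with it.

The sketch below reproduces the formula with the standard library only. The `Q` values in the usage are made up for illustration.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<double, 4>, 4>;
struct Point3 { double X, Y, Z; };

// [X Y Z W]^T = Q * [x y d 1]^T, then divide by W (reprojectImageTo3D's formula).
Point3 reprojectPixel(const Mat4& Q, double x, double y, double d) {
    const double in[4] = {x, y, d, 1.0};
    double out[4] = {0, 0, 0, 0};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out[r] += Q[r][c] * in[c];
    assert(out[3] != 0.0);  // W == 0 would put the point at infinity
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}

// Row-major layout: element i belongs to pixel column i % width, row i / width.
void pixelOfIndex(int i, int width, int& col, int& row) {
    assert(width > 0 && i >= 0);
    col = i % width;
    row = i / width;
}
```

For example, take a made-up Q with c_x = 320, c_y = 240, f = 500 and -1/T_x = 10 (a 0.1 baseline). Pixel (320, 240) at disparity 50 reprojects to (0, 0, 1), while pixel (0, 0) at the same disparity gives X = -0.64 and Y = -0.48.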

|

|

2013-05-14 05:47:13 -0600

| asked a question | Stereo imaging & Calibration

Hello, the result of the 3D reprojection function is a matrix that holds X, Y, Z for each pixel. Taking the first element of this matrix, points[0] has X = 414.580017, Y = -85.03029, Z = 10000.0. What is the unit here, and where can I find this pixel in the image? And why isn't it X = 0, Y = 0, Z = 10000.0?

```csharp
points = PointCollection.ReprojectImageTo3D(disparityMap, Q);
```
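The unit question follows from the form of the Q matrix that `cv::stereoRectify` returns, as given in the OpenCV documentation. Here (c_x, c_y) is the principal point of the first camera, c_x' that of the second, f the focal length in pixels and T_x the x component of the translation between the cameras.

The assumption below is the usual rectified case, c_x = c_x', with baseline B = -T_x (positive for the usual left/right rig). Also note that StereoBM/StereoSGBM return fixed-point disparities multiplied by 16, so d here is the disparity after dividing by 16 if the map is used as returned.

$$
\begin{bmatrix} X \\ Y \\ Z \\ W \end{bmatrix}
= Q \begin{bmatrix} x \\ y \\ d \\ 1 \end{bmatrix},
\qquad
Q = \begin{bmatrix}
1 & 0 & 0 & -c_x \\
0 & 1 & 0 & -c_y \\
0 & 0 & 0 & f \\
0 & 0 & -1/T_x & (c_x - c_x')/T_x
\end{bmatrix}
$$

With c_x = c_x' the last row gives W = -d/T_x = d/B, so

$$
\frac{X}{W} = \frac{(x - c_x)\,B}{d}, \qquad
\frac{Y}{W} = \frac{(y - c_y)\,B}{d}, \qquad
\frac{Z}{W} = \frac{f\,B}{d}.
$$

Both f and d are in pixels and cancel, so X, Y, Z come out in the units of the baseline. Those are the units in which the calibration square size was given. At pixel (0, 0) the formula gives X/W = -c_x B/d, which is why the first point is not at X = 0, Y = 0.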

|

|

2013-04-24 02:45:57 -0600

| asked a question | Capture Class & Axis Camera

Hello, I can connect to the robot's Axis cameras, but I cannot set up the Capture class to get frames from these camera devices. Is there any other way to do that?

```csharp
this.Video_Source1 = new AxAXISMEDIACONTROLLib.AxAxisMediaControl();
this.Video_Source2 = new AxAXISMEDIACONTROLLib.AxAxisMediaControl();
Video_Source1.MediaURL = CompleteURL("192.168.0.57:8083", "mjpeg");
Video_Source1.Play();
Video_Source2.MediaURL = CompleteURL("192.168.0.58:8082", "mjpeg");
Video_Source2.Play();
_Capture1 = new Capture(/* here is the problem */);
_Capture2 = new Capture(/* here is the problem */);
_Capture1.ImageGrabbed += ProcessFrame;
_Capture1.Start();
}

private void ProcessFrame(object sender, EventArgs arg)
{
    Image<Bgr, Byte> frame_S1 = _Capture1.RetrieveBgrFrame();
    Image<Bgr, Byte> frame_S2 = _Capture2.RetrieveBgrFrame();
    // ...
}
```
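If `Capture` cannot be pointed at the cameras, one alternative is to read each camera's MJPEG HTTP stream yourself and cut it into individual JPEG images. Each image can then be decoded, for example with `cv::imdecode` in OpenCV.

The sketch below covers only the cutting step, under simplifying assumptions:

- It scans for the JPEG start-of-image (FF D8) and end-of-image (FF D9) markers.
- It ignores the multipart headers between frames.
- The HTTP connection itself is omitted.
- A JPEG that embeds a thumbnail with its own markers would confuse it.

The names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// Cuts complete JPEG images out of raw MJPEG stream bytes. Bytes after the last
// complete frame (an unfinished frame or header text) stay in `buffer`, so the
// next network read can be appended to them.
std::vector<Bytes> extractJpegFrames(Bytes& buffer) {
    const std::size_t npos = static_cast<std::size_t>(-1);
    std::vector<Bytes> frames;
    std::size_t start = npos;   // offset of the current frame's FF D8, if any
    std::size_t consumed = 0;   // end of the last complete frame
    for (std::size_t i = 0; i + 1 < buffer.size(); ++i) {
        if (buffer[i] != 0xFF)
            continue;
        if (buffer[i + 1] == 0xD8 && start == npos) {
            start = i;
        } else if (buffer[i + 1] == 0xD9 && start != npos) {
            frames.emplace_back(buffer.begin() + start, buffer.begin() + i + 2);
            consumed = i + 2;
            start = npos;
            ++i;  // skip the D9 byte
        }
    }
    const std::size_t keepFrom = (start != npos) ? start : consumed;
    assert(keepFrom <= buffer.size());
    buffer.erase(buffer.begin(), buffer.begin() + keepFrom);
    return frames;
}
```

Each returned frame is a complete JPEG file in memory. Keeping the tail of the buffer means a frame split across two network reads is still recovered once its end arrives.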

|

|

2013-04-24 02:20:28 -0600

| received badge | ● Editor


|

|

2013-04-24 02:14:34 -0600

| asked a question | Capture Class & Axis Camera

Hello, I can connect to the robot's Axis cameras, but how can I set up the Capture class to get frames from these camera devices? Or is there any other way to do that?

```csharp
Video_Source1.MediaURL = CompleteURL("192.168.0.57:8083", "mjpeg");
Video_Source1.Play();
Video_Source2.MediaURL = CompleteURL("192.168.0.58:8082", "mjpeg");
Video_Source2.Play();
_Capture1 = new Capture(/* here is the problem */);
_Capture2 = new Capture(/* here is the problem */);
_Capture1.ImageGrabbed += ProcessFrame;
_Capture1.Start();
}

private void ProcessFrame(object sender, EventArgs arg)
{
    Image<Bgr, Byte> frame_S1 = _Capture1.RetrieveBgrFrame();
    Image<Bgr, Byte> frame_S2 = _Capture2.RetrieveBgrFrame();
    // ...
}
```
|