|

2019-12-11 00:40:12 -0600

| received badge | ● Nice Question

(source)

|

|

2019-12-11 00:40:04 -0600

| received badge | ● Nice Question

(source)

|

|

2019-01-28 03:44:57 -0600

| received badge | ● Popular Question

(source)

|

|

2018-03-19 14:54:06 -0600

| received badge | ● Famous Question

(source)

|

|

2016-09-09 10:53:59 -0600

| received badge | ● Famous Question

(source)

|

|

2016-08-15 04:39:25 -0600

| received badge | ● Necromancer

(source)

|

|

2016-06-15 13:51:50 -0600

| received badge | ● Notable Question

(source)

|

|

2016-01-19 00:31:40 -0600

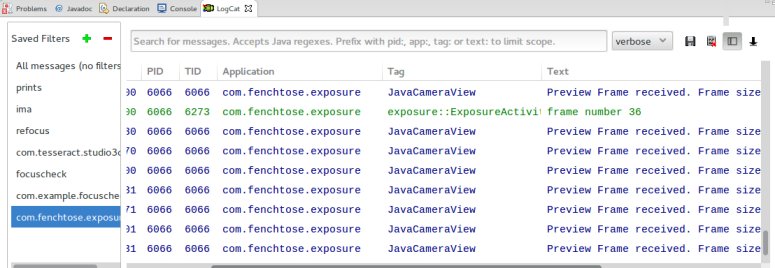

| answered a question | Android Camera2 YUV to RGB conversion turns out green? I have been able to do it successfully.

Image.Plane Y = image.getPlanes()[0];

Image.Plane U = image.getPlanes()[1];

Image.Plane V = image.getPlanes()[2];

int Yb = Y.getBuffer().remaining();

int Ub = U.getBuffer().remaining();

int Vb = V.getBuffer().remaining();

byte[] data = new byte[Yb + Ub + Vb];

Y.getBuffer().get(data, 0, Yb);

U.getBuffer().get(data, Yb, Ub);

V.getBuffer().get(data, Yb + Ub, Vb);

I'm using OpenCV through JNI and was able to convert the buffer to a BGR image using cvtColor with CV_YUV2BGR_I420. Here's my C++ code.

jboolean Java_com_fenchtose_myPackage_myClass_myMethod(

JNIEnv* env, jobject thiz,

jint width, jint height,

jbyteArray YUVFrameData)

{

jbyte * pYUVFrameData = env->GetByteArrayElements(YUVFrameData, 0);

Mat mNV(height + height/2, width, CV_8UC1, (unsigned char*)pYUVFrameData);

Mat mBgr(height, width, CV_8UC3);

cv::cvtColor(mNV, mBgr, CV_YUV2BGR_I420);

env->ReleaseByteArrayElements(YUVFrameData, pYUVFrameData, 0);

return true;

}
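The plain concatenation in the answer above yields a valid I420 buffer only when every plane is tightly packed. Camera2's YUV_420_888 planes report a row stride and a pixel stride (Image.Plane.getRowStride() and getPixelStride()), and on many devices the chroma planes use a pixel stride of 2. The following is a minimal sketch of de-striding the planes before concatenation, written in Python for brevity. The names `pack_plane` and `to_i420` and the `strides` argument are hypothetical and not part of any Android or OpenCV API; even width and height are assumed.

```python
def pack_plane(buf, width, height, row_stride, pixel_stride):
    """Copy a strided image plane into a tightly packed bytes object."""
    out = bytearray(width * height)
    for row in range(height):
        start = row * row_stride
        for col in range(width):
            out[row * width + col] = buf[start + col * pixel_stride]
    return bytes(out)


def to_i420(y, u, v, width, height, strides):
    """Concatenate Y, U, V into an I420 buffer.

    strides maps a plane name to (row_stride, pixel_stride);
    the chroma planes are half the size in each dimension.
    """
    cw, ch = width // 2, height // 2
    return (pack_plane(y, width, height, *strides["y"])
            + pack_plane(u, cw, ch, *strides["u"])
            + pack_plane(v, cw, ch, *strides["v"]))
```

The result always has width * height * 3 / 2 bytes, which matches the (height + height/2) x width single-channel Mat used in the C++ code.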

|

|

2016-01-08 05:38:41 -0600

| received badge | ● Notable Question

(source)

|

|

2015-06-12 10:30:29 -0600

| received badge | ● Notable Question

(source)

|

|

2015-05-18 02:58:53 -0600

| received badge | ● Popular Question

(source)

|

|

2015-02-22 10:10:00 -0600

| received badge | ● Good Question

(source)

|

|

2014-12-09 13:49:16 -0600

| marked best answer | How to set Parameters for StereoBM in Android/Java? I am trying to make a disparity map from two images that I took with the phone's camera. I have written the code in Android/Java (not using C++). I'm using StereoBM to calculate the disparity map, but I am unable to set the parameters. In C++, it can be done through the state of a StereoBM object:

StereoBM sbm;

sbm.state->SADWindowSize

sbm.state->numberOfDisparities

sbm.state->preFilterSize

sbm.state->preFilterCap

sbm.state->minDisparity

sbm.state->textureThreshold

sbm.state->uniquenessRatio

sbm.state->speckleWindowSize

sbm.state->speckleRange

sbm.state->disp12MaxDiff

But in Android/Java, I can only set PRESET, SADWindowSize, and nDisparities using the constructor. Is there any way to set the other parameters? I also tried StereoSGBM once (its parameters can be set), but during USB debugging the application took too long to compute the disparity map and hung, so I am trying to stick to StereoBM. |

|

2014-12-09 13:47:26 -0600

| marked best answer | How to access pixel values of CV_32F/CV_64F Mat? I was working on homography, and whenever I try to check the values of the H matrix (type CV_64F) using H.at<float>(i, j), I get random numbers (sometimes garbage values). I want to access the pixel values of a float matrix. Is there any way to do it?

Mat A = Mat::eye(3, 3, CV_64F);

float B;

for(int i=0; i<A.rows; i++)

{

for(int j=0; j<A.cols; j++)

{

printf("%f\n", A.at<float>(i, j));

}

}

imshow("identity", A);

waitKey(0);

This shows a correct image of an identity matrix, but when I try to access the pixel values, I get

0.000000

1.875000

0.000000

0.000000

0.000000

0.000000

0.000000

0.000000

0.000000

Why is this so? |
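The printed values are consistent with 8-byte doubles being reinterpreted as 4-byte floats: at<float>(i, j) reads 4 bytes at offset 4*j in row i, while a CV_64F matrix stores 8 bytes per element, so at<double>(i, j) is the accessor that matches the storage. A small sketch of that reinterpretation using only Python's struct module, assuming little-endian storage; the helper name is hypothetical:

```python
import struct


def doubles_read_as_floats(values):
    """Pack values as little-endian doubles, then reinterpret the bytes as floats."""
    raw = struct.pack("<%dd" % len(values), *values)
    return list(struct.unpack("<%df" % (len(raw) // 4), raw))


# Rows of a 3x3 CV_64F identity matrix; a float accessor sees the first three slots.
for row in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    print(doubles_read_as_floats(row)[:3])
# prints [0.0, 1.875, 0.0] then [0.0, 0.0, 0.0] twice
```

The 1.875 is the upper half of the double 1.0 (bit pattern 0x3FF00000) read as a float, which reproduces the nine values listed in the question.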

|

2014-12-09 13:43:38 -0600

| marked best answer | VideoCapture is not working in OpenCV 2.4.2 I recently installed OpenCV 2.4.2 in Ubuntu 12.04.

cap = VideoCapture(0)

is working, but I can't grab frames from a video file.

cap = VideoCapture("input.avi")

img = cap.read()

gives me a NumPy array with all zero elements. I have also installed ffmpeg 0.11, the latest snapshot of x264, and v4l-0.8.8 (all are the latest stable versions).

cmake -D WITH_QT=ON -D WITH_FFMPEG=ON -D WITH_OPENGL=ON -D WITH_TBB=ON -D BUILD_EXAMPLES=OFF WITH_V4L=ON ..

make

sudo make install

When I do cmake, I get this

-- Detected version of GNU GCC: 46 (406)

-- Found OpenEXR: /usr/lib/libIlmImf.so

-- Looking for linux/videodev.h

-- Looking for linux/videodev.h - not found

-- Looking for linux/videodev2.h

-- Looking for linux/videodev2.h - found

-- Looking for libavformat/avformat.h

-- Looking for libavformat/avformat.h - found

-- Looking for ffmpeg/avformat.h

-- Looking for ffmpeg/avformat.h - not found

-- checking for module 'tbb'

-- package 'tbb' not found

And

-- Video I/O:

-- DC1394 1.x: NO

-- DC1394 2.x: YES (ver 2.2.0)

-- FFMPEG: YES

-- codec: YES (ver 54.23.100)

-- format: YES (ver 54.6.100)

-- util: YES (ver 51.54.100)

-- swscale: YES (ver 2.1.100)

-- gentoo-style: YES

-- GStreamer:

-- base: YES (ver 0.10.36)

-- app: YES (ver 0.10.36)

-- video: YES (ver 0.10.36)

-- OpenNI: NO

-- OpenNI PrimeSensor Modules: NO

-- PvAPI: NO

-- UniCap: NO

-- UniCap ucil: NO

-- V4L/V4L2: Using libv4l (ver 0.8.8)

-- XIMEA: NO

-- Xine: NO

What's the problem here? |

|

2014-12-09 13:43:05 -0600

| marked best answer | Assertion Error in Kalman Filter python OpenCV 2.4.0 When I try to predict with the Kalman filter,

k = cv2.KalmanFilter(2,1,0)

c = np.array([(0.0),(0.0)]).reshape(2,1)

a = k.predict(c)

I am getting the following assertion error.

error:

/home/jay/Downloads/OpenCV-2.4.0/modules/core/src/matmul.cpp:711:

error: (-215) type == B.type() && (type == CV_32FC1 || type ==

CV_64FC1 || type == CV_32FC2 || type == CV_64FC2) in function gemm

So, I added the following line of code in OpenCV-2.4.0/modules/core/src/matmul.cpp and rebuilt it from source.

printf("%d %d\n",type,B.type());

Now it gives me this output:

5 5

0 6

Am I doing something wrong here? What's the problem? |
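The numbers printed by the added printf are OpenCV matrix type codes, one (type, B.type()) pair per gemm call. A type code packs the depth in its low three bits and the channel count minus one above them, so the pairs can be decoded by hand. A minimal decoding sketch; `describe_type` is a hypothetical helper, not an OpenCV function:

```python
# Depth codes as defined in OpenCV's core headers.
DEPTH_NAMES = {0: "CV_8U", 1: "CV_8S", 2: "CV_16U", 3: "CV_16S",
               4: "CV_32S", 5: "CV_32F", 6: "CV_64F"}


def describe_type(code):
    """Decode an OpenCV type code into a name such as CV_32FC1."""
    depth = code & 7                 # low three bits hold the depth
    channels = (code >> 3) + 1       # remaining bits hold channels - 1
    return "%sC%d" % (DEPTH_NAMES.get(depth, "depth%d" % depth), channels)


for pair in [(5, 5), (0, 6)]:
    print([describe_type(t) for t in pair])
# prints ['CV_32FC1', 'CV_32FC1'] then ['CV_8UC1', 'CV_64FC1']
```

The first pair satisfies the assertion; the second mixes CV_8UC1 with CV_64FC1, which the assertion rejects. One plausible reading, stated here as an assumption rather than something the source confirms, is that 0 is the type of an empty control matrix (the filter was created with zero control parameters) and 6 is the float64 NumPy array passed to predict.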

|

2014-11-17 09:19:01 -0600

| received badge | ● Popular Question

(source)

|

|

2014-05-06 06:34:17 -0600

| received badge | ● Nice Answer

(source)

|

|

2014-04-11 05:52:00 -0600

| asked a question | Static Initialization of Android Application makes it a lot slower I have made a simple application using Android OpenCV. It also has a JNI part. Everything is working fine. Now I wanted to initialize it statically, so I followed the instructions and it seemed to work.

PROBLEM: The application runs really slowly. I'm capturing images from the camera using CameraBridgeViewBase. I process each one in C++ through JNI and show the processed image in an adjacent view (ImageView). When I was using OpenCV Manager to link the application, it was running at 10 FPS, but when I linked it statically, it slowed down to 2 FPS. I switched from the Java camera to the native camera, which was even slower (the native camera seems slower even when linked through OpenCV Manager). I'm testing this on a Nexus 4 with Android 4.4 and using OpenCV 2.4.8.

Android.mk:

LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

OPENCV_CAMERA_MODULES:=on

OPENCV_INSTALL_MODULES:=on

include /home/jay/Android_OpenCV/OpenCV-2.4.8-android-sdk/sdk/native/jni/OpenCV.mk

LOCAL_MODULE := light_exposure

LOCAL_SRC_FILES := jni_part.cpp

LOCAL_LDLIBS += -llog -ldl

include $(BUILD_SHARED_LIBRARY)

Application.mk:

APP_STL := gnustl_static

APP_CPPFLAGS := -frtti -fexceptions

APP_ABI := armeabi-v7a

APP_PLATFORM := android-9

Java Code:

public class ExposureActivity extends Activity implements CvCameraViewListener2 {

private static final String TAG = "exposure::ExposureActivity";

private CameraBridgeViewBase mOpenCvCameraView;

private int tFrames = 0; // frame counter, incremented in onCameraFrame

// other code

static {

if(!OpenCVLoader.initDebug())

{

Log.i(TAG, "Static linking failed");

}

else

{

System.loadLibrary("opencv_java");

System.loadLibrary("light_exposure"); // jni module

Log.i(TAG, "static linking success");

}

}

// other code

@Override

public void onResume()

{

super.onResume();

//OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_2_4_8, this, mLoaderCallback);

}

// When I press start button in the application

public void onClickStartButton(View view){

mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.opencv_part_java_surface_view);

mOpenCvCameraView.setVisibility(SurfaceView.VISIBLE);

mOpenCvCameraView.setCvCameraViewListener(this);

mOpenCvCameraView.enableView();

}

public Mat onCameraFrame(CvCameraViewFrame inputFrame) {

tFrames ++;

Log.i(TAG, "frame number " + String.valueOf(tFrames));

mRgba = inputFrame.rgba();

processForExposure(mRgba.getNativeObjAddr(), mDis.getNativeObjAddr()); // native function

int w = mDis.width();

int h = mDis.height();

Bitmap.Config conf = Bitmap.Config.ARGB_8888;

Bitmap retBmp = Bitmap.createBitmap(w, h, conf);

Utils.matToBitmap(mDis, retBmp);

Message msg = new Message();

msg.obj = retBmp;

mHandler.sendMessage(msg); // to set the bitmap image in ImageView

return mRgba;

}

}

Am I missing something? Why is the performance so slow? Also, java_camera seems to drop frames.

|

|

2014-04-08 01:48:37 -0600

| asked a question | Multithreading for Image Processing I do not have much experience with multithreading. I just want to create 4 threads, divide the image into 4 parts, process each part in a different thread, and join the parts back together. I have written the following code.

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/core/core.hpp>

#include <stdio.h>

#include <cstdlib>

#include <pthread.h>

#include <unistd.h>

using namespace std;

struct thread_data {

int thread_id;

cv::Mat img;

cv::Mat retVal;

double alpha;

};

void *processFrame(void *threadarg)

{

//printf("processFrame started\n");

struct thread_data *my_data;

my_data = (struct thread_data *)threadarg;

printf("accumulateWeighted starting %d\n", my_data->thread_id);

printf("%d %d %d %d %d\n",my_data->thread_id, my_data->img.rows, my_data->img.cols, my_data->retVal.rows, my_data->retVal.cols);

//cv::accumulateWeighted(my_data->img, my_data->retVal, my_data->alpha);

printf("accumulateWeighted done %d\n", my_data->thread_id);

pthread_exit(NULL);

}

int main(int argc, char* argv[])

{

int NUM_THREADS = 4;

pthread_t threads[NUM_THREADS];

struct thread_data td[NUM_THREADS];

int rc;

cv::Mat img, acc;

double alpha = 0.1;

std::vector<cv::Mat> cutImg, accImg;

img = cv::imread(argv[1]);

acc = cv::Mat::zeros(img.size(), CV_32FC3);

cutImg.push_back(cv::Mat(img, cv::Range(0, img.rows/2), cv::Range(0, img.cols/2)));

cutImg.push_back(cv::Mat(img, cv::Range(0, img.rows/2), cv::Range(img.cols/2, img.cols)));

cutImg.push_back(cv::Mat(img, cv::Range(img.rows/2, img.rows), cv::Range(0, img.cols/2)));

cutImg.push_back(cv::Mat(img, cv::Range(img.rows/2, img.rows), cv::Range(img.cols/2, img.cols)));

accImg.push_back(cv::Mat(acc, cv::Range(0, img.rows/2), cv::Range(0, img.cols/2)));

accImg.push_back(cv::Mat(acc, cv::Range(0, img.rows/2), cv::Range(img.cols/2, img.cols)));

accImg.push_back(cv::Mat(acc, cv::Range(img.rows/2, img.rows), cv::Range(0, img.cols/2)));

accImg.push_back(cv::Mat(acc, cv::Range(img.rows/2, img.rows), cv::Range(img.cols/2, img.cols)));

for(int i=0; i<NUM_THREADS; i++)

{

td[i].thread_id = i;

td[i].img = cutImg[i];

td[i].retVal = accImg[i];

td[i].alpha = alpha;

rc = pthread_create(&threads[i], NULL, processFrame, (void*)&td[i]);

if(rc) {

printf("Error: Unable to create thread\n");

exit(-1);

}

}

pthread_exit(NULL);

return(1);

}

So when I run the program, most of the time it shows that the images I passed have 0 rows.

accumulateWeighted starting 1

1 270 480 270 480

accumulateWeighted starting 3

3 0 480 0 480

accumulateWeighted done 3

accumulateWeighted starting 2

2 0 480 0 480

accumulateWeighted done 2

accumulateWeighted starting 0

0 270 480 270 480

accumulateWeighted done 0

accumulateWeighted done 1

What am I doing wrong here? I have tried looking for examples but couldn't find any. Is there any other way that this can be done? |
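In the code above, main calls pthread_exit right after creating the workers and never calls pthread_join, while the thread_data array and the Mat vectors are locals of main. A likely cause of the 0-row output (an assumption, since the accepted explanation is not part of this log) is that those locals are torn down while the workers are still reading them. The usual pattern is to start all workers and then join each one before the shared data goes out of scope. The sketch below shows that split, process, join structure in Python, with nested lists standing in for cv::Mat; all function names are hypothetical, and pure-Python threads illustrate the structure only, not a speedup.

```python
import threading


def split_quadrants(rows, cols):
    """Return (row_range, col_range) for the four quadrants of a rows x cols image."""
    r, c = rows // 2, cols // 2
    return [(range(0, r), range(0, c)), (range(0, r), range(c, cols)),
            (range(r, rows), range(0, c)), (range(r, rows), range(c, cols))]


def accumulate_quadrant(img, acc, alpha, row_range, col_range):
    """Running average on one quadrant: acc = (1 - alpha) * acc + alpha * img."""
    for i in row_range:
        for j in col_range:
            acc[i][j] = (1.0 - alpha) * acc[i][j] + alpha * img[i][j]


def accumulate_parallel(img, acc, alpha):
    """Process the four quadrants in separate threads and wait for all of them."""
    rows, cols = len(img), len(img[0])
    threads = [threading.Thread(target=accumulate_quadrant,
                                args=(img, acc, alpha, rr, cr))
               for rr, cr in split_quadrants(rows, cols)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # the counterpart of pthread_join: acc is complete after this
    return acc
```

Each quadrant writes to a disjoint region of acc, so no locking is needed; the join is what guarantees the result is complete before it is used.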

|

2014-02-28 09:14:42 -0600

| received badge | ● Popular Question

(source)

|

|

2014-02-03 03:54:00 -0600

| answered a question | how can convert rgb to cie l*a*b with cpp Use cvtColor with CV_RGB2Lab. |

|

2013-09-14 00:19:48 -0600

| asked a question | How to find errors in stereo calibration and rectification? I'm working on stereo calibration. I have taken 20 images of a chessboard in different orientations, and corners are found in each of them. Everything seems to work fine. I just want to check whether there could be any error in the calibration or rectification. How do I look for errors? Epipolar lines or any such clues? |
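One common check along the lines the question suggests is the epipolar error: for a pair of corresponding points, the point in the second image should lie on the epipolar line F * x1 induced by the point in the first image. The sketch below computes that point-to-line distance in pixels, assuming the convention x2^T F x1 = 0 and a fundamental matrix F given as a 3x3 nested list. The function names are hypothetical, and this is an illustration rather than the method of any answer in this log.

```python
import math


def epipolar_distance(F, p1, p2):
    """Distance in pixels from p2 to the epipolar line F * (p1, 1) in image 2."""
    x1 = (p1[0], p1[1], 1.0)
    # Line coefficients (a, b, c) with a*x + b*y + c = 0.
    a, b, c = (sum(F[r][k] * x1[k] for k in range(3)) for r in range(3))
    return abs(a * p2[0] + b * p2[1] + c) / math.hypot(a, b)


def mean_epipolar_error(F, pts1, pts2):
    """Average epipolar distance over all corresponding point pairs."""
    return sum(epipolar_distance(F, p, q) for p, q in zip(pts1, pts2)) / len(pts1)
```

For a perfectly rectified pair the epipolar lines are horizontal, so the distance reduces to the difference in row coordinates between the two matched corners.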

|

2013-08-24 12:05:40 -0600

| commented question | Detect Skin color of Palm using HSV Use a simple regression-based machine learning algorithm to learn weights for the H, S, and V values. That'd work really well. |

|

2013-07-24 02:51:21 -0600

| received badge | ● Critic

(source)

|

|

2013-07-11 02:02:09 -0600

| commented answer | cv2.imread a url |

|

2013-07-10 04:11:34 -0600

| commented answer | cv2.imread a url SimpleCV uses urllib2.Request to fetch the data, and uses StringIO and PIL to convert image. |

|

2013-07-05 15:55:30 -0600

| asked a question | StereoSGBM Parameters based on Camera parameters? I have two identical cameras and I am taking two images and trying to find the disparity map. I am getting a good disparity map, but I'd like to improve it. I am using the StereoSGBM method to get the disparity map. I was wondering if it's possible to set the StereoSGBM parameters based on the camera calibration and its parameters. If yes, could someone point me to some reading material? |
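One relationship that ties calibration to the matcher settings is the rectified-stereo formula d = f * B / Z, where f is the focal length in pixels, B the baseline, and Z the depth in the same unit as B. Given the nearest and farthest depths of interest, it bounds the disparity search range. The sketch below is a rule of thumb under those assumptions, not an official recipe; the function name and arguments are hypothetical, and the rounding reflects StereoSGBM's requirement that the number of disparities be divisible by 16.

```python
import math


def disparity_range(focal_px, baseline, z_near, z_far, block=16):
    """Estimate (minDisparity, numDisparities) from calibration and a depth range."""
    d_max = focal_px * baseline / z_near   # closest object, largest disparity
    d_min = focal_px * baseline / z_far    # farthest object, smallest disparity
    min_disparity = int(math.floor(d_min))
    span = d_max - min_disparity
    # Round the search span up to the next multiple of block.
    num_disparities = int(math.ceil(span / block)) * block
    return min_disparity, max(num_disparities, block)


print(disparity_range(800.0, 0.125, 0.5, 10.0))  # prints (10, 192)
```

Here the focal length would come from the rectified camera matrix and the baseline from the length of the translation vector between the two cameras.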

|

2013-07-04 00:00:00 -0600

| commented answer | Python Legacy no longer supported? Thanks a lot for the info. |

|

2013-07-01 15:21:52 -0600

| received badge | ● Organizer

(source)

|

|

2013-07-01 15:13:18 -0600

| asked a question | Python Legacy no longer supported? I just built OpenCV from the GitHub source and I'm getting an ImportError for cv2.cv. I looked in the source code and couldn't find the cv.py file. So has it been deprecated? Is Python legacy code no longer supported? |

|

2013-06-18 09:08:07 -0600

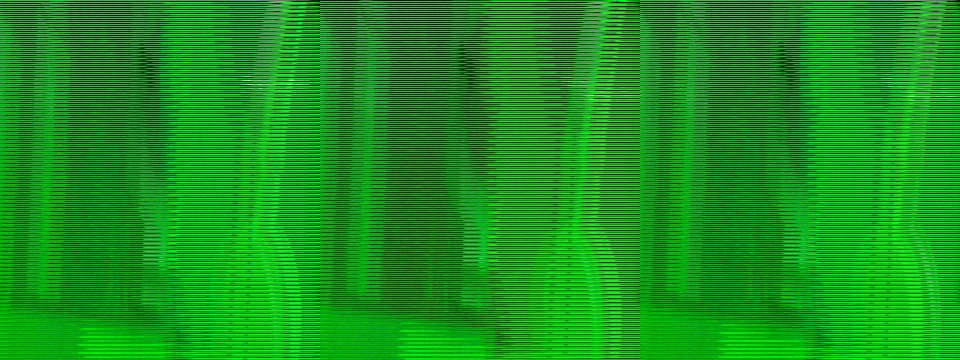

| asked a question | Unable to save proper Image grabbed from Camera of Evo V 4G I'm trying to get a 3D image using the Evo V 4G. It has 2 cameras, so I am using HTC Sense to access both cameras. It is working, and I can see the camera preview (in 3D) as well. I'm trying to save the byte data in a Mat, but upon saving, I'm getting the following output.

img = new Mat(height, width, CvType.CV_8UC1);

gray = new Mat(height, width, CvType.CV_8UC3); // 3 channel image named 'gray'.

img.put(0, 0, data);

Imgproc.cvtColor(img, gray, Imgproc.COLOR_YUV420sp2RGB);

File path = Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES);

String filename = "evodispimg2.jpg";

File file = new File(path, filename);

Boolean bool = null;

filename = file.toString();

bool = Highgui.imwrite(filename, decodeimg);

height and width are taken from the surface size. I'm getting the following image.

And when I try this,

decodeimg = new Mat(height, width, CvType.CV_8UC3);

decodeimg = Highgui.imdecode(gray, 1);

and try to save the image, I'm getting a NULL image. imdecode is returning a Mat of size 0, 0.

What could be the problem? How can I properly convert image between colorspaces? |

|

2013-06-17 02:26:14 -0600

| marked best answer | How does drawMatches work? (with respect to DMatch) Hey, I have been working on a tracker based on the SIFT descriptor. I saw this example of flannMatcher: http://docs.opencv.org/2.4.2/doc/tutorials/features2d/feature_flann_matcher/feature_flann_matcher.html I tried to implement it and it worked really well. Now, my question is how does drawMatches work? As it is mentioned here, http://docs.opencv.org/modules/features2d/doc/drawing_function_of_keypoints_and_matches.html?highlight=drawmatch#void%20drawMatches%28const%20Mat&%20img1,%20const%20vector%3CKeyPoint%3E&%20keypoints1,%20const%20Mat&%20img2,%20const%20vector%3CKeyPoint%3E&%20keypoints2,%20const%20vector%3CDMatch%3E&%20matches1to2,%20Mat&%20outImg,%20const%20Scalar&%20matchColor,%20const%20Scalar&%20singlePointColor,%20const%20vector%3Cchar%3E&%20matchesMask,%20int%20flags%29 matches1to2 is a vector of DMatch and it matches from the first image to the second one, which means that keypoints1[i] has a corresponding point in keypoints2[matches[i]]. Now, how does this work? DMatch has

float distance;

int queryIdx; // query descriptor index

int trainIdx; // train descriptor index

int imgIdx; // train image index

So, using these parameters, how can I (manually) check if keypoints1[i] has a corresponding point in keypoints2[matches[i]] ? |
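The indices stored in each DMatch carry the correspondence: when the matcher is called with the first image's descriptors as the query set and the second image's as the train set, a match m pairs keypoints1[m.queryIdx] with keypoints2[m.trainIdx]. The position of m inside the matches vector is not a keypoint index. A minimal sketch of pulling out the matched pairs, using a namedtuple as a hypothetical stand-in for cv::DMatch and plain tuples for keypoint coordinates:

```python
from collections import namedtuple

# Stand-in for cv::DMatch with the same field names (illustration only).
DMatch = namedtuple("DMatch", "queryIdx trainIdx imgIdx distance")


def matched_point_pairs(matches, keypoints1, keypoints2):
    """Return (point_in_image1, point_in_image2) for every match."""
    return [(keypoints1[m.queryIdx], keypoints2[m.trainIdx]) for m in matches]


kp1 = [(0, 0), (1, 1), (2, 2)]
kp2 = [(5, 5), (6, 6)]
matches = [DMatch(2, 0, 0, 0.5), DMatch(0, 1, 0, 0.7)]
print(matched_point_pairs(matches, kp1, kp2))
# prints [((2, 2), (5, 5)), ((0, 0), (6, 6))]
```

Sorting or thresholding the matches by their distance field before extracting the pairs is a common way to keep only the better correspondences.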

|

2013-06-15 15:52:19 -0600

| edited answer | OpenCV libraries with c++ in ubuntu |

|

2013-06-02 05:31:56 -0600

| commented question | How to increase the quality of Disparity Map? |

|

2013-05-31 16:50:42 -0600

| received badge | ● Nice Question

(source)

|

|

2013-05-31 09:21:38 -0600

| commented question | OpenCV 2.4.5 can't find OpenNI and PrimeSensor Module can someone close this question? |

|

2013-05-31 09:20:45 -0600

| commented question | OpenCV 2.4.5 can't find OpenNI and PrimeSensor Module It was a silly mistake. I had forgotten to add -D before WITH_OPENNI=ON |

|

2013-05-29 10:02:30 -0600

| asked a question | OpenCV 2.4.5 can't find OpenNI and PrimeSensor Module I have OpenCV 2.4.5. I just got a Kinect and installed OpenNI and the PrimeSensor module on my Ubuntu 12.04 64-bit machine. I tried the example shipped with OpenNI and it was working: I could see the depth image and the RGB image. I tried rebuilding OpenCV with OpenNI support by using the WITH_OPENNI=ON flag, but it still shows

-- OpenNI: NO

-- OpenNI PrimeSensor Modules: NO

I checked /usr/lib, /usr/include/ni, and /usr/bin and found all the files there (I guess). I found the following relevant files in /usr/lib:

libOpenNI.jni.so

libOpenNI.so

libXnCore.so

libXnDDK.so

libXnDeviceFile.so

libXnDeviceSensorV2KM.so

libXnFormats.so

libXRes.so.1

libXRes.so.1.0.0

libXvMC.so.1

libXvMC.so.1.0.0

libXvMCW.so.1

libXvMCW.so.1.0.0

libXxf86dga.so.1

libXxf86dga.so.1.0.0

and the following files in /usr/include/ni:

IXnNodeAllocator.h

Linux-Arm

Linux-x86

MacOSX

XnAlgorithms.h

XnArray.h

XnBaseNode.h

XnBitSet.h

XnCallback.h

XnCodecIDs.h

XnContext.h

XnCppWrapper.h

XnCyclicQueueT.h

XnCyclicStackT.h

XnDataTypes.h

XnDerivedCast.h

XnDump.h

XnDumpWriters.h

XnEnumerationErrors.h

XnEvent.h

XnEventT.h

XnFPSCalculator.h

XnGeneralBuffer.h

XnHash.h

XnHashT.h

XnInternalDefs.h

XnLicensing.h

XnList.h

XnListT.h

XnLog.h

XnLogTypes.h

XnLogWriterBase.h

XnMacros.h

XnModuleCFunctions.h

XnModuleCppInterface.h

XnModuleCppRegistratration.h

XnModuleInterface.h

XnNodeAllocator.h

XnNode.h

XnOpenNI.h

XnOSCpp.h

XnOS.h

XnOSMemory.h

XnOSStrings.h

XnPlatform.h

XnPrdNode.h

XnPrdNodeInfo.h

XnPrdNodeInfoList.h

XnProfiling.h

XnPropNames.h

XnQueries.h

XnQueue.h

XnQueueT.h

XnScheduler.h

XnStack.h

XnStackT.h

XnStatusCodes.h

XnStatus.h

XnStatusRegister.h

XnStringsHash.h

XnStringsHashT.h

XnThreadSafeQueue.h

XnTypes.h

XnUSBDevice.h

XnUSB.h

XnUtils.h

XnVersion.h

I couldn't find any such relevant files in /usr/bin. What files should be there? Is there any solution to this problem? |

|

2013-04-26 13:20:21 -0600

| asked a question | How to manage multiple versions of Python OpenCV? I'm using Ubuntu and OpenCV 2.4.4. I am running a program written with the Python bindings of OpenCV. Now I want to test this program against the Python bindings of both OpenCV 2.4.4 and OpenCV 2.3.1. Is there a way to do this?