I'm implementing a 3D laser scanner based on a rotating table with ArUco markers on it, which I use for camera pose estimation.

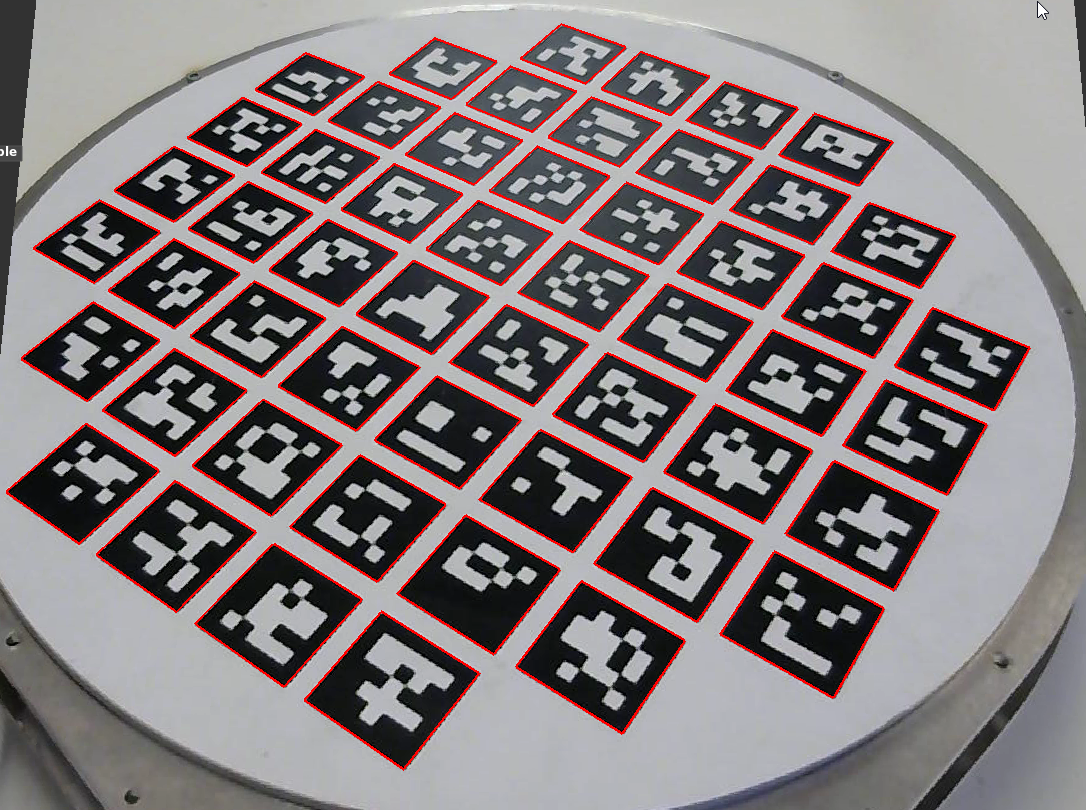

Here is a sample captured camera frame, with the ArUco markers detected:

The basic pose estimation flow (for one frame) can be summarized as follows:

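```python
import cv2
import numpy as np

# readCalibrationParameters, readBoardConfiguration and captureCameraFrame are
# my own helpers (not shown). Sketch assumes the OpenCV >= 4.7 Python API
# (cv2.aruco.Board, cv2.aruco.ArucoDetector).
camera_matrix, distortion_coefficients = readCalibrationParameters()
board = readBoardConfiguration()
frame = captureCameraFrame()

# Detect markers and pair their image corners with the board's 3D corners.
detector = cv2.aruco.ArucoDetector(board.getDictionary())
corners, ids, _rejected = detector.detectMarkers(frame)
object_points, image_points = board.matchImagePoints(corners, ids)

# Board (world) -> camera pose.
ok, rvec, tvec = cv2.solvePnP(object_points, image_points,
                              camera_matrix, distortion_coefficients)

# Compose the 4x4 world_to_camera matrix from rvec/tvec.
R, _ = cv2.Rodrigues(rvec)
world_to_camera = np.eye(4)
world_to_camera[:3, :3] = R
world_to_camera[:3, 3] = tvec.ravel()

# Invert it and map the camera origin into board coordinates.
camera_to_world = np.linalg.inv(world_to_camera)
camera_position = camera_to_world @ np.array([0.0, 0.0, 0.0, 1.0])
```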
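The last steps (compose, invert, multiply by the origin) can be collapsed: since world_to_camera maps a board point X to R X + t, the camera centre is the point that maps to the camera origin, C = -Rᵀ t. A stdlib-only sketch, assuming `rvec` and `tvec` are plain 3-element sequences (e.g. the flattened solvePnP output); the `rodrigues` helper is written out only to keep the example self-contained and follows the same axis-angle convention as `cv2.Rodrigues`:

```python
import math

def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix (Rodrigues' formula)."""
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def camera_position(rvec, tvec):
    """Camera centre in board coordinates: C = -R^T t."""
    R = rodrigues(rvec)
    # (R^T t)_i = sum_j R[j][i] * t[j]
    return [-sum(R[j][i] * tvec[j] for j in range(3)) for i in range(3)]
```

For example, a camera looking straight down at the board from 500 units (rvec = (π, 0, 0), tvec = (0, 0, 500)) gives C = (0, 0, 500). This is the same point that camera_to_world * [0,0,0,1] produces, without a general 4×4 inverse.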

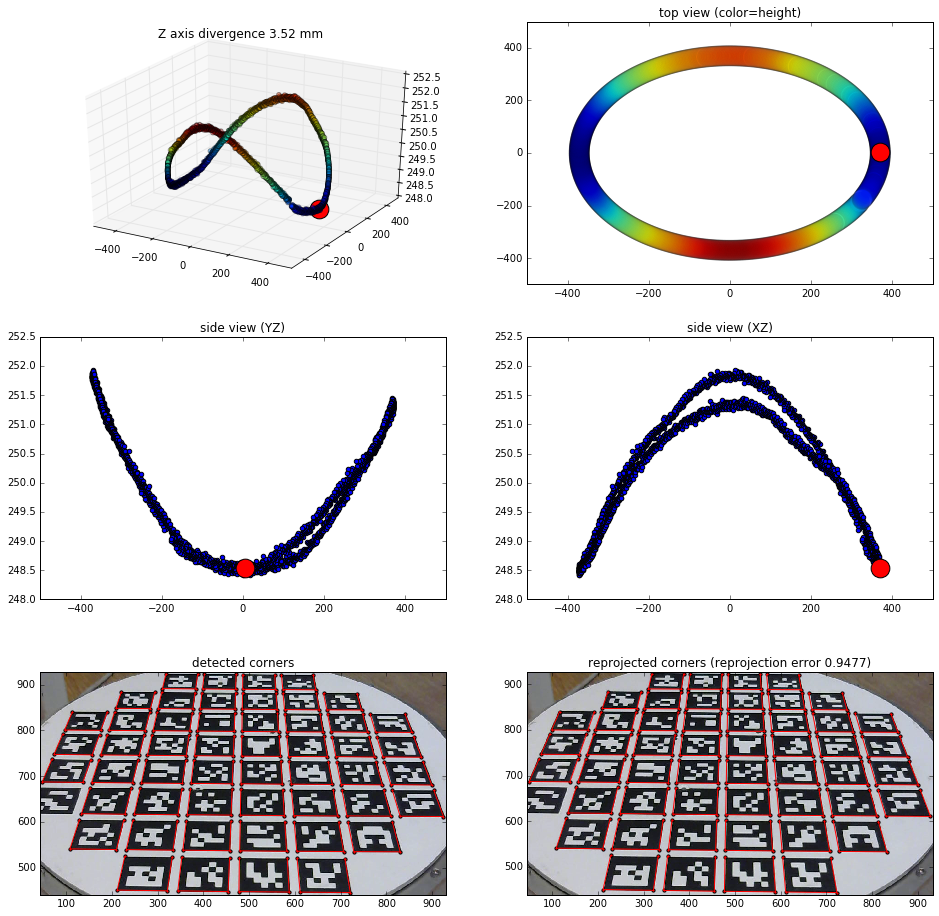

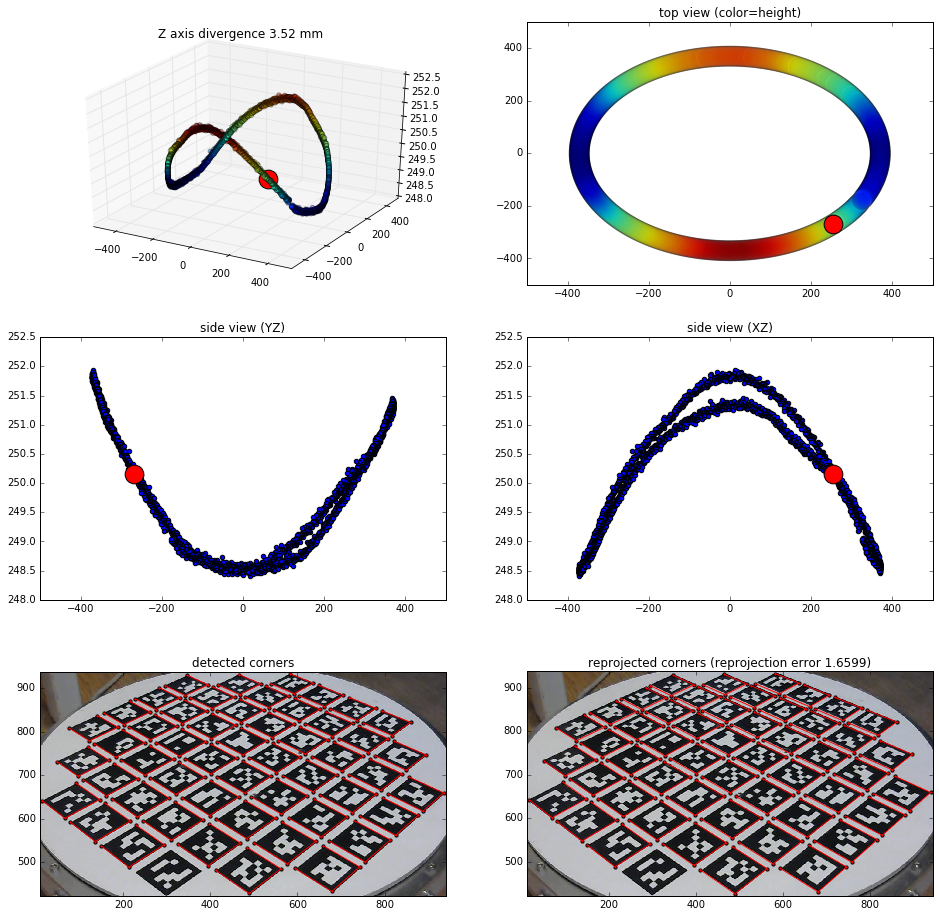

As the scanner's turntable is flat and rotates about an axis perpendicular to its surface, the computed Z position (height) of the camera should stay the same as the turntable rotates.
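Stated as a small model: turning the turntable by θ about an axis perpendicular to the board is equivalent, in board coordinates, to the camera orbiting by -θ around that axis, which moves the camera in X and Y but cannot change its Z. A minimal sketch with hypothetical helper names, assuming for simplicity that the rotation axis passes through the board origin (any other axis perpendicular to the board also leaves Z unchanged):

```python
import math

def rotate_about_z(point, angle_deg):
    """Rotate a 3D point about the Z axis; Z is passed through unchanged."""
    a = math.radians(angle_deg)
    x, y, z = point
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)

def expected_camera_positions(camera_pos, turntable_angles_deg):
    """Ideal camera centre in board coordinates for each turntable angle.

    Turning the board by +angle about its normal is equivalent to the
    camera orbiting the board by -angle, so only X and Y change.
    """
    return [rotate_about_z(camera_pos, -a) for a in turntable_angles_deg]

positions = expected_camera_positions((300.0, 0.0, 500.0), range(0, 360, 15))
```

Under this model every frame reports the same Z, and the camera traces a circle of constant radius in X/Y.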

However, this is not the case.

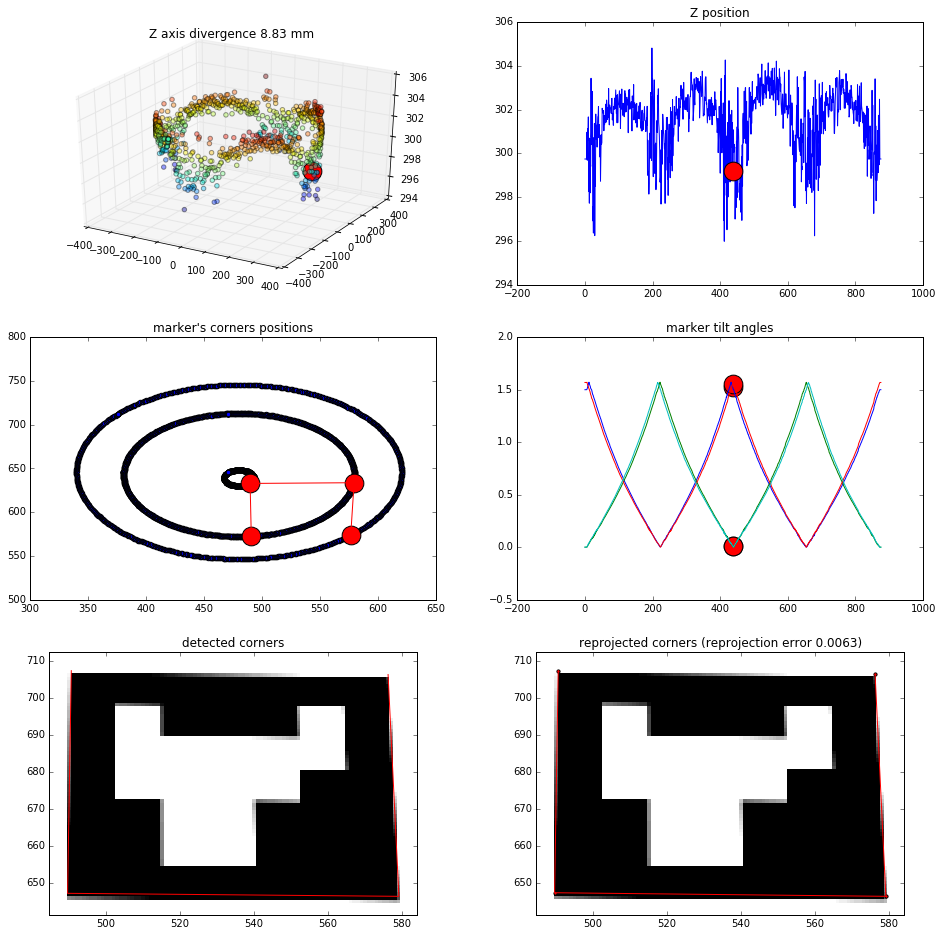

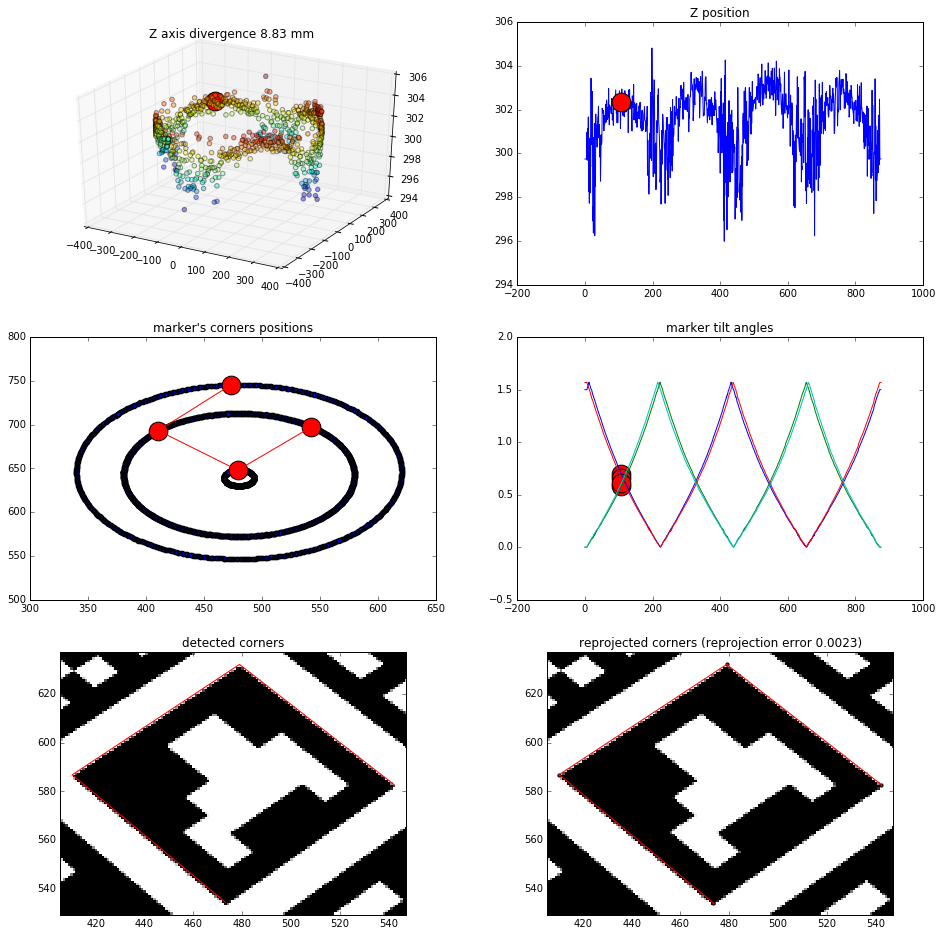

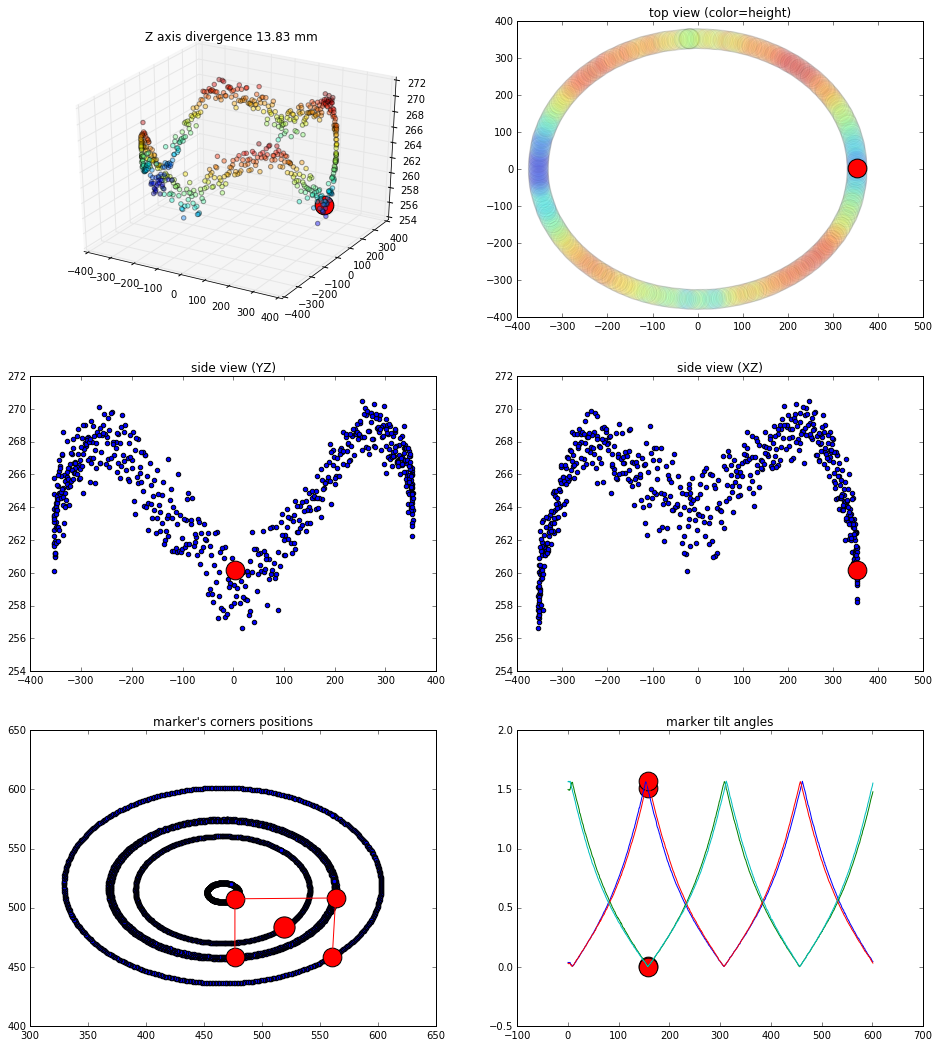

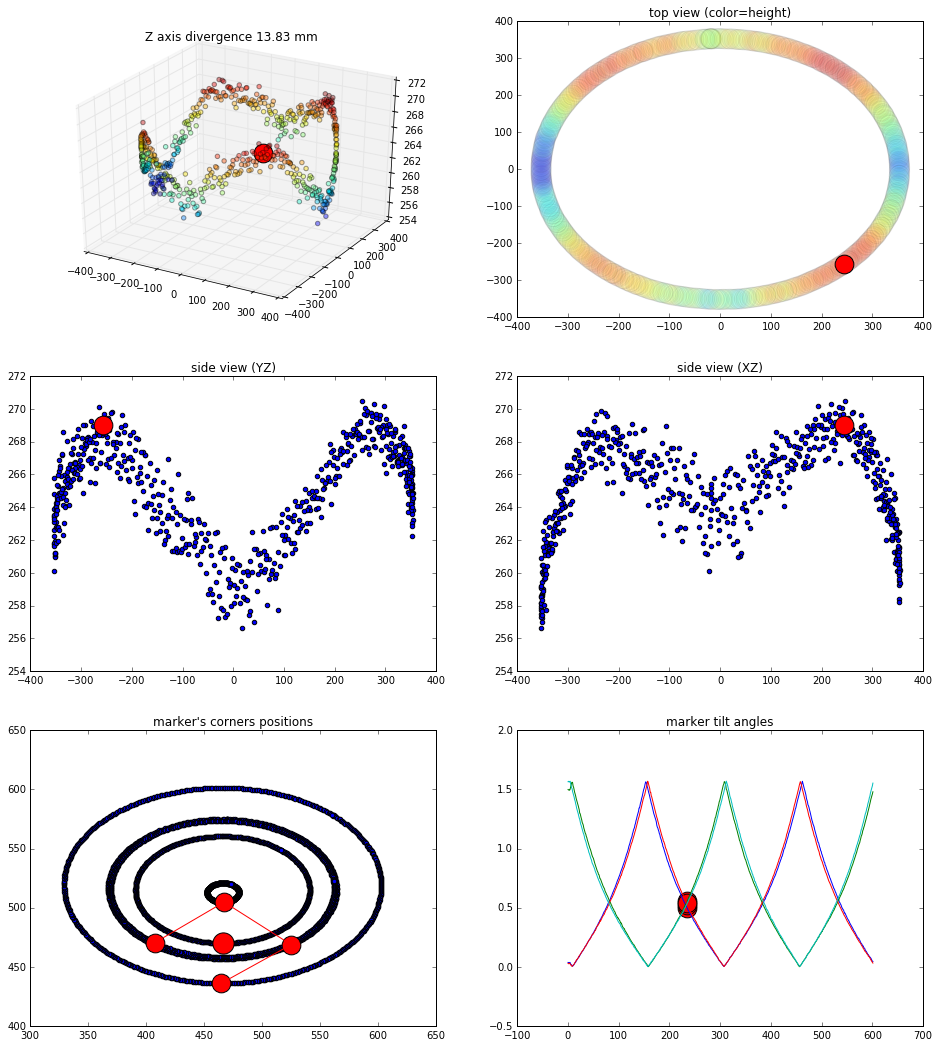

There is a clear relationship between the apparent rotation angle of the markers and the computed Z position of the camera, with extremes when a marker is seen straight on and when it is rotated by 45 degrees.

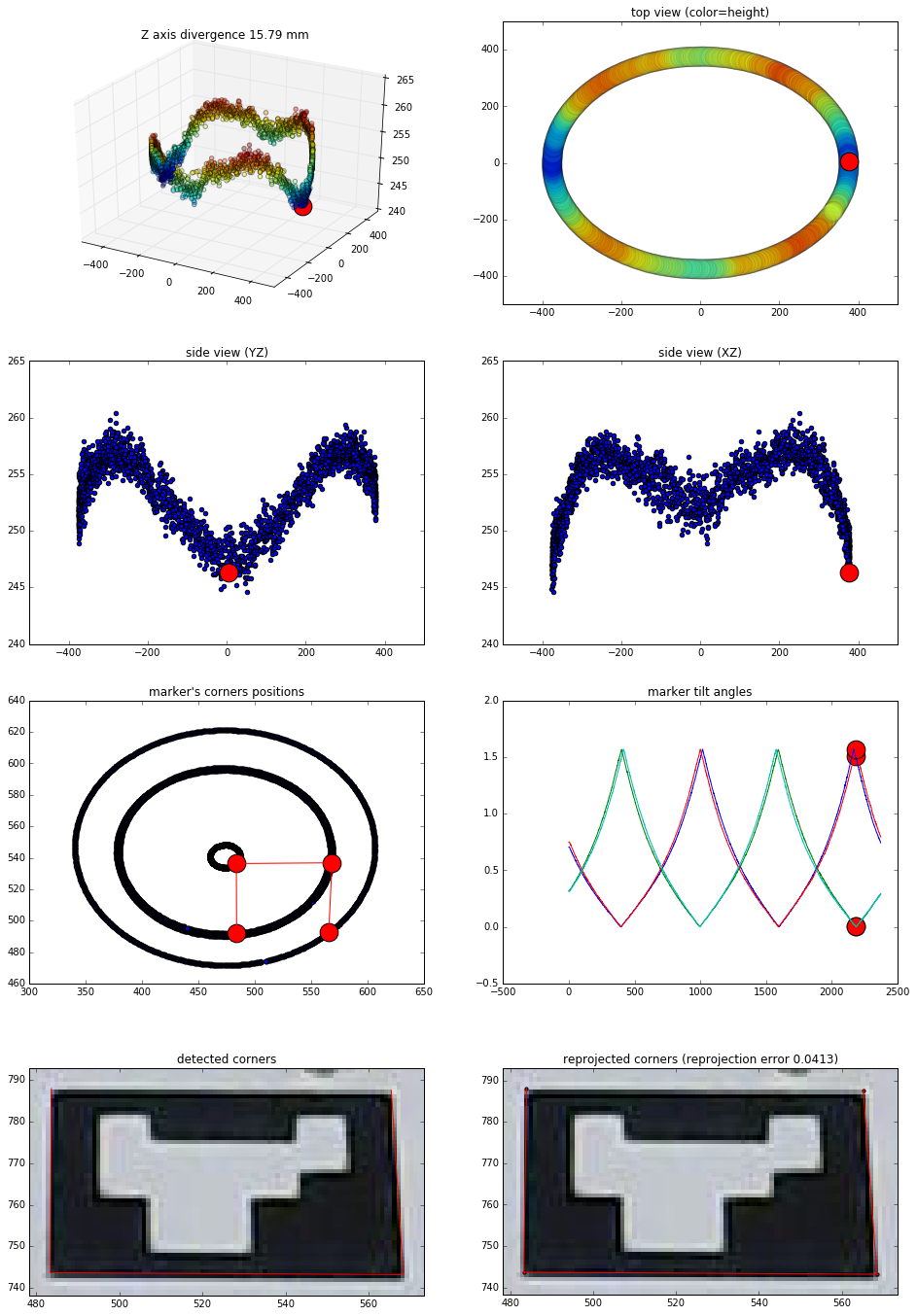

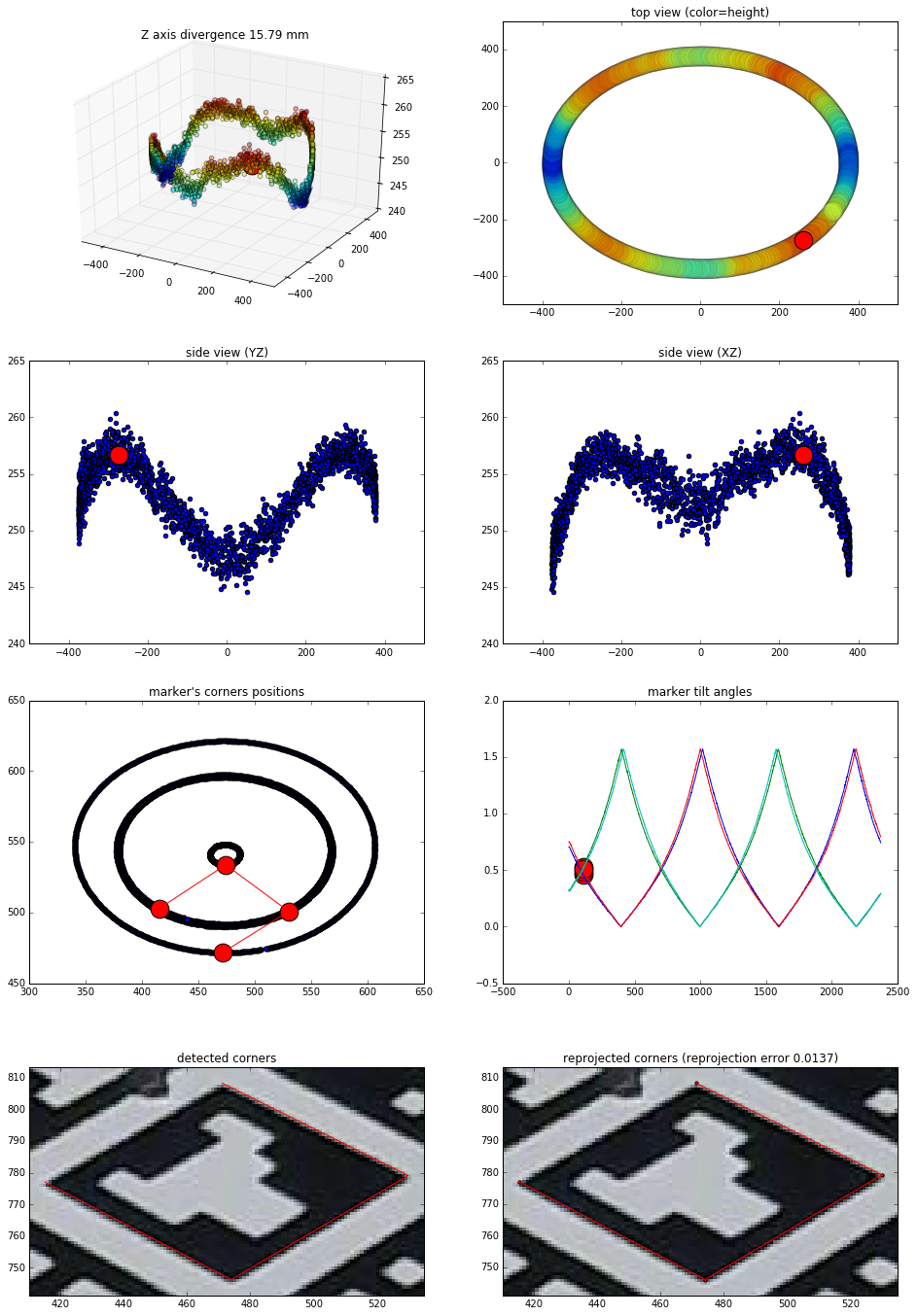

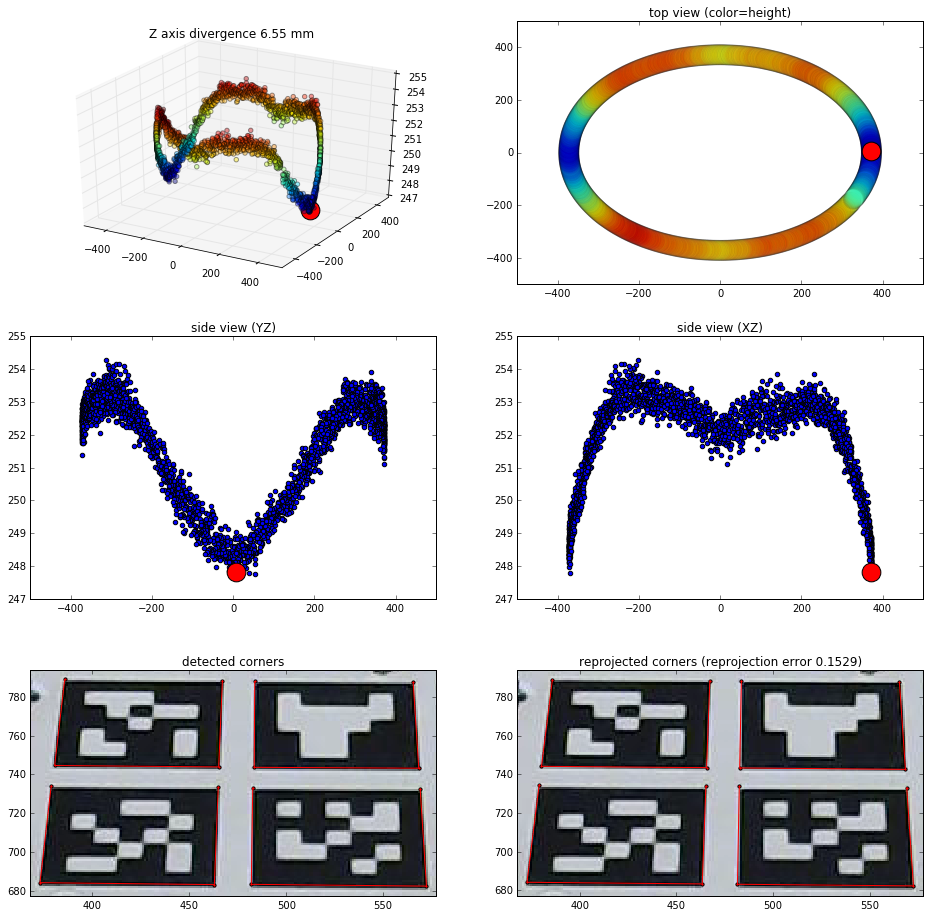

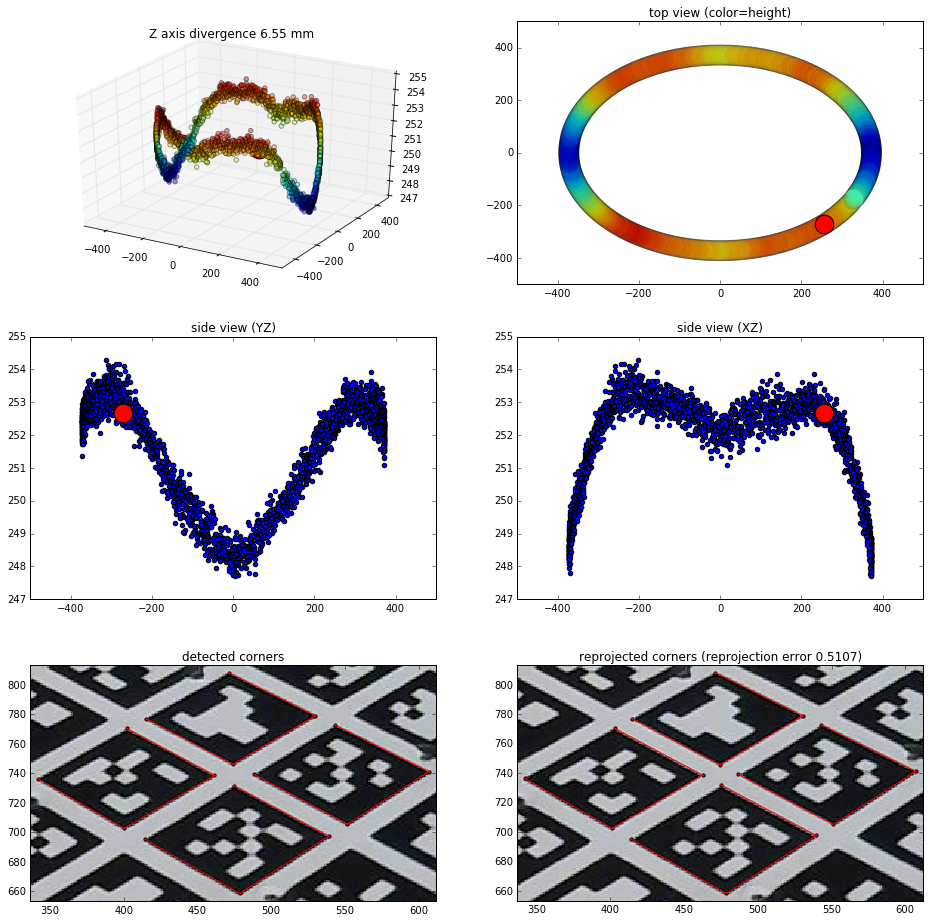

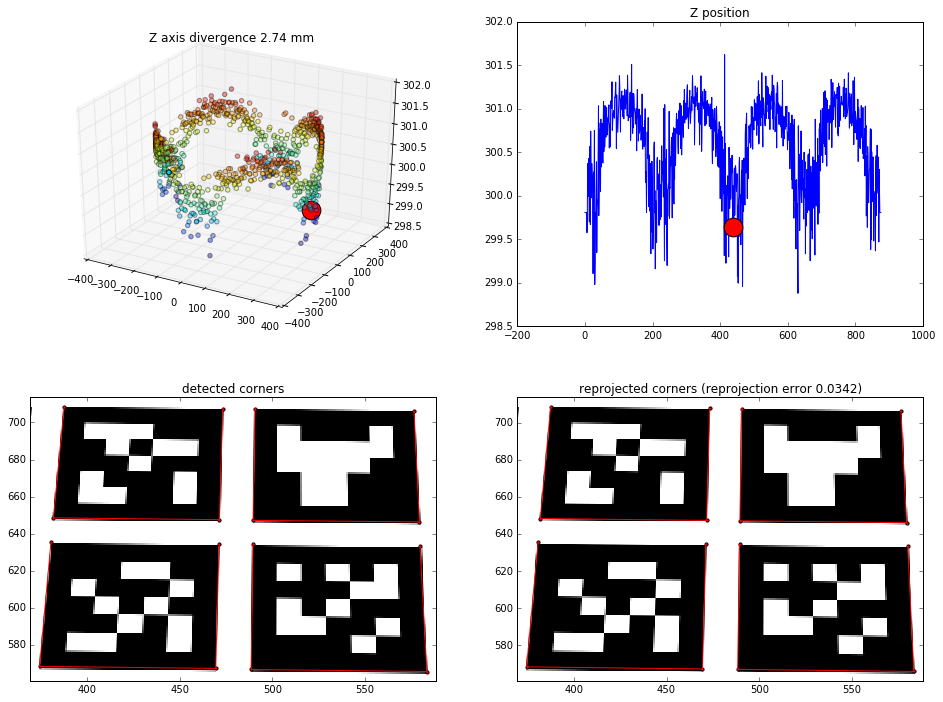

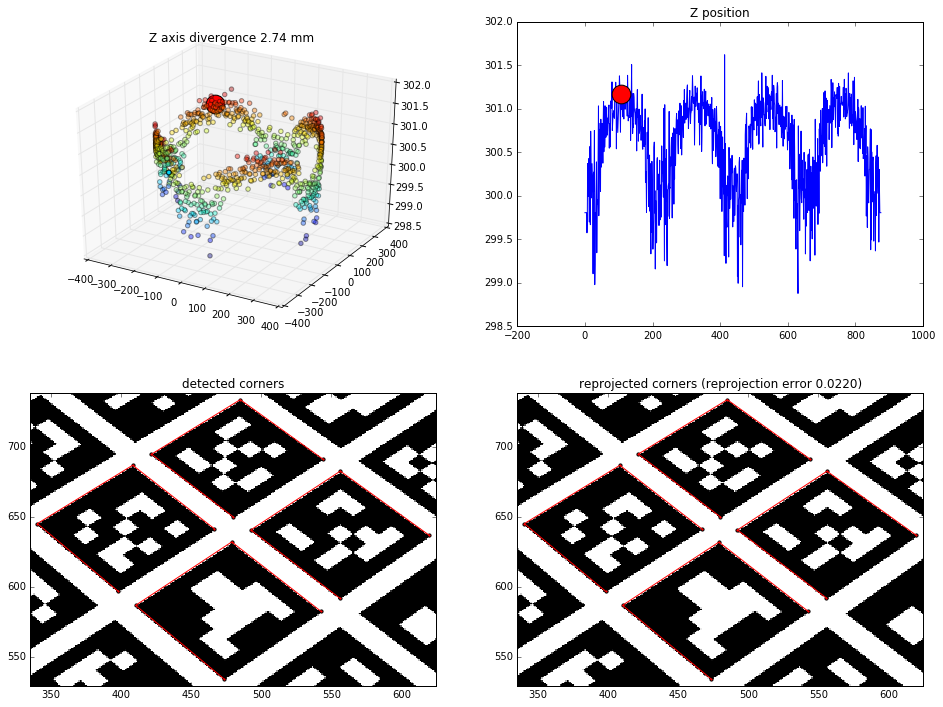
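Because a square marker's outline repeats every 90° of rotation, extremes at 0° and 45° suggest the error is periodic in the marker's angle. One way to check this against logged measurements is to fold each angle into [0°, 45°] and average Z per bin. This is a hypothetical diagnostic, assuming (marker angle, computed Z) pairs are recorded, where the marker angle is the marker's rotation relative to the straight-on view, and assuming the error depends only on the square outline (so +a and -a are treated alike):

```python
from collections import defaultdict
from statistics import mean

def folded_angle(angle_deg):
    """Map an angle to [0, 45]: distance from the nearest multiple of 90 degrees."""
    a = angle_deg % 90.0
    return min(a, 90.0 - a)

def mean_z_by_folded_angle(samples, bin_deg=5.0):
    """samples: iterable of (marker_angle_deg, camera_z).

    Returns {bin_start_deg: mean z} with bins over the folded angle.
    """
    bins = defaultdict(list)
    for angle, z in samples:
        bins[bin_deg * int(folded_angle(angle) // bin_deg)].append(z)
    return {b: mean(zs) for b, zs in sorted(bins.items())}
```

If the binned means vary smoothly between the 0° and 45° bins while the spread inside each bin is small, the dependence is systematic rather than random detection noise.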

The following charts illustrate this relationship when detecting only one marker (one of the four in the middle), so solvePnP works with just 4 point correspondences.

Straight view:

View from 45 degrees rotation:
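For the single-marker case, the four 3D object points can be written down directly. A sketch with a hypothetical helper and `marker_length` parameter (same units as the rest of the board). The corner order follows ArUco's detection order (clockwise from top-left), which is also the order OpenCV documents for the `SOLVEPNP_IPPE_SQUARE` solvePnP flag, a method intended for exactly this four-point square case:

```python
def single_marker_object_points(marker_length):
    """3D corners of one square marker in its own frame (the z = 0 plane).

    Order: top-left, top-right, bottom-right, bottom-left, i.e. the order in
    which ArUco returns detected corners.
    """
    h = marker_length / 2.0
    return [(-h, h, 0.0), (h, h, 0.0), (h, -h, 0.0), (-h, -h, 0.0)]
```

These points are relative to the marker's centre; for a marker on the board they correspond to its corners measured from that marker's own centre rather than from the board origin.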

Things I tried that had no effect on the problem (the relationship was still there):

- using low-resolution camera frames (320x240)

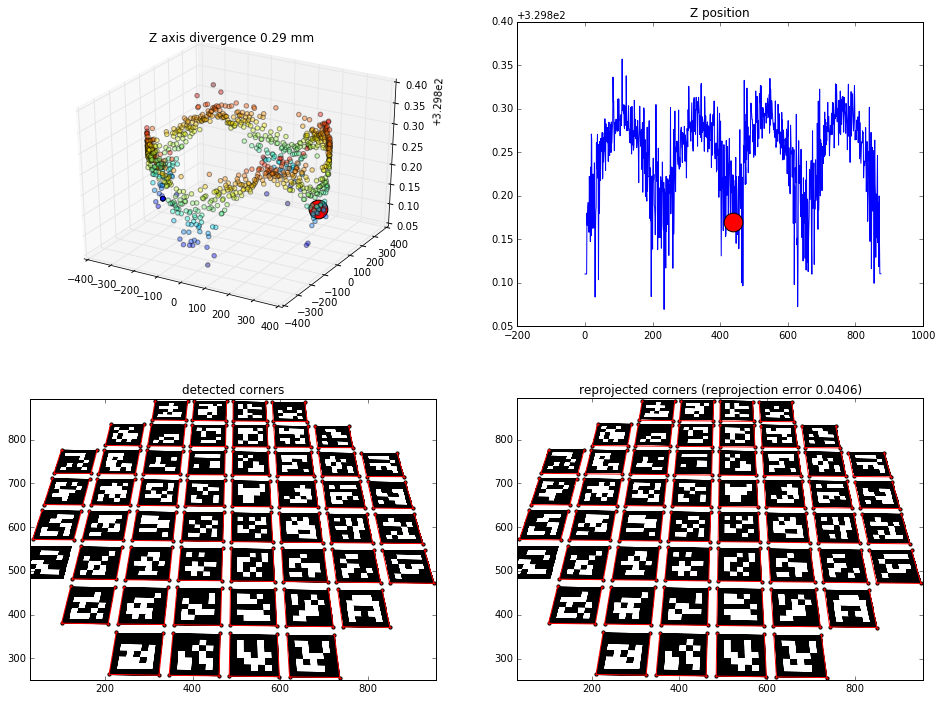

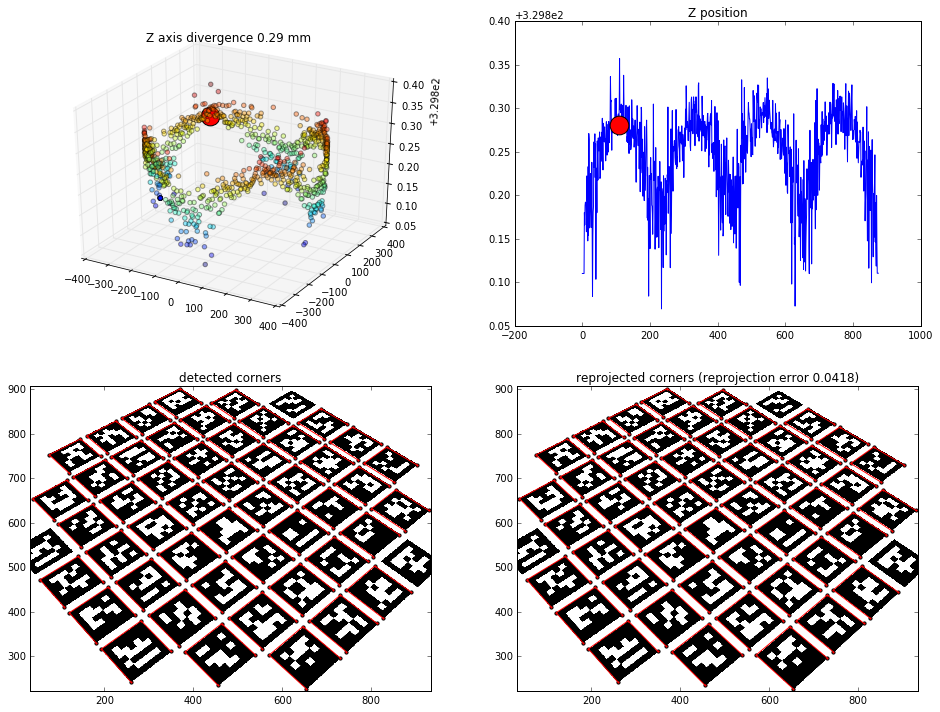

- detecting 4 extra-large markers instead of 52 small ones

- using a different camera

- using a different camera calibration, or zero distortion coefficients

- changing corner detection parameters

- using the two other solvePnP methods, CV_EPNP and CV_P3P (the relationship was still there, but the precision was much worse)
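To compare the attempts listed above on equal terms, each run (one full turn of the turntable) can be reduced to a few numbers describing how much the camera height varies. A hypothetical helper, assuming the camera positions are collected as (x, y, z) tuples in board coordinates:

```python
from statistics import mean, pstdev

def z_consistency(camera_positions):
    """Summarise how much the camera height varies over a set of frames.

    camera_positions: iterable of (x, y, z) in board coordinates.
    Returns (mean z, population standard deviation of z, peak-to-peak range of z).
    """
    zs = [p[2] for p in camera_positions]
    return mean(zs), pstdev(zs), max(zs) - min(zs)
```

A setting or solvePnP flag that genuinely helped would show a smaller standard deviation and range for the same scan.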

Are these results a limitation of solvePnP, or am I doing something wrong?