As the title says, I want to calculate the surface normals of a given depth image using the cross product of neighboring pixels. However, I do not really understand the procedure. Does anyone have experience with this?

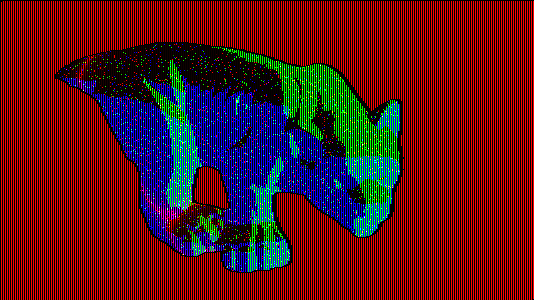

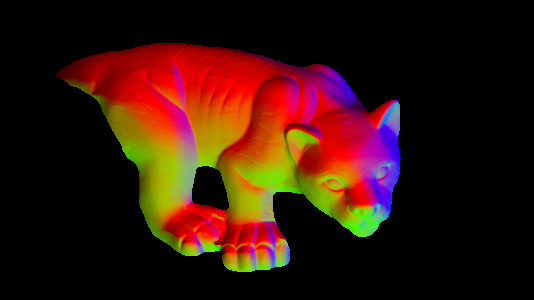

Let's say that we have the following image:

What are the steps to follow?

Update:

I am trying to translate the following pseudocode from this answer to OpenCV.

    dzdx = (z(x+1, y) - z(x-1, y)) / 2.0;
    dzdy = (z(x, y+1) - z(x, y-1)) / 2.0;
    direction = (-dzdx, -dzdy, 1.0)
    magnitude = sqrt(direction.x**2 + direction.y**2 + direction.z**2)
    normal = direction / magnitude

where z(x,y) is my depth image. However, the output of the following does not seem correct to me:

    for(int x = 0; x < depth.rows; ++x)
    {
        for(int y = 0; y < depth.cols; ++y)
        {
            double dzdx = (depth.at<double>(x+1, y) - depth.at<double>(x-1, y)) / 2.0;
            double dzdy = (depth.at<double>(x, y+1) - depth.at<double>(x, y-1)) / 2.0;

            Vec3d d = (dzdx, dzdy, 1.0);
            Vec3d n = normalize(d);
        }
    }
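For reference, here is a minimal sketch of the pseudocode in plain C++, written without OpenCV so that it stays self-contained. The `Normal` struct and the `computeNormals` function are hypothetical names introduced only for this illustration, and the depth map is assumed to be stored row-major in a `std::vector<double>`. It differs from the loop above in three ways that may matter: the loops skip the border so that `x-1` and `y-1` never leave the image, `x` indexes columns and `y` indexes rows, and the direction is built component by component with the negated derivatives, since `(dzdx, dzdy, 1.0)` in C++ is a comma expression rather than a three-element initializer.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper type for this sketch; it is not an OpenCV type.
struct Normal { double x, y, z; };

// Computes per-pixel unit normals of a row-major depth map using central
// differences. Border pixels keep the default (0, 0, 1) because they are
// missing at least one neighbour.
std::vector<Normal> computeNormals(const std::vector<double>& depth,
                                   std::size_t width, std::size_t height)
{
    assert(depth.size() == width * height);
    std::vector<Normal> normals(width * height, Normal{0.0, 0.0, 1.0});
    if (width < 3 || height < 3) return normals;

    // z(x, y) with x as the column index and y as the row index.
    auto z = [&](std::size_t x, std::size_t y) { return depth[y * width + x]; };

    for (std::size_t y = 1; y + 1 < height; ++y) {
        for (std::size_t x = 1; x + 1 < width; ++x) {
            const double dzdx = (z(x + 1, y) - z(x - 1, y)) / 2.0;
            const double dzdy = (z(x, y + 1) - z(x, y - 1)) / 2.0;

            // direction = (-dzdx, -dzdy, 1.0), set one component at a time.
            const double dx = -dzdx;
            const double dy = -dzdy;
            const double dz = 1.0;

            const double magnitude = std::sqrt(dx * dx + dy * dy + dz * dz);
            normals[y * width + x] =
                Normal{dx / magnitude, dy / magnitude, dz / magnitude};
        }
    }
    return normals;
}
```

With OpenCV, the same access would be written as `depth.at<T>(y, x)`, since `at` takes the row first and the column second, and `T` has to match the element type actually stored in the matrix.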

Update2:

OK, I think I am close:

    Mat3d normals(depth.size(), CV_32FC3);

    for(int x = 0; x < depth.rows; ++x)
    {
        for(int y = 0; y < depth.cols; ++y)
        {
            double dzdx = (depth.at<double>(x+1, y) - depth.at<double>(x-1, y)) / 2.0;
            double dzdy = (depth.at<double>(x, y+1) - depth.at<double>(x, y-1)) / 2.0;

            Vec3d d;
            d[0] = -dzdx;
            d[1] = -dzdy;
            d[2] = 1.0;

            Vec3d n = normalize(d);

            normals.at<Vec3d>(x, y)[0] = n[0];
            normals.at<Vec3d>(x, y)[1] = n[1];
            normals.at<Vec3d>(x, y)[2] = n[2];
        }
    }

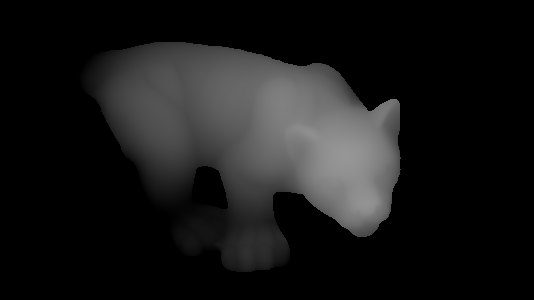

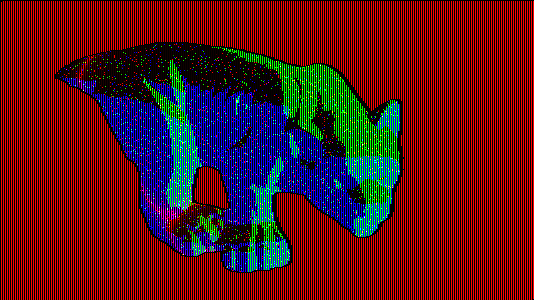

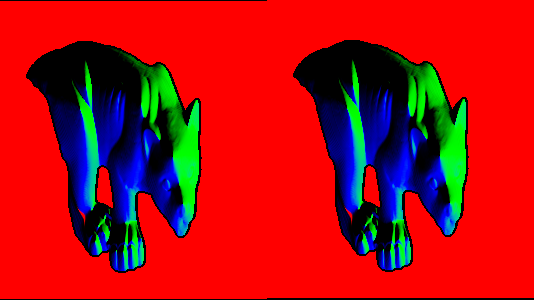

which gives me the following image:

Update 3:

Following @berak's approach:

    depth.convertTo(depth, CV_64FC1); // I do not know why it needs to be converted to a 64-bit image; my input is 32-bit

    Mat nor(depth.size(), CV_64FC3);

    for(int x = 1; x < depth.cols - 1; ++x)
    {
        for(int y = 1; y < depth.rows - 1; ++y)
        {
            /*double dzdx = (depth(y, x+1) - depth(y, x-1)) / 2.0;
            double dzdy = (depth(y+1, x) - depth(y-1, x)) / 2.0;
            Vec3d d = (-dzdx, -dzdy, 1.0);*/

            Vec3d t(x,y-1,depth.at<double>(y-1, x)/*depth(y-1,x)*/);
            Vec3d l(x-1,y,depth.at<double>(y, x-1)/*depth(y,x-1)*/);
            Vec3d c(x,y,depth.at<double>(y, x)/*depth(y,x)*/);

            Vec3d d = (l-c).cross(t-c);

            Vec3d n = normalize(d);
            nor.at<Vec3d>(y,x) = n;
        }
    }

    imshow("normals", nor);
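As a cross-check, the cross product in the loop above can be expanded by hand. The symbols $z_c$, $z_l$ and $z_t$ are shorthand introduced here for the depth at the centre pixel `c`, its left neighbour `l` and its top neighbour `t`; they do not appear in the code itself.

```latex
\begin{aligned}
l - c &= (-1,\ 0,\ z_l - z_c), \\
t - c &= (0,\ -1,\ z_t - z_c), \\
(l - c) \times (t - c) &= \bigl(z_l - z_c,\ z_t - z_c,\ 1\bigr) \\
                       &= \bigl(-(z_c - z_l),\ -(z_c - z_t),\ 1\bigr).
\end{aligned}
```

Read this way, the cross-product version appears to produce the same `(-dzdx, -dzdy, 1.0)` direction as the pseudocode, only with one-sided (backward) differences $z_c - z_l$ and $z_c - z_t$ in place of the central differences.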

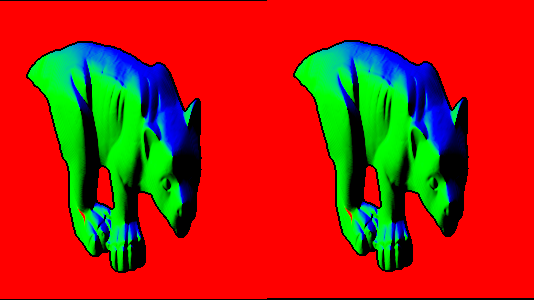

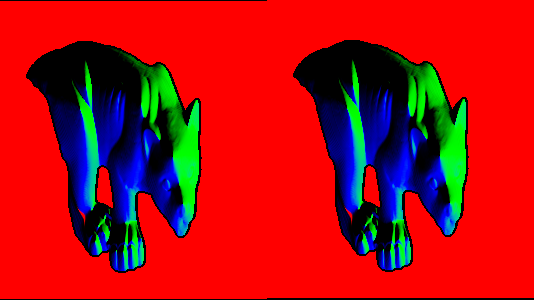

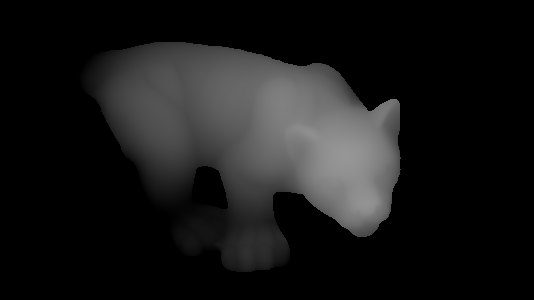

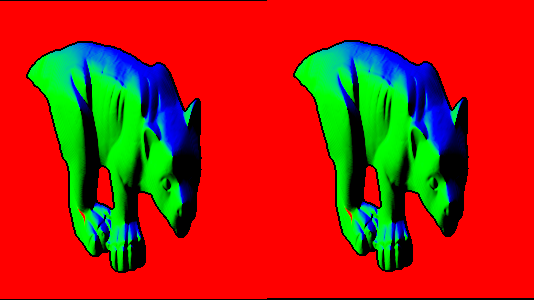

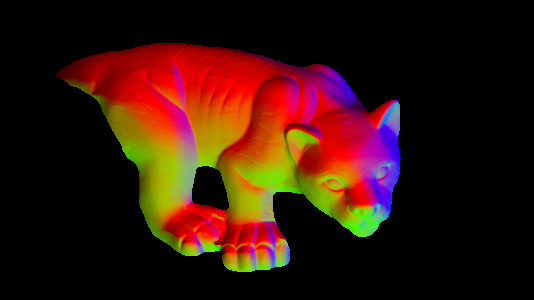

I get this one:

which seems quite OK. However, if I use a 32-bit image instead of a 64-bit one, the image is corrupted: