Has anybody tried to implement it? I did, and I am now trying to evaluate the benefits.

In principle, it is nothing special. A formal neuron is an elementary voting device, so it works perfectly well as a Hough accumulator cell. The result matches the conventional accumulator one to one,

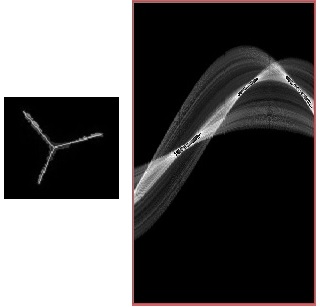

but the implementation is different, and we can make use of this difference. In this picture the Hough space has the following axes: X is the angle of the line, measured clockwise from the X axis of the image (0 to 180 degrees); Y (downwards) is rho, which may be negative, with zero in the centre.
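To make the "neuron as voting device" idea concrete, here is a minimal sketch of the feed-forward pass in plain C++. It assumes the usual normal form rho = x·cos(theta) + y·sin(theta), which may differ from the angle convention in the picture, and unit weights on every connection. The names `HoughLayer` and `houghForward` are hypothetical and not taken from my actual code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical container for the second layer: one neuron per (theta, rho) pair.
struct HoughLayer {
    int numTheta = 0;               // columns: angle bins covering [0, 180) degrees
    int rhoMax = 0;                 // rows run from -rhoMax to +rhoMax, zero in the centre
    std::vector<float> activation;  // row-major, (2 * rhoMax + 1) rows x numTheta columns

    float at(int thetaBin, int rho) const {
        return activation[static_cast<std::size_t>(rho + rhoMax) * numTheta + thetaBin];
    }
};

// Feed-forward pass: each pixel is an input, each (theta, rho) cell is a neuron
// that sums the intensities of the pixels lying on its line (weight 1, else 0).
// Assumption: normal form rho = x*cos(theta) + y*sin(theta).
HoughLayer houghForward(const std::vector<float>& img, int width, int height, int numTheta) {
    assert(width > 0 && height > 0 && numTheta > 0);
    assert(img.size() == static_cast<std::size_t>(width) * height);
    const double kPi = 3.14159265358979323846;

    HoughLayer layer;
    layer.numTheta = numTheta;
    layer.rhoMax = static_cast<int>(std::ceil(std::hypot(width, height)));
    layer.activation.assign(static_cast<std::size_t>(2 * layer.rhoMax + 1) * numTheta, 0.0f);

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float v = img[static_cast<std::size_t>(y) * width + x];
            if (v == 0.0f) continue;  // dark pixels do not vote
            for (int t = 0; t < numTheta; ++t) {
                const double theta = t * kPi / numTheta;
                const long rho = std::lround(x * std::cos(theta) + y * std::sin(theta));
                const std::size_t row = static_cast<std::size_t>(rho + layer.rhoMax);
                layer.activation[row * numTheta + t] += v;
            }
        }
    }
    return layer;
}
```

For a 5x5 image with a horizontal line at y = 2, `houghForward(img, 5, 5, 180).at(90, 2)` collects the votes of all five line pixels.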

As I already noted in a reply to one of the questions, the Hough transform exists in two variants, standard and probabilistic, and the advantages are divided between them: you can't have all of them at once. My goal is to fuse the two and combine the benefits of both. For this purpose we can use a hybrid NN. A simple perceptron performs the standard Hough transform, that is, each neuron of its second layer represents one (theta, rho) pair. Then we add positive feedback from these neurons to the initial image, which makes the network recurrent (RNN). As a result, all pixels belonging to the same line are boosted relative to the background, and we can extract line segments with a simple threshold operation.
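The feedback step described above could be sketched roughly as follows. This is only an illustration under my own assumptions: a single forward pass followed by a single feedback pass, a multiplicative update, and each pixel taking the strongest activation among the neurons it feeds. The function name `boostLinePixels` and the `gain` parameter are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One forward pass (standard Hough voting) followed by one positive-feedback pass.
// Assumed update rule: out = in * (1 + gain * best / peak), where "best" is the
// strongest activation among the (theta, rho) neurons the pixel votes for.
std::vector<float> boostLinePixels(const std::vector<float>& img, int width, int height,
                                   int numTheta, float gain) {
    assert(width > 0 && height > 0 && numTheta > 0);
    assert(img.size() == static_cast<std::size_t>(width) * height);
    const double kPi = 3.14159265358979323846;
    const int rhoMax = static_cast<int>(std::ceil(std::hypot(width, height)));
    std::vector<float> act(static_cast<std::size_t>(2 * rhoMax + 1) * numTheta, 0.0f);

    // Index of the neuron that pixel (x, y) feeds for angle bin t.
    auto cell = [&](int x, int y, int t) -> std::size_t {
        const double theta = t * kPi / numTheta;
        const long rho = std::lround(x * std::cos(theta) + y * std::sin(theta));
        return static_cast<std::size_t>(rho + rhoMax) * numTheta + t;
    };

    // Forward: every bright pixel votes for all lines passing through it.
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            const float v = img[static_cast<std::size_t>(y) * width + x];
            if (v == 0.0f) continue;
            for (int t = 0; t < numTheta; ++t) act[cell(x, y, t)] += v;
        }

    std::vector<float> out(img);  // a real copy, so the input stays untouched
    const float peak = *std::max_element(act.begin(), act.end());
    if (peak <= 0.0f) return out;  // empty image: nothing to boost

    // Feedback: strong neurons amplify the pixels that contributed to them.
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            if (img[i] == 0.0f) continue;
            float best = 0.0f;
            for (int t = 0; t < numTheta; ++t) best = std::max(best, act[cell(x, y, t)]);
            out[i] = img[i] * (1.0f + gain * best / peak);
        }
    return out;
}
```

After this pass, pixels that sit on a long line end up brighter than isolated pixels of the same original intensity, so a plain threshold on the output separates them.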

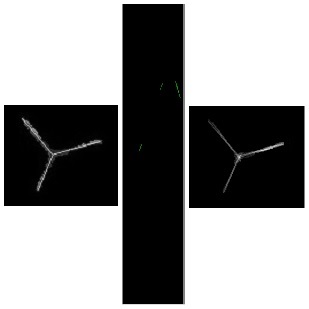

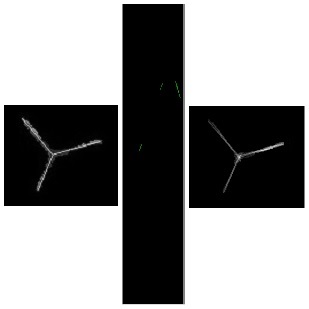

There was a bug in the previous version. I forgot that when I write `Mat Img1 = Img;` both variables refer to the same array. It turns out that even a 1-layer perceptron is workable to some extent. Keep this in mind if you need to process a very large image and can't afford a copy. The corrected variant looks better,

but there is still a problem. Frankly, my primary goal was to develop a method that can detect the corners of the cube. Do you see the bright spot in the centre of the right image? That's it. Unfortunately, the right line is highlighted too, and the maximum intensity is there, not in the centre...

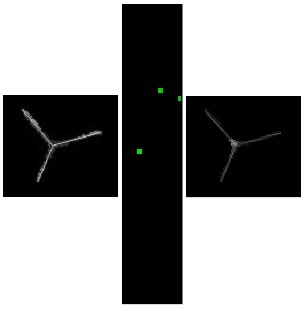

The reason is that the Hough representation of that line (the rightmost dash in Hough space) is larger. I equalized them all using erosion and dilation (without neural nets, although one function needs a small modification). Now the result is quite acceptable.

You can read a more comprehensible description of this journey on my site: article. If somebody wants to use this method and needs assistance, contact me here or via the support email on that site.