I am working on a project in Python to calibrate a small thermal camera sensor (FLIR Lepton). Because of limited resolution initial distortion removal is not very exact. By using an iterative method I should be able to refine this calibration (for those of you with access to scientific articles, see this link). This requires me to take the following steps:

- Use a set of images of a calibration pattern to estimate the initial distortion

- Undistort the images

- Apply a perspective correction to the undistorted images

- Re-estimate the calibration point positions

- Remap these refined calibration points back to the original images

- Use the refined points to re-estimate the distortion

- Repeat until the RMS-error converges

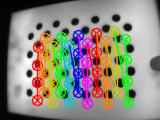

I am stuck at step four. Below you see the commands I used to remove the camera distortion from the original image using the camera matrices and the distortion matrix.

mapx,mapy = cv2.initUndistortRectifyMap(mtx,dist,None,newcameramtx,(w,h),5)

dst = cv2.remap(img,mapx,mapy,cv2.INTER_LINEAR)

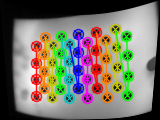

So far I am not able to figure out how to reverse these commands to remap the new point positions to the original image. So far I am able to do (roughly in order of the steps above):

I have looked online and found the same question a bunch of times, with several examples in C++, which I cannot fully comprehend and modify for my purposes. I have tried the solution suggested by this post but this has not yielded the desired results, see last image above. There is my code of that solution

def distortBackPoints(x, y, cameraMatrix, dist):

fx = cameraMatrix[0,0]

fy = cameraMatrix[1,1]

cx = cameraMatrix[0,2]

cy = cameraMatrix[1,2]

k1 = dist[0][0] * -1

k2 = dist[0][1] * -1

k3 = dist[0][4] * -1

p1 = dist[0][2] * -1

p2 = dist[0][3] * -1

x = (x - cx) / fx

y = (y - cy) / fy

r2 = x*x + y*y

xDistort = x * (1 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2)

yDistort = y * (1 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2)

xDistort = xDistort + (2 * p1 * x * y + p2 * (r2 + 2 * x * x))

yDistort = yDistort + (p1 * (r2 + 2 * y * y) + 2 * p2 * x * y)

xDistort = xDistort * fx + cx;

yDistort = yDistort * fy + cy;

return xDistort, yDistort

Then using this command to call the function

corners2 = []

for point in corners:

x, y = distortBackPoints(point[0][0], point[0][1], newcameramtx, dist)

corners2.append([x,y])

I am new to OpenCV and computer vision, so my knowledge about the algebra of these solutions is limited. Any hands on examples or correction to my current code would be greatly appreciated.

Kind regards,

Bart