Estimate white background

Hi,

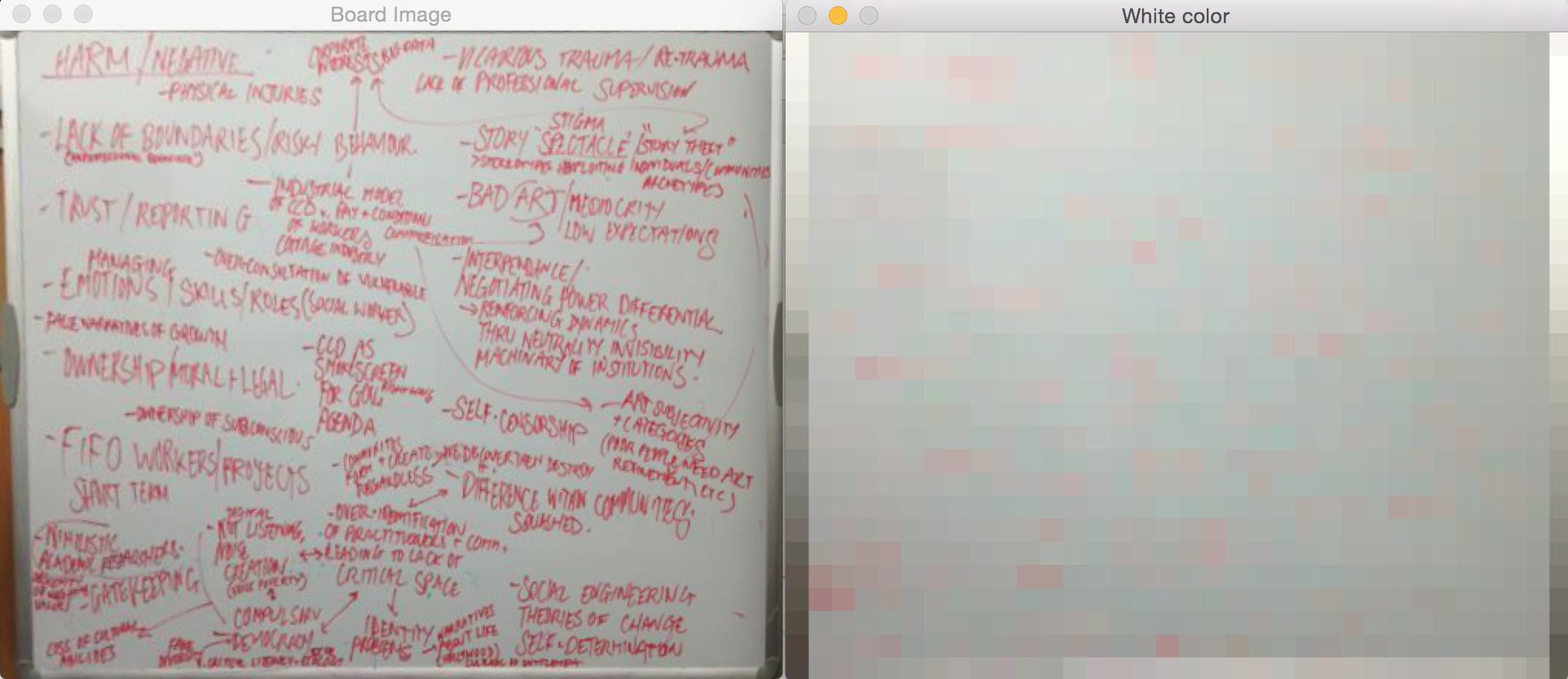

I have an image with a white but uneven background (due to lighting). I'm trying to estimate the background color and transform the image into one with a true white background. To do this, I estimated the white color of each 15x15-pixel block based on its luminosity, which gave me the map shown on the right of the image above.
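For reference, here is a minimal NumPy sketch of the per-block estimate described above, together with the final correction step (dividing each pixel by its background white and rescaling to 255). The question does not say exactly how white is derived from luminosity, so the percentile rule (`pct=90`) and the helper names `estimate_block_white` and `correct_blocky` are hypothetical. Applying the map tile by tile like this reproduces the blocky transitions the question is about.

```python
import numpy as np

BLOCK = 15  # tile size used in the question


def luminance(rgb):
    """Rec. 601 luma for an RGB float array of shape (..., 3).

    Note: cv2.imread returns BGR; reverse the channel order first.
    """
    return rgb @ np.array([0.299, 0.587, 0.114])


def estimate_block_white(img, block=BLOCK, pct=90.0):
    """Return a (rows, cols, 3) map with one 'white' color per tile.

    Assumption (hypothetical rule): a tile's white is the mean color of
    its pixels whose luminance is at or above the tile's pct-th percentile.
    Trailing pixels that do not fill a whole tile are ignored.
    """
    img = img.astype(np.float64)
    rows, cols = img.shape[0] // block, img.shape[1] // block
    white = np.empty((rows, cols, 3))
    for r in range(rows):
        for c in range(cols):
            tile = img[r * block:(r + 1) * block,
                       c * block:(c + 1) * block].reshape(-1, 3)
            lum = luminance(tile)
            # The brightest pixel always passes, so the selection is never empty.
            white[r, c] = tile[lum >= np.percentile(lum, pct)].mean(axis=0)
    return white


def correct_blocky(img, white, block=BLOCK):
    """Divide each pixel by its own tile's white and rescale to 0..255."""
    # Nearest-neighbour expansion: every pixel in a tile gets the same white.
    bg = np.repeat(np.repeat(white, block, axis=0), block, axis=1)
    h, w = bg.shape[:2]
    out = img[:h, :w].astype(np.float64) / np.maximum(bg, 1e-6) * 255.0
    return np.clip(out, 0, 255).astype(np.uint8)


if __name__ == "__main__":
    # Synthetic "page": white paper dimmed by a left-to-right lighting gradient.
    gain = np.linspace(0.6, 1.0, 60)[None, :, None]
    img = (np.full((45, 60, 3), 250.0) * gain).astype(np.uint8)
    white = estimate_block_white(img)
    print(white.shape)  # (3, 4, 3)
    fixed = correct_blocky(img, white)
```

Because each tile uses a single constant, the corrected image still shows steps at tile borders, and any outlier tile is copied straight into the result.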

Now I want to interpolate the colors so that the transition from each 15x15 block to its neighbors is smoother, and I also want to eliminate outliers (the pink dots on the left-hand side). Could anyone suggest a good technique or algorithm for this? (Ideally within the OpenCV library, but not necessarily.)
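One common approach (my suggestion, not something stated in this thread) is to clean the small block map first with a median filter, which replaces isolated outlier blocks with the median of their neighbors, and only then interpolate it up to full resolution so the background varies continuously between block centers. The sketch below assumes the block map is a float NumPy array of shape `(rows, cols, 3)`; the function names, the 3x3 window and the choice of bilinear interpolation are all illustrative assumptions. In OpenCV the same two steps correspond roughly to `cv2.medianBlur` on the small map followed by `cv2.resize` with `cv2.INTER_LINEAR` (or `cv2.INTER_CUBIC` for a smoother result).

```python
import numpy as np


def median_filter_map(white, k=3):
    """Per-channel k x k median filter of a small (rows, cols, 3) block map.

    Isolated outlier blocks (like the pink dots) are replaced by the median
    of their neighbourhood; borders are handled by replicating edge blocks.
    """
    pad = k // 2
    padded = np.pad(white, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    rows, cols = white.shape[:2]
    # Stack all k*k shifted copies of the map and take the median across them.
    stack = np.stack([padded[dy:dy + rows, dx:dx + cols]
                      for dy in range(k) for dx in range(k)])
    return np.median(stack, axis=0)


def upsample_bilinear(white, block, out_h, out_w):
    """Bilinearly interpolate block-centre values to a full-size background."""
    rows, cols = white.shape[:2]
    # Pixel centres expressed in block units, relative to block centres.
    ys = np.clip((np.arange(out_h) + 0.5) / block - 0.5, 0, rows - 1)
    xs = np.clip((np.arange(out_w) + 0.5) / block - 0.5, 0, cols - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, rows - 1)
    x1 = np.minimum(x0 + 1, cols - 1)
    wy = (ys - y0)[:, None, None]  # vertical weights, shape (out_h, 1, 1)
    wx = (xs - x0)[None, :, None]  # horizontal weights, shape (1, out_w, 1)
    top = white[y0][:, x0] * (1 - wx) + white[y0][:, x1] * wx
    bot = white[y1][:, x0] * (1 - wx) + white[y1][:, x1] * wx
    return top * (1 - wy) + bot * wy


def flatten_background(img, white, block=15, k=3):
    """Remove outliers, interpolate smoothly, then divide out the background."""
    bg = upsample_bilinear(median_filter_map(white, k), block, *img.shape[:2])
    out = img.astype(np.float64) / np.maximum(bg, 1e-6) * 255.0
    return np.clip(out, 0, 255).astype(np.uint8)
```

The median runs before the interpolation on purpose: averaging or blurring would smear a pink outlier into its neighbors, whereas a median simply discards it as long as outliers are isolated (fewer than half of each window). If bilinear interpolation still leaves visible creases along block centers, a cubic resize or a light Gaussian blur of the full-size background are reasonable alternatives.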

Have a look here