OpenCV_traincascade monster RAM usage

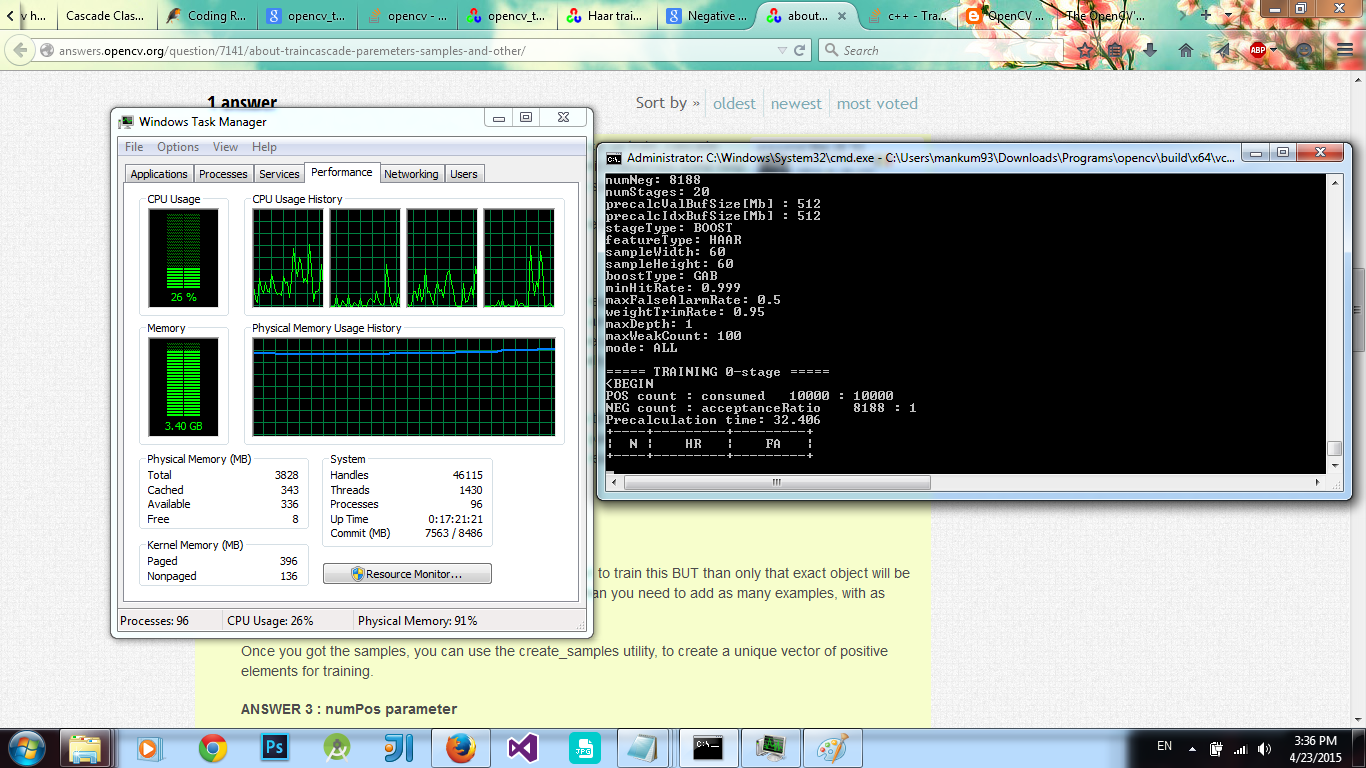

Hello, I just started training my flower classifier with the opencv_traincascade utility. Here is the status:

Can anybody tell me how long it is going to take? Would LBP take less than a day? (Approximately how long?)

My specs are pretty low: Core i3, Windows 7 x64, 4 GB RAM.

The RAM usage shown in the picture includes Firefox's RAM usage.

Also, can I press Ctrl+C to abort the training and resume it later?

P.S.: Here is the command I used:

opencv_traincascade -data classifier -vec samples1.vec -bg negatives.txt -numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.5 -numPos 10000 -numNeg 8188 -w 60 -h 60 -mode ALL -precalcValBufSize 512 -precalcIdxBufSize 512

Yes, as stated in the OpenCV docs (http://docs.opencv.org/doc/user_guide...) you can stop and resume the training later as long as you don't delete the files in the training folder:

"After the opencv_traincascade application has finished its work, the trained cascade will be saved in cascade.xml file in the folder, which was passed as -data parameter. Other files in this folder are created for the case of interrupted training, so you may delete them after completion of training."

In fact, if my memory serves me right, the next time you run the app it will ask you whether to resume the previous training or start from scratch.

@Lorena GdL: I checked the classifier folder. It doesn't even have the .xml file yet. As you can see in the image, it's at stage 0. Can I cancel it at this point?

I don't think so. Again, if I remember right, training is "saved" as each stage completes, so files named "stage0", "stage1", etc. will appear in the folder over time, and those are the files used for resuming. In any case, cascade.xml doesn't appear until the training is completed.
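A small sketch for checking training progress without interrupting it. It assumes, as described above, that each finished stage is saved in the -data folder as stage0.xml, stage1.xml, ... (the exact file naming is an assumption, not confirmed in this thread) and that cascade.xml only appears at the end. The helper names `count_completed_stages` and `training_status` are hypothetical.

```python
import os
import re

# Assumption: finished stages are saved as stage0.xml, stage1.xml, ...
# in the -data folder, and cascade.xml is only written at the very end.
STAGE_FILE = re.compile(r"^stage(\d+)\.xml$")


def count_completed_stages(filenames):
    """Count consecutive stage files starting at stage0.xml."""
    indices = set()
    for name in filenames:
        match = STAGE_FILE.match(name)
        if match:
            indices.add(int(match.group(1)))
    count = 0
    while count in indices:  # stop at the first missing stage index
        count += 1
    return count


def training_status(data_dir):
    """Return (completed_stages, finished) for a -data folder.

    A missing folder is reported as (0, False)."""
    names = os.listdir(data_dir) if os.path.isdir(data_dir) else []
    return count_completed_stages(names), "cascade.xml" in names
```

For the command in the question, `training_status("classifier")` would report how many stages are safely on disk; while it still returns 0 completed stages, interrupting would discard all of the work done so far.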

Could you supply the complete training command? Keep in mind that all features and weak classifiers are stored in memory. A 24x24 window already has about 180,000 features, and that number grows very quickly as you enlarge the sample window (roughly with the square of the window area), while the memory needed also scales with the number of samples. With only 4 GB of RAM available and more than 8,000 negatives, this is indeed going to require a RAM-based monster :P
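To put the "RAM monster" into numbers, here is a back-of-the-envelope sketch. It counts upright features for the five classic Viola-Jones Haar prototypes (2x1, 1x2, 3x1, 1x3, 2x2), which is not exactly OpenCV's feature set (and -mode ALL also adds 45-degree rotated features, so the real count is higher). It also assumes each precalculated feature value is a 4-byte float stored once per sample. All function names are hypothetical.

```python
# Assumption: classic Viola-Jones prototypes, not OpenCV's exact feature set.
PROTOTYPES = [(2, 1), (1, 2), (3, 1), (1, 3), (2, 2)]


def _positions(window, base):
    """Sum, over integer scales s with s*base <= window, of the number of
    positions a feature of length s*base can take along one axis."""
    return sum(window - s * base + 1 for s in range(1, window // base + 1))


def count_haar_features(w, h):
    """Number of upright Haar-like features in a w x h window."""
    return sum(_positions(w, a) * _positions(h, b) for a, b in PROTOTYPES)


def full_precalc_gib(num_features, num_samples, bytes_per_value=4):
    """GiB needed to cache one value per feature per sample.
    Assumption: 4-byte float per value."""
    return num_features * num_samples * bytes_per_value / 2**30


def features_fitting_in_buffer(buf_mb, num_samples, bytes_per_value=4):
    """How many features' values fit in a buffer of buf_mb megabytes."""
    return (buf_mb * 2**20) // (bytes_per_value * num_samples)
```

Under these assumptions, `count_haar_features(24, 24)` gives 162,336 and `count_haar_features(60, 60)` gives 6,263,400, about 39 times more for the 60x60 window in the command. Caching every value for 10,000 + 8,188 samples would take roughly 424 GiB, while a 512 MB value buffer holds the values of only about 7,400 features; the remaining values have to be computed on demand, which is where the time goes.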

@StevenPuttemans: The command I used is:

opencv_traincascade -data classifier -vec samples1.vec -bg negatives.txt -numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.5 -numPos 10000 -numNeg 8188 -w 60 -h 60 -mode ALL -precalcValBufSize 512 -precalcIdxBufSize 512

@StevenPuttemans: Feel free to tell me to change any option you want, like decreasing the number of samples or tuning the parameters. I just checked a minute ago: it is still running, with | 2 | 1 | 1 | appearing in the second row.

Lol o_O this will crash for sure with only 4 GB of RAM. You have 10,000 unique positives, and at each stage you need to grab 8,000 unique negatives that the previous stages do not already reject as negatives. This means you will need at least, let's say, 80,000 negative windows to train 6-7 stages. Do you have those? Also, setting minHitRate to 0.999 is very high and very strict; it will result in a very complex cascade classifier. Lower it to 0.995, for example. Also, -precalcValBufSize 512 -precalcIdxBufSize 512 will need to be increased. With your settings, set them to at least 1024 to speed it all up!

@StevenPuttemans: Pretty scary what you just said! I guess I'll try LBP; people say it is faster. I will also tweak the rest of the parameters as you suggested.
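The per-stage targets in the command compound over the whole cascade. A rough sketch, assuming every stage lands exactly on minHitRate and maxFalseAlarmRate (real stages often beat the false-alarm target a little); the function names are hypothetical. The second function models how many background windows the sampler has to test before it finds numNeg windows that all earlier stages still accept, which is a different quantity from the unique-negatives estimate in the comment above.

```python
# Assumption: every stage hits its per-stage targets exactly.


def overall_rates(min_hit_rate, max_false_alarm_rate, num_stages):
    """Approximate (hit rate, false alarm rate) of the whole cascade."""
    return min_hit_rate ** num_stages, max_false_alarm_rate ** num_stages


def windows_to_scan(num_neg, max_false_alarm_rate, stage):
    """Rough number of background windows to test in order to collect
    num_neg windows that stages 0..stage-1 still accept as positives."""
    return num_neg / (max_false_alarm_rate ** stage)
```

With 20 stages, minHitRate 0.999 gives an overall hit rate of about 0.980 and 0.995 gives about 0.905, both with an overall false alarm rate of 0.5^20 (about one in a million). `windows_to_scan(8188, 0.5, 6)` is 524,032: collecting the negatives for stage 6 means testing roughly 64 times more background windows than for stage 0, which is why negative sampling slows down in later stages.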

Oh, I didn't notice that you were using HAAR (the default when -featureType is not given). Oh yes, that is a float-based approach, whereas LBP uses pure integer calculations and thus goes a lot faster!
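To illustrate the integer-only point, here is a minimal multi-block LBP (MB-LBP) sketch: block sums come from an integral image, and each of the 8 neighbouring blocks in a 3x3 grid contributes one bit by comparing its sum with the centre block's sum. As far as I know, the LBP features in opencv_traincascade are of this multi-block kind, but this is an illustration of the idea, not OpenCV's implementation; in particular the bit order here is arbitrary and the function names are hypothetical.

```python
def integral_image(img):
    """Integral image with an extra zero row/column (integers only)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def block_sum(ii, x, y, bw, bh):
    """Sum of the bw x bh block with top-left corner (x, y): 4 lookups."""
    return ii[y + bh][x + bw] - ii[y][x + bw] - ii[y + bh][x] + ii[y][x]


def mb_lbp_code(ii, x, y, bw, bh):
    """8-bit code for a 3x3 grid of bw x bh blocks starting at (x, y).

    A neighbour block sets its bit if its sum >= the centre block's sum.
    Illustrative only: the bit order is an arbitrary choice, not OpenCV's."""
    center = block_sum(ii, x + bw, y + bh, bw, bh)
    # neighbour grid offsets, clockwise from the top-left block
    offsets = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (1, 2), (0, 2), (0, 1)]
    code = 0
    for bit, (cx, cy) in enumerate(offsets):
        if block_sum(ii, x + cx * bw, y + cy * bh, bw, bh) >= center:
            code |= 1 << (7 - bit)
    return code
```

Everything above is integer additions, subtractions and comparisons, and the resulting feature value is a small categorical code (0-255) rather than a floating-point number, which is one reason LBP training tends to be much cheaper than HAAR.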

@StevenPuttemans: Yeah, I started it and the memory consumption has come down to around 830 MB, about the same as running Photoshop or Firefox. Approximately how long should I expect it to take to complete?