[Solved] Find all the cards that are on top (not occluded) and their centers

Here is my situation. This is only an example: I do not work with static images but with camera captures, although the strategy to get the result is very similar.

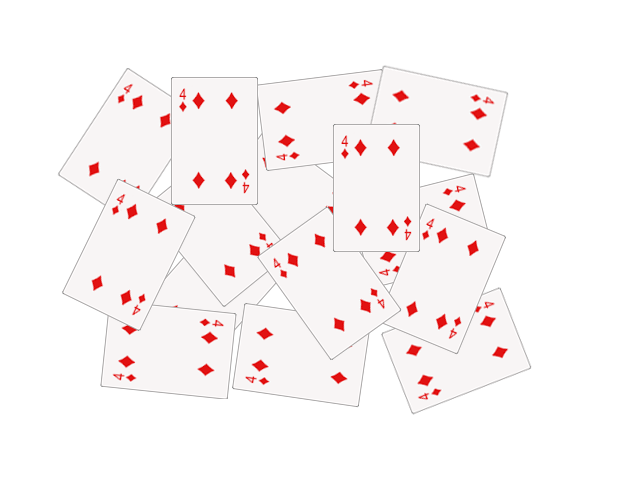

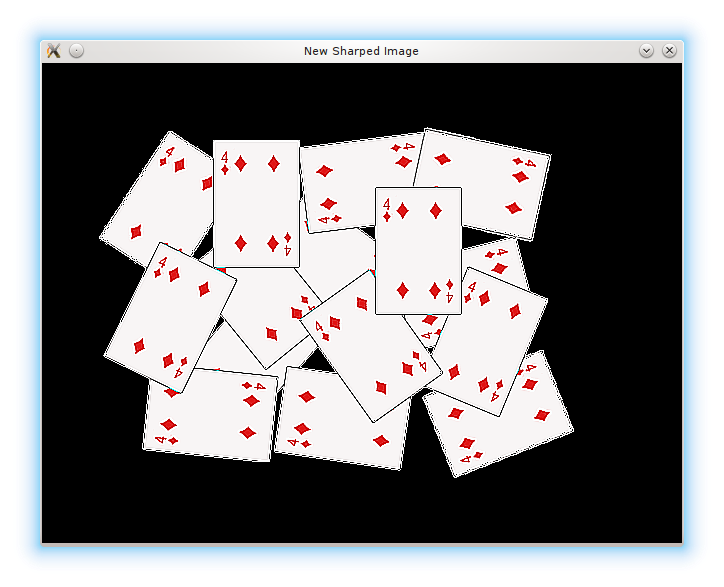

Input:

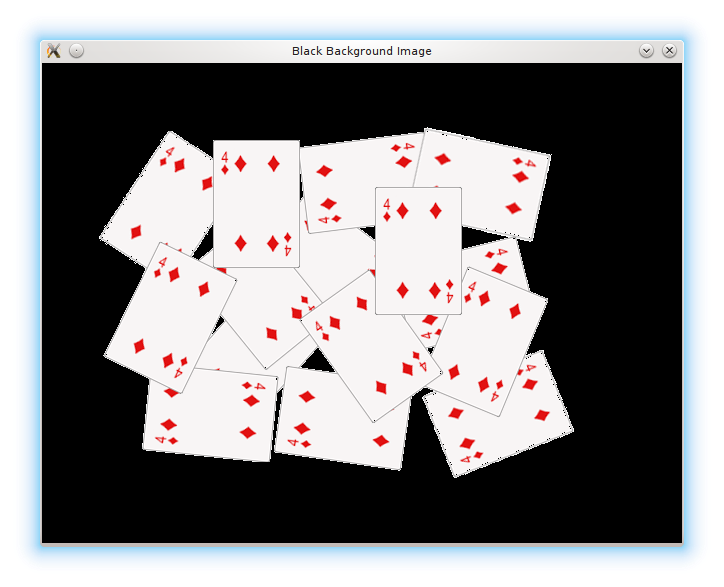

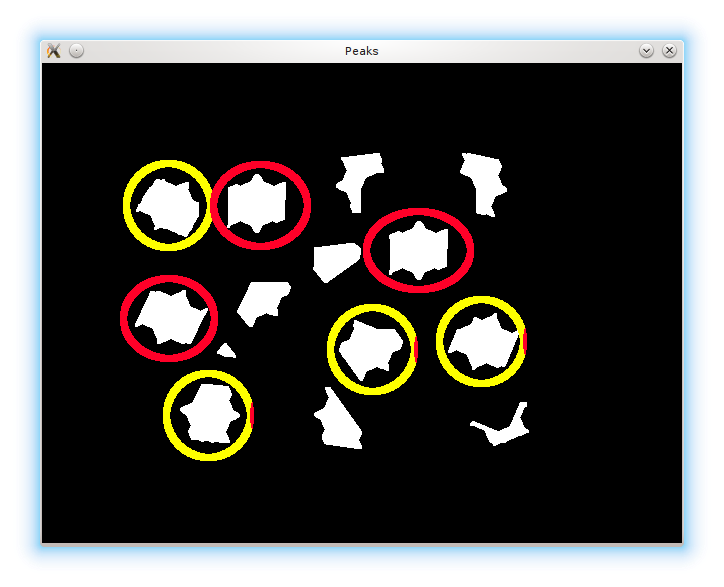

I want to find all the cards that are on top and their centers. At present, to speed up the computation, I first reduce the image to 16 colors and then keep only the red zones.

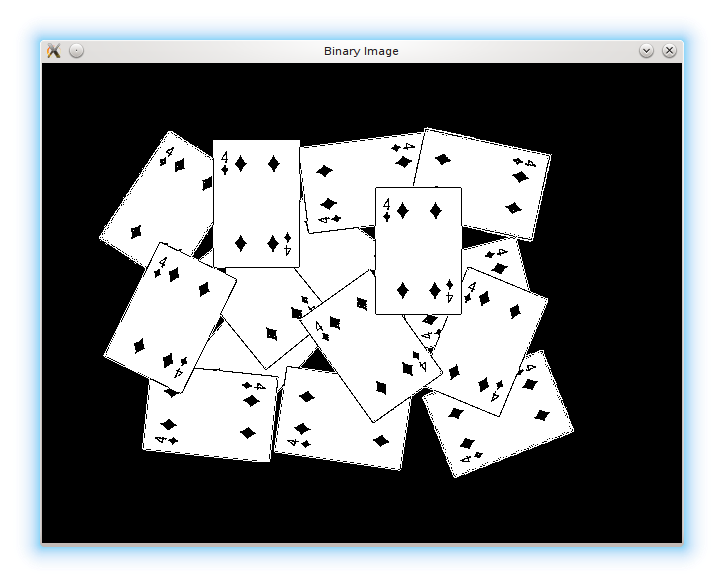
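The post does not say how the image is reduced to 16 colors. A minimal NumPy sketch, assuming the standard 16-color CGA palette (an assumption; any fixed palette works the same way), maps each pixel to its nearest palette entry and keeps the pixels that land on "red" or "light red" as a single-channel 0/255 mask. The names `PALETTE`, `RED_INDICES`, `quantize_16` and `red_mask` are hypothetical.

```python
import numpy as np

# Assumption: the standard 16-colour CGA palette, in RGB order.
PALETTE = np.array([
    (0, 0, 0), (0, 0, 170), (0, 170, 0), (0, 170, 170),          # black, blue, green, cyan
    (170, 0, 0), (170, 0, 170), (170, 85, 0), (170, 170, 170),   # red, magenta, brown, light gray
    (85, 85, 85), (85, 85, 255), (85, 255, 85), (85, 255, 255),  # dark gray, light blue/green/cyan
    (255, 85, 85), (255, 85, 255), (255, 255, 85), (255, 255, 255),  # light red/magenta, yellow, white
], dtype=np.int32)

RED_INDICES = (4, 12)  # palette entries treated as "red": red and light red

def quantize_16(img_rgb):
    """Return, per pixel, the index of the nearest palette colour (squared RGB distance)."""
    px = img_rgb.reshape(-1, 3).astype(np.int32)
    # (n_pixels, 16) matrix of squared distances to every palette colour
    d = ((px[:, None, :] - PALETTE[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1).reshape(img_rgb.shape[:2])

def red_mask(img_rgb):
    """Single-channel uint8 mask: 255 where the quantized colour is red, else 0."""
    idx = quantize_16(img_rgb)
    return np.where(np.isin(idx, RED_INDICES), 255, 0).astype(np.uint8)
```

Frames from `cv2.VideoCapture` are in BGR order, so the channels would need reversing (`frame[..., ::-1]`) before calling `red_mask`. The distance matrix holds about `H·W·16·3` integers per frame, so its cost is worth measuring against the real-time budget.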

- Convert to a single-channel black-and-white image.

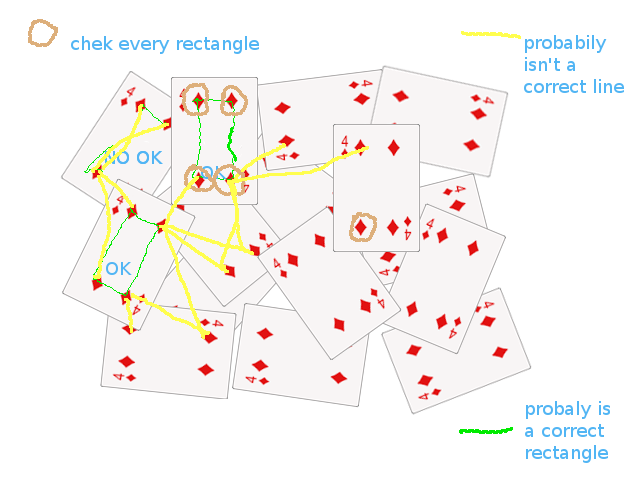

- Find all contours and their center points (saved in a vector) whose area is about the size of the rectangles (see the brown circles in the second image).

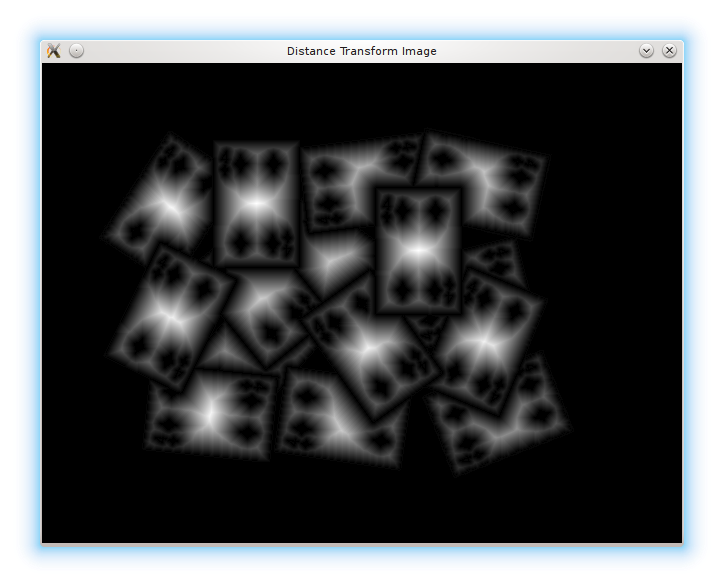

- Iterate over the vector of center points and compute the distances between all the points found.

- If the distances are consistent with a rectangle, the points are kept (green lines in the second image); otherwise the line is discarded (yellow lines). The rectangles found are stored in a vector.

- Iterate over the vector of rectangles and compute the center point of each rectangle found.
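The contour step in the list above (center points plus an area filter) can be sketched without OpenCV as a 4-connected flood fill that returns the centroid of every white blob whose pixel count falls inside a given range. This is a stand-in for `cv2.findContours` with `cv2.moments`, not the author's code; `blob_centers`, `min_area` and `max_area` are hypothetical names, and area is counted in pixels rather than as contour area.

```python
from collections import deque

import numpy as np

def blob_centers(mask, min_area, max_area):
    """Centroids (x, y) of 4-connected non-zero blobs with min_area <= pixel count <= max_area."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    centers = []
    for y0 in range(h):
        for x0 in range(w):
            if mask[y0, x0] == 0 or seen[y0, x0]:
                continue
            # Breadth-first flood fill of one blob, accumulating coordinate sums.
            queue = deque([(y0, x0)])
            seen[y0, x0] = True
            sx = sy = area = 0
            while queue:
                y, x = queue.popleft()
                sx += x
                sy += y
                area += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            # Keep only blobs roughly the size of a corner marker.
            if min_area <= area <= max_area:
                centers.append((sx / area, sy / area))
    return centers
```

Pure-Python loops like these are far too slow for camera frames; `cv2.connectedComponentsWithStats` returns per-blob areas and centroids in a single call and is the more practical choice in OpenCV.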

An illustration of my current strategy:

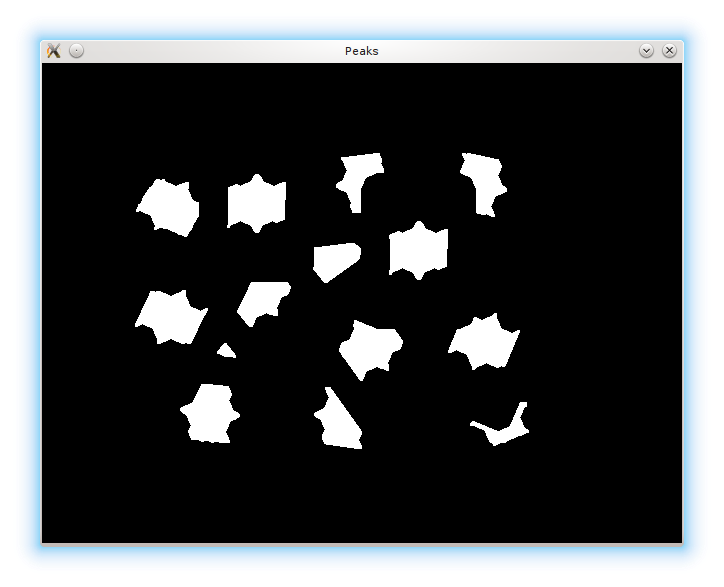
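The distance test from the list of steps can be made exact with a standard fact: four points form a rectangle if and only if, for one of the three ways of pairing them into two "diagonals", both diagonals have the same midpoint and the same length. A minimal sketch (hypothetical names `find_rectangles`, `tol`, `diag_range`) applies that test to every 4-point combination of marker centers, which is O(n^4) and only reasonable for a few dozen markers, and returns each rectangle together with its center, the shared midpoint of the diagonals. The optional `diag_range` (in pixels) assumes the card size in the image is roughly known.

```python
import math
from itertools import combinations

def _diagonal_match(quad, tol):
    """Return (diagonal_length, center) if the 4 points form a rectangle, else None."""
    a, b, c, d = quad
    # Try the three ways of splitting the points into two candidate diagonals.
    for (p1, p2), (q1, q2) in (((a, b), (c, d)), ((a, c), (b, d)), ((a, d), (b, c))):
        mid_p = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2)
        mid_q = ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2)
        len_p = math.dist(p1, p2)
        len_q = math.dist(q1, q2)
        same_mid = math.dist(mid_p, mid_q) <= tol   # diagonals bisect each other
        same_len = abs(len_p - len_q) <= tol        # ... and are equally long
        # Reject degenerate cases where both "diagonals" are (nearly) the same segment.
        distinct = math.dist(p1, q1) > tol and math.dist(p1, q2) > tol
        if len_p > tol and same_mid and same_len and distinct:
            return len_p, mid_p
    return None

def find_rectangles(centers, tol=2.0, diag_range=None):
    """All 4-point subsets of `centers` forming a rectangle, as (corners, center) pairs."""
    found = []
    for quad in combinations(centers, 4):
        match = _diagonal_match(quad, tol)
        if match is None:
            continue
        diag, center = match
        # Optional size filter (assumption: expected card diagonal in pixels).
        if diag_range is not None and not (diag_range[0] <= diag <= diag_range[1]):
            continue
        found.append((quad, center))
    return found
```

With the eight corners of two 4×2 cards side by side, `pts = [(0, 0), (4, 0), (4, 2), (0, 2), (10, 0), (14, 0), (14, 2), (10, 2)]`, `find_rectangles(pts, tol=0.5)` returns six rectangles, four of which mix corners from both cards. Adding `diag_range=(4.0, 5.0)` leaves only the two cards, centered at (2, 1) and (12, 1).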

This strategy works, but it often gives false positives, especially when there are many cards. In fact, it can also find green rectangles spanning two different cards, which is not good.

Can someone with more experience suggest a more precise strategy that also uses few resources? In the examples I showed static images, but the program must run in real time on images captured by the camera. I have already tried ORB, for example, but I could not get accurate results at a good speed with multiple items (I did not know how to make ORB precise).

Thanks a lot for all suggestions.

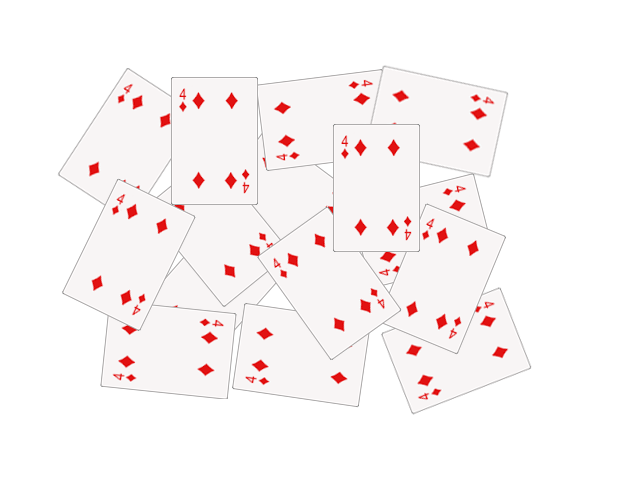

When you say all the cards that are on top, do you mean every card that is neither fully nor partially occluded? So, in the example picture, 3 cards in all? Or all the cards in total?

Sorry for the long delay, I could not answer before today. Only the 3 cards in all.